AI-900: Microsoft Certified Azure AI Fundamentals

Concepts of Computer Vision

Explore Multimodal Models

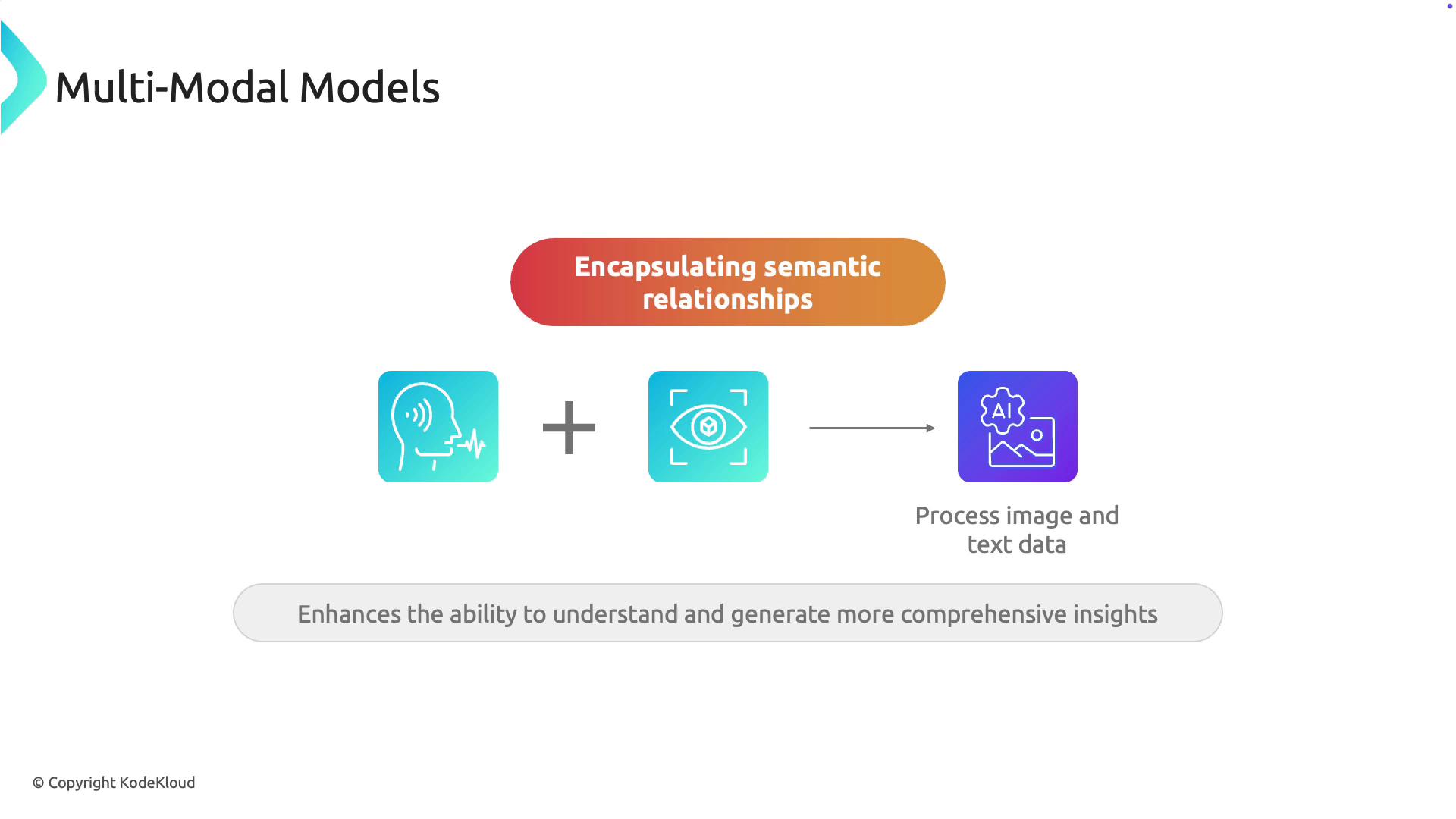

Multimodal models process more than one type of data, such as images and text, within a single model. Combining language and vision capabilities makes them versatile across a wide range of computer vision tasks.

When a multimodal model processes content, such as a picture of a fruit accompanied by a label reading "apple", it draws on both the visual and the textual context. This integrated approach leads to more informed and accurate interpretations.
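To build intuition for why the text label helps, here is a minimal sketch of late fusion, where a score from the image and a score from the text are averaged. The class names, the scores, and the `late_fusion` helper are all hypothetical. Models such as Florence combine the two modalities inside the network rather than by averaging final scores, so this is only an illustration of the idea, not how any particular model works.

```python
# Hypothetical per-class scores. In a real system these would come from trained
# image and text components; here they are invented for illustration.
CLASSES = ["apple", "tomato", "orange"]


def normalize(scores):
    """Rescale scores so they sum to 1 (a simple probability distribution)."""
    total = sum(scores.values())
    return {label: value / total for label, value in scores.items()}


def late_fusion(image_scores, text_scores, image_weight=0.5):
    """Combine image and text distributions with a weighted average."""
    text_weight = 1.0 - image_weight
    image_p = normalize(image_scores)
    text_p = normalize(text_scores)
    return {
        label: image_weight * image_p[label] + text_weight * text_p[label]
        for label in CLASSES
    }


# The image alone is ambiguous: a round red fruit could be an apple or a tomato.
image_scores = {"apple": 0.45, "tomato": 0.45, "orange": 0.10}
# The accompanying label "apple" strongly favours one class.
text_scores = {"apple": 0.90, "tomato": 0.05, "orange": 0.05}

fused = late_fusion(image_scores, text_scores)
best = max(fused, key=fused.get)
print(best, round(fused[best], 3))
```

With the image alone, "apple" and "tomato" are tied; adding the text signal breaks the tie in favour of "apple".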

Core Capabilities

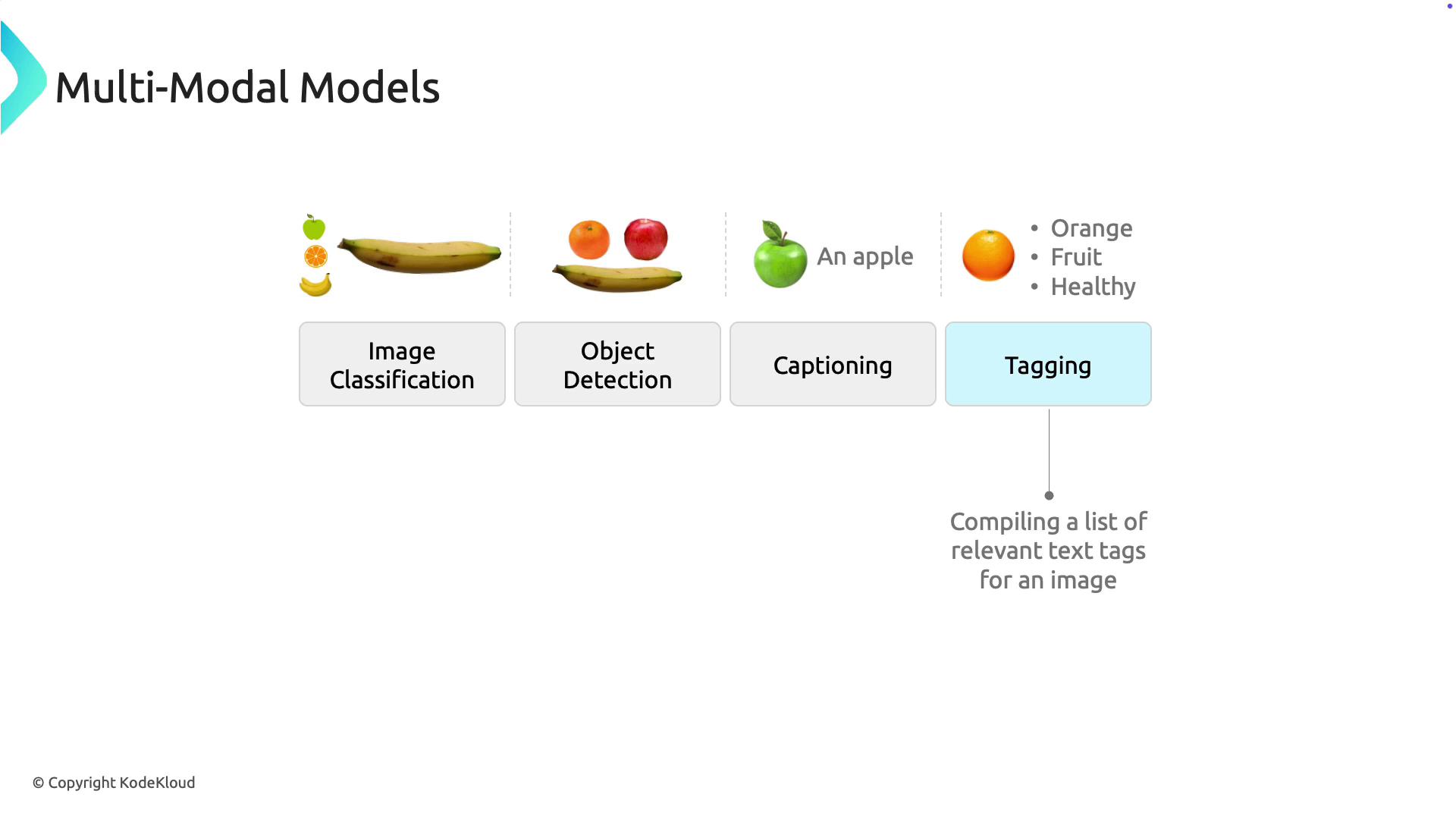

A single multimodal model can be adapted to perform several computer vision tasks:

- Image Classification: Automatically categorizes images into predefined classes.

- Object Detection: Identifies objects within an image and locates each one, typically with a bounding box.

- Image Captioning: Generates descriptive captions that reflect the content of an image.

- Tagging: Associates relevant keywords with an image, making images easier to search and providing labels that can support further training (e.g., tagging an image of an orange with “orange, fruit, healthy, citrus”).
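One way to see how these tasks differ is by the shape of their output. The sketch below models the results for a single image as Python dataclasses. The class names, field names, and example values are hypothetical and do not reproduce the response format of any Azure service.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Prediction:
    label: str
    confidence: float  # 0.0 to 1.0


@dataclass
class DetectedObject:
    prediction: Prediction
    # Bounding box as (x, y, width, height) in pixels: detection also says *where*.
    box: Tuple[int, int, int, int]


@dataclass
class ImageAnalysis:
    classification: Prediction  # one predefined class for the whole image
    objects: List[DetectedObject] = field(default_factory=list)  # zero or more located objects
    caption: Optional[str] = None  # a natural-language sentence
    tags: List[str] = field(default_factory=list)  # several searchable keywords


# Hypothetical values for a photo of an orange on a table.
result = ImageAnalysis(
    classification=Prediction("fruit", 0.97),
    objects=[DetectedObject(Prediction("orange", 0.91), (120, 80, 200, 190))],
    caption="An orange sitting on a wooden table",
    tags=["orange", "fruit", "healthy", "citrus"],
)


def searchable(analysis: ImageAnalysis, keyword: str) -> bool:
    """Tags make an image findable by keyword (case-insensitive match)."""
    return keyword.lower() in (tag.lower() for tag in analysis.tags)


print(result.caption)
print(searchable(result, "Citrus"))
```

Classification yields one label, detection adds locations, captioning yields a sentence, and tagging yields a list of keywords.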

The strength of these models lies in capturing semantic relationships between visual elements and descriptive language. For instance, linking the shape and color of an apple with its textual label helps the model generate precise predictions and enhanced image descriptions.
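A common way to capture such relationships, used for example by image-text contrastive models such as CLIP, is to map images and text labels into a shared embedding space and compare them with cosine similarity. The sketch below assumes invented four-dimensional embeddings with loosely interpretable dimensions; real encoders learn vectors with hundreds of dimensions that are not individually interpretable, and this is not a description of Florence's internals.

```python
import math

# Hypothetical text embeddings. Dimensions loosely stand for
# (roundness, redness, orange colour, elongation); the numbers are invented.
TEXT_EMBEDDINGS = {
    "apple": [0.9, 0.9, 0.1, 0.0],
    "orange": [0.9, 0.1, 0.9, 0.0],
    "banana": [0.1, 0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_label(image_embedding, text_embeddings):
    """Pick the text label whose embedding is closest to the image embedding."""
    scores = {
        label: cosine_similarity(image_embedding, vector)
        for label, vector in text_embeddings.items()
    }
    return max(scores, key=scores.get), scores


# Pretend an image encoder produced this vector for a photo of a red apple.
image_embedding = [0.8, 0.7, 0.2, 0.1]
label, scores = best_label(image_embedding, TEXT_EMBEDDINGS)
print(label, {name: round(score, 2) for name, score in scores.items()})
```

Because shape and colour information in the image vector points in nearly the same direction as the "apple" text vector, that label scores highest.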

Model Architecture

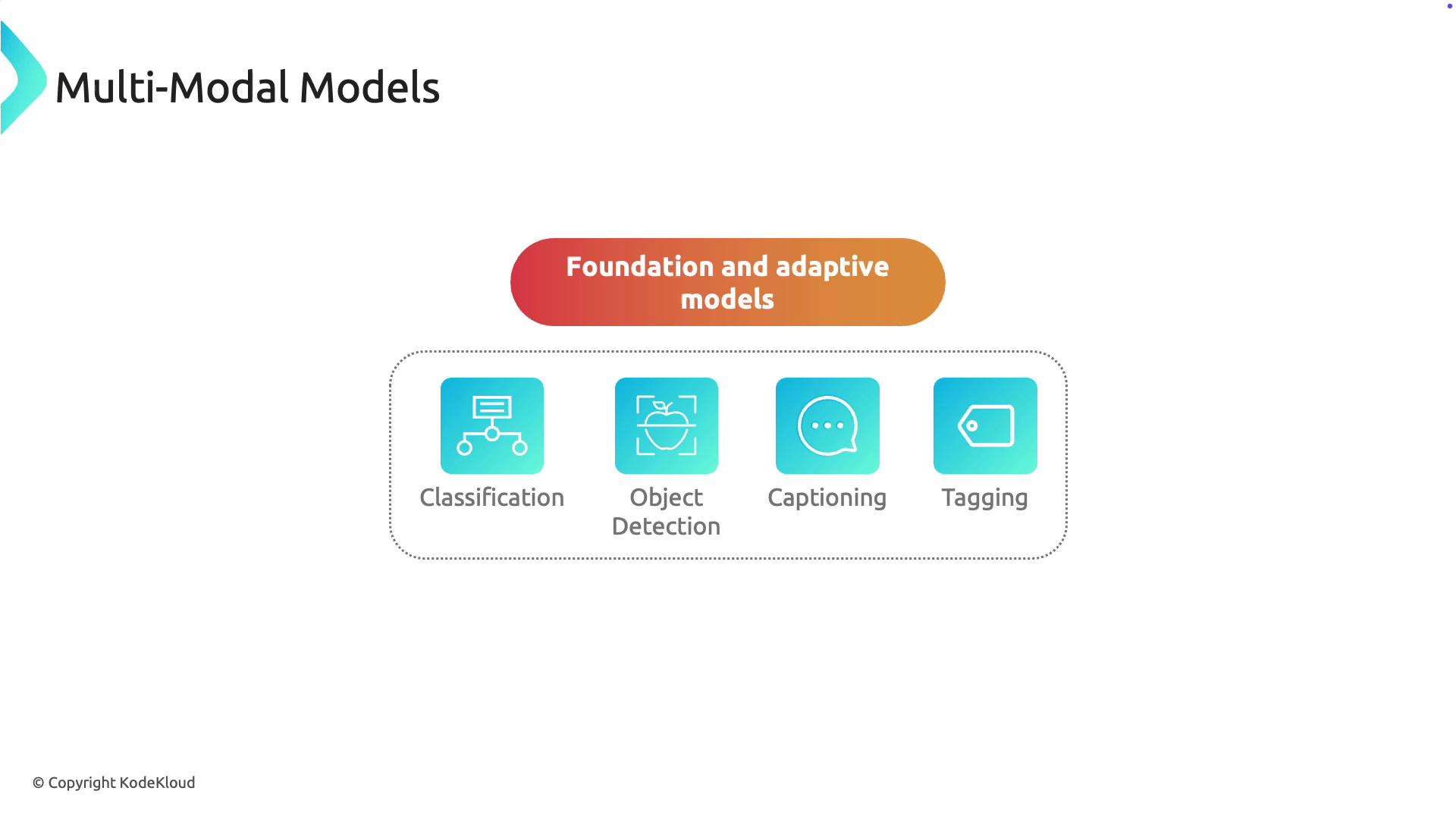

Multimodal models typically consist of two main components:

- Foundation Model: A model pre-trained on large datasets that provides general knowledge of how images and text are represented.

- Adaptive Model: A fine-tuned version of the foundation model, optimized for specific tasks such as image classification, object detection, captioning, or tagging.
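The split between a foundation model and an adaptive model can be sketched as a frozen feature extractor plus a small trainable head. This is the simplest form of adaptation, often called a linear probe: only the head's weights are learned, whereas full fine-tuning would also update the foundation model's own weights. The `foundation_features` function is a hypothetical stand-in for a pre-trained encoder, and the training examples are invented.

```python
import math


def foundation_features(image):
    """Hypothetical stand-in for a frozen, pre-trained image encoder.

    A real foundation model would turn pixels into a learned embedding; here the
    'image' is already a dict of made-up visual properties. Nothing in this
    function is updated during adaptation.
    """
    return [image["roundness"], image["redness"], 1.0]  # last value acts as a bias input


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_adaptive_head(examples, epochs=200, lr=0.5):
    """Train only a logistic-regression head on top of the frozen features."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for image, label in examples:
            x = foundation_features(image)
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
            error = label - p  # (label - p) * x is the log-likelihood gradient
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
    return weights


def predict(weights, image):
    x = foundation_features(image)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)))


# A tiny, hypothetical labelled set for one task: "is this an apple?" (1 = yes).
examples = [
    ({"roundness": 0.9, "redness": 0.9}, 1),
    ({"roundness": 0.8, "redness": 0.8}, 1),
    ({"roundness": 0.9, "redness": 0.1}, 0),  # orange-like
    ({"roundness": 0.1, "redness": 0.1}, 0),  # banana-like
]
head = train_adaptive_head(examples)
print(round(predict(head, {"roundness": 0.85, "redness": 0.85}), 2))
```

Only three numbers are learned here, which is why adapting a foundation model can need far less task-specific data than training a model from scratch.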

Note

Microsoft's Florence model is a prominent example of a foundation model. Trained on millions of captioned images from the internet, Florence includes two main parts:

- Language Encoder

- Image Encoder

These components allow Florence to be adapted for specific tasks in Azure AI Vision, such as image classification, object detection, caption generation, and image tagging.

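Building on a foundation model like Florence speeds up the development of adaptable computer vision solutions: because the foundation model already encodes general knowledge of images and text, each adaptive model needs only task-specific training. This reduces development time and can improve the performance of systems that work with both images and text.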

With the fundamentals of computer vision and multimodal models outlined, the next section provides an overview of the computer vision services available in Azure.
