Plan a Responsible Generative AI Solution

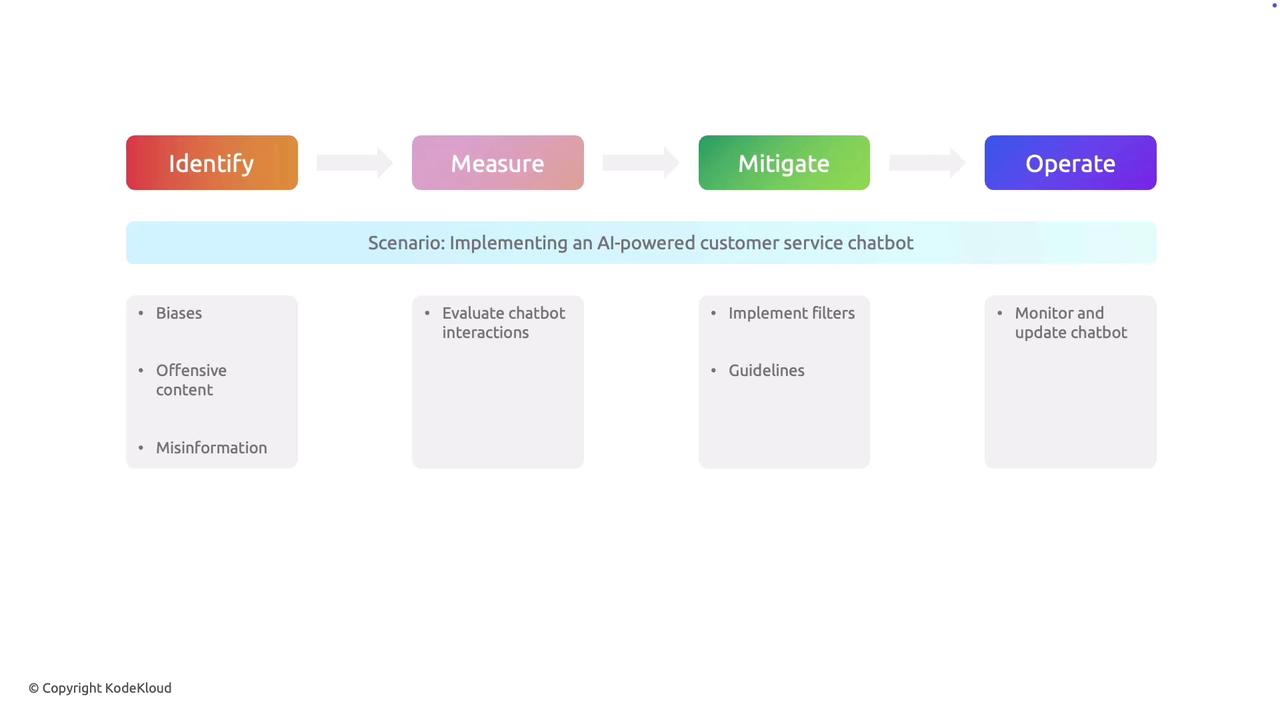

This article outlines a structured approach for planning a responsible generative AI solution. It details four essential stages (Identify, Measure, Mitigate, and Operate) that are critical to ethical, safe, and reliable performance in AI-driven applications such as customer service chatbots.

Overview of the Four-Stage Framework

To build AI systems that consistently meet ethical standards, developers must follow a four-stage process. The table below provides an overview of each stage along with its key objectives and activities:

| Stage | Purpose | Key Activities |
|---|---|---|
| Identify | Recognize potential harmful outcomes | Detect biases, offensive language, and misinformation |
| Measure | Continuously evaluate AI performance | Monitor and assess for unintended biases and errors |
| Mitigate | Implement safeguards to prevent harmful responses | Apply language filters and enforce ethical guidelines |
| Operate | Ensure continuous compliance and improvement | Regular updates and monitoring to maintain performance |

Note

Early identification and continuous evaluation are critical in minimizing risks associated with AI systems. Implementing robust safeguards not only enhances user trust but also ensures that the solution remains aligned with ethical standards.

Stage 1: Identify Potential Harms

During the first stage, it’s essential to analyze where the AI might produce harmful outputs. Potential risks include biased responses, offensive language, and the spread of misinformation. By identifying these issues at the outset, developers can proactively design safeguards that prevent these undesirable outcomes in an AI-powered customer service chatbot.

Stage 2: Measure Performance

After identifying potential harms, the next step is to evaluate the chatbot’s performance on an ongoing basis. Regular monitoring helps to detect biases, inappropriate content, or misinformation early. This continuous assessment ensures that any issues are promptly addressed, keeping the chatbot aligned with ethical guidelines and performance standards.
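One way to make this ongoing evaluation concrete is to label a sample of chatbot responses and track the share that falls into each harm category. The following is a minimal sketch, not a prescribed method: the category names, the `harm_rates` function, and the sample labels are all hypothetical and chosen only for illustration.

```python
from collections import Counter

# Hypothetical harm categories, mirroring the risks named in the Identify stage.
CATEGORIES = ("biased", "offensive", "misinformation")


def harm_rates(labels):
    """Return the share of responses flagged for each harm category.

    `labels` holds one entry per reviewed response: None when no harm
    was found, otherwise one of CATEGORIES.
    """
    if not labels:
        raise ValueError("need at least one labeled response")
    counts = Counter(label for label in labels if label is not None)
    return {category: counts[category] / len(labels) for category in CATEGORIES}


# Made-up review results for ten test prompts (illustrative data only).
sample_labels = [None, None, "offensive", None, "misinformation",
                 None, None, None, "offensive", None]
rates = harm_rates(sample_labels)
for category, rate in rates.items():
    print(f"{category}: {rate:.0%}")
```

Re-running the same measurement after each change to the chatbot gives a baseline against which later mitigations can be compared.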

Stage 3: Mitigate Risks

In the mitigation phase, specific filters and operational guidelines are implemented to prevent undesirable responses. This includes:

- Applying language filters to block offensive phrases.

- Enforcing guidelines that maintain accuracy and impartiality.

The goal is to establish a system that consistently delivers respectful, accurate, and reliable information without compromising ethical standards.
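A language filter of the kind described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the blocklist, the fallback message, and the `filter_response` function are hypothetical, and a production chatbot would more likely rely on a reviewed term list or a managed content-filtering service than on a hand-written pattern.

```python
import re

# Hypothetical blocklist for illustration; not a recommended term list.
BLOCKED_TERMS = ("idiot", "stupid")

# Hypothetical fallback shown to the customer when a response is blocked.
FALLBACK = "I'm sorry, I can't help with that. Let me connect you with an agent."

# Match blocked terms as whole words, ignoring case.
_pattern = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in BLOCKED_TERMS) + r")\b",
    re.IGNORECASE,
)


def filter_response(text):
    """Return the text unchanged, or the fallback if it contains a blocked term."""
    if _pattern.search(text):
        return FALLBACK
    return text


print(filter_response("Your order has shipped."))
print(filter_response("Don't be STUPID."))
```

Matching on whole words keeps the filter from blocking harmless text that merely contains a blocked term as a substring. Keyword matching alone does not address accuracy or impartiality, so it covers only the first of the two measures listed above.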

Stage 4: Operate Continuously

The final stage focuses on the continuous operation of the AI system. Consistent monitoring and regular updates are crucial to ensure that the chatbot maintains ethical standards and delivers smooth performance over time. The diagram below illustrates the operational workflow for an AI-powered customer service chatbot:

Regular updates help the chatbot remain effective, secure, and trustworthy as AI technology and user expectations evolve.
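Consistent monitoring in production can be approximated by tracking how often recent responses are flagged and raising an alert when the rate climbs. The sketch below assumes a hypothetical `FlagRateMonitor` class with an arbitrary window size and threshold; neither value comes from the course material.

```python
from collections import deque


class FlagRateMonitor:
    """Track the share of flagged responses over a sliding window."""

    def __init__(self, window=100, threshold=0.05):
        # deque with maxlen drops the oldest entry once the window is full.
        self.window = deque(maxlen=window)
        self.threshold = threshold

    def record(self, flagged):
        """Record whether the latest response was flagged."""
        self.window.append(bool(flagged))

    def flag_rate(self):
        """Return the flagged share of the window, or 0.0 if it is empty."""
        if not self.window:
            return 0.0
        return sum(self.window) / len(self.window)

    def needs_review(self):
        """Return True when the flag rate exceeds the threshold."""
        return self.flag_rate() > self.threshold


# Illustrative usage with a tiny window so the effect is easy to follow.
monitor = FlagRateMonitor(window=4, threshold=0.25)
for flagged in (False, False, True, False, True):
    monitor.record(flagged)
print(monitor.flag_rate(), monitor.needs_review())
```

A sliding window reacts to recent behavior rather than the lifetime average, which suits a system whose model, prompts, and filters change with each update.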

Key Takeaway

By rigorously following the stages of Identify, Measure, Mitigate, and Operate, developers can create AI systems that not only meet operational demands but also uphold high ethical standards. This approach lays a solid foundation for responsible AI development.

This four-stage process forms the backbone of a Responsible AI strategy. For further learning, consider reviewing the AI-900: Microsoft Certified Azure AI Fundamentals materials before proceeding to the quiz and material review.
