DynamoDB Streams Demo

This guide provides a step-by-step walkthrough to set up DynamoDB Streams for a table using the Products table as an example. Follow along to configure the stream, integrate it with a Lambda function, and validate stream events via CloudWatch Logs.

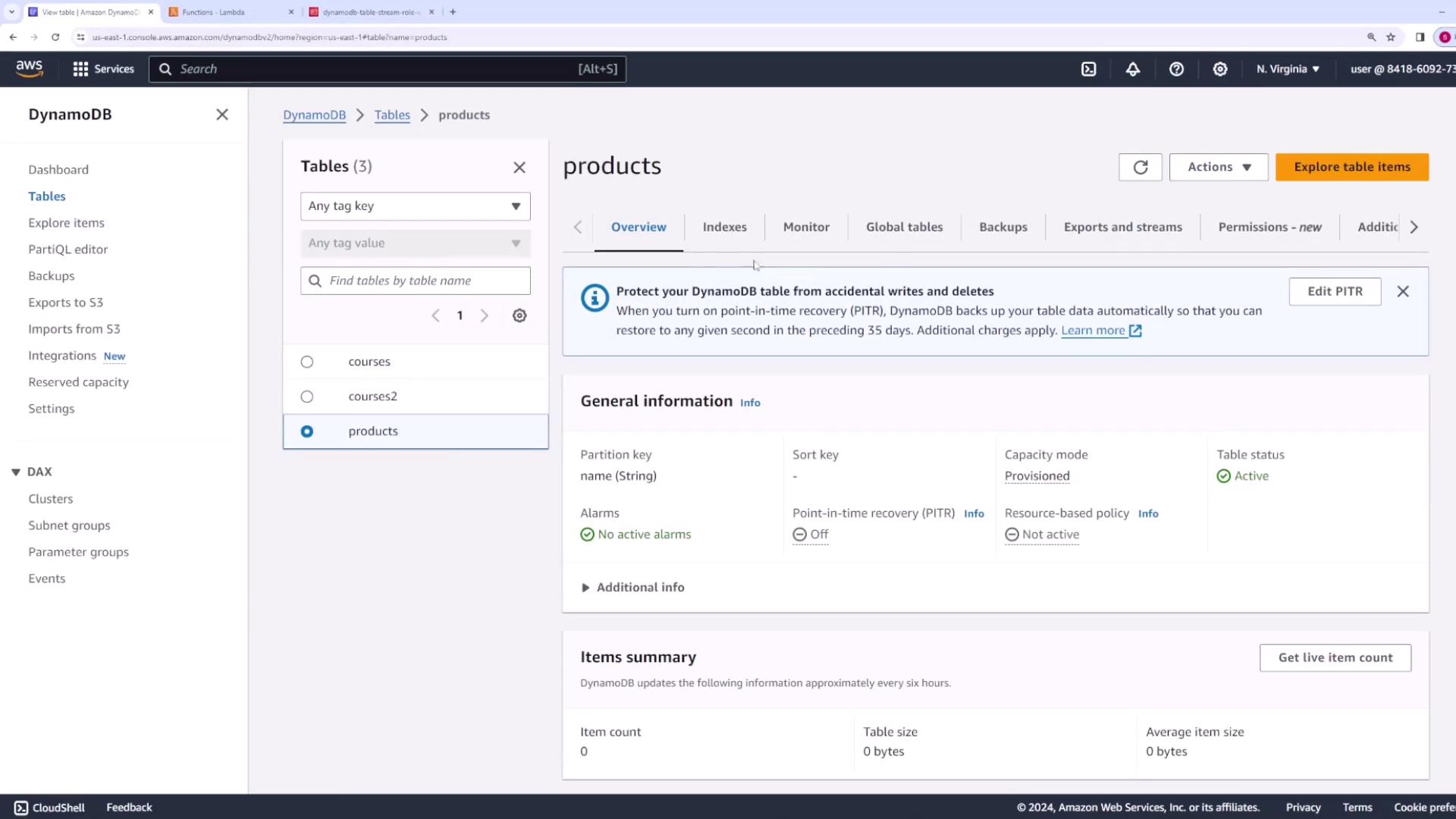

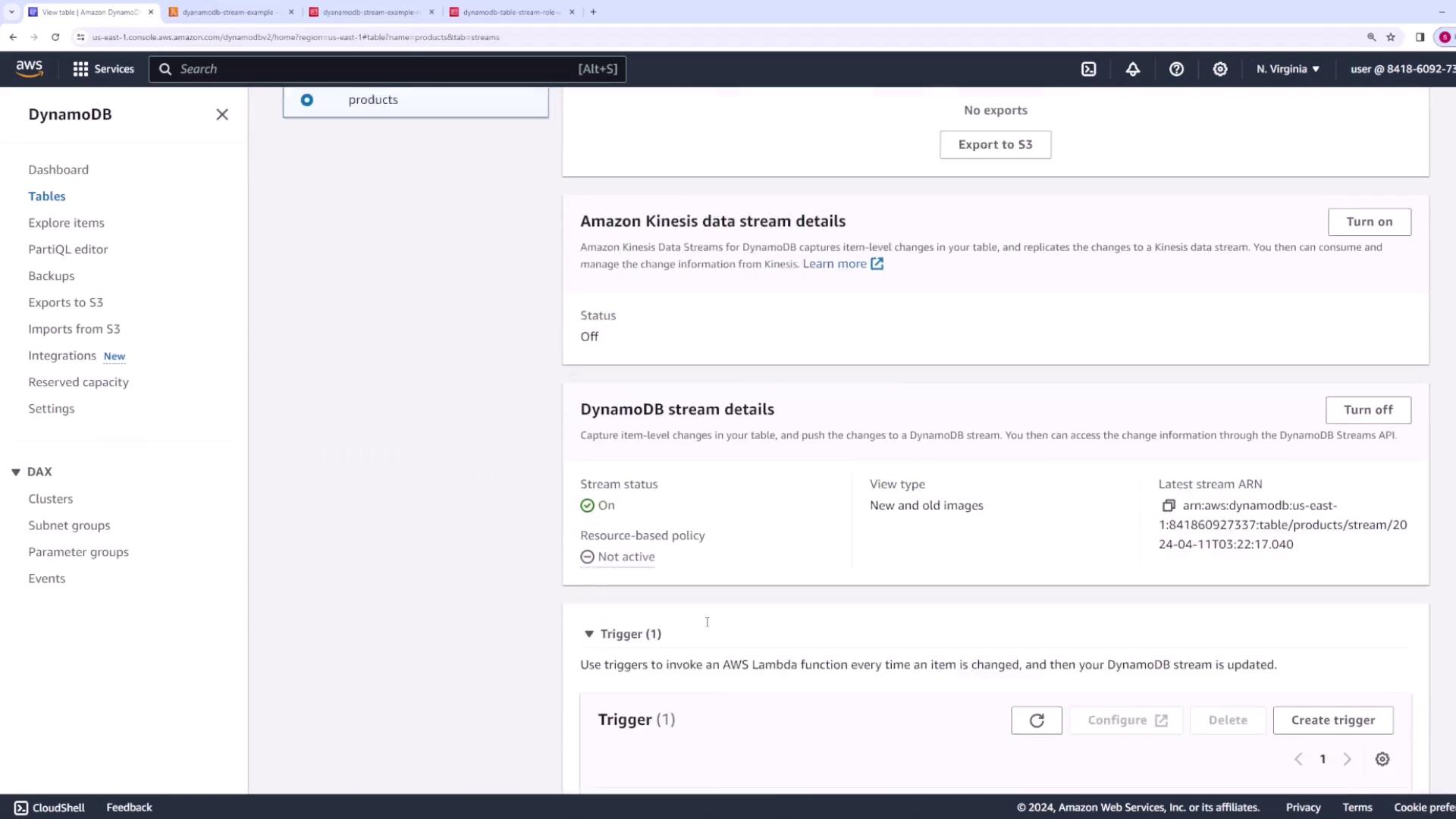

Step 1: Enable DynamoDB Streams on the Products Table

First, open the Products table in your AWS DynamoDB console. Navigate to the Exports and Streams section and scroll down to review the DynamoDB Streams details.

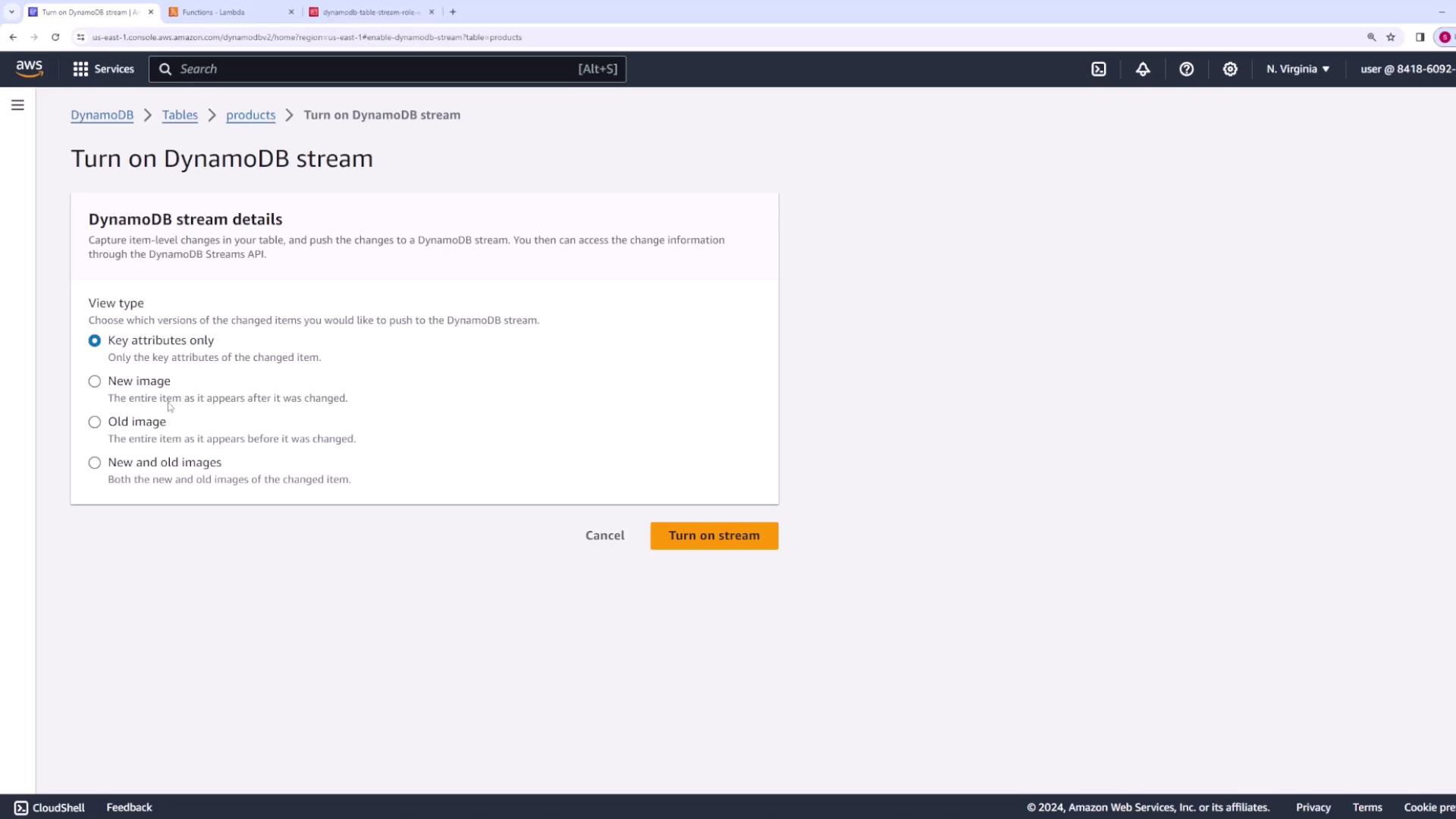

Here, you’ll notice that DynamoDB Streams is set to Off. Click Turn On and select the desired streaming option:

- Key Attributes Only - Streams only the key attributes of the modified item.

- New Image - Streams the entire item as it exists after the change.

- Old Image - Streams the entire item as it existed before the change.

- New and Old Images - Captures both the previous and new images of the item.

For the richest dataset, choose New and Old Images and enable the stream.
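The same setting can also be applied programmatically. The sketch below only builds the request parameters for DynamoDB's UpdateTable operation. `buildStreamParams` is a hypothetical helper name, and the commented-out call assumes the AWS SDK for JavaScript v3 (`@aws-sdk/client-dynamodb`) is installed and credentials are configured.

```javascript
// The stream view types accepted in a DynamoDB StreamSpecification.
const STREAM_VIEW_TYPES = ['KEYS_ONLY', 'NEW_IMAGE', 'OLD_IMAGE', 'NEW_AND_OLD_IMAGES'];

// Hypothetical helper: builds UpdateTable parameters that turn the stream on.
function buildStreamParams(tableName, viewType = 'NEW_AND_OLD_IMAGES') {
  if (!STREAM_VIEW_TYPES.includes(viewType)) {
    throw new Error(`Unknown stream view type: ${viewType}`);
  }
  return {
    TableName: tableName,
    StreamSpecification: { StreamEnabled: true, StreamViewType: viewType },
  };
}

const params = buildStreamParams('Products');
console.log(JSON.stringify(params, null, 2));

// With the SDK installed, the parameters would be sent roughly like this:
//   const { DynamoDBClient, UpdateTableCommand } = require('@aws-sdk/client-dynamodb');
//   await new DynamoDBClient({}).send(new UpdateTableCommand(params));
```

Validating the view type up front keeps a typo from reaching the API, where it would surface as a less obvious validation error.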

Step 2: Create a Lambda Trigger for the Stream

Since there is no trigger configured yet, create one by associating a Lambda function to process the stream events.

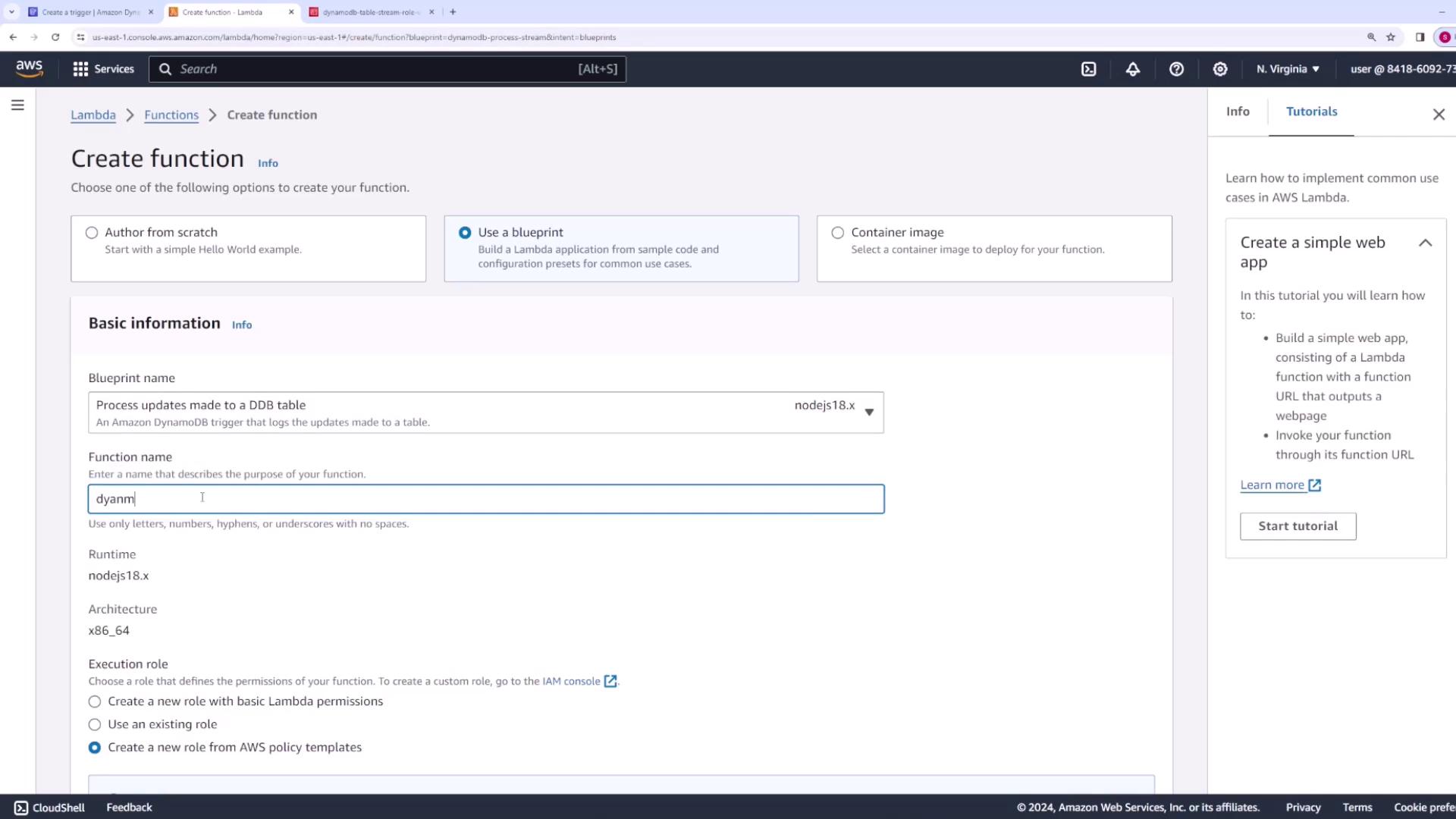

If you haven’t already created a Lambda function, follow these steps:

- In the AWS Lambda console, choose to create a new function.

- Use the provided DynamoDB Streams template. When prompted, select the blueprint named “process updates made to a DynamoDB table” and choose the Node.js version.

- Name your function (e.g., "DynamoDBStreamExample") and create a new role with basic Lambda permissions. Note that you may need to add additional permissions later.

Review the Example Code

The template provides sample Node.js code which iterates over the records from DynamoDB and logs the event details. Below is the sample code used to process the stream events:

    console.log('Loading function');

    export const handler = async (event) => {
      // Each invocation receives a batch of stream records.
      for (const record of event.Records) {
        console.log(record.eventID);
        console.log(record.eventName); // INSERT, MODIFY, or REMOVE
        console.log('DynamoDB Record: %j', record.dynamodb);
      }
      return `Successfully processed ${event.Records.length} records.`;
    };
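Before deploying, it can help to run the handler locally against a hand-built event. The sketch below repeats the blueprint logic without the `export` keyword so it runs as a plain Node.js script. The sample event is an assumption that mirrors the shape of a stream record, and its `eventID` is made up.

```javascript
// Same logic as the blueprint handler, minus `export`, so this file runs with `node`.
const handler = async (event) => {
  for (const record of event.Records) {
    console.log(record.eventID);
    console.log(record.eventName);
    console.log('DynamoDB Record: %j', record.dynamodb);
  }
  return `Successfully processed ${event.Records.length} records.`;
};

// Hand-built sample event (illustrative values, not captured from a real stream).
const sampleEvent = {
  Records: [
    {
      eventID: 'example-event-id',
      eventName: 'INSERT',
      eventSource: 'aws:dynamodb',
      dynamodb: {
        Keys: { name: { S: 'computer' } },
        NewImage: {
          name: { S: 'computer' },
          price: { N: '2000' },
          category: { S: 'electronics' },
        },
        StreamViewType: 'NEW_AND_OLD_IMAGES',
      },
    },
  ],
};

// Invoke the handler the way Lambda would, then print its return value.
handler(sampleEvent).then((result) => console.log(result));
```

The Lambda console's Test tab accepts the same JSON shape, so an event like this can be reused there once the function is deployed.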

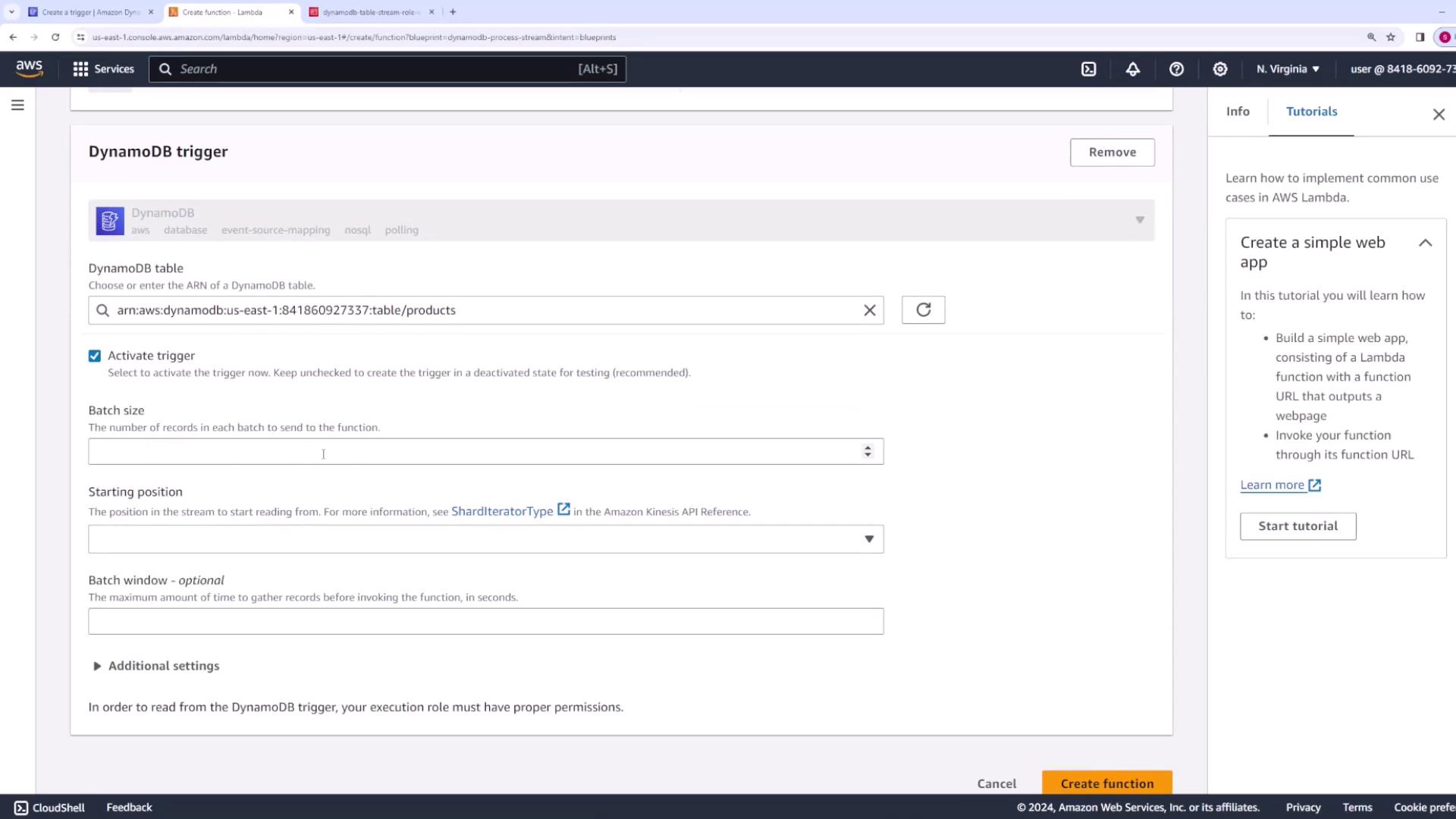

Step 3: Link the DynamoDB Table to the Lambda Function

Configure the trigger by specifying that the Products table should stream data to the new Lambda function. You can adjust the batch size (e.g., 10 records per invocation) and choose "LATEST" for the starting position, so the function receives only changes made after the trigger is created. Once configured, create the trigger.
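Behind the console form, a trigger is an event source mapping. As a rough sketch, the parameters for Lambda's CreateEventSourceMapping operation would look like the object built below. `buildTriggerParams` is a hypothetical helper, the ARN is a placeholder with a made-up account ID and stream label, and actually sending the request assumes `@aws-sdk/client-lambda` is installed.

```javascript
// Hypothetical helper: builds parameters for Lambda's CreateEventSourceMapping operation.
function buildTriggerParams(streamArn, functionName, batchSize = 10) {
  if (!Number.isInteger(batchSize) || batchSize < 1) {
    throw new Error('batchSize must be a positive integer');
  }
  return {
    EventSourceArn: streamArn,  // ARN of the table's stream, not of the table itself
    FunctionName: functionName,
    BatchSize: batchSize,       // maximum number of records handed to one invocation
    StartingPosition: 'LATEST', // skip records that were written before the mapping existed
  };
}

// Placeholder ARN: region, account ID, and stream label are made up.
const exampleStreamArn =
  'arn:aws:dynamodb:us-east-1:123456789012:table/Products/stream/2024-01-01T00:00:00.000';

const triggerParams = buildTriggerParams(exampleStreamArn, 'DynamoDBStreamExample');
console.log(triggerParams);

// With the SDK installed, the request would be sent roughly like this:
//   const { LambdaClient, CreateEventSourceMappingCommand } = require('@aws-sdk/client-lambda');
//   await new LambdaClient({}).send(new CreateEventSourceMappingCommand(triggerParams));
```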

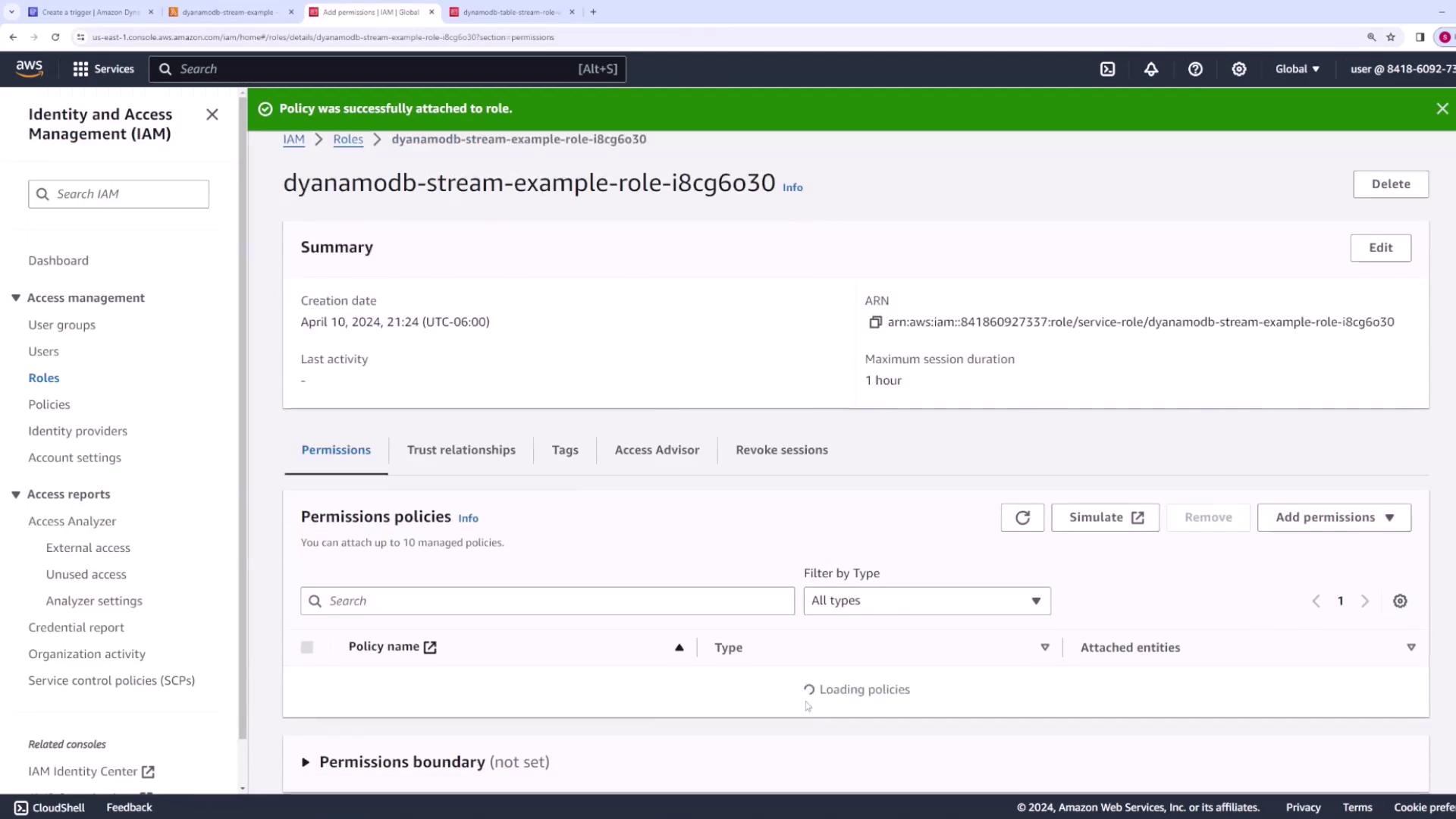

Step 4: Update IAM Permissions If Needed

When you create the trigger, you might see an error indicating that the function's execution role lacks permission to read from DynamoDB Streams. To resolve this:

- Navigate to the Lambda function's Configuration -> Permissions tab.

- Click the role associated with the Lambda function.

- Attach the AWS managed policy AWSLambdaDynamoDBExecutionRole to grant the necessary permissions.

Note

After updating the IAM policies, refresh the Lambda console and the DynamoDB Streams configuration. You should now see that the Lambda function is properly attached as a trigger.
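If you prefer a hand-written policy scoped to a single table, the sketch below builds a policy document with the stream-read and log-write actions a stream-triggered function needs. It is an approximation written for illustration, not a copy of the managed policy. `buildStreamReaderPolicy` is a hypothetical helper, and the ARN uses a made-up account ID.

```javascript
// Hypothetical helper: builds an IAM policy document for a stream-reading Lambda function.
function buildStreamReaderPolicy(streamArn) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        // Actions Lambda uses to discover and poll the stream.
        Effect: 'Allow',
        Action: [
          'dynamodb:DescribeStream',
          'dynamodb:GetRecords',
          'dynamodb:GetShardIterator',
          'dynamodb:ListStreams',
        ],
        Resource: streamArn,
      },
      {
        // Actions the function needs to write its output to CloudWatch Logs.
        Effect: 'Allow',
        Action: ['logs:CreateLogGroup', 'logs:CreateLogStream', 'logs:PutLogEvents'],
        Resource: '*',
      },
    ],
  };
}

// Placeholder ARN (made-up account ID); the wildcard covers every stream of the table.
const policy = buildStreamReaderPolicy(
  'arn:aws:dynamodb:us-east-1:123456789012:table/Products/stream/*'
);
console.log(JSON.stringify(policy, null, 2));
```

The printed JSON can be pasted into the IAM console's policy editor as an inline policy on the function's role.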

Step 5: Test the Stream with Table Operations

To confirm that your setup is working, perform some of the following operations on your DynamoDB table:

- Create an Item: Add a new product (e.g., a computer) with attributes like price ($2000) and category (electronics).

- Modify an Item: Update an existing item (for example, change the price of a shampoo item from $10 to $5).

- Delete an Item: Remove an item (such as a TV).

These table operations generate stream events, which the Lambda function processes. Then, open the function's log group in CloudWatch Logs to verify that the events were captured correctly.
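The three test operations can also be scripted instead of clicked through. The sketch below builds the request parameters for PutItem, UpdateItem, and DeleteItem in DynamoDB's typed JSON format. It assumes `name` is the table's partition key, and sending the requests assumes `@aws-sdk/client-dynamodb` is installed.

```javascript
const TABLE = 'Products';

// PutItem: create the "computer" product.
const putParams = {
  TableName: TABLE,
  Item: {
    name: { S: 'computer' },
    price: { N: '2000' }, // numbers travel as strings in DynamoDB JSON
    category: { S: 'electronics' },
  },
};

// UpdateItem: change the shampoo price to 5.
const updateParams = {
  TableName: TABLE,
  Key: { name: { S: 'shampoo' } },
  UpdateExpression: 'SET #price = :price',
  ExpressionAttributeNames: { '#price': 'price' }, // placeholder guards against reserved words
  ExpressionAttributeValues: { ':price': { N: '5' } },
};

// DeleteItem: remove the TV.
const deleteParams = {
  TableName: TABLE,
  Key: { name: { S: 'tv' } },
};

// Event name each operation is expected to produce in the stream.
// (PutItem on a key that already exists would produce MODIFY rather than INSERT.)
const expectedEvents = { PutItem: 'INSERT', UpdateItem: 'MODIFY', DeleteItem: 'REMOVE' };

console.log({ putParams, updateParams, deleteParams, expectedEvents });

// With the SDK installed, each object would be wrapped in PutItemCommand,
// UpdateItemCommand, or DeleteItemCommand and passed to DynamoDBClient.send().
```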

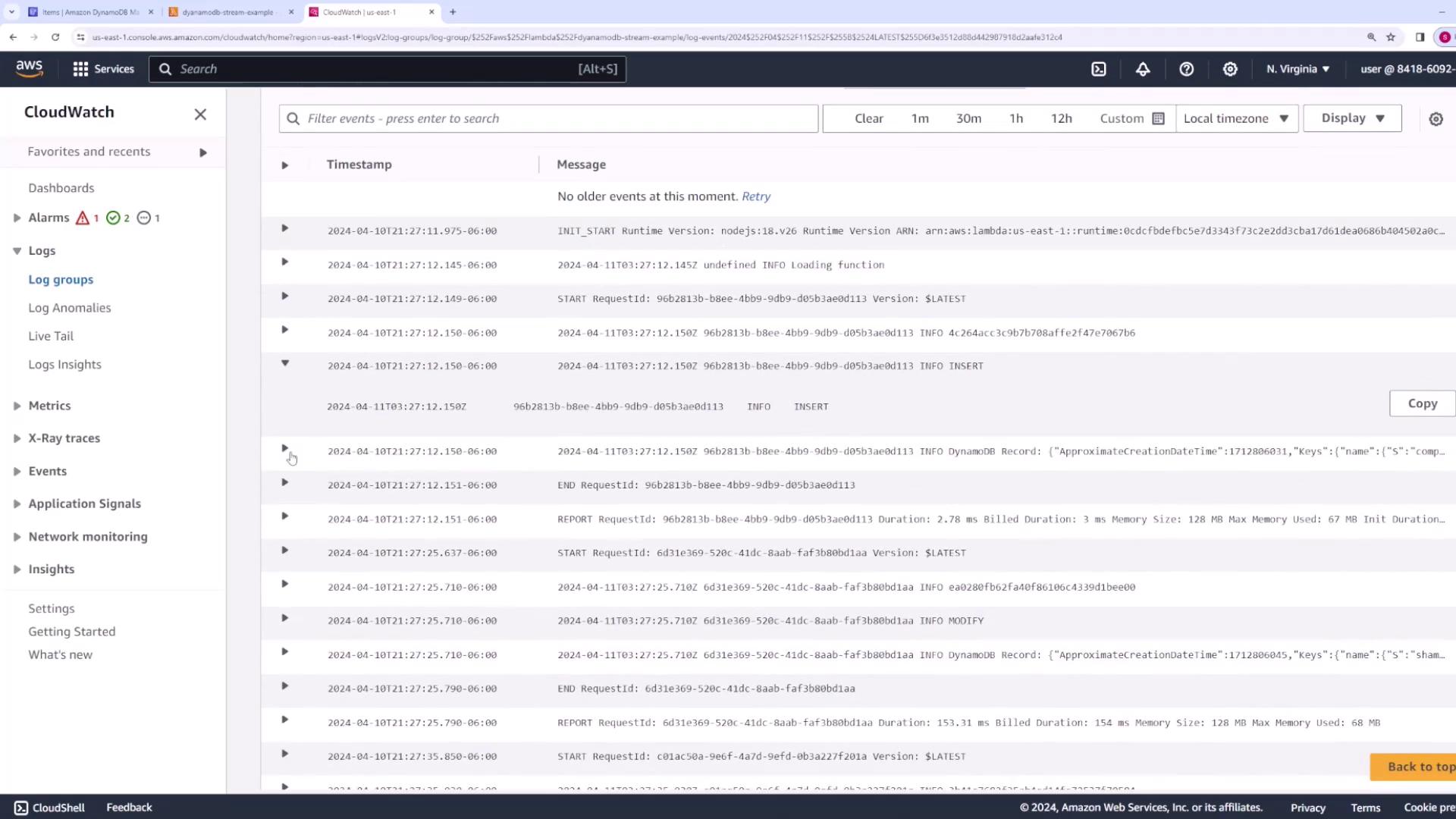

Example Log Entries

In one of the CloudWatch log streams, you might see an entry for an insert event similar to:

    {
      "ApproximateCreationDateTime": 1712886031,
      "Keys": {
        "name": { "S": "computer" }
      },
      "NewImage": {
        "price": { "N": "2000" },
        "name": { "S": "computer" },
        "category": { "S": "electronics" }
      },
      "SequenceNumber": "750000000034589963794",
      "SizeBytes": 50,
      "StreamViewType": "NEW_AND_OLD_IMAGES"
    }

This confirms that a new product item with a price of 2000 and category electronics has been successfully created.
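Stream images use DynamoDB's typed attribute format (`{"S": ...}`, `{"N": ...}`), which is awkward to work with directly. The sketch below is a minimal hand-rolled converter that handles only a few common types. `fromAttributeValue` and `fromImage` are hypothetical names; in a real function, the `unmarshall` helper from `@aws-sdk/util-dynamodb` covers the full type set.

```javascript
// Converts one DynamoDB-typed attribute value into a plain JavaScript value.
// Only the types most likely to appear in this demo are handled.
function fromAttributeValue(av) {
  if ('S' in av) return av.S;
  if ('N' in av) return Number(av.N); // numbers arrive as strings
  if ('BOOL' in av) return av.BOOL;
  if ('NULL' in av) return null;
  if ('L' in av) return av.L.map(fromAttributeValue);
  if ('M' in av) return fromImage(av.M);
  throw new Error(`Unsupported attribute type: ${Object.keys(av).join(',')}`);
}

// Converts a whole image (attribute name -> typed value) into a plain object.
function fromImage(image) {
  return Object.fromEntries(
    Object.entries(image).map(([name, av]) => [name, fromAttributeValue(av)])
  );
}

// NewImage and timestamp taken from the insert log entry.
const newImage = {
  price: { N: '2000' },
  name: { S: 'computer' },
  category: { S: 'electronics' },
};
const product = fromImage(newImage);
console.log(product);

// ApproximateCreationDateTime is in epoch seconds; Date expects milliseconds.
const createdAt = new Date(1712886031 * 1000).toISOString();
console.log(createdAt);
```

Note that `Number` loses precision for very large or high-precision values, which is one reason to prefer the SDK helper outside of a demo.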

A log entry for a modification event will capture both the old and new values. For example, when modifying the "shampoo" item:

    {
      "ApproximateCreationDateTime": 1712886045,
      "Keys": {
        "name": { "S": "shampoo" }
      },
      "NewImage": {
        "price": { "N": "5" },
        "name": { "S": "shampoo" },
        "category": { "S": "essentials" }
      },
      "OldImage": {
        "price": { "N": "10" },
        "name": { "S": "shampoo" },
        "category": { "S": "essentials" }
      },
      "SequenceNumber": "760000000034589984308",
      "SizeBytes": 83,
      "StreamViewType": "NEW_AND_OLD_IMAGES"
    }

Here, you can observe that the shampoo price was adjusted from 10 to 5.
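Spotting the change by eye works for three attributes but not for larger items. As a sketch, a handler could compare the two images and report only the attributes that differ. `changedAttributes` is a hypothetical helper, and the comparison by JSON string is a simplification that suits scalar attributes like the ones in this table.

```javascript
// Returns the attributes whose typed values differ between the two images.
function changedAttributes(oldImage = {}, newImage = {}) {
  const names = new Set([...Object.keys(oldImage), ...Object.keys(newImage)]);
  const changes = {};
  for (const name of names) {
    const before = oldImage[name];
    const after = newImage[name];
    // Attribute values are small typed objects, so compare their JSON form.
    if (JSON.stringify(before) !== JSON.stringify(after)) {
      changes[name] = { before, after };
    }
  }
  return changes;
}

// Images taken from the shampoo modification entry.
const oldImage = {
  price: { N: '10' },
  name: { S: 'shampoo' },
  category: { S: 'essentials' },
};
const updatedImage = {
  price: { N: '5' },
  name: { S: 'shampoo' },
  category: { S: 'essentials' },
};

const changes = changedAttributes(oldImage, updatedImage);
console.log(JSON.stringify(changes));
```

Attributes that were added or removed show up with `before` or `after` set to `undefined`, so the same helper covers those cases.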

For a delete operation, the record carries only the OldImage, because the item no longer exists after the change. The log might show:

    {
      "OldImage": {
        "price": { "N": "100" },
        "name": { "S": "tv" },
        "category": { "S": "electronics" }
      },
      "SequenceNumber": "7600000000345890984308",
      "SizeBytes": 83,
      "StreamViewType": "NEW_AND_OLD_IMAGES"
    }
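Because the useful image depends on the event type, handlers typically branch on `eventName`. The sketch below is one way to do that. `itemImage` and `describeChange` are hypothetical helpers, and the code assumes the NEW_AND_OLD_IMAGES view type and a `name` key attribute, as in this demo.

```javascript
// Returns the image that describes the item for a given stream record:
// the state after the change for INSERT and MODIFY, the last known state for REMOVE.
function itemImage(record) {
  const { NewImage, OldImage } = record.dynamodb;
  return record.eventName === 'REMOVE' ? OldImage : NewImage;
}

// Produces a one-line, human-readable summary of a stream record.
function describeChange(record) {
  const image = itemImage(record);
  const name = image && image.name ? image.name.S : '(unknown)';
  switch (record.eventName) {
    case 'INSERT':
      return `created ${name}`;
    case 'MODIFY':
      return `updated ${name}`;
    case 'REMOVE':
      return `deleted ${name}`;
    default:
      return `unrecognized event ${record.eventName}`;
  }
}

// Record shaped like the delete entry for the TV.
const removeRecord = {
  eventName: 'REMOVE',
  dynamodb: {
    OldImage: {
      price: { N: '100' },
      name: { S: 'tv' },
      category: { S: 'electronics' },
    },
  },
};

console.log(describeChange(removeRecord));
```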

In addition, the Lambda function's CloudWatch logs might include runtime reports like the following:

    2024-04-10T21:27:25.790-06:00 END RequestId: 6d13e369-520c-41dc-8aab-faf3b80b1daa
    2024-04-10T21:27:25.796-06:00 REPORT RequestId: 6d13e369-520c-41dc-8aab-faf3b80b1daa Duration: 153.31 ms Billed Duration: 154 ms Memory Size: 128 MB Max Memory Used: 68 MB
    2024-04-10T21:27:35.936-06:00 START RequestId: 01c50a50-96cf-4a7d-9efd-0b322f201a Version: $LATEST
    2024-04-10T21:27:35.936: 01c50a50-96cf-4a7d-9efd-0b322f201a INFO REMOVE
    2024-04-10T21:27:35.951-06:00 END RequestId: 01c50a50-96cf-4a7d-9efd-0b322f201a
    2024-04-10T21:27:35.951-06:00 REPORT RequestId: 01c50a50-96cf-4a7d-9efd-0b322f201a Duration: 100.89 ms Billed Duration: 101 ms Memory Size: 128 MB Max Memory Used: 68 MB

The INFO REMOVE line is the output of the handler's console.log(record.eventName) call, which confirms that the delete event reached the function and was processed.
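The REPORT lines follow a fixed layout, so their metrics are easy to extract when comparing invocations. The sketch below parses one with a regular expression. `parseReportLine` is a hypothetical helper, and it reads only the fields that appear in the excerpt above.

```javascript
// Extracts the metrics from a Lambda REPORT log line, or returns null if the line does not match.
function parseReportLine(line) {
  const match = line.match(
    /REPORT RequestId: (\S+)\s+Duration: ([\d.]+) ms\s+Billed Duration: (\d+) ms\s+Memory Size: (\d+) MB\s+Max Memory Used: (\d+) MB/
  );
  if (!match) return null;
  return {
    requestId: match[1],
    durationMs: Number(match[2]),
    billedDurationMs: Number(match[3]),
    memorySizeMb: Number(match[4]),
    maxMemoryUsedMb: Number(match[5]),
  };
}

// REPORT line copied from the log excerpt.
const reportLine =
  '2024-04-10T21:27:25.796-06:00 REPORT RequestId: 6d13e369-520c-41dc-8aab-faf3b80b1daa ' +
  'Duration: 153.31 ms Billed Duration: 154 ms Memory Size: 128 MB Max Memory Used: 68 MB';

const report = parseReportLine(reportLine);
console.log(report);
```

The pattern uses `\s+` between fields because the raw log separates them with tabs, while copied excerpts often contain spaces.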

Conclusion

You have now set up and integrated DynamoDB Streams with a Lambda trigger, enabling real-time processing of changes to your DynamoDB table. With the steps outlined above, you can confidently process stream events and monitor them via CloudWatch. Happy coding, and see you in the next article!

For more details, check out the AWS Lambda Documentation and DynamoDB Streams Overview.
