Limits and Concurrency

In this article, we explore AWS Lambda limits and concurrency with a focus on cold starts and how provisioned concurrency can help mitigate their impact. Understanding these concepts is essential for optimizing the performance of your serverless applications.

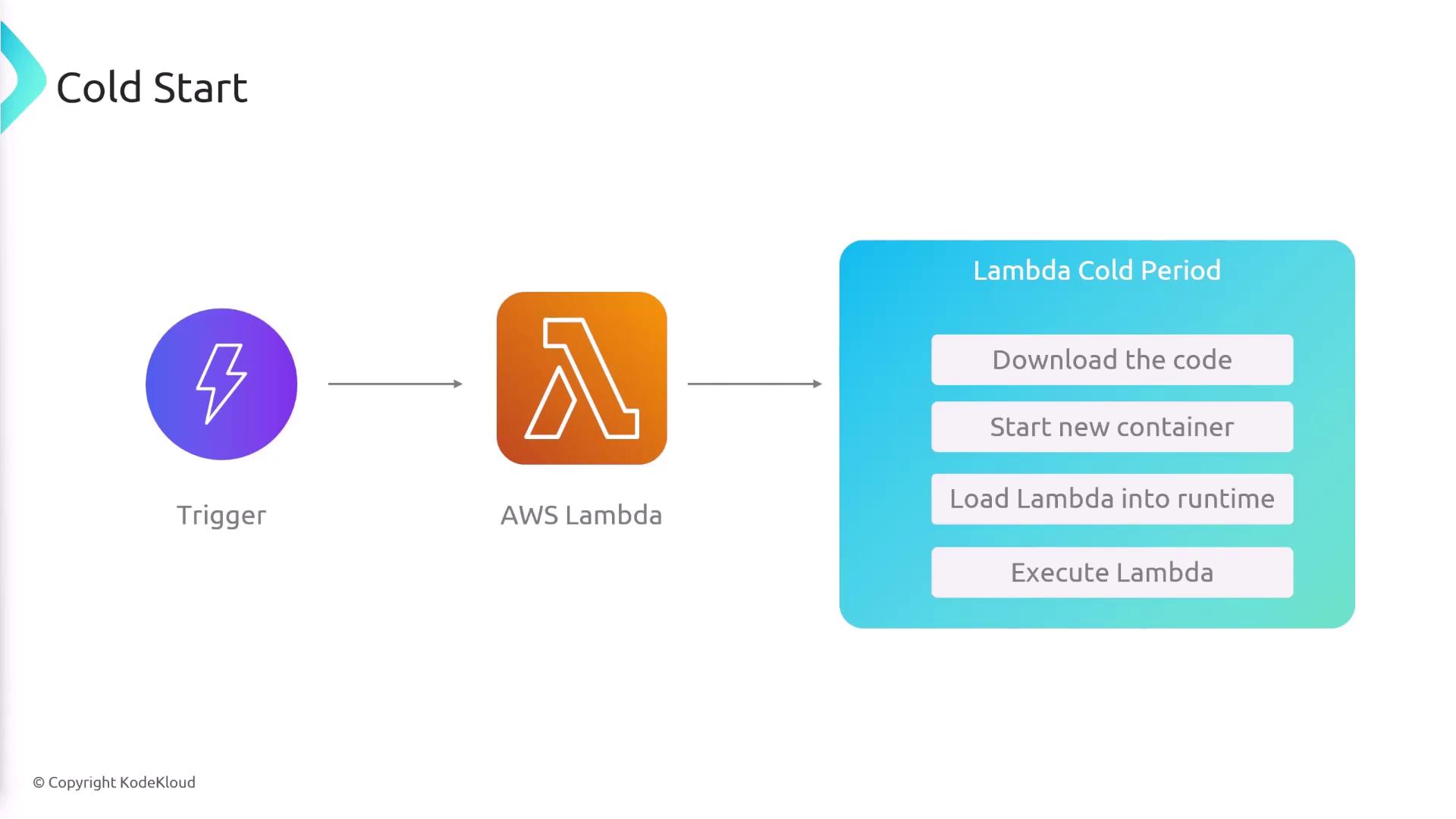

Cold Starts

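A cold start occurs when Lambda has no initialized execution environment available for an invocation. This typically happens when the function has not been invoked for a while, or when traffic scales beyond the environments already running. During a cold start, Lambda downloads the function code, creates a new execution environment, starts the runtime, and runs the function's initialization code (the code outside the handler). This initialization adds latency to the request, which can be noticeable in latency-sensitive applications.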
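You can observe cold starts from inside a function by tracking module-level state. Lambda loads the handler module once per execution environment, so code outside the handler runs only during a cold start, and later invocations served by the same warm environment reuse it. The sketch below assumes the Python runtime. `lambda_handler` is the conventional handler name, and the counter and `INIT_TIME` are purely illustrative.

```python
import time

# Module-level code runs once per execution environment, i.e. during a cold start.
# In a real function this is where SDK clients or configuration would be created.
INIT_TIME = time.time()
_invocation_count = 0


def lambda_handler(event, context):
    """Report whether this invocation was the first one in its execution environment."""
    global _invocation_count
    _invocation_count += 1
    return {
        # True only for the invocation that triggered this environment's initialization.
        "cold_start": _invocation_count == 1,
        "invocations_in_this_environment": _invocation_count,
        "seconds_since_init": round(time.time() - INIT_TIME, 3),
    }
```

Calling the handler twice in the same Python process mimics one cold invocation followed by a warm one.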

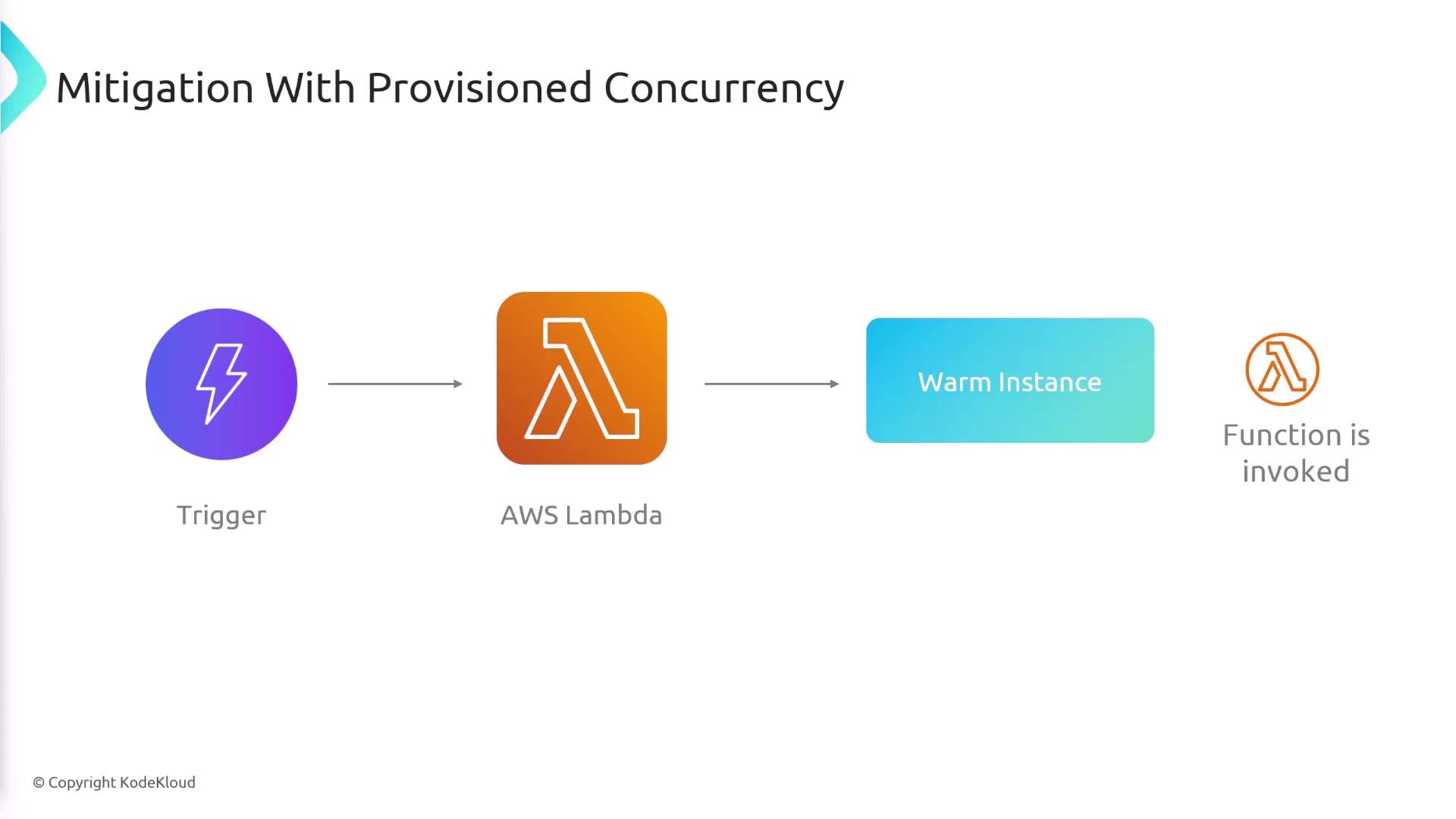

To reduce the impact of cold starts, AWS offers a feature called provisioned concurrency. With provisioned concurrency, you pre-allocate a specific number of execution environments that are initialized in advance and can respond to incoming requests immediately, even during periods of high demand.
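The provisioning step described above can be scripted. Below is a minimal sketch built on the boto3 Lambda client's `put_provisioned_concurrency_config` call. The wrapper name `configure_provisioned_concurrency` is hypothetical. The client is passed in so the logic can be exercised without AWS credentials.

```python
def configure_provisioned_concurrency(client, function_name, qualifier, count):
    """Request `count` pre-initialized environments for a published version or alias.

    `client` is expected to be a boto3 Lambda client (boto3.client("lambda")).
    """
    if count < 1:
        raise ValueError("provisioned concurrency must be at least 1")
    # Provisioned concurrency applies to a published version or an alias, not $LATEST.
    if qualifier == "$LATEST":
        raise ValueError("use a published version number or an alias as the qualifier")
    return client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=qualifier,
        ProvisionedConcurrentExecutions=count,
    )
```

In a real deployment you would pass `boto3.client("lambda")` along with your own function name and alias. The configuration's status starts as `IN_PROGRESS` and changes to `READY` once the environments have been initialized.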

Tip

Provisioned concurrency is particularly useful for latency-sensitive applications that need consistent response times, especially when traffic is predictable enough to size the number of pre-initialized environments in advance.

Lambda Limits

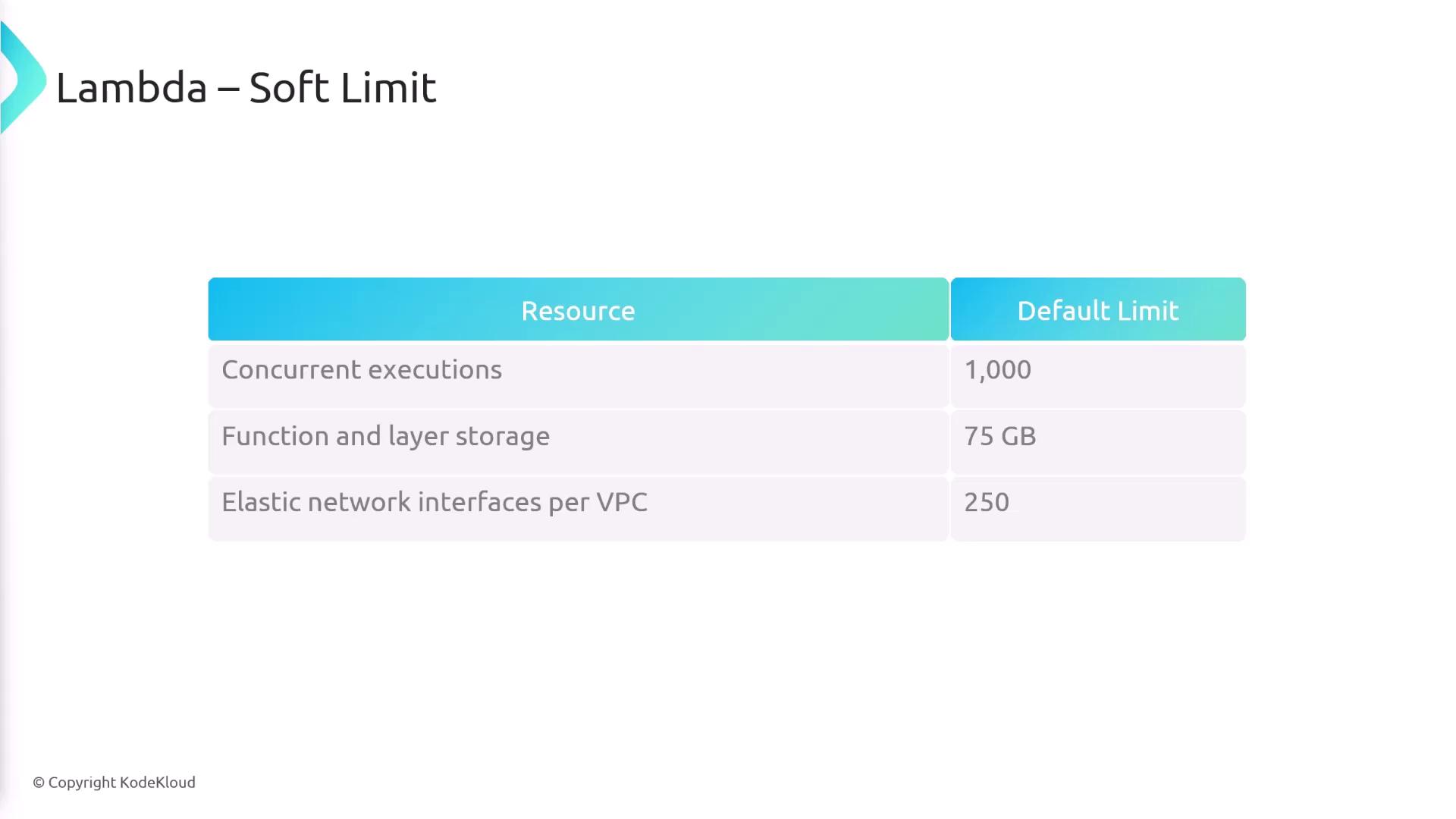

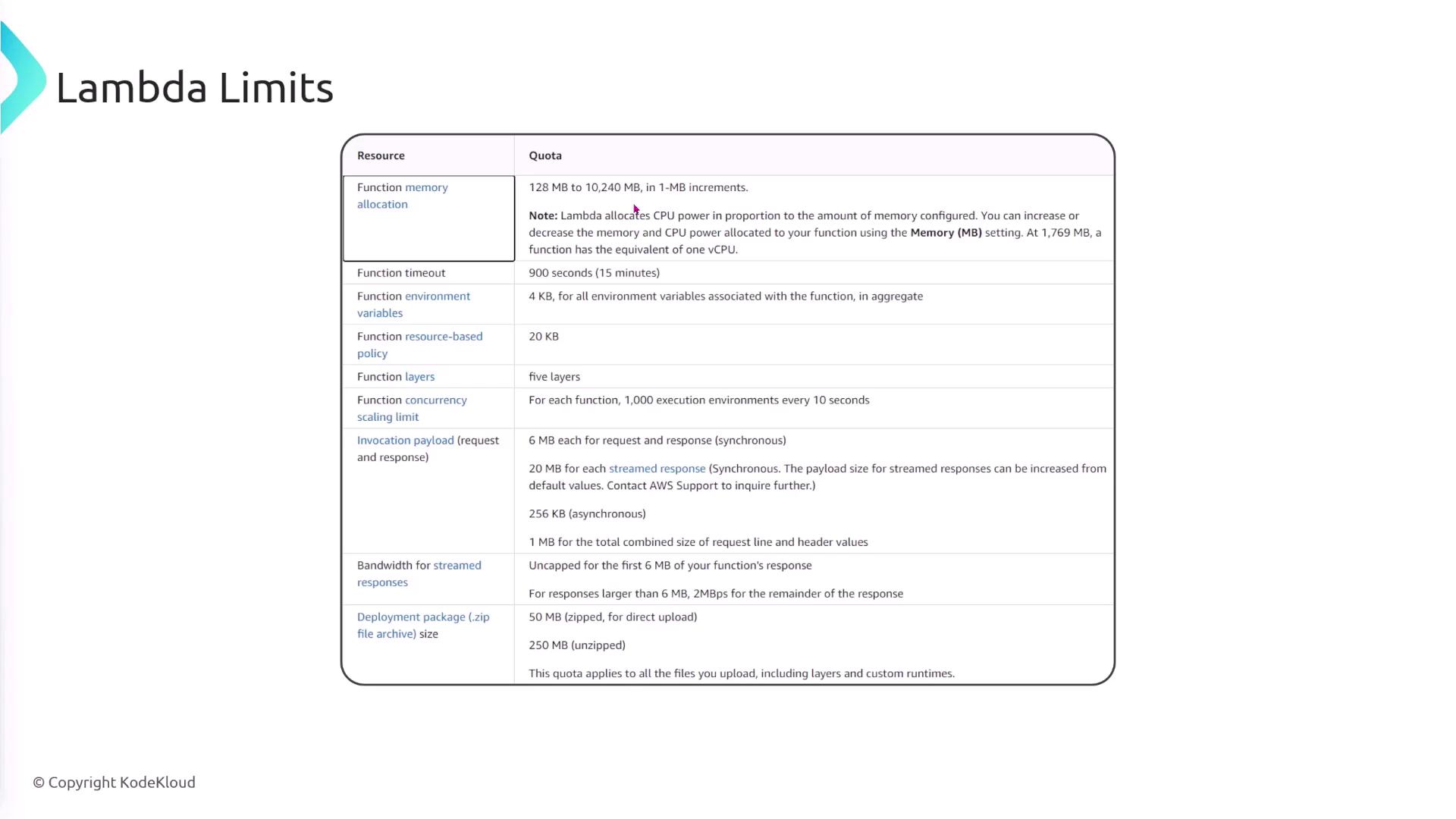

AWS Lambda enforces various limits to ensure efficient resource usage. These limits fall into two categories: soft limits, which can be increased upon request, and hard limits, which are fixed.

Soft Limits

| Resource | Default Limit | Additional Details |
|---|---|---|
| Concurrent Executions | 1,000 concurrent executions per Region | Shared across all functions in your account in that Region. Request an increase if needed. |
| Function and Layer Storage | 75 GB per Region | Applies to the combined storage of your function code and its layers. |
| Elastic Network Interfaces | 250 ENIs per VPC (up to 2,000 on request) | Relevant for functions that require VPC connectivity. |
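You can read the account-level values in the table above back at runtime. This sketch assumes a boto3 Lambda client and uses its `get_account_settings` call. That call reports the current Region's concurrency limit, unreserved concurrency, and code storage (in bytes). `summarize_account_limits` itself is a hypothetical helper.

```python
GIB = 1024 ** 3


def summarize_account_limits(client):
    """Summarize Lambda account limits and usage for the client's Region.

    `client` is expected to be a boto3 Lambda client; get_account_settings()
    returns an "AccountLimit" section and an "AccountUsage" section.
    """
    settings = client.get_account_settings()
    limit = settings["AccountLimit"]
    usage = settings["AccountUsage"]
    return {
        "concurrent_executions": limit["ConcurrentExecutions"],
        "unreserved_concurrency": limit["UnreservedConcurrentExecutions"],
        # Code storage sizes are reported in bytes; convert to GiB for readability.
        "code_storage_used_gib": round(usage["TotalCodeSize"] / GIB, 2),
        "code_storage_limit_gib": round(limit["TotalCodeSize"] / GIB, 2),
        "function_count": usage["FunctionCount"],
    }
```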

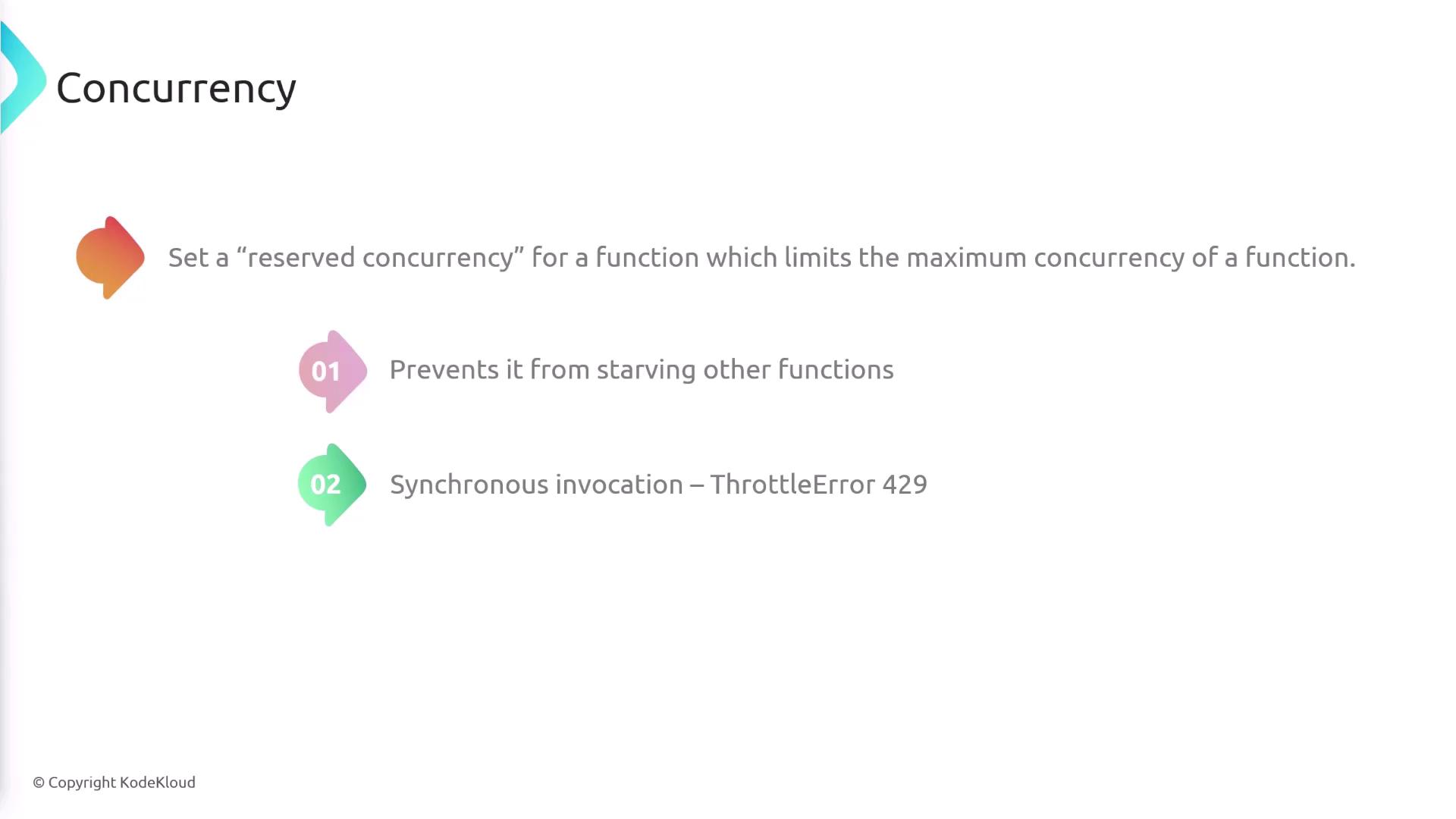

Concurrency Management

Concurrency in AWS Lambda is the number of requests that a function's execution environments are processing at the same time. All functions in an account and Region draw from the same concurrency pool, so one function that consumes a large share of it can cause other functions to be throttled. To prevent this, you can set reserved concurrency on individual functions. For example, reserving 500 concurrent executions for a function guarantees that 500 executions are always available to it. The reservation also caps the function at 500, so it can never exceed that limit. Once a function reaches its reserved concurrency, further synchronous invocations are throttled with an HTTP 429 error. Asynchronous invocations are retried automatically, and events that still cannot be processed may be sent to a dead-letter queue or on-failure destination if one is configured.
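The paragraph above involves two things: estimating the concurrency a function needs, and reserving it. AWS's scaling documentation estimates concurrency as average requests per second multiplied by average request duration. At that rate, 1,000 requests per second lasting 0.5 seconds each need about 500 concurrent executions, which matches the 500-execution reservation in the example. The sketch below pairs that estimate with a hypothetical wrapper around the boto3 `put_function_concurrency` call. It assumes the default 1,000 account limit, and that Lambda always keeps 100 units of account concurrency unreserved.

```python
import math


def estimate_concurrency(requests_per_second, avg_duration_seconds):
    """Estimate steady-state concurrency as requests/second x average duration (s)."""
    # Round away floating-point noise, then round up to whole executions.
    return math.ceil(round(requests_per_second * avg_duration_seconds, 6))


def reserve_concurrency(client, function_name, reserved, account_limit=1000):
    """Set reserved concurrency on one function via a boto3 Lambda client.

    Lambda keeps at least 100 units of account concurrency unreserved, so at most
    account_limit - 100 can be reserved. This check considers only this function,
    not reservations already held by other functions (a simplifying assumption).
    """
    max_reservable = account_limit - 100
    if not 0 <= reserved <= max_reservable:
        raise ValueError(f"reserved concurrency must be between 0 and {max_reservable}")
    return client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=reserved,
    )
```

Setting reserved concurrency to 0 is allowed. It throttles every invocation, which is sometimes used to pause a function.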

Function Configuration Limits

- Memory Allocation: Assign between 128 MB and 10,240 MB to your Lambda function.

- Function Timeout: Functions can run for up to 15 minutes.

- Environment Variables Size: The total size of environment variables is limited to 4 KB.

- Layers per Function: Up to five layers can be added to a function.

- Concurrency Scaling: Each function can scale out by up to 1,000 execution environments every 10 seconds, until it reaches the account's concurrency limit.
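The scaling rate in the last item determines how quickly a function can absorb a burst of traffic. The sketch below is a deliberately simplified model. It assumes up to 1,000 environments in the first 10-second window and 1,000 more in each window after that, capped by the account limit. The helper names are hypothetical. Real scaling also depends on how long each request takes.

```python
def max_environments_after(seconds, account_limit=1000, step=1000, interval=10):
    """Simplified upper bound on a function's execution environments after `seconds`.

    Assumed model: up to `step` environments in the first window, plus `step`
    more every `interval` seconds, never exceeding the account limit.
    """
    windows = seconds // interval + 1
    return min(account_limit, step * windows)


def seconds_to_reach(target, account_limit=1000, step=1000, interval=10):
    """Earliest time, in the same simplified model, at which `target` environments can run."""
    if target > account_limit:
        return None  # unreachable without a concurrency limit increase
    windows_needed = -(-target // step)  # ceiling division
    return max(windows_needed - 1, 0) * interval
```

For example, with a 10,000 account limit this model reaches 3,000 concurrent environments after about 20 seconds. Under the default 1,000 limit, 1,500 environments cannot be reached until you request an increase.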

Additional Limits and Hard Restrictions

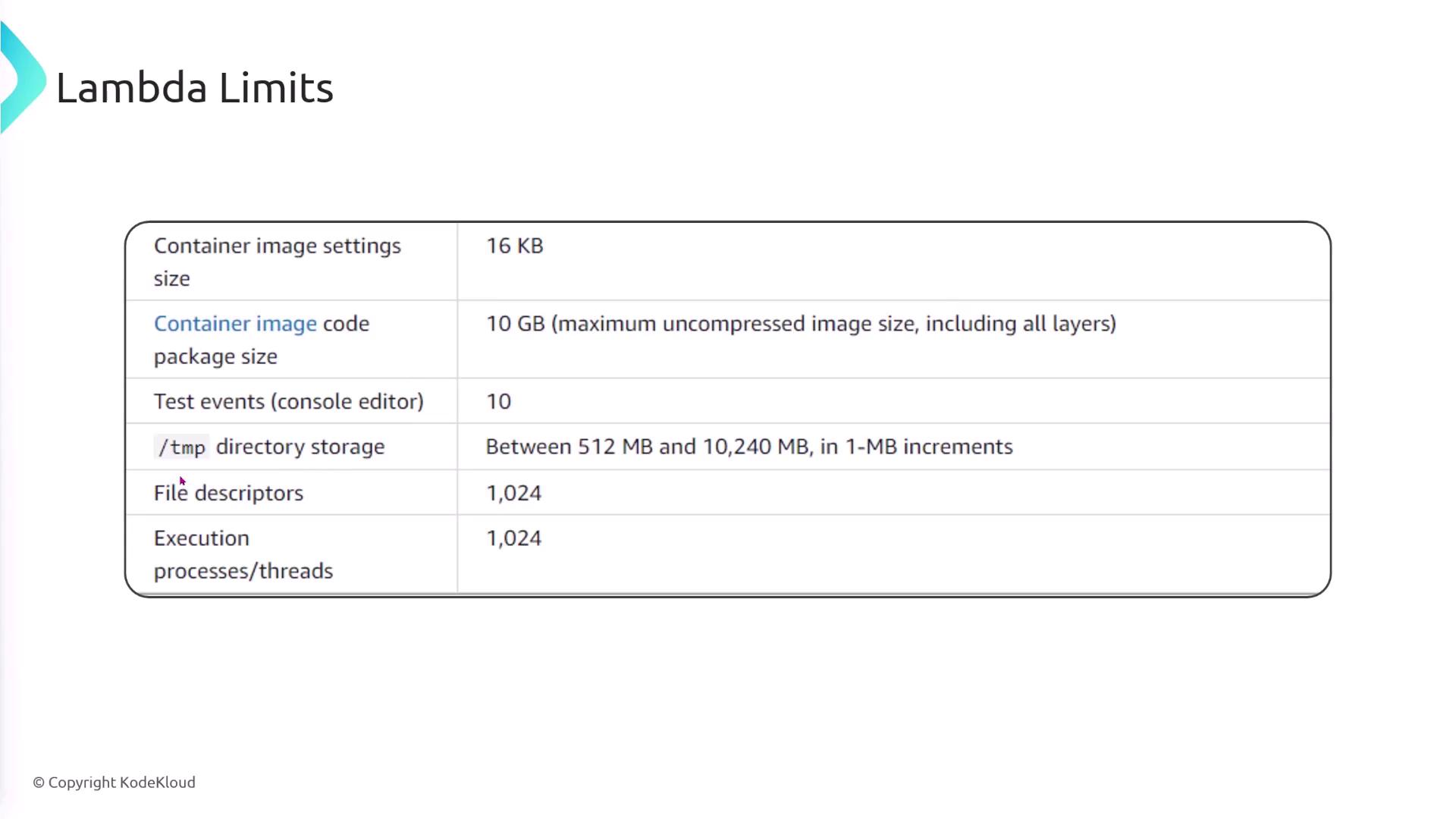

AWS Lambda also enforces additional specific limits:

- Container Image Size: Container image code packages can be up to 10 GB (uncompressed, including all image layers).

- Temporary Directory Storage (/tmp): Configurable from 512 MB (the default) up to 10,240 MB.

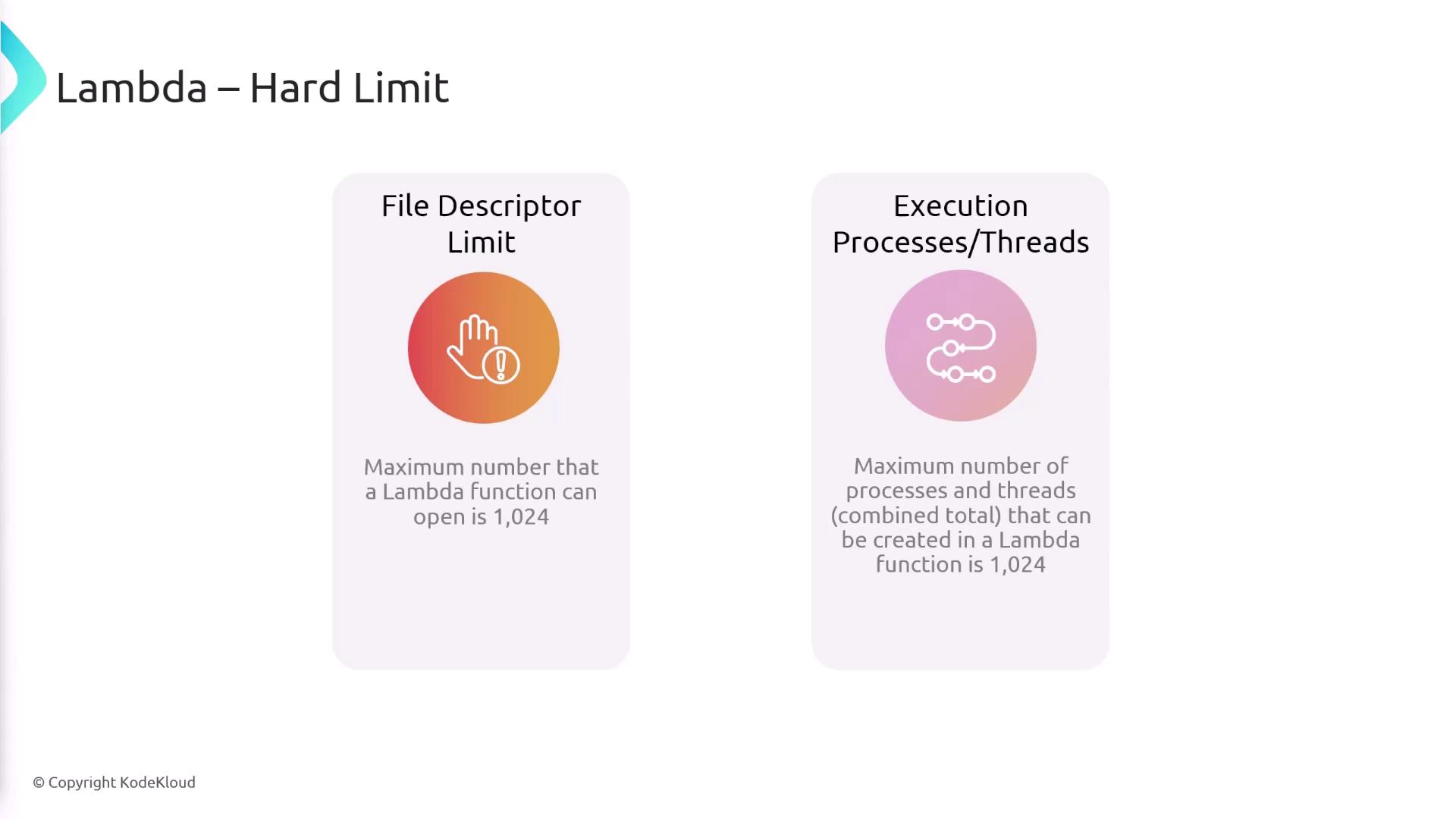

- File Descriptors and Threads: Limited to 1,024 file descriptors and 1,024 processes/threads per execution environment.
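To check the /tmp and file-descriptor limits from inside a running function, you can use the standard-library sketch below. It assumes Linux, which matches the Lambda environment (the `resource` module is Unix-only). Inside Lambda, `tempfile.gettempdir()` typically resolves to /tmp, whose size follows the ephemeral storage setting. Outside Lambda, the values describe the local machine. `describe_environment_limits` is a hypothetical helper.

```python
import resource
import shutil
import tempfile

MIB = 1024 * 1024


def describe_environment_limits():
    """Report temp-directory capacity and the open-file limit for the current process."""
    tmp_dir = tempfile.gettempdir()
    usage = shutil.disk_usage(tmp_dir)
    # RLIMIT_NOFILE is the per-process limit on open file descriptors.
    soft_nofile, hard_nofile = resource.getrlimit(resource.RLIMIT_NOFILE)
    return {
        "tmp_dir": tmp_dir,
        "tmp_total_mb": usage.total // MIB,
        "tmp_free_mb": usage.free // MIB,
        "open_files_soft_limit": soft_nofile,
        "open_files_hard_limit": hard_nofile,
    }
```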

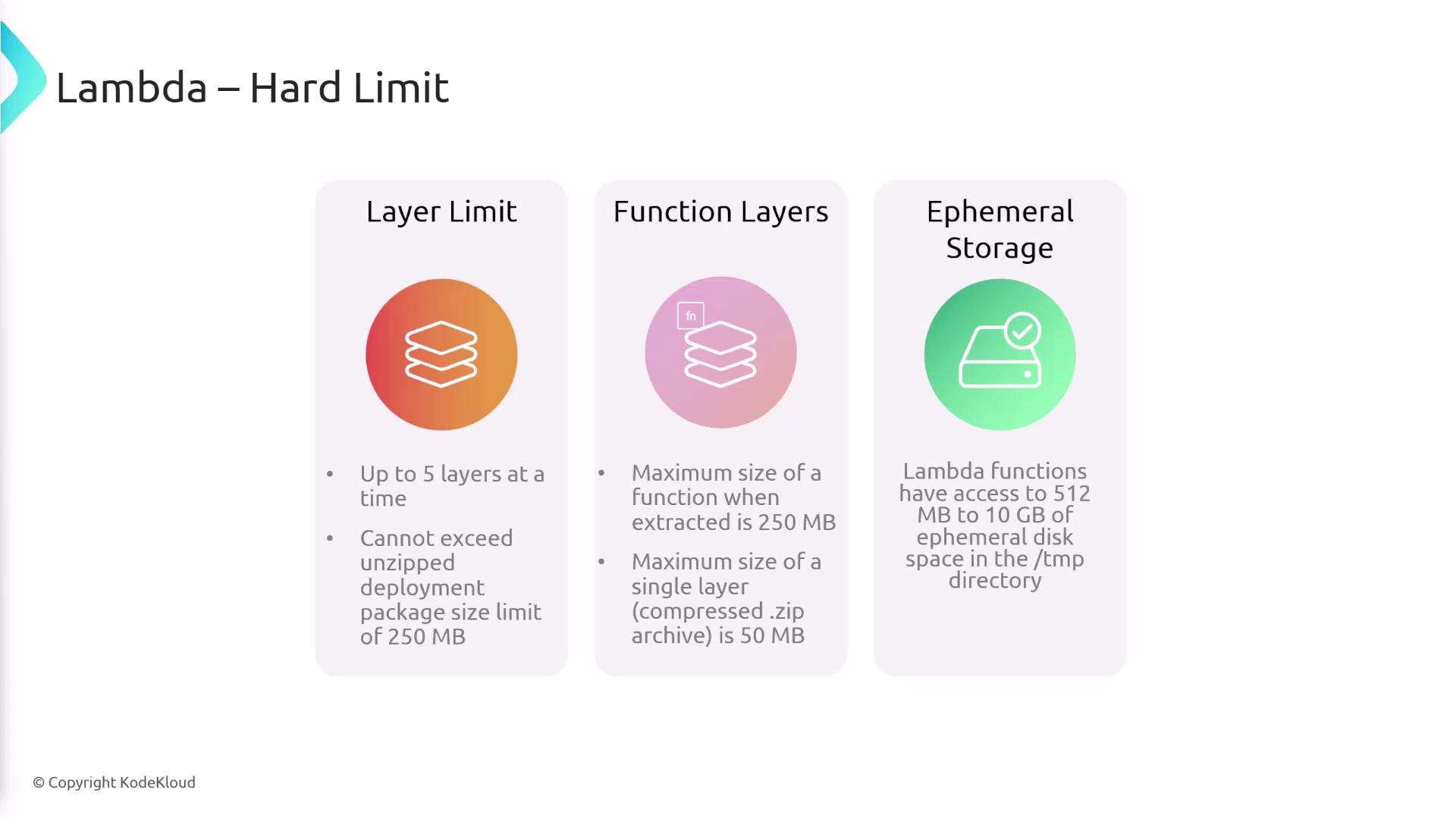

Hard Limits Overview

Below are the key hard limits applied to AWS Lambda functions:

- Memory: Maximum allocation of 10,240 MB.

- Function Timeout: Capped at 15 minutes.

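- Deployment Package Size: 50 MB (zipped) for direct uploads; 250 MB unzipped, including layers. Uploading through S3 lifts the 50 MB zipped limit, but not the 250 MB unzipped limit.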

- Environment Variables: Total size must not exceed 4 KB.

- Layers: A maximum of five layers per function. The combined unzipped size of the function code and all layers must stay within 250 MB.

- Temporary Storage (/tmp): Supports up to 10 GB.

- File Descriptors, Processes, and Threads: Up to 1,024 file descriptors and 1,024 processes/threads per execution environment.
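It can help to check a planned configuration against the limits in this list before deploying. The sketch below is a hypothetical validator: `validate_function_config` and `LIMITS` are illustrative names, not an AWS API. The environment-variable size is approximated by summing the UTF-8 bytes of keys and values, which is an assumption about how the 4 KB total is measured.

```python
MB = 1024 * 1024

# Hard limits from the list above (plus the 1-second minimum timeout and 512 MB /tmp minimum).
LIMITS = {
    "memory_mb": (128, 10_240),
    "timeout_s": (1, 900),  # 15 minutes
    "ephemeral_storage_mb": (512, 10_240),
    "max_layers": 5,
    "env_vars_bytes": 4 * 1024,
    "zipped_direct_upload_bytes": 50 * MB,
    "unzipped_bytes": 250 * MB,  # function code plus all layers
}


def validate_function_config(memory_mb, timeout_s, ephemeral_storage_mb=512,
                             layers=(), env_vars=None, zipped_bytes=0, unzipped_bytes=0):
    """Return a list of human-readable violations (empty if the config fits)."""
    problems = []
    for name, value, unit in (("memory_mb", memory_mb, "MB"),
                              ("timeout_s", timeout_s, "s"),
                              ("ephemeral_storage_mb", ephemeral_storage_mb, "MB")):
        low, high = LIMITS[name]
        if not low <= value <= high:
            problems.append(f"{name}={value} {unit} is outside {low}-{high} {unit}")
    if len(layers) > LIMITS["max_layers"]:
        problems.append(f"{len(layers)} layers exceeds the maximum of {LIMITS['max_layers']}")
    # Approximation: count the UTF-8 bytes of every key and value.
    env_size = sum(len(k.encode()) + len(v.encode()) for k, v in (env_vars or {}).items())
    if env_size > LIMITS["env_vars_bytes"]:
        problems.append(f"environment variables (~{env_size} bytes) exceed 4 KB")
    if zipped_bytes > LIMITS["zipped_direct_upload_bytes"]:
        problems.append("zipped package exceeds the 50 MB direct-upload limit; upload via S3")
    if unzipped_bytes > LIMITS["unzipped_bytes"]:
        problems.append("unzipped code plus layers exceeds 250 MB")
    return problems
```

For example, a 60 MB zipped package that unzips to 200 MB triggers only the direct-upload warning, because it can still be deployed through S3.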

Important

Make sure your application's requirements fit within AWS Lambda's limits to avoid throttling, failed deployments, or resource exhaustion. For critical workloads, request increases to soft limits ahead of demand. Design around hard limits, such as the 15-minute timeout, because they cannot be raised.

Summary

Cold starts add latency when Lambda must initialize a new execution environment for your function, for example after a period of inactivity or when scaling out. Provisioned concurrency alleviates this by keeping a defined number of environments initialized and ready for use. AWS Lambda enforces both soft and hard limits on resources such as concurrent executions, memory, and deployment package size. Being aware of these constraints and managing concurrency appropriately is key to maintaining consistent performance across your serverless applications.

Memory for your Lambda functions can range from 128 MB to 10,240 MB, and the maximum function timeout is 15 minutes. Deployment packages are limited to 50 MB (zipped) for direct uploads and 250 MB unzipped, including layers. Larger zipped archives can be uploaded through S3 as long as they stay within the 250 MB unzipped limit.

For further details, always refer to the AWS Lambda Documentation.
