Flat Networking in Kubernetes

Kubernetes mandates a flat pod network: every pod must have a unique IP and be able to reach any other pod directly, without NAT or VLANs. All segmentation and isolation occur via higher-level constructs like NetworkPolicies.

AWS VPC and Subnets

AWS uses a Virtual Private Cloud (VPC) as your networking boundary. Inside a VPC, subnets carve out IP ranges for resources. Example:

- VPC CIDR: 10.10.0.0/16

- Subnet CIDR: 10.10.1.0/24 (≈250 usable IPs after AWS reservations)

Choose subnet CIDRs with enough headroom for both node ENIs and pod IP assignments when using the AWS VPC CNI.
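To make the headroom arithmetic concrete, here is a minimal sketch using Python's standard `ipaddress` module. It assumes IPv4 and the five addresses AWS reserves in every subnet (the first four and the last); the helper name `usable_ips` is made up for this illustration.

```python
import ipaddress

# AWS reserves the first four addresses and the last address of every subnet.
AWS_RESERVED_PER_SUBNET = 5


def usable_ips(cidr: str) -> int:
    """Return how many addresses in an AWS subnet can be assigned to resources."""
    network = ipaddress.ip_network(cidr)
    return network.num_addresses - AWS_RESERVED_PER_SUBNET


vpc = ipaddress.ip_network("10.10.0.0/16")
subnet = ipaddress.ip_network("10.10.1.0/24")

print(subnet.subnet_of(vpc))       # True: the subnet sits inside the VPC range
print(usable_ips("10.10.1.0/24"))  # 251
```

Running the same helper on candidate ranges (for example a /22 or /20) is a quick way to compare how many node and pod addresses each would leave available.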

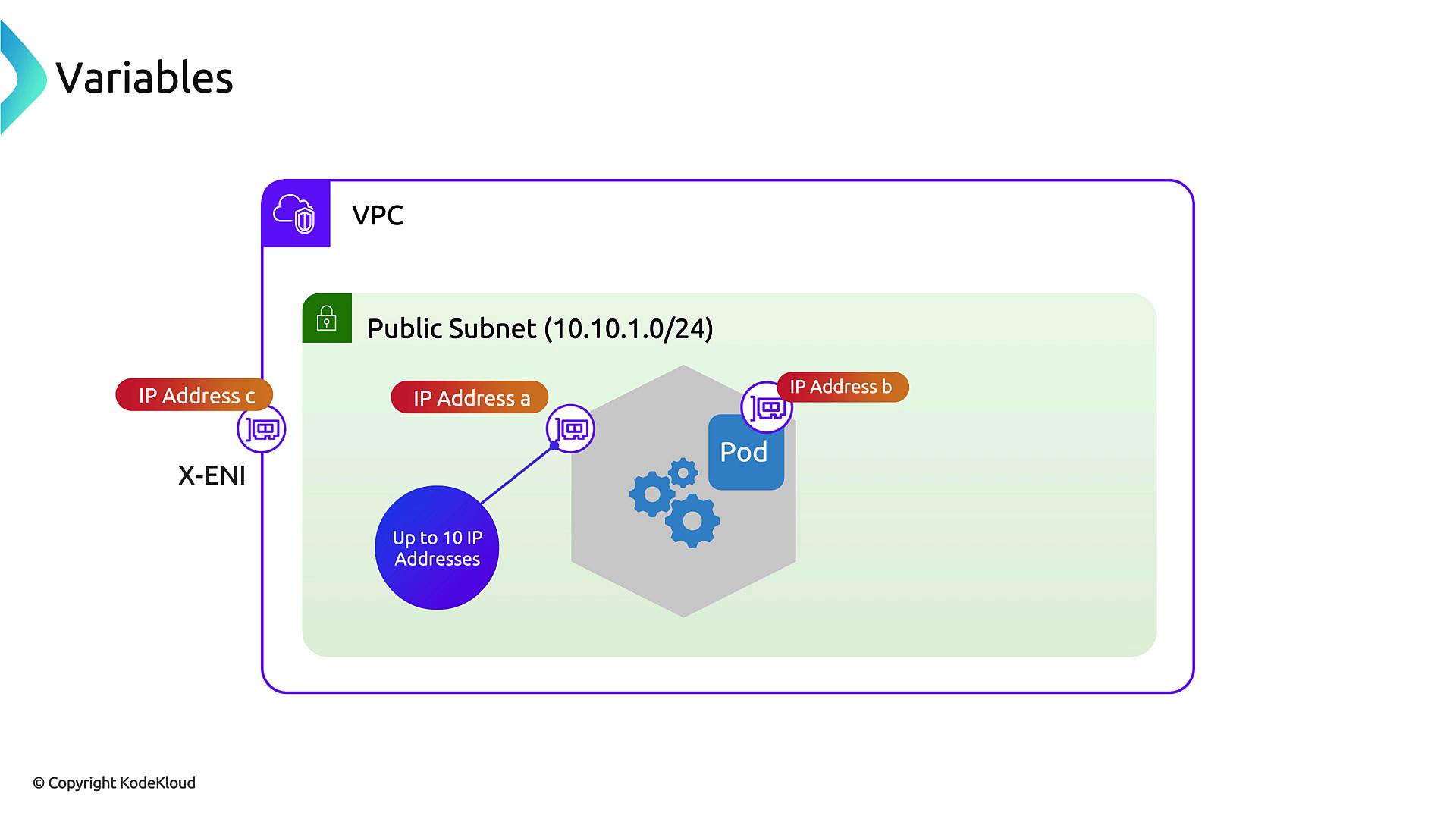

Nodes, ENIs, and API Server Connectivity

Each EKS node (EC2 instance) attaches an Elastic Network Interface (ENI) in your VPC. AWS also adds a cross-account ENI (X-ENI) so nodes can reach the Amazon-hosted control plane:

- Node ENI: Assigned dynamically to each worker node for VPC communications.

- X-ENI: Enables secure API traffic from your nodes to the EKS control plane outside your account.

Pod IP Allocation with AWS VPC CNI

The AWS VPC CNI plugin assigns each pod an IP from your VPC subnet by adding secondary IPs to the node’s ENI. Internally, the Linux kernel routes those addresses into pod network namespaces. Key characteristics:

- 1 VPC IP per pod

- Up to N secondary IPs per ENI (instance-type dependent)

- Leverages AWS’s network fabric—no overlays or tunnels required
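The per-ENI limit translates into a per-node pod ceiling. The sketch below uses the formula commonly applied to EKS nodes when prefix delegation is not enabled; the formula and the instance figures are assumptions added for illustration rather than part of the text above, so check the current AWS limits for your instance type before relying on them.

```python
def max_pods(enis: int, ips_per_eni: int) -> int:
    """Estimate the pod ceiling for a node using secondary-IP mode.

    One address on each ENI is its primary IP and is not handed to pods.
    The +2 allows for pods that run on the host network and therefore
    do not consume a secondary IP.
    """
    return enis * (ips_per_eni - 1) + 2


# Illustrative figures (assumed): an instance with 3 ENIs and 10 IPs per ENI.
print(max_pods(enis=3, ips_per_eni=10))  # 29
# A smaller instance with 3 ENIs and 6 IPs per ENI.
print(max_pods(enis=3, ips_per_eni=6))   # 17
```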

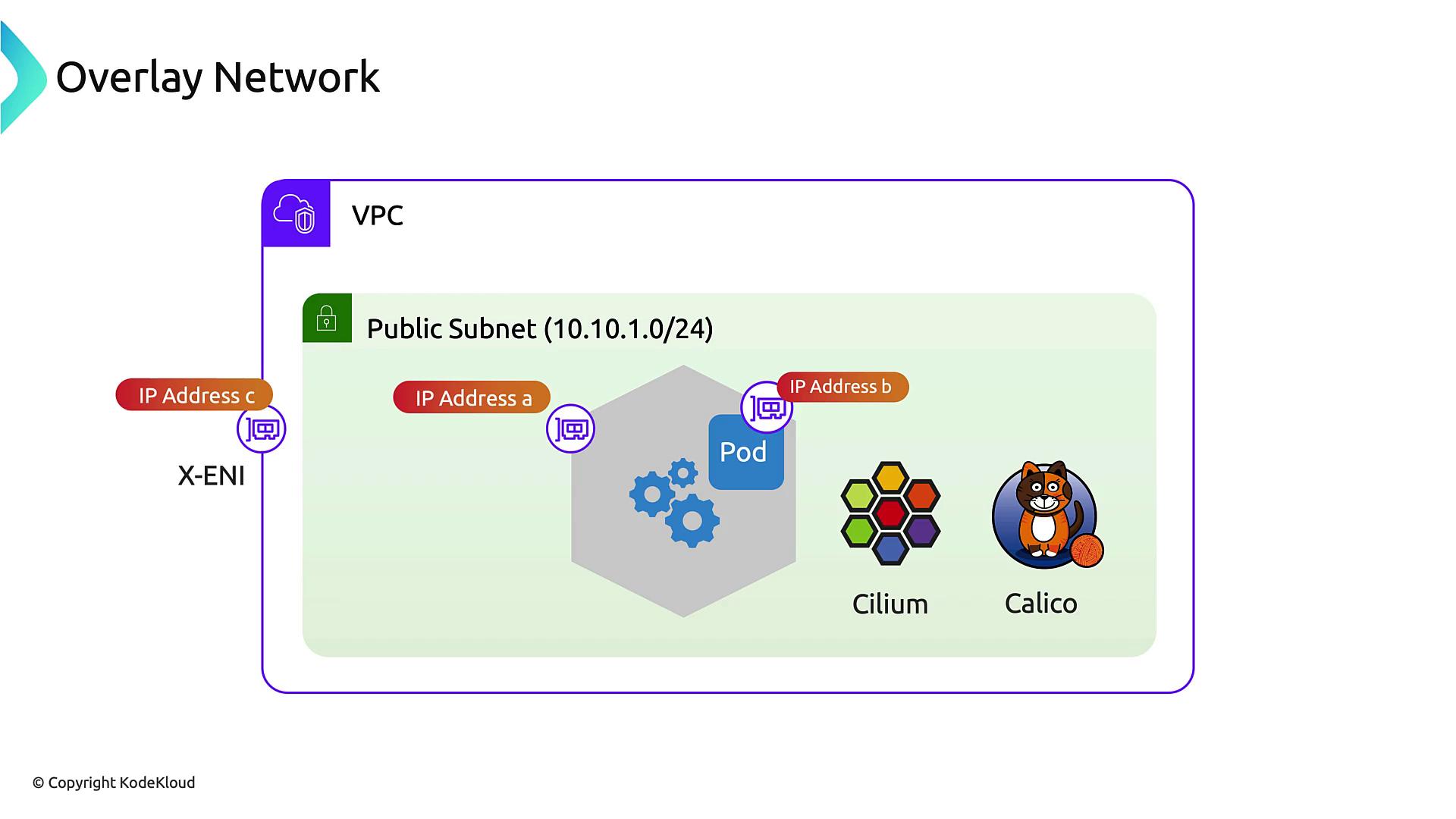

Overlay Networking Alternatives

If you’d rather not consume VPC IPs for pods, consider overlay CNIs such as Flannel, Cilium, or Calico. They allocate pod IPs from an independent CIDR and encapsulate traffic between nodes.

| Plugin | Pod IP Source | Overlay | Encapsulation Overhead |
|---|---|---|---|
| AWS VPC CNI | VPC subnet | No | Low |
| Flannel | Custom CIDR | Yes | Medium (VXLAN) |
| Cilium | Custom CIDR | Yes | Variable (e.g., Geneve) |
| Calico | Custom CIDR | Yes | Low (IP-in-IP) |

Benefits of an overlay:

- Preserve VPC IP space

- Decouple pod addressing from VPC design

Trade-offs:

- Extra encapsulation latency

- Additional configuration and monitoring
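One way to see the encapsulation overhead listed in the table is to compute the MTU left for pod traffic once the outer headers are added. This is a rough sketch assuming IPv4 outer headers and the standard header sizes; Geneve is left out because its options make the overhead variable. The function name `pod_mtu` and the 1500-byte link MTU are illustrative choices.

```python
# Extra bytes each encapsulation adds per packet (IPv4 outer headers assumed).
ENCAP_OVERHEAD_BYTES = {
    "none": 0,    # native routing, e.g. AWS VPC CNI
    "ipip": 20,   # one additional outer IPv4 header
    "vxlan": 50,  # outer Ethernet 14 + IPv4 20 + UDP 8 + VXLAN 8
}


def pod_mtu(link_mtu: int, encapsulation: str) -> int:
    """Return the largest packet a pod can send without fragmentation."""
    return link_mtu - ENCAP_OVERHEAD_BYTES[encapsulation]


for mode in ("none", "ipip", "vxlan"):
    print(mode, pod_mtu(1500, mode))
# none 1500
# ipip 1480
# vxlan 1450
```

Setting the pod interface MTU to a value like these in the CNI configuration avoids fragmentation on the underlying network.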

Managing IP Consumption

When using the AWS VPC CNI, plan your subnet sizes and pods-per-node carefully. Each ENI has a maximum number of secondary IPs, and both that limit and network throughput depend on instance type. Exhausting your subnet’s IP pool leaves new pods unable to obtain an address, so they fail to start. Check `kubectl describe node` for per-node pod capacity and allocations, and right-size your VPC ranges accordingly.
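For a cluster-wide view, the same capacity figures can be read from `kubectl get nodes -o json`. The sketch below is a minimal example that parses that JSON and sums each node's `status.allocatable.pods`; the function name, node names, and sample document are hypothetical, and the result is a pod ceiling rather than live IP usage.

```python
import json


def pod_capacity(nodes_json: str) -> dict[str, int]:
    """Map each node name to its allocatable pod count."""
    nodes = json.loads(nodes_json)["items"]
    return {
        node["metadata"]["name"]: int(node["status"]["allocatable"]["pods"])
        for node in nodes
    }


# Hypothetical, trimmed-down output of `kubectl get nodes -o json`.
sample = json.dumps({
    "items": [
        {"metadata": {"name": "node-a"}, "status": {"allocatable": {"pods": "29"}}},
        {"metadata": {"name": "node-b"}, "status": {"allocatable": {"pods": "17"}}},
    ]
})

capacity = pod_capacity(sample)
print(capacity)                # {'node-a': 29, 'node-b': 17}
print(sum(capacity.values()))  # 46
```

Comparing the total against the usable addresses in the node subnets gives an early signal that a range is too small for the planned pod density.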