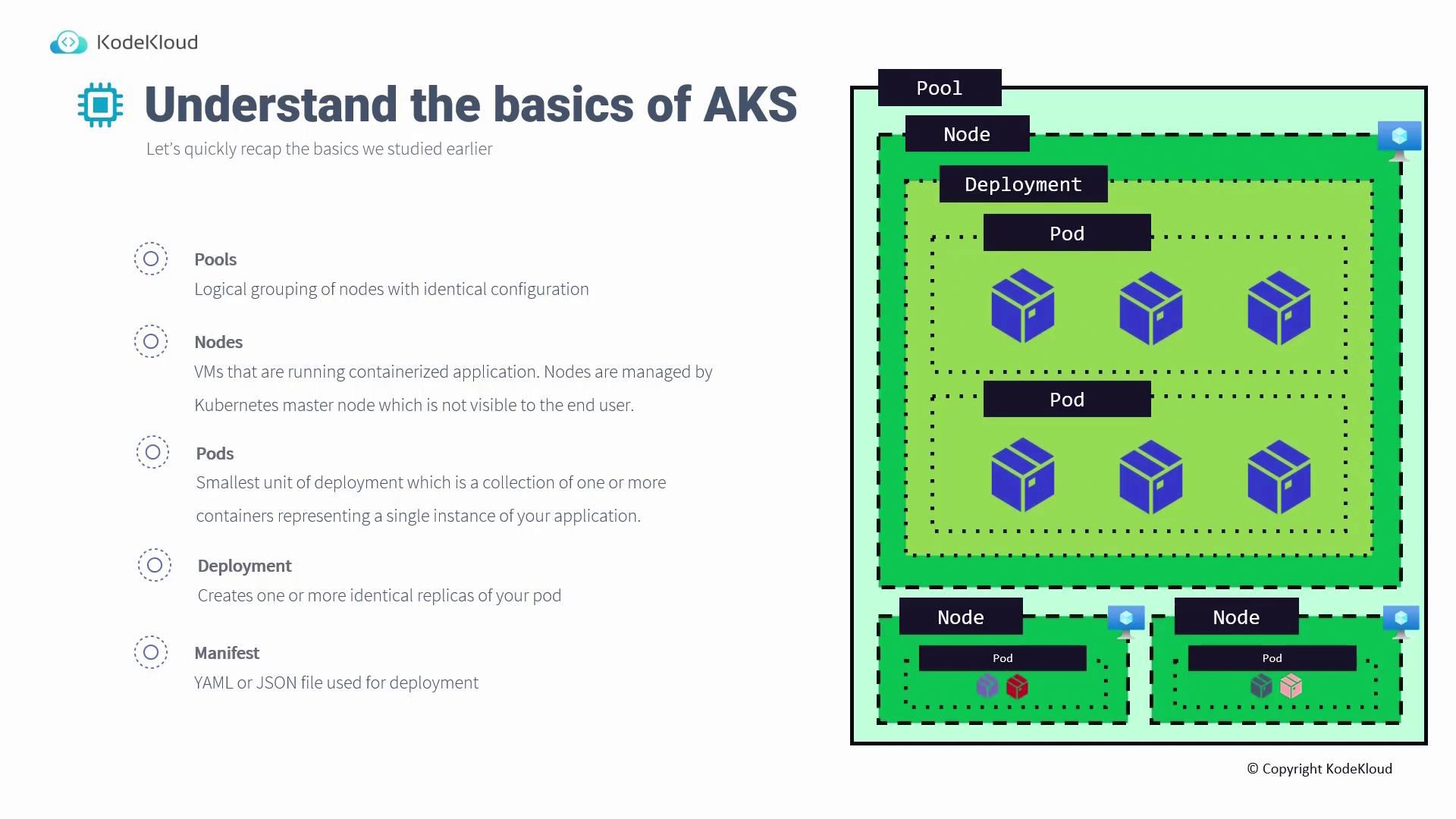

Key Concepts of AKS

Pools and Nodes

Pools are logical groupings of nodes with identical configurations. For example, you might have separate pools for Linux machines, Windows machines, or GPU-enabled machines. This grouping ensures that containers are scheduled onto nodes equipped with the necessary capabilities. Within each pool, nodes (which are virtual machines) host your containerized applications. In AKS, these nodes are managed by the Kubernetes control plane (the master node), which is abstracted from the end user because AKS is a managed service. Microsoft operates and maintains the control plane for you.

Pods

A pod is the smallest deployable unit in a Kubernetes cluster. It represents a collection of one or more containers that collectively run a single instance of your application. Each pod is defined by a pod manifest that outlines its container configuration.

Deployments

Deployments manage the creation and maintenance of identical pod replicas. For instance, a deployment configured with two pods ensures that at all times, two identically configured pods are active. If one pod is deleted or fails, the system automatically creates a replacement to maintain the desired state.

Manifests

Manifests are YAML or JSON files used to deploy resources on Kubernetes. They define the configuration settings and desired state of your applications.
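To tie pools, pods, deployments, and manifests together, here is a minimal sketch that builds a Deployment manifest as a Python dictionary and serializes it to JSON. The application name `web`, the image `nginx:1.25`, the pool name `linuxpool`, and the helper `build_deployment` are all hypothetical. The `kubernetes.azure.com/agentpool` node label is assumed to be the label AKS applies to nodes in a pool, so verify it against your own cluster before relying on it.

```python
import json


def build_deployment(name: str, image: str, replicas: int, pool: str) -> dict:
    """Return a Deployment manifest as a plain dict that can be dumped to JSON."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired state: how many identical pod replicas should exist.
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            # The pod template: the "pod manifest" each replica is created from.
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Assumption: nodes carry this label with their pool name.
                    "nodeSelector": {"kubernetes.azure.com/agentpool": pool},
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


manifest = build_deployment("web", "nginx:1.25", replicas=2, pool="linuxpool")
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Saving the printed JSON to a file and applying it with `kubectl apply -f <file>` would ask the cluster to keep two replicas of the pod running on nodes in the chosen pool.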

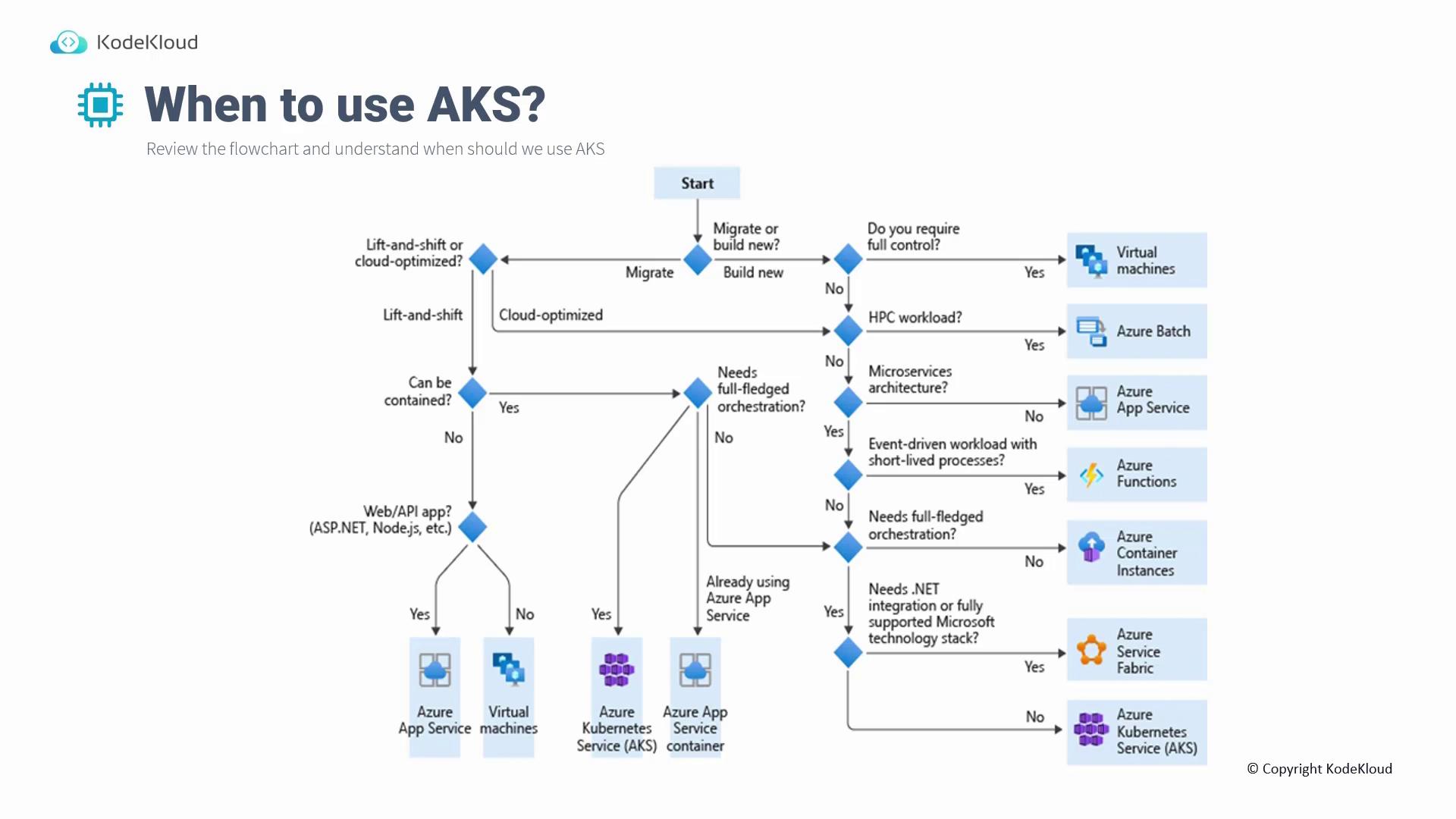

When to Use AKS

Determining whether AKS is the right choice for your application is essential. The decision flowchart below illustrates scenarios where containerized applications—whether for lift-and-shift, migration, or enhanced cloud optimization—would benefit from full-fledged orchestration using AKS.

Considerations for Using AKS

When planning your AKS deployment, consider the following key aspects:

Scalability

AKS provides robust scaling options suitable for large-scale containerized applications. There are two primary scaling mechanisms:

- Horizontal Pod Autoscaler: Dynamically adjusts the number of pod replicas in response to changes in demand.

- Cluster Autoscaler: Automatically increases or decreases the number of nodes in your cluster based on workload requirements.
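As a rough illustration of how the Horizontal Pod Autoscaler picks a replica count, the sketch below applies the core formula from the Kubernetes documentation, `desired = ceil(current_replicas * current_metric / target_metric)`, and clamps the result to a minimum and maximum. The function name and the default bounds are hypothetical, and the real controller also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Simplified HPA calculation: scale in proportion to metric pressure."""
    # Ratio > 1 means pods are busier than the target, so scale out.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Never go below the minimum or above the maximum replica count.
    return max(min_replicas, min(max_replicas, raw))


# Two pods averaging 90% CPU against a 50% target.
print(desired_replicas(2, 90, 50))
# Four pods averaging 20% CPU against a 50% target.
print(desired_replicas(4, 20, 50))
```

When the extra pods no longer fit on the existing nodes, the Cluster Autoscaler is the mechanism that adds nodes to make room for them.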

Network Segmentation

For scenarios requiring pod-to-pod communication or integration with on-premises systems, network segmentation is critical. AKS can be deployed within an existing Virtual Network (VNet), enabling integration with:

- VNet peering

- ExpressRoute

- VPN connectivity

Integrating with your existing network infrastructure can enhance security and performance by isolating network traffic.

Version Upgrades

Kubernetes continuously evolves, and periodic version upgrades are necessary. AKS automates the upgrade process, including cordoning, draining, and uncordoning nodes. This reduces downtime and the amount of manual intervention needed when updating your cluster.

Storage and Image Repository

For persistent storage, AKS supports:

- Azure Files

- Azure Disks
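Persistent storage is usually requested through a PersistentVolumeClaim manifest. The sketch below builds one as a Python dictionary. The helper `build_pvc`, the claim names, and the sizes are hypothetical, and the storage class names `managed-csi` (Azure Disks) and `azurefile-csi` (Azure Files) are assumed to be present in your cluster, so check them with `kubectl get storageclass` first.

```python
import json


def build_pvc(name: str, storage_class: str, size: str, shared: bool) -> dict:
    """Return a PersistentVolumeClaim manifest as a plain dict."""
    # A disk attaches to one node at a time; a file share can be mounted by many.
    access_mode = "ReadWriteMany" if shared else "ReadWriteOnce"
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# Assumed storage class names; adjust to what your cluster actually offers.
disk_claim = build_pvc("db-data", "managed-csi", "5Gi", shared=False)
files_claim = build_pvc("shared-assets", "azurefile-csi", "10Gi", shared=True)
print(json.dumps([disk_claim, files_claim], indent=2))
```

The design choice to note is the access mode: Azure Disks suit a single pod that needs its own volume, while Azure Files suit several pods that share the same data.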

Load Balancing and Ingress Control

AKS integrates with native Azure load balancing solutions, including Azure Load Balancer and the Application Gateway Ingress Controller. These services distribute traffic across your pods and remove the need for third-party load balancing solutions.

Summary Table of AKS Components

| Component | Functionality | Example Usage |
|---|---|---|
| Pool | Logical grouping of nodes with similar configurations | Separate pools for Linux, Windows, or GPU nodes |
| Node | Virtual machine hosting containerized applications | A node running multiple pods |
| Pod | Smallest deployable unit consisting of one or more containers | A pod running a microservice instance |
| Deployment | Manages replicas of pods ensuring desired state is maintained | A deployment configured with 3 replicas |
| Manifest | YAML/JSON file defining Kubernetes configurations and desired state | A pod manifest file |