Amazon Elastic Container Service (AWS ECS)

Deploying a new application from scratch

Demo: Creating a Multi-Container Application

In this guide, we demonstrate how to build and deploy a multi-container application on Amazon ECS using Fargate. We will create a task definition, configure containers for MongoDB and an Express-based web API, mount an Elastic File System (EFS) volume for persistent MongoDB data, and launch a service behind an Application Load Balancer (ALB) for efficient traffic routing.

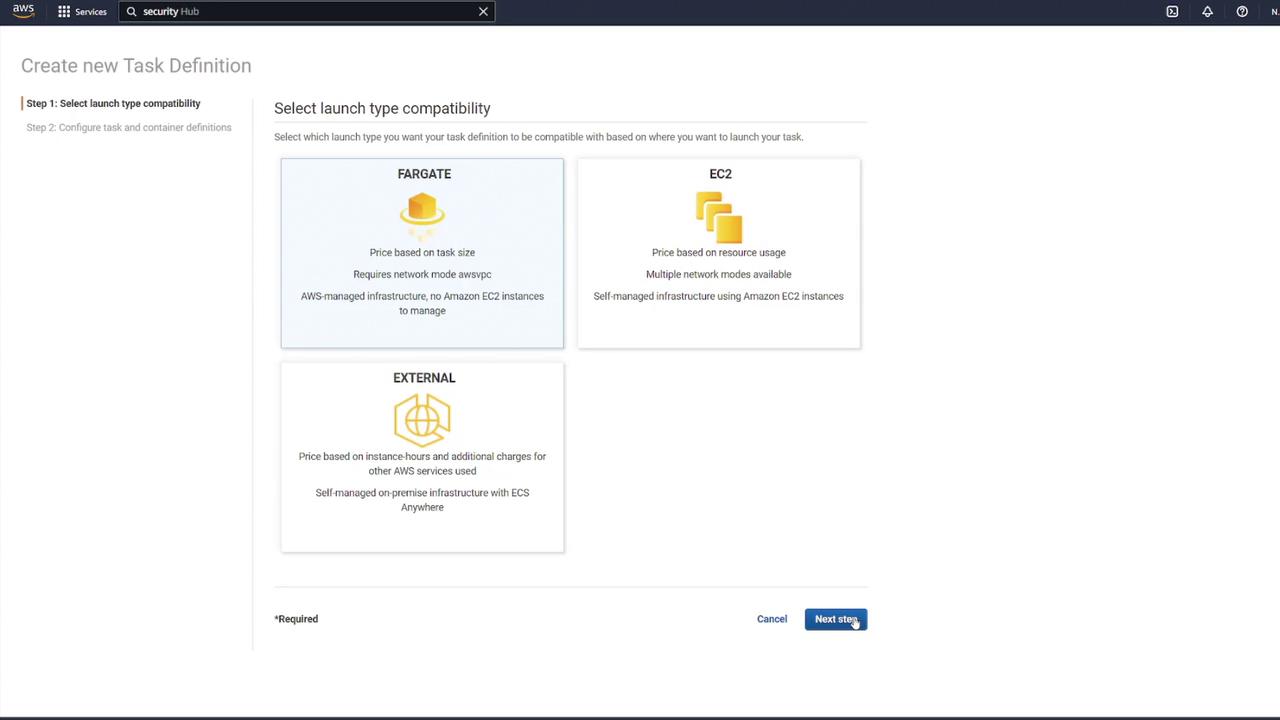

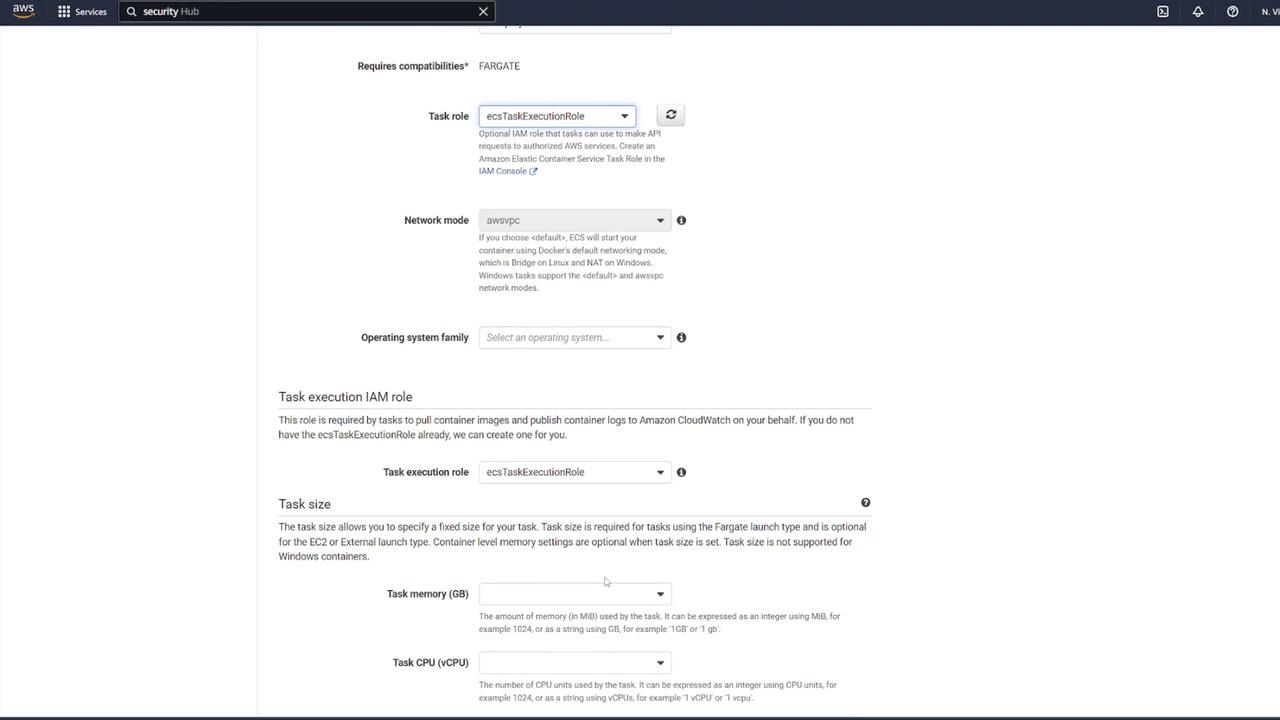

1. Creating the Task Definition

Begin by navigating to your ECS dashboard and selecting Task Definitions. Create a new task definition and choose Fargate as the launch type. Give it a meaningful name (for example, "ECS-Project1"), assign the ECS task execution role, and select the smallest available CPU and memory size (0.25 vCPU with 0.5 GB of memory). Then proceed to add your containers.
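If you prefer to keep the task definition in version control, the console steps above roughly correspond to the JSON skeleton below. This is a minimal Python sketch that only builds the task-level settings; the account ID and role ARN are hypothetical placeholders, and 256 CPU units with 512 MiB of memory is the smallest Fargate size.

```python
import json

def base_task_definition(family: str, execution_role_arn: str) -> dict:
    """Minimal Fargate task definition skeleton; containers are added later."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        # Fargate tasks must use the awsvpc network mode.
        "networkMode": "awsvpc",
        # Smallest Fargate size: 0.25 vCPU (256 CPU units) and 512 MiB of memory.
        "cpu": "256",
        "memory": "512",
        # Task execution role: lets ECS pull images and write logs for the task.
        "executionRoleArn": execution_role_arn,
        "containerDefinitions": [],
    }

if __name__ == "__main__":
    # Hypothetical account ID and role name; substitute your own.
    task_def = base_task_definition(
        "ECS-Project1", "arn:aws:iam::123456789012:role/ecsTaskExecutionRole"
    )
    print(json.dumps(task_def, indent=2))
```

Once the container definitions are filled in, a file produced this way could be registered with `aws ecs register-task-definition --cli-input-json file://taskdef.json`.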

2. Configuring the MongoDB Container

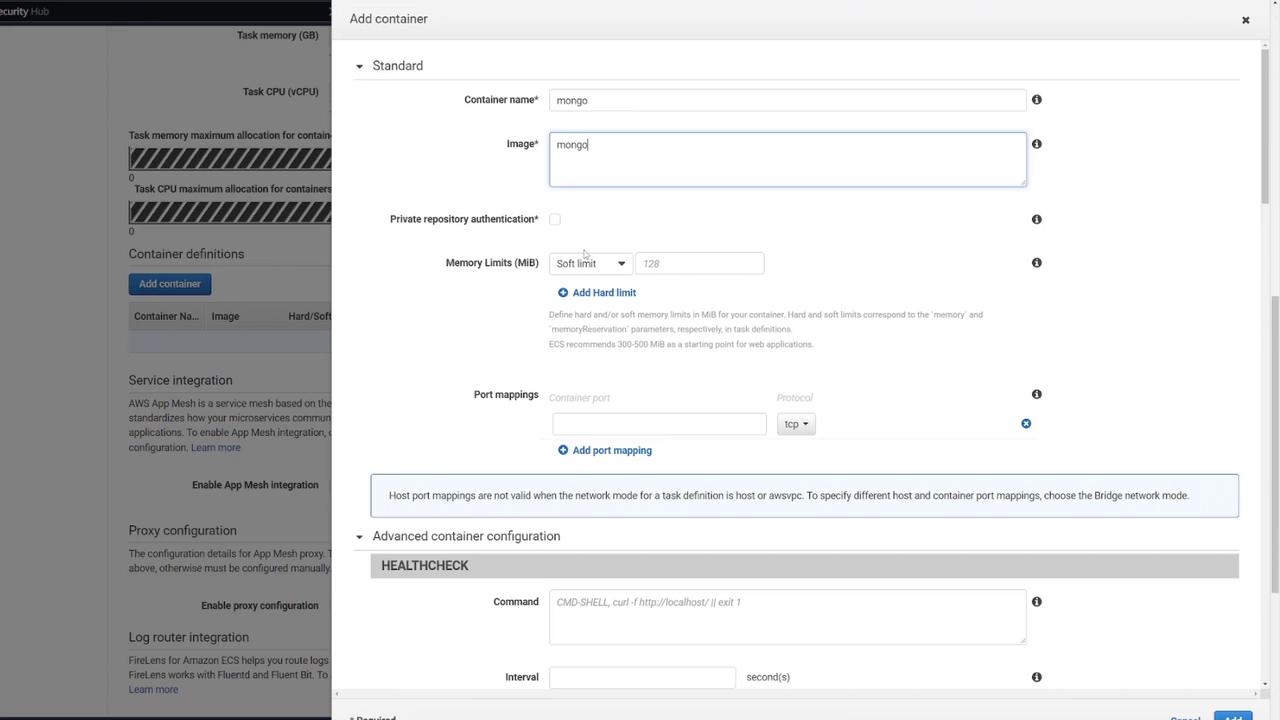

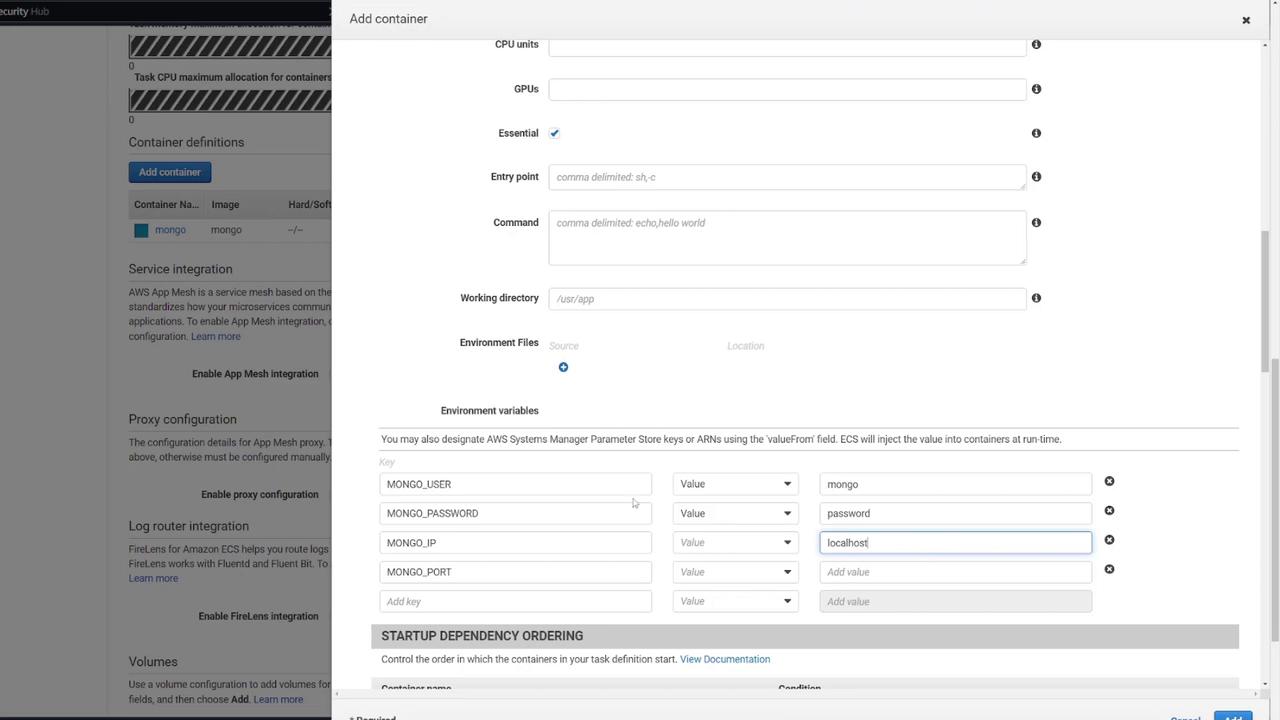

The first container will run the MongoDB database. Configure it with the following steps:

- Name the container "mongo" and use the official `mongo` image from Docker Hub.

- Set the port mapping to 27017 (MongoDB’s default port).

- Define the necessary environment variables for MongoDB initialization.

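Health Check

You can add an optional health check for MongoDB. The console's placeholder command, `CMD-SHELL, curl -f http://localhost/ || exit 1`, does not suit this container, because MongoDB speaks its own wire protocol on port 27017 rather than HTTP. A MongoDB-specific check works better, for example (this assumes a MongoDB 6.0 or later image, which ships the `mongosh` shell):

CMD-SHELL, mongosh --quiet --eval "db.adminCommand('ping')" || exit 1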
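For reference, the MongoDB container configured in this step could be expressed as an ECS container definition like the one below. It is a sketch under a few assumptions: because MongoDB does not answer HTTP requests, the health check uses a `mongosh` ping instead of `curl` (this requires an image that ships `mongosh`, such as MongoDB 6.0 or later), and the 60-second `startPeriod` is an illustrative value rather than something from the walkthrough.

```python
import json

def mongo_container(password: str) -> dict:
    """ECS container definition for MongoDB, mirroring the console settings."""
    return {
        "name": "mongo",
        "image": "mongo",  # official image from Docker Hub
        "essential": True,
        "portMappings": [{"containerPort": 27017, "protocol": "tcp"}],
        "environment": [
            {"name": "MONGO_INITDB_ROOT_USERNAME", "value": "mongo"},
            {"name": "MONGO_INITDB_ROOT_PASSWORD", "value": password},
        ],
        "healthCheck": {
            # Assumes an image that includes mongosh (MongoDB 6.0 and later).
            "command": [
                "CMD-SHELL",
                "mongosh --quiet --eval \"db.adminCommand('ping')\" || exit 1",
            ],
            "interval": 30,     # seconds between checks (ECS default)
            "timeout": 5,       # seconds before a check counts as failed (ECS default)
            "retries": 3,       # consecutive failures before UNHEALTHY (ECS default)
            "startPeriod": 60,  # assumed grace period while mongod starts up
        },
    }

if __name__ == "__main__":
    print(json.dumps(mongo_container("password"), indent=2))
```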

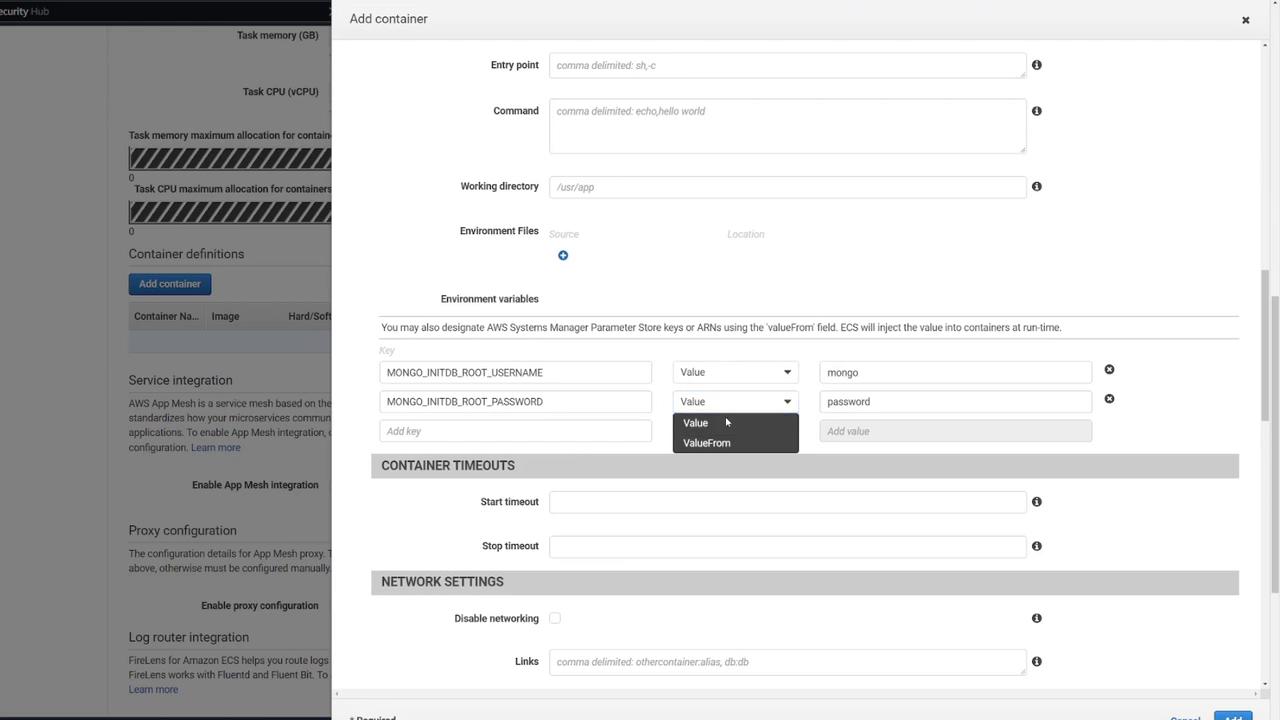

Next, add the MongoDB environment variables, taken from the `mongo` service in your docker-compose configuration:

    mongo:
      image: mongo
      environment:
        - MONGO_INITDB_ROOT_USERNAME=mongo
        - MONGO_INITDB_ROOT_PASSWORD=password
      volumes:
        - mongo-db:/data/db

    volumes:
      mongo-db:
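Compose lists environment variables as `KEY=VALUE` strings, while an ECS container definition expects a list of `name`/`value` objects. A small helper, shown here as an illustrative sketch with a hypothetical function name, makes that mapping explicit:

```python
def compose_env_to_ecs(entries: list[str]) -> list[dict[str, str]]:
    """Convert docker-compose 'KEY=VALUE' strings into ECS environment entries."""
    converted = []
    for entry in entries:
        # Split on the first '=' only, so values may themselves contain '='.
        name, sep, value = entry.partition("=")
        if not sep or not name:
            # Compose also accepts a bare 'KEY' (value taken from the shell);
            # an ECS environment entry needs an explicit value, so reject it.
            raise ValueError(f"expected KEY=VALUE, got {entry!r}")
        converted.append({"name": name, "value": value})
    return converted

if __name__ == "__main__":
    print(compose_env_to_ecs([
        "MONGO_INITDB_ROOT_USERNAME=mongo",
        "MONGO_INITDB_ROOT_PASSWORD=password",
    ]))
```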

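Security Notice

Using "password" as the MongoDB password is insecure and is done here only for demonstration. In a real deployment, store credentials in AWS Secrets Manager or Systems Manager Parameter Store and reference them through the container definition's secrets setting instead of plain environment variables.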

After confirming the settings, close the MongoDB container configuration.

3. Configuring the Web API Container

Next, configure the second container to host your Express-based web API:

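- Add a new container named, for example, "notes-api" (ECS container names may contain only letters, numbers, hyphens, and underscores, so avoid spaces).

- Use the `kodekloud/ecs-project2` image from Docker Hub, the same image referenced in the compose file.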

- Set the container to listen on port 3000.

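Set the web API's environment variables so that `MONGO_USER` and `MONGO_PASSWORD` match the credentials used to initialize MongoDB (`MONGO_INITDB_ROOT_USERNAME` and `MONGO_INITDB_ROOT_PASSWORD`). The docker-compose file for both services looks like this: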

    version: "3"
    services:
      api:
        build: .
        image: kodekloud/ecs-project2
        environment:
          - MONGO_USER=mongo
          - MONGO_PASSWORD=password
          - MONGO_IP=mongo
          - MONGO_PORT=27017
        ports:
          - "3000:3000"
      mongo:
        image: mongo
        environment:
          - MONGO_INITDB_ROOT_USERNAME=mongo
          - MONGO_INITDB_ROOT_PASSWORD=password
        volumes:
          - mongo-db:/data/db
    volumes:
      mongo-db:

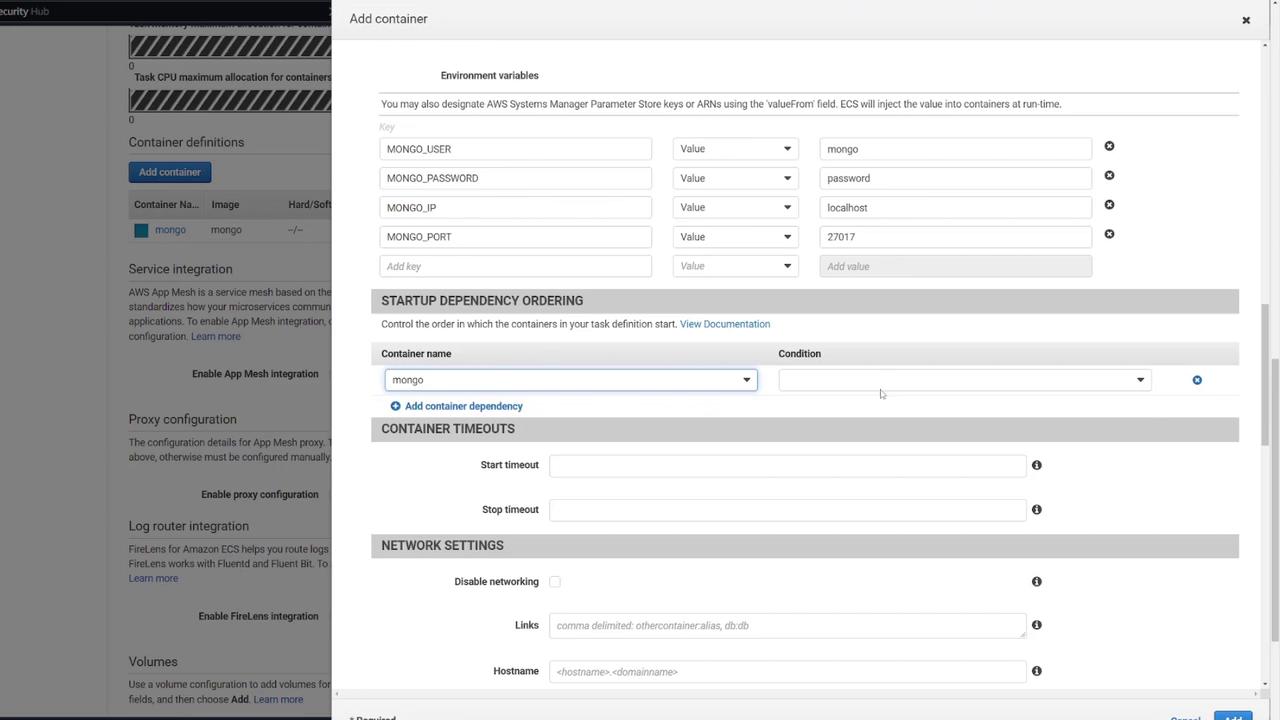

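Although Docker Compose provides built-in DNS resolution between services (which is why the compose file sets `MONGO_IP=mongo`), containers in an ECS task cannot reach each other by container name. On Fargate, every task uses the `awsvpc` network mode, so all containers in the task share one network namespace. Set `MONGO_IP` to `localhost` in the web API's container definition so that it connects to MongoDB on `localhost:27017`.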
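Here is a sketch of the web API container definition with that change applied. The container name "notes-api" matches the one shown later when checking the running task; passing the credentials as plain environment variables mirrors the walkthrough and is only suitable for a demo.

```python
import json

def api_container(mongo_user: str, mongo_password: str) -> dict:
    """ECS container definition for the Express web API."""
    env = {
        "MONGO_USER": mongo_user,
        "MONGO_PASSWORD": mongo_password,
        # All containers in an awsvpc task share one network namespace,
        # so MongoDB is reached on localhost, not via the compose name "mongo".
        "MONGO_IP": "localhost",
        "MONGO_PORT": "27017",  # ECS environment values are strings
    }
    return {
        "name": "notes-api",
        "image": "kodekloud/ecs-project2",
        "essential": True,
        "portMappings": [{"containerPort": 3000, "protocol": "tcp"}],
        "environment": [{"name": k, "value": v} for k, v in env.items()],
    }

if __name__ == "__main__":
    print(json.dumps(api_container("mongo", "password"), indent=2))
```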

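A health check for the web API might look like this. Unlike the console's placeholder `curl -f http://localhost/`, it targets port 3000, where the API listens, and it assumes the image includes `curl`:

HEALTHCHECK

Command: CMD-SHELL, curl -f http://localhost:3000/notes || exit 1

Interval: the number of seconds between checks (30 by default)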
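ECS accepts health check settings only within fixed ranges (interval 5 to 300 seconds, timeout 2 to 120 seconds, retries 1 to 10, start period 0 to 300 seconds). The following sketch, with a hypothetical helper name, builds the `healthCheck` block for a container definition and rejects out-of-range values before you try to register the task definition:

```python
def make_health_check(command: str, interval: int = 30, timeout: int = 5,
                      retries: int = 3, start_period: int = 0) -> dict:
    """Build an ECS container healthCheck, checking the documented value ranges."""
    limits = {
        "interval": (interval, 5, 300),
        "timeout": (timeout, 2, 120),
        "retries": (retries, 1, 10),
        "startPeriod": (start_period, 0, 300),
    }
    for key, (value, low, high) in limits.items():
        if not low <= value <= high:
            raise ValueError(f"{key}={value} is outside {low}-{high}")
    return {
        # CMD-SHELL runs the command through the container's default shell.
        "command": ["CMD-SHELL", command],
        "interval": interval,
        "timeout": timeout,
        "retries": retries,
        "startPeriod": start_period,
    }

if __name__ == "__main__":
    # Assumes the image ships curl and the API serves /notes on port 3000.
    print(make_health_check("curl -f http://localhost:3000/notes || exit 1"))
```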

ECS lets you declare container dependencies in the task definition (the `dependsOn` setting), for example starting the web API only after MongoDB reports `HEALTHY`. This example does not configure that dependency.
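If you do want that ordering, it lives in the web API's `dependsOn` list in the task definition JSON. The sketch below (helper name hypothetical) adds one entry; note that the `HEALTHY` condition only works if the MongoDB container defines a health check.

```python
def add_dependency(container: dict, on: str, condition: str = "HEALTHY") -> dict:
    """Return a copy of `container` that waits for container `on` to reach `condition`."""
    allowed = {"START", "COMPLETE", "SUCCESS", "HEALTHY"}  # ECS dependency conditions
    if condition not in allowed:
        raise ValueError(f"condition must be one of {sorted(allowed)}")
    updated = dict(container)
    updated["dependsOn"] = list(container.get("dependsOn", [])) + [
        {"containerName": on, "condition": condition}
    ]
    return updated

api = {"name": "notes-api", "image": "kodekloud/ecs-project2"}
api = add_dependency(api, "mongo")
print(api["dependsOn"])  # [{'containerName': 'mongo', 'condition': 'HEALTHY'}]
```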

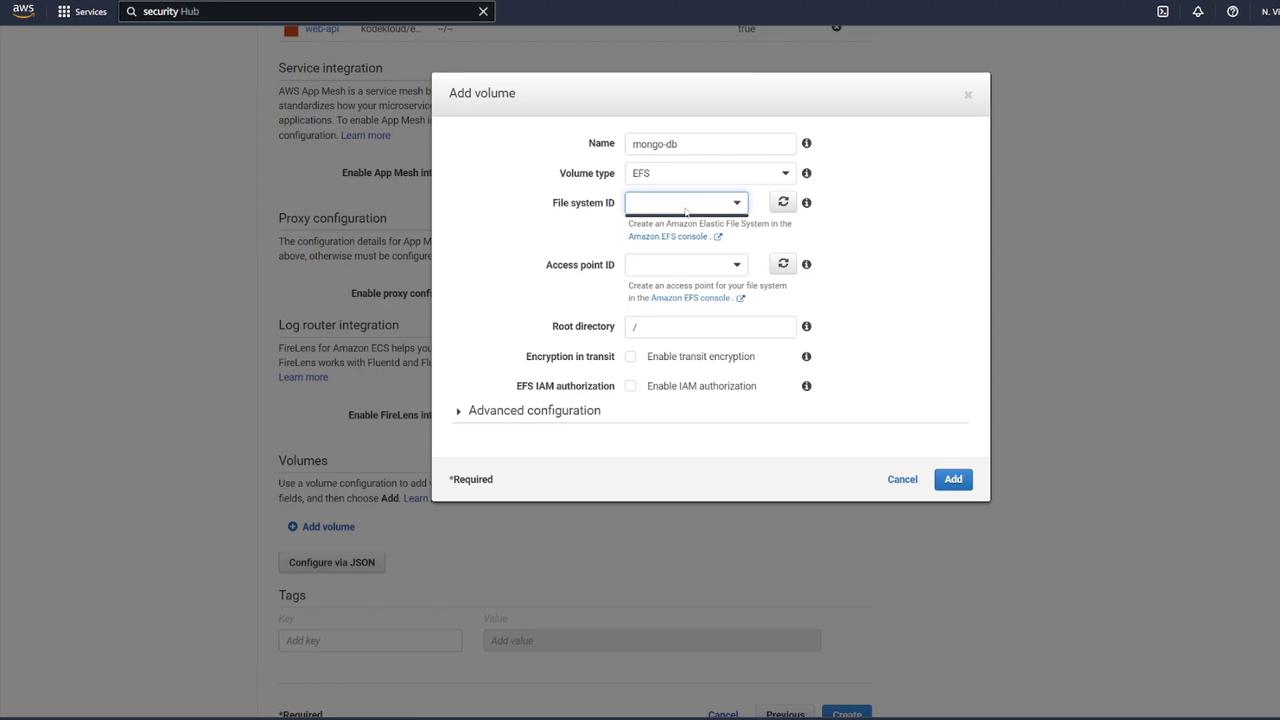

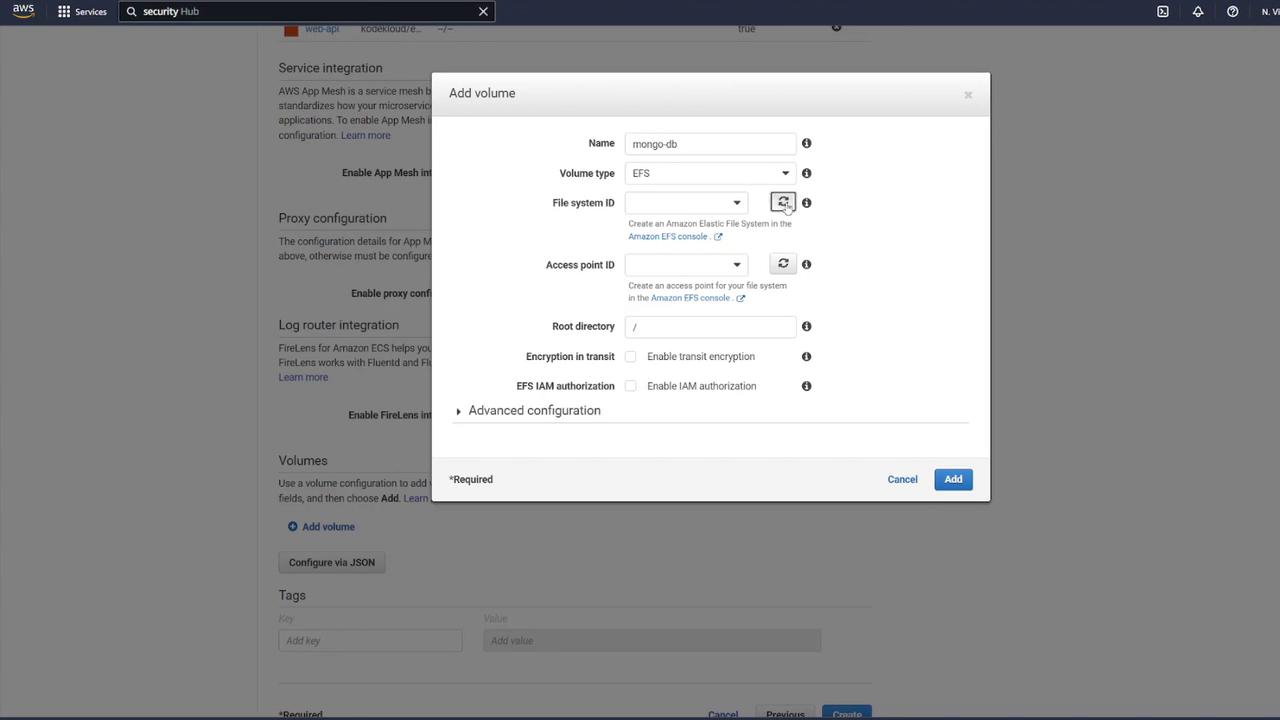

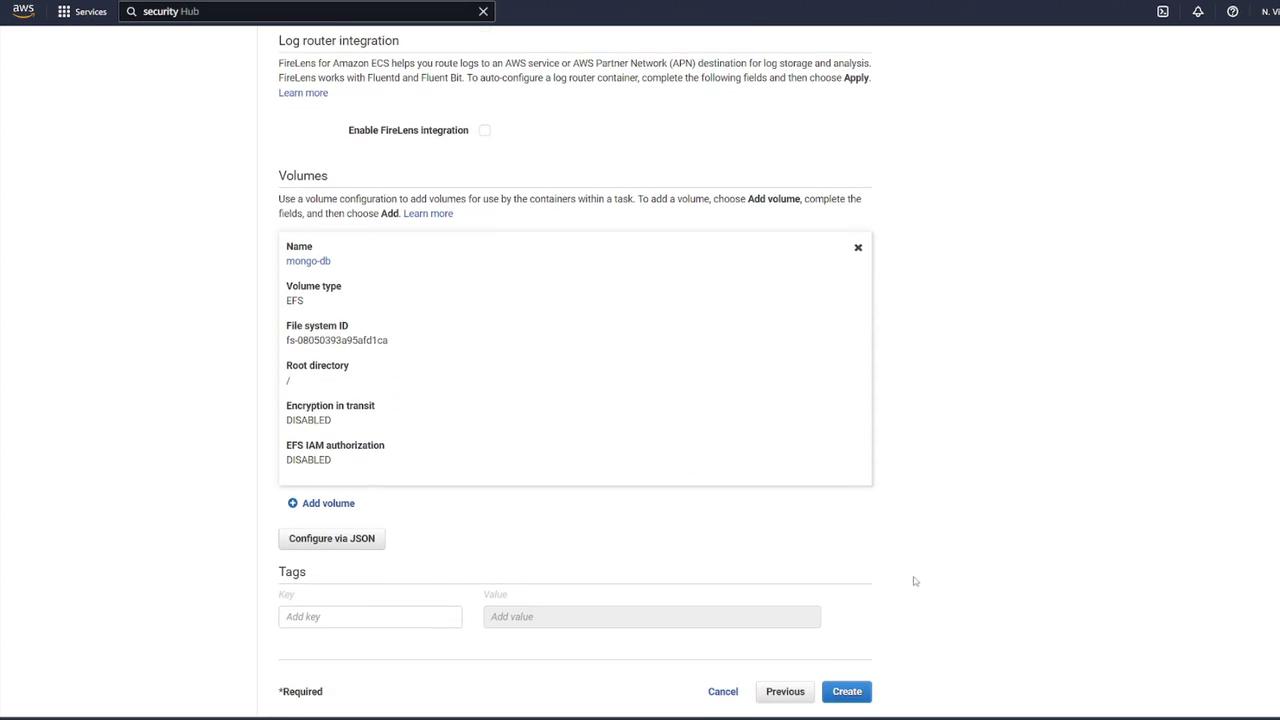

4. Defining a Volume for MongoDB

To ensure data persistence for MongoDB, attach an EFS volume to the MongoDB container:

- In the ECS task definition, locate the volume section and add a new volume.

- Name the volume "mongo-db" and choose Amazon Elastic File System (EFS) as the volume type.

- If no EFS is available, click the link to create a new one.
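In the task definition JSON, the EFS-backed volume is declared at the task level, separately from any container. A minimal sketch, where the file system ID is a hypothetical placeholder for the one EFS assigns:

```python
import json

def efs_volume(name: str, file_system_id: str, root_directory: str = "/") -> dict:
    """Task-level volume backed by an Amazon EFS file system."""
    return {
        "name": name,  # containers refer to this name in their mountPoints
        "efsVolumeConfiguration": {
            "fileSystemId": file_system_id,
            # Directory inside the file system exposed to the task ("/" = root).
            "rootDirectory": root_directory,
        },
    }

if __name__ == "__main__":
    # "fs-0123456789abcdef0" is a made-up ID; use the one shown in the EFS console.
    volumes = [efs_volume("mongo-db", "fs-0123456789abcdef0")]
    print(json.dumps({"volumes": volumes}, indent=2))
```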

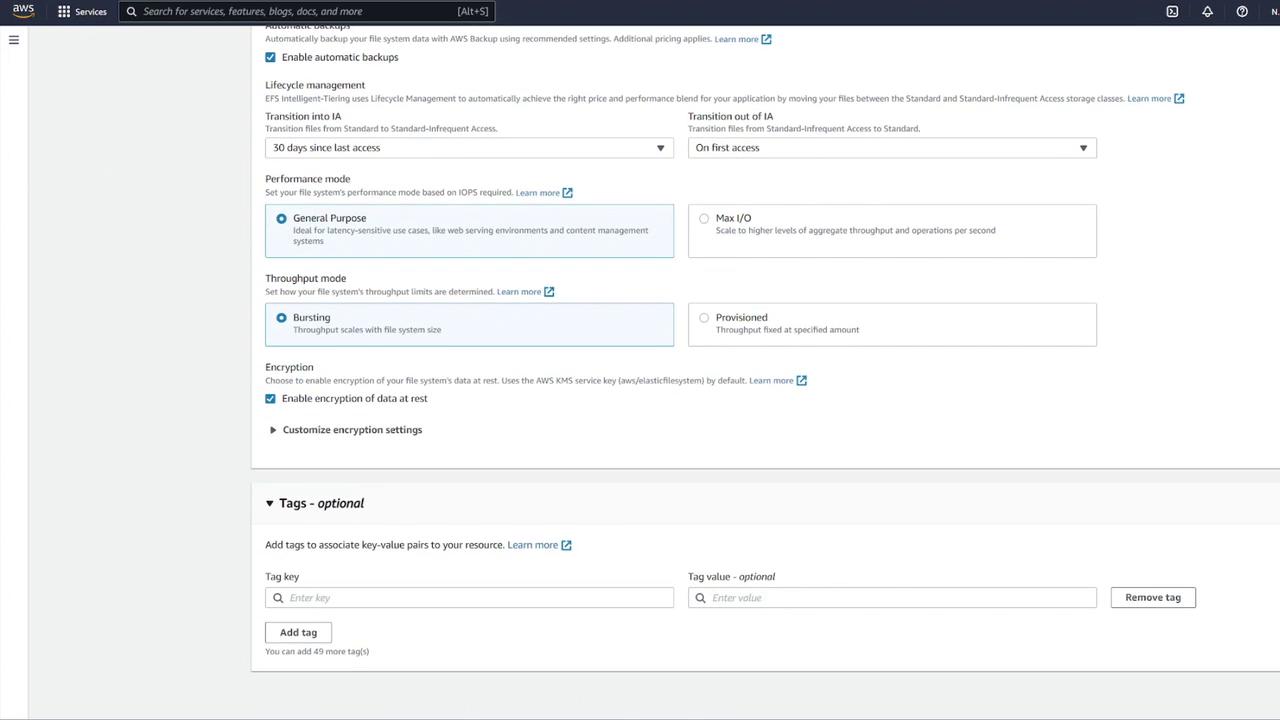

When creating the EFS:

- Name it "mongo-db".

- Use the same VPC as your ECS cluster.

- Select the proper subnets and update the security group to permit ECS container communication.

Create a dedicated security group for EFS (e.g., "EFS security group") and modify its inbound rules to allow NFS traffic (TCP port 2049) only from your ECS security group.
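The EFS inbound rule references the ECS security group rather than an IP range. Expressed as an `IpPermissions` entry (the structure accepted by EC2's `AuthorizeSecurityGroupIngress` API and boto3's `authorize_security_group_ingress`), it might look like the sketch below; the security group IDs are hypothetical.

```python
def nfs_ingress_from(source_sg_id: str) -> dict:
    """IpPermissions entry allowing NFS (TCP 2049) only from another security group."""
    return {
        "IpProtocol": "tcp",
        "FromPort": 2049,
        "ToPort": 2049,
        # Source is a security group, not a CIDR range, so only ECS tasks match.
        "UserIdGroupPairs": [
            {"GroupId": source_sg_id, "Description": "NFS from ECS tasks"}
        ],
    }

if __name__ == "__main__":
    rule = nfs_ingress_from("sg-0aaaaaaaaaaaaaaaa")  # hypothetical ECS SG ID
    # With boto3 this could be applied as:
    # ec2.authorize_security_group_ingress(GroupId=efs_sg_id, IpPermissions=[rule])
    print(rule)
```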

After creating the security group, refresh the EFS dialog, remove the default security group from each mount target, attach the EFS security group instead, and proceed with creating the file system.

5. Mounting the EFS Volume in the MongoDB Container

Associate the EFS volume with your MongoDB container by performing the following:

- Edit the MongoDB container’s settings.

- In the "Storage and Logging" section, select Mount Points.

- Choose the "mongo-db" volume and set the container path to `/data/db`, as recommended by MongoDB.

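Example container settings snippet:

Command: leave this field empty. The `echo,hello world` value shown in the console appears to be placeholder text; entering it would replace the image's default `mongod` command, so the container would print a line and exit instead of running MongoDB.

Environment variables:

MONGO_INITDB_ROOT_PASSWORD: password

MONGO_INITDB_ROOT_USERNAME: mongo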
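In JSON form, a mount point links a task-level volume to a path inside one container, and its `sourceVolume` must match a volume name declared in the task definition. The helper below is a hypothetical sketch that enforces that link while adding the `/data/db` mount:

```python
def add_mount(task_def: dict, container_name: str, volume: str, path: str) -> None:
    """Mount task-level volume `volume` at `path` inside container `container_name`."""
    volume_names = {v["name"] for v in task_def.get("volumes", [])}
    # sourceVolume must refer to a volume declared at the task level.
    if volume not in volume_names:
        raise ValueError(f"volume {volume!r} is not defined in the task definition")
    for container in task_def["containerDefinitions"]:
        if container["name"] == container_name:
            container.setdefault("mountPoints", []).append(
                {"sourceVolume": volume, "containerPath": path, "readOnly": False}
            )
            return
    raise ValueError(f"no container named {container_name!r}")

task_def = {
    "volumes": [{"name": "mongo-db"}],  # EFS settings omitted for brevity
    "containerDefinitions": [{"name": "mongo", "image": "mongo"}],
}
add_mount(task_def, "mongo", "mongo-db", "/data/db")
print(task_def["containerDefinitions"][0]["mountPoints"])
```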

Click Update and then create your task definition. You may notice the task definition revision incrementing (e.g., revision 4).

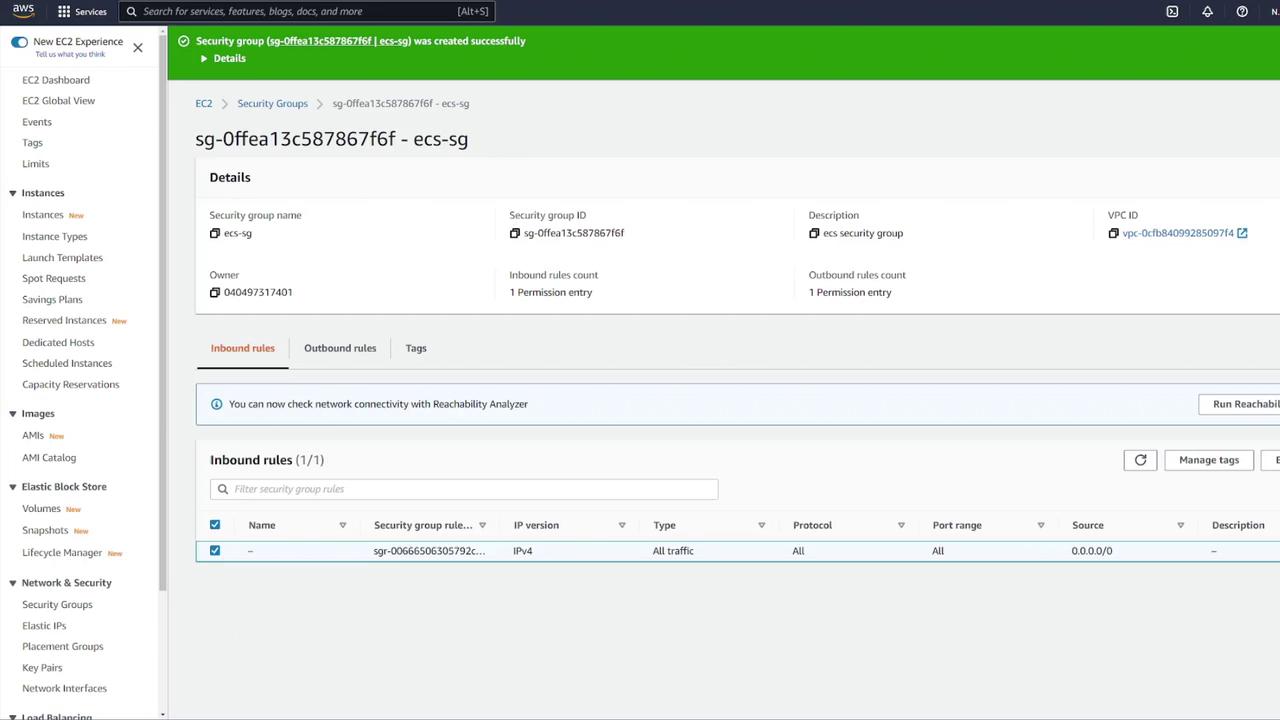

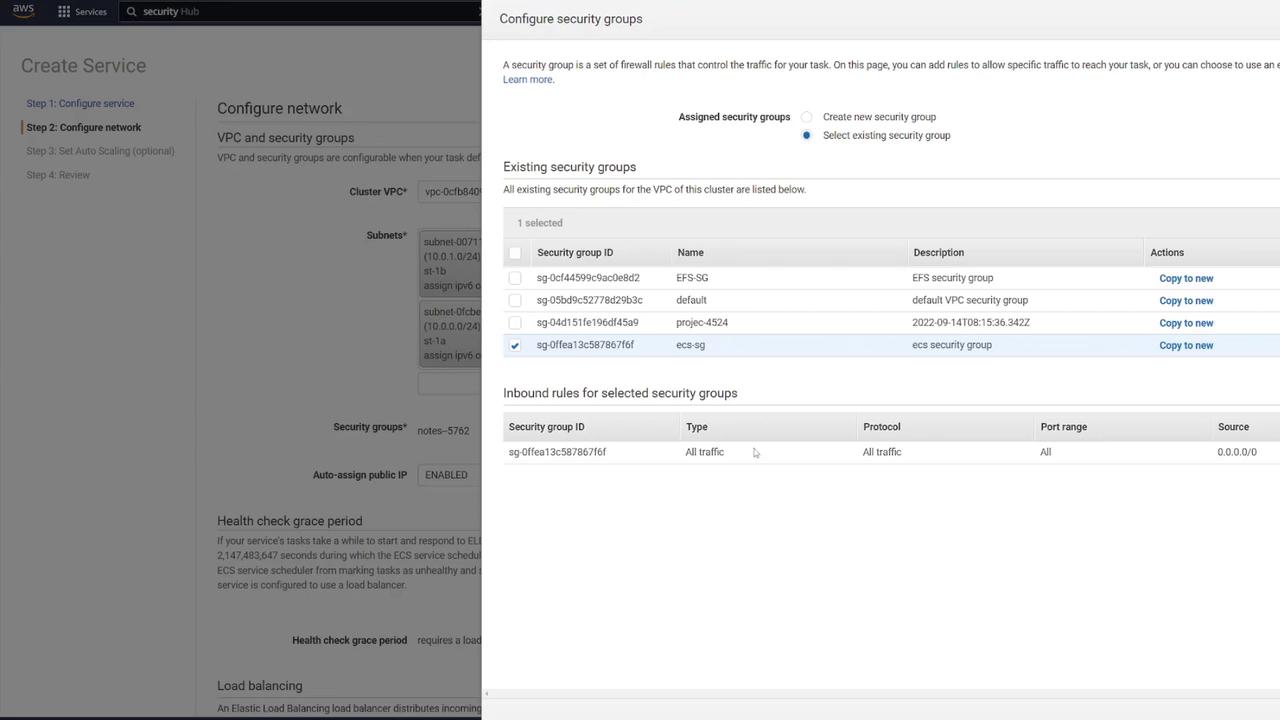

6. Creating the ECS Service and Configuring the Load Balancer

Now that the task definition is complete, create a new ECS service:

- Navigate to your ECS cluster (e.g., "cluster1") and create a service.

- Select Fargate as the launch type and Linux as the operating system.

- Choose the task definition you just created and assign a service name (for instance, "notes-app-service"; service names may contain only letters, numbers, hyphens, and underscores).

- Set the number of tasks to 1.

- Select the appropriate VPC, subnets, and ECS security group (e.g., "ECS-SG").
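The service settings above map onto the parameters of ECS's `CreateService` API (for example, boto3's `ecs.create_service`). This sketch only assembles the request; the cluster, subnet, security group, and target group values are hypothetical, and `assignPublicIp` is set to `ENABLED` on the assumption that the task runs in a public subnet without a NAT gateway and therefore needs a public IP to pull images from Docker Hub. The load balancer block is filled in once the target group created later in this step exists.

```python
from typing import Optional

def service_request(cluster: str, name: str, task_definition: str,
                    subnets: list, security_groups: list,
                    target_group_arn: Optional[str] = None) -> dict:
    """Keyword arguments for ECS CreateService (e.g. boto3 ecs.create_service)."""
    request = {
        "cluster": cluster,
        "serviceName": name,
        "taskDefinition": task_definition,  # "family" or "family:revision"
        "desiredCount": 1,
        "launchType": "FARGATE",
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnets,
                "securityGroups": security_groups,
                # Assumed public subnet without NAT: a public IP is needed for image pulls.
                "assignPublicIp": "ENABLED",
            }
        },
    }
    if target_group_arn:
        # Register the web API container's port 3000 with the ALB target group.
        request["loadBalancers"] = [{
            "targetGroupArn": target_group_arn,
            "containerName": "notes-api",
            "containerPort": 3000,
        }]
    return request
```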

Configuring the Application Load Balancer

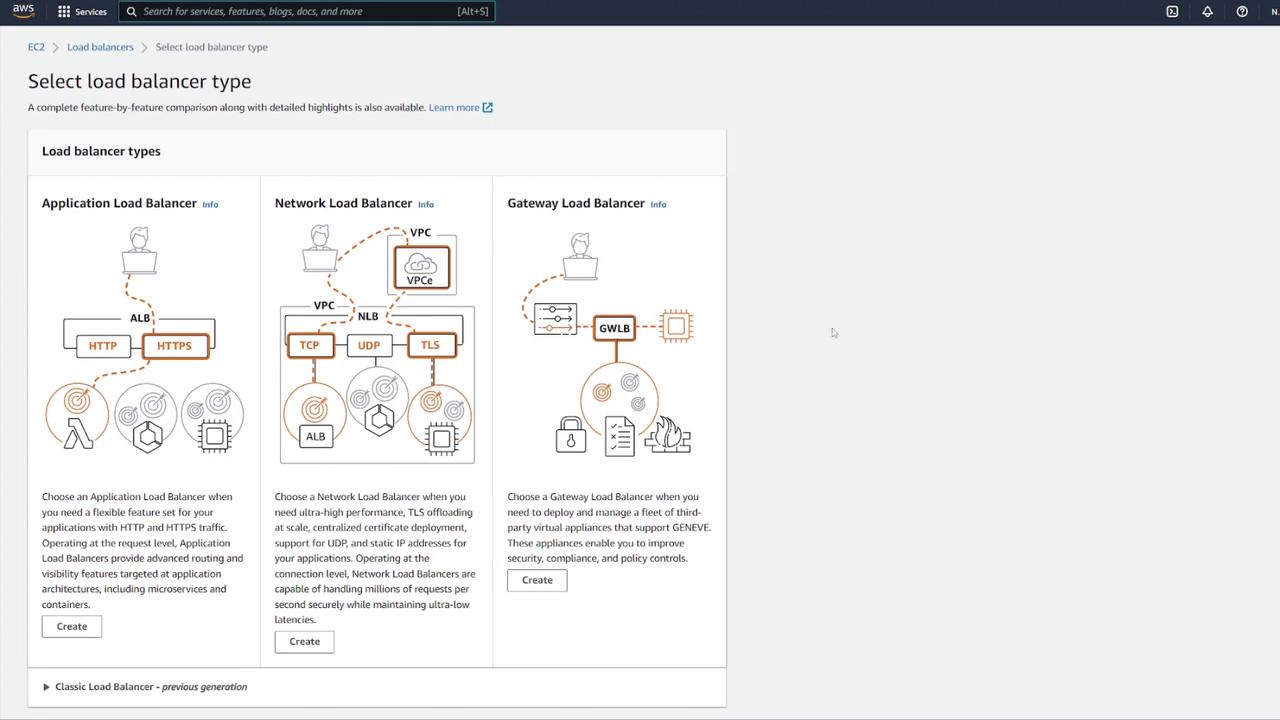

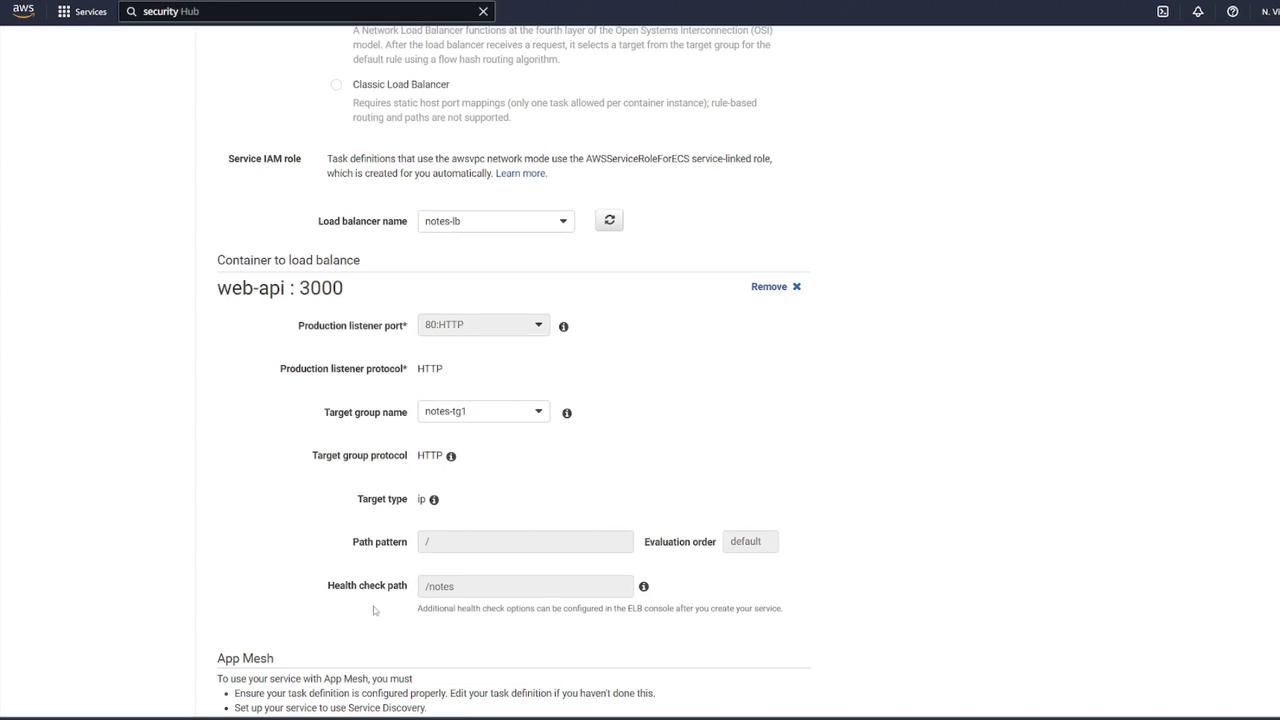

To distribute traffic and enhance scalability, set up an ALB:

- Click the link to open the ALB configuration in a new tab.

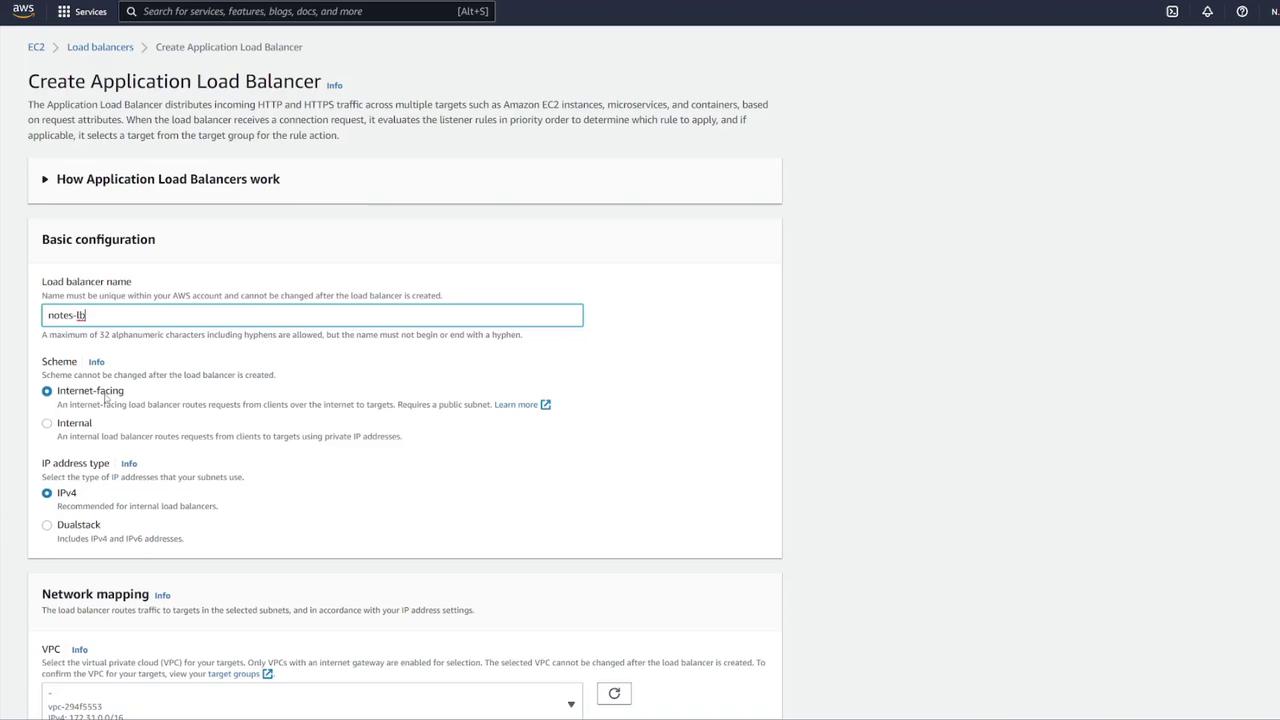

- Choose the Application Load Balancer type, set it as internet-facing, and select IPv4.

- Map the ALB to the appropriate VPC.

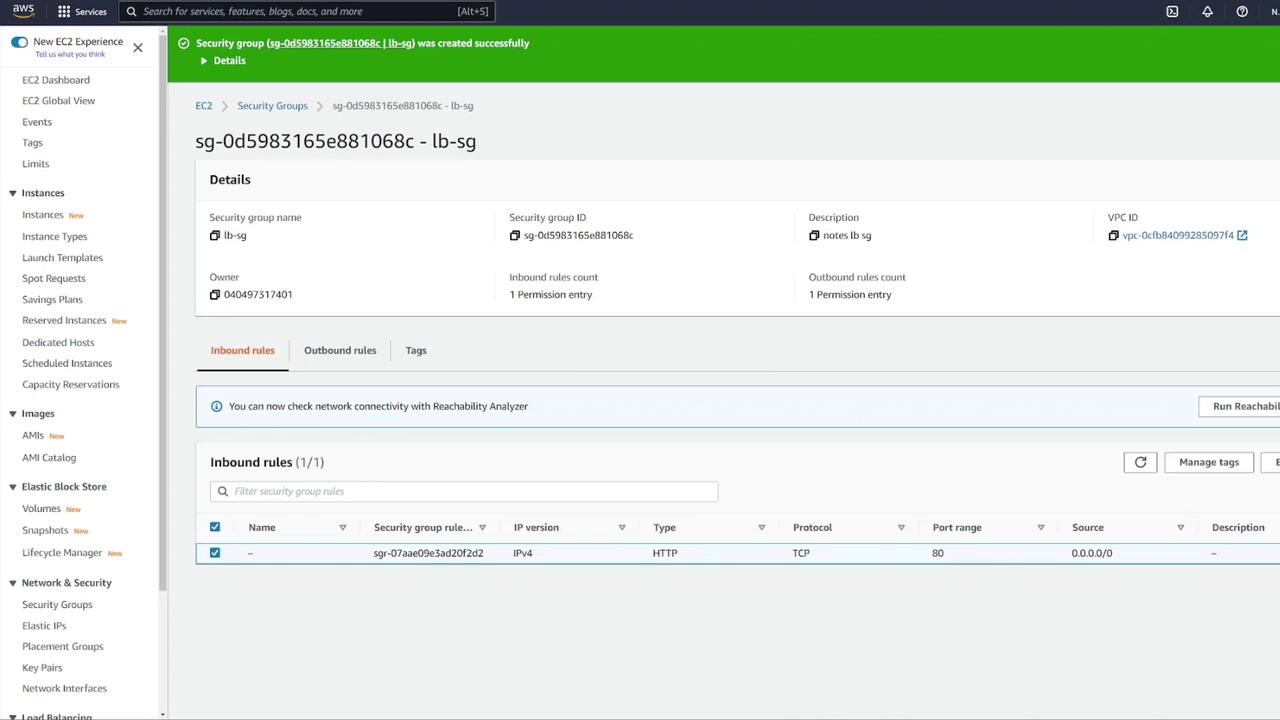

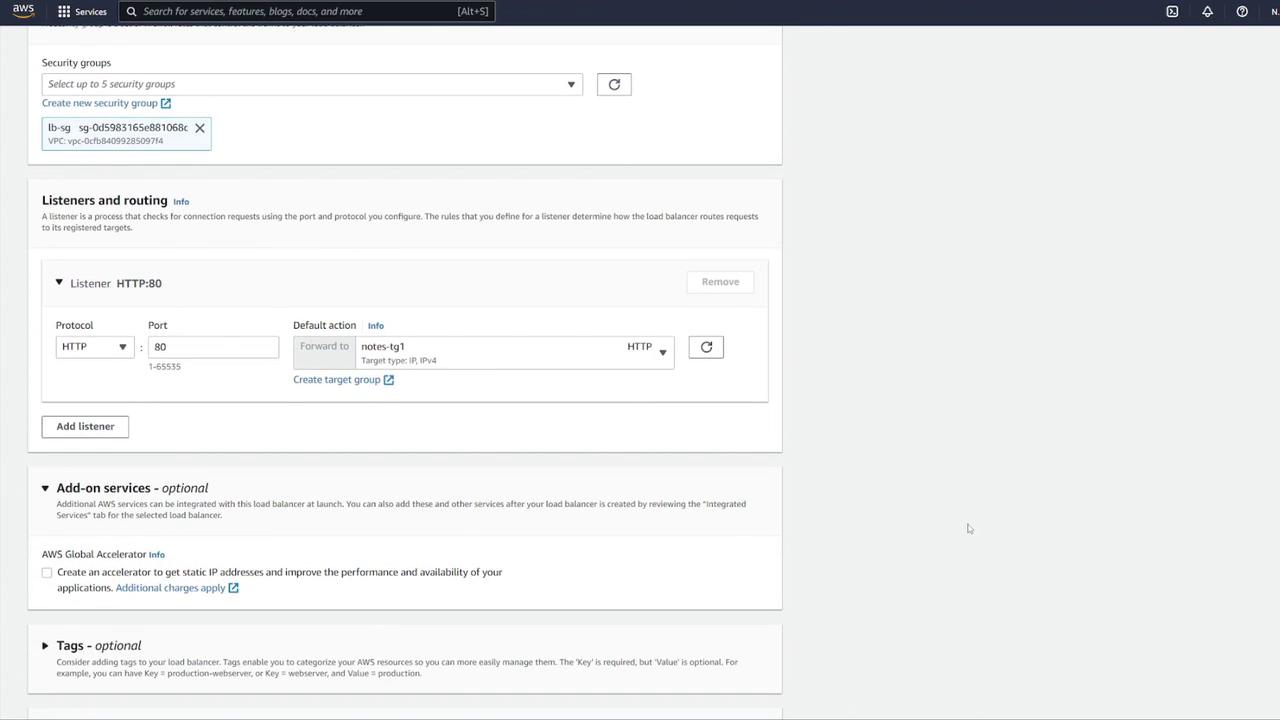

- Create a new security group for the ALB (e.g., "LB-SG").

Configure the ALB with the following details:

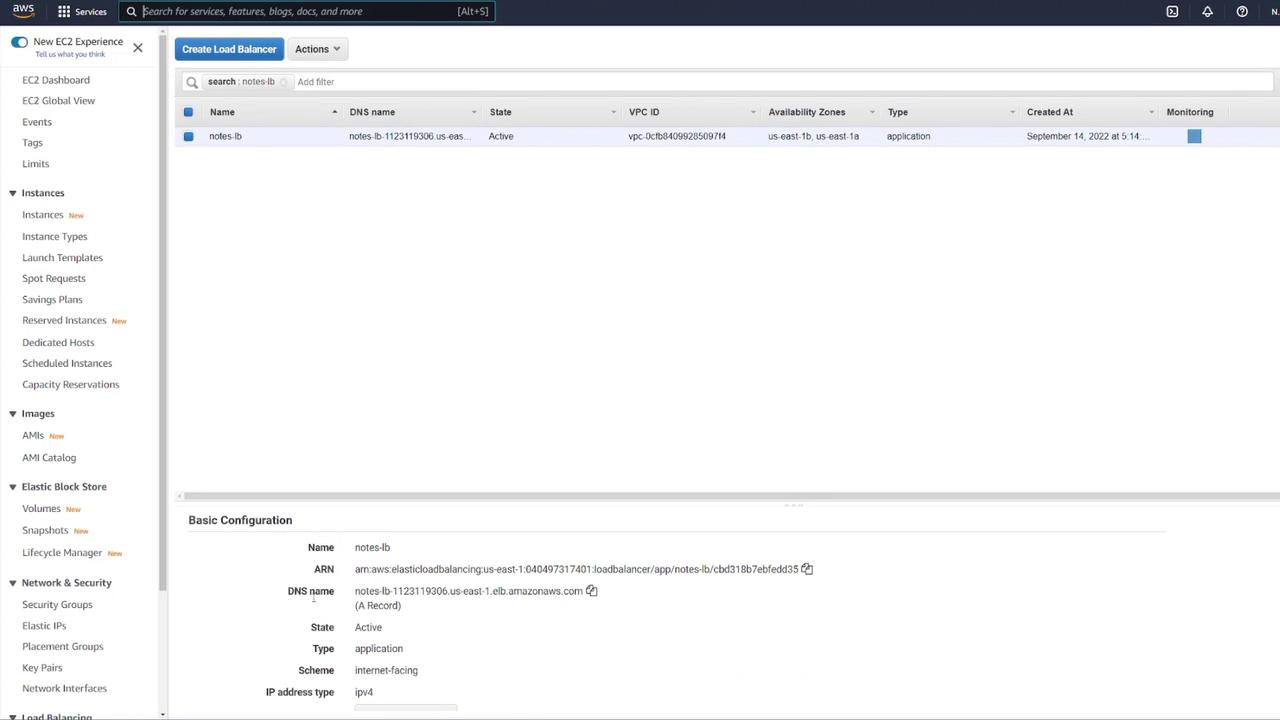

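- Name it appropriately (e.g., "notes-lb"; load balancer names may contain only letters, numbers, and hyphens).

- Set it as internet-facing with IPv4.

- Configure the ALB security group's inbound rules to allow HTTP traffic on port 80. Although the container listens on port 3000, clients only connect to the ALB on port 80; the listener then forwards requests to a target group that sends them to port 3000.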

If any default security groups cause issues, create a custom ALB security group with the proper rules.

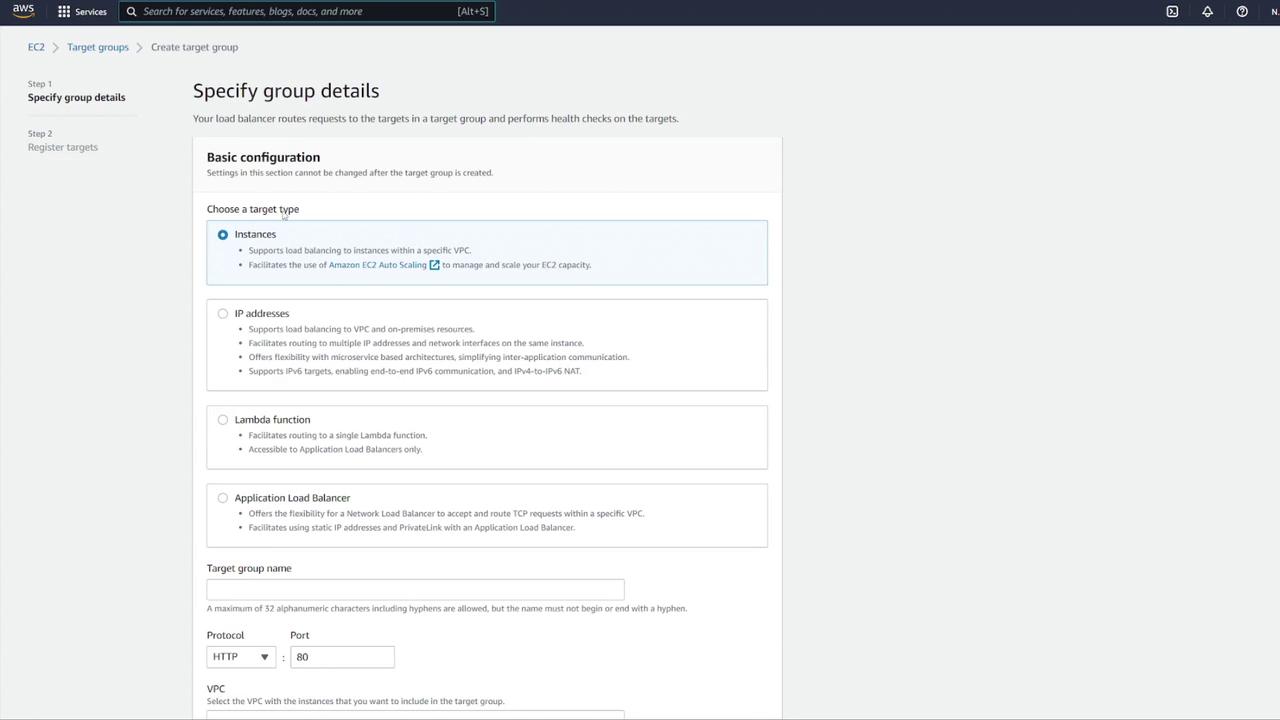

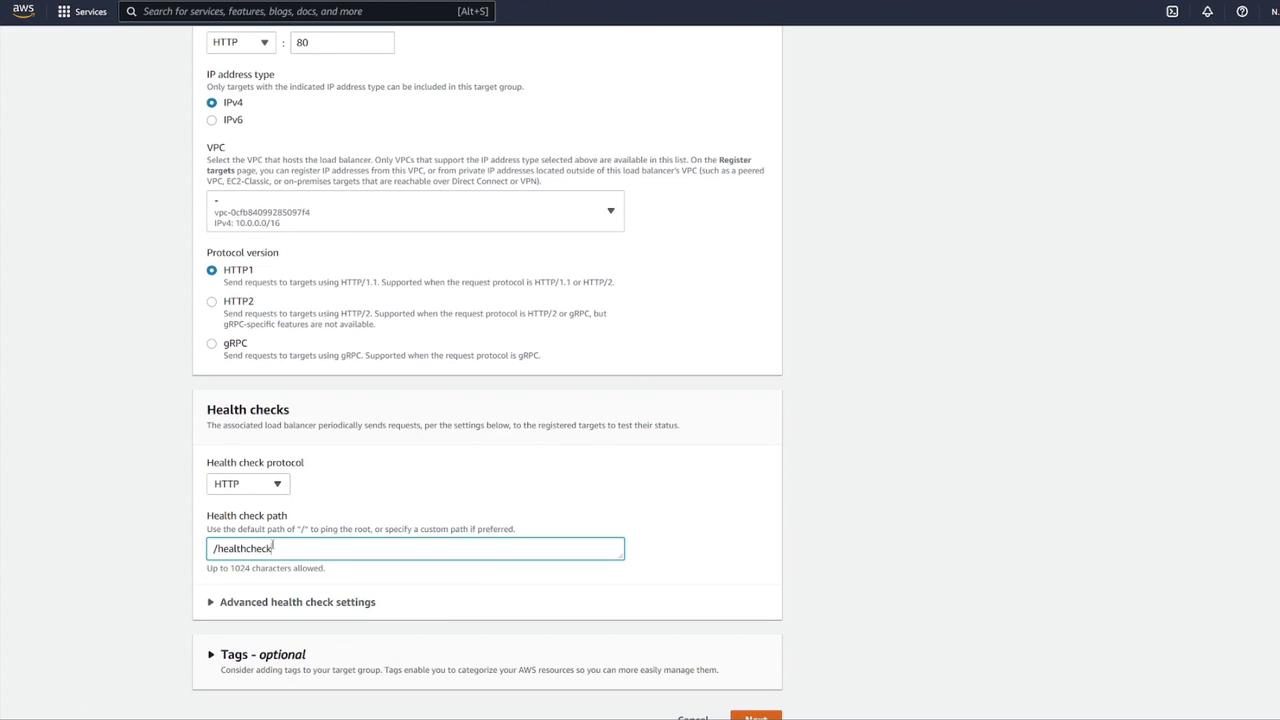

After confirming your ALB settings, create a target group by following these steps:

- Select IP addresses as the target type.

- Name the target group (e.g., "notes-tg1") and choose the correct VPC.

- Modify the health check configuration: set the health check path to `/notes` and, if required, override the health check port to 3000.

Return to the load balancer configuration and associate the new target group (notes-tg1) with the listener on port 80, so that traffic is forwarded to the tasks on port 3000.
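The target group and listener from this step correspond to ELBv2's `CreateTargetGroup` and `CreateListener` calls (boto3's `elbv2.create_target_group` and `elbv2.create_listener`). A sketch that only builds the parameters, with hypothetical ARNs and VPC ID:

```python
def target_group_request(vpc_id: str) -> dict:
    """Keyword arguments for ELBv2 CreateTargetGroup."""
    return {
        "Name": "notes-tg1",
        "Protocol": "HTTP",
        "Port": 3000,               # requests are forwarded to the container port
        "VpcId": vpc_id,
        "TargetType": "ip",         # Fargate tasks register by IP address
        "HealthCheckProtocol": "HTTP",
        "HealthCheckPath": "/notes",
        "HealthCheckPort": "3000",  # this parameter is a string in the API
    }

def listener_request(load_balancer_arn: str, target_group_arn: str) -> dict:
    """Keyword arguments for ELBv2 CreateListener: accept port 80, forward to the group."""
    return {
        "LoadBalancerArn": load_balancer_arn,
        "Protocol": "HTTP",
        "Port": 80,
        "DefaultActions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }

if __name__ == "__main__":
    print(target_group_request("vpc-0123456789abcdef0"))  # hypothetical VPC ID
    print(listener_request("arn:example:lb", "arn:example:tg"))
```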

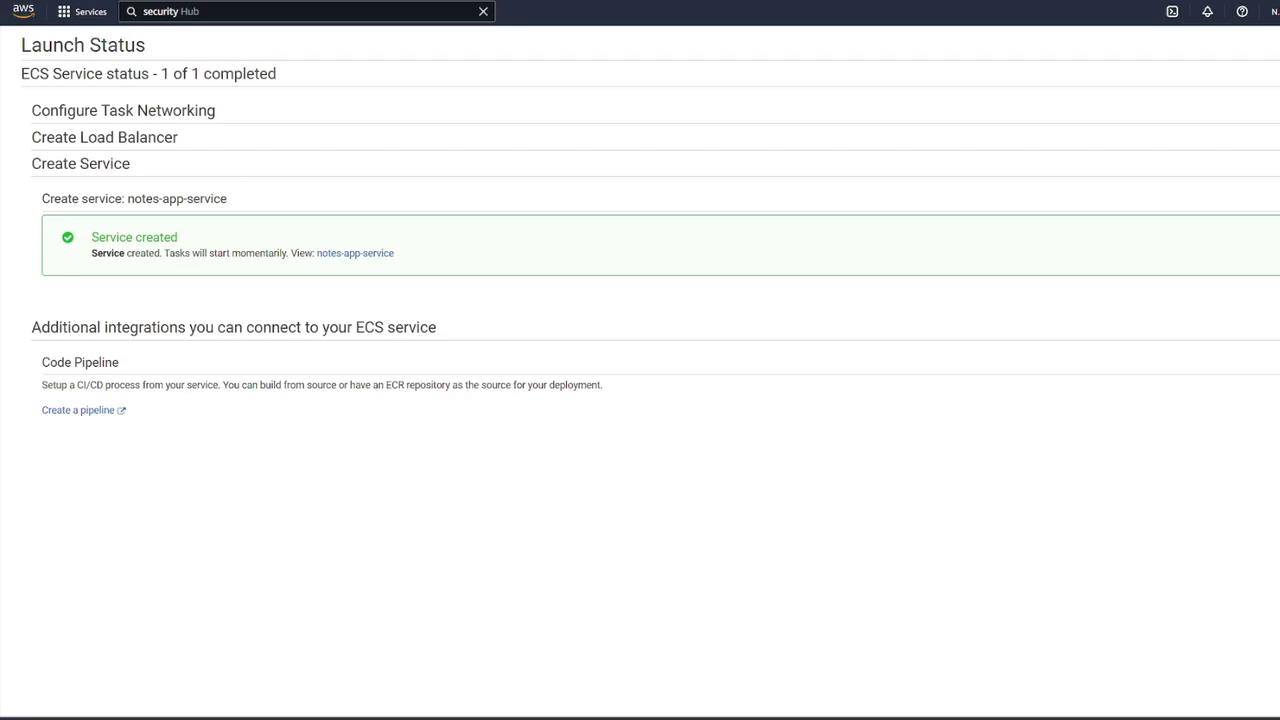

Review all final settings, disable auto scaling if not necessary, and create the ECS service.

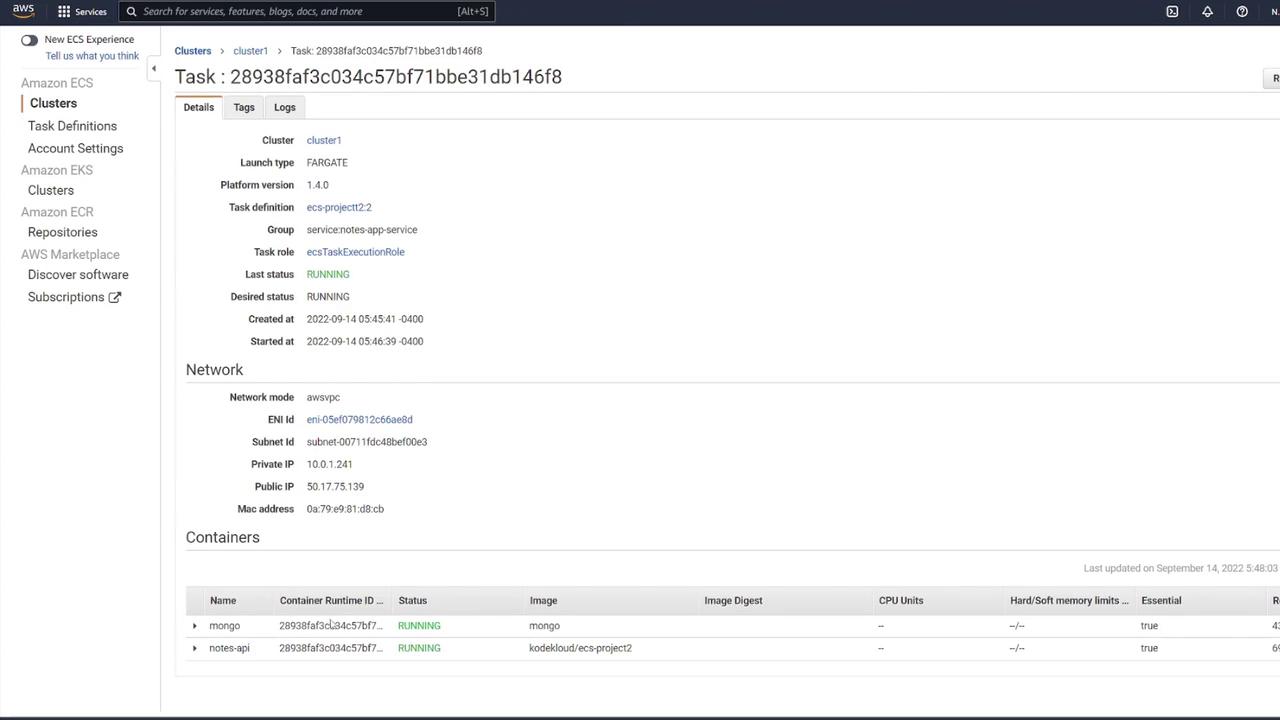

After the service is created, verify that at least one task transitions from provisioning to running. You can check the status by clicking the running task and confirming that both the "mongo" and "notes-api" containers operate as expected.

7. Testing the Application

Once your service is running, test your application using tools like Postman:

Send a GET request to the container's IP on port 3000 at the `/notes` endpoint. The initial response should be:

{ "notes": [] }

Send a POST request with JSON data, such as:

{ "title": "second_note", "body": "remember to do dishes!!!!" }

A subsequent GET request to `/notes` should display the newly created note:

{ "notes": [ { "_id": "63211a3c034fdd55dec212834", "title": "second note", "body": "remember to do dishes!!!!", "__v": 0 } ] }

Since you configured a load balancer, also test the application using the ALB's DNS name. Do not include port 3000 in the URL: the ALB listens on port 80 and forwards requests to port 3000 through the target group. For example:

GET http://notes-lb-1123119306.us-east-1.elb.amazonaws.com/

{ "notes": [] }

After a POST request adds a note, a subsequent GET should display the updated results.
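To script these checks instead of using Postman, a small client built on Python's standard library could look like the following. It is a sketch with hypothetical helper names; the same functions cover both targets, `http://<task-public-ip>:3000` when calling the task directly and the ALB DNS name with no port when going through the load balancer.

```python
import json
import urllib.request

def get_notes_request(base_url: str) -> urllib.request.Request:
    """Build GET <base_url>/notes."""
    return urllib.request.Request(base_url.rstrip("/") + "/notes", method="GET")

def post_note_request(base_url: str, title: str, body: str) -> urllib.request.Request:
    """Build a POST of a JSON note to <base_url>/notes."""
    payload = json.dumps({"title": title, "body": body}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/notes",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))

# Example (requires the deployed service to be reachable):
# base = "http://notes-lb-1123119306.us-east-1.elb.amazonaws.com"
# send(post_note_request(base, "second note", "remember to do dishes!!!!"))
# print(send(get_notes_request(base)))
```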

8. Enhancing Security Group Rules

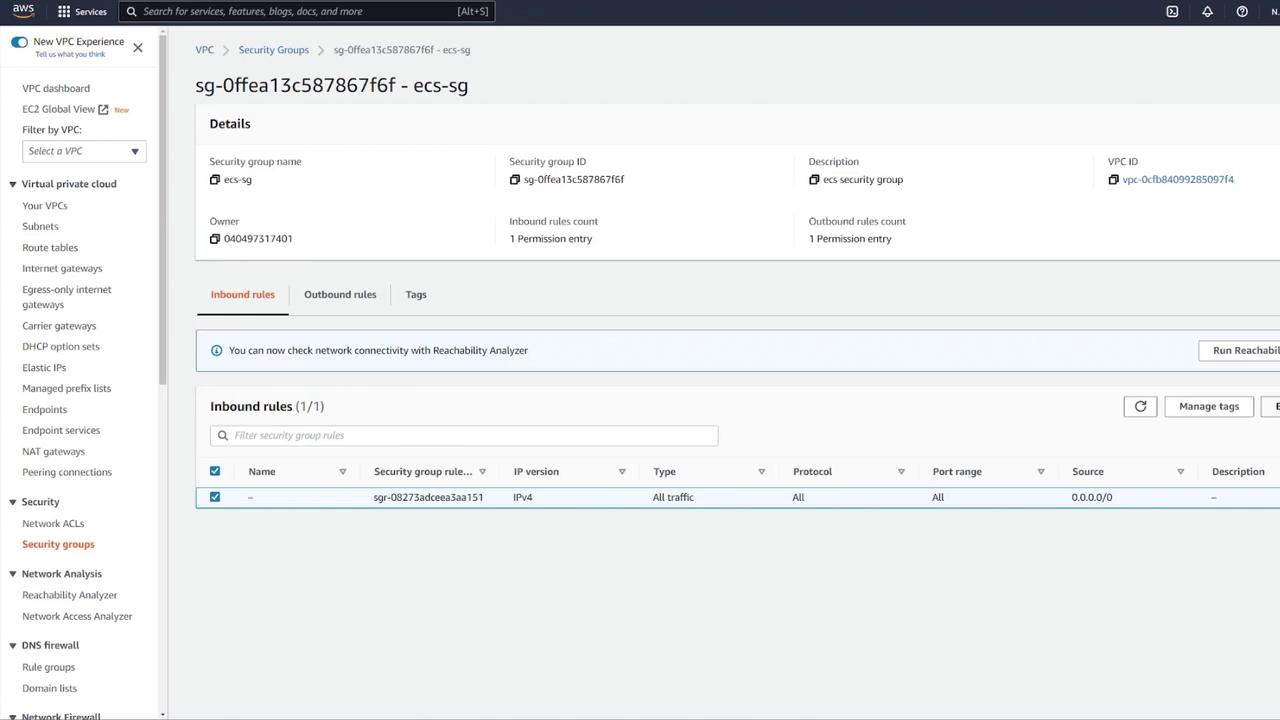

For improved security, update your ECS security group rules:

- Remove the overly permissive inbound rule that allows traffic from any IP address on any port.

- Add a custom TCP rule on port 3000 with the source set to your load balancer's security group. This ensures that only traffic routed through the ALB reaches your ECS containers.
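Both changes can be expressed as `IpPermissions` entries for EC2's `RevokeSecurityGroupIngress` and `AuthorizeSecurityGroupIngress` calls. In this sketch the group IDs are hypothetical, and the revoked entry assumes the permissive rule was "all traffic from 0.0.0.0/0". Once applied, requests sent directly to a task's IP on port 3000 (as in the earlier Postman test) are no longer accepted; only traffic via the ALB gets through.

```python
def app_ingress_from_alb(alb_sg_id: str, port: int = 3000) -> dict:
    """IpPermissions entry: allow TCP `port` only from the ALB's security group."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "UserIdGroupPairs": [{"GroupId": alb_sg_id, "Description": "from ALB"}],
    }

# The overly permissive rule being removed: all protocols and ports from anywhere.
# A revoke must match the existing rule exactly, so adjust this if yours differs.
ALLOW_ALL_IPV4 = {"IpProtocol": "-1", "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}

if __name__ == "__main__":
    # With boto3 (hypothetical IDs), the two calls could be:
    # ec2.revoke_security_group_ingress(GroupId=ecs_sg_id, IpPermissions=[ALLOW_ALL_IPV4])
    # ec2.authorize_security_group_ingress(
    #     GroupId=ecs_sg_id, IpPermissions=[app_ingress_from_alb(lb_sg_id)])
    print(app_ingress_from_alb("sg-0bbbbbbbbbbbbbbbb"))
```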

In this article, we successfully deployed a multi-container application on Amazon ECS using Fargate. This deployment includes a MongoDB database with persistent EFS storage, an Express-based web API, an Application Load Balancer for efficient traffic distribution, and tightened security through proper security group configurations.


Happy deploying!
