Demo Memory Stress on EKS Part 4

In this fourth installment of our chaos engineering series, we analyze the impact of injecting memory stress into a Kubernetes pod on Amazon EKS using AWS Fault Injection Simulator (FIS). Over a nine-minute interval, we applied a controlled memory fault to the “PetSite” service and collected performance metrics across multiple AWS monitoring tools.
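The experiment template itself is not shown in this lesson. As a rough sketch of what a nine-minute pod memory stress experiment could look like, the helper below builds a dictionary in the general shape of an AWS FIS experiment template. The action ID `aws:eks:pod-memory-stress` and the `aws:eks:pod` target type reflect our understanding of the FIS action reference and should be checked against the current documentation. The cluster name, namespace, label selector, service account, role ARN, and the 80 percent memory target are all hypothetical placeholders, not values taken from the demo.

```python
import json


def build_memory_stress_template(cluster, role_arn, namespace, label_selector,
                                 duration="PT9M", percent=80):
    """Return a dict shaped like an AWS FIS experiment template.

    All argument values are supplied by the caller; nothing here is taken
    from the demo environment. FIS expects action parameters as strings.
    """
    return {
        "description": "Memory stress on PetSite pods (illustrative)",
        "roleArn": role_arn,
        # No stop condition is configured in this sketch.
        "stopConditions": [{"source": "none"}],
        "targets": {
            "petsite-pods": {
                "resourceType": "aws:eks:pod",
                "selectionMode": "ALL",
                "parameters": {
                    "clusterIdentifier": cluster,
                    "namespace": namespace,
                    "selectorType": "labelSelector",
                    "selectorValue": label_selector,
                },
            }
        },
        "actions": {
            "memory-stress": {
                "actionId": "aws:eks:pod-memory-stress",
                "parameters": {
                    "duration": duration,      # ISO 8601 duration, nine minutes
                    "percent": str(percent),   # hypothetical stress level
                    # Hypothetical service account name used by the FIS pod.
                    "kubernetesServiceAccount": "fis-service-account",
                },
                "targets": {"Pods": "petsite-pods"},
            }
        },
    }


if __name__ == "__main__":
    template = build_memory_stress_template(
        cluster="demo-cluster",                                  # hypothetical
        role_arn="arn:aws:iam::123456789012:role/fis-demo-role",  # hypothetical
        namespace="default",                                     # hypothetical
        label_selector="app=petsite",                            # hypothetical
    )
    print(json.dumps(template, indent=2))
```

The printed JSON can be compared with the template shown in the FIS console before creating a real experiment.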

Note

Before running any FIS experiment, ensure your IAM role has the necessary permissions for AWS FIS, CloudWatch, and X-Ray. Review the AWS FIS documentation for setup details.

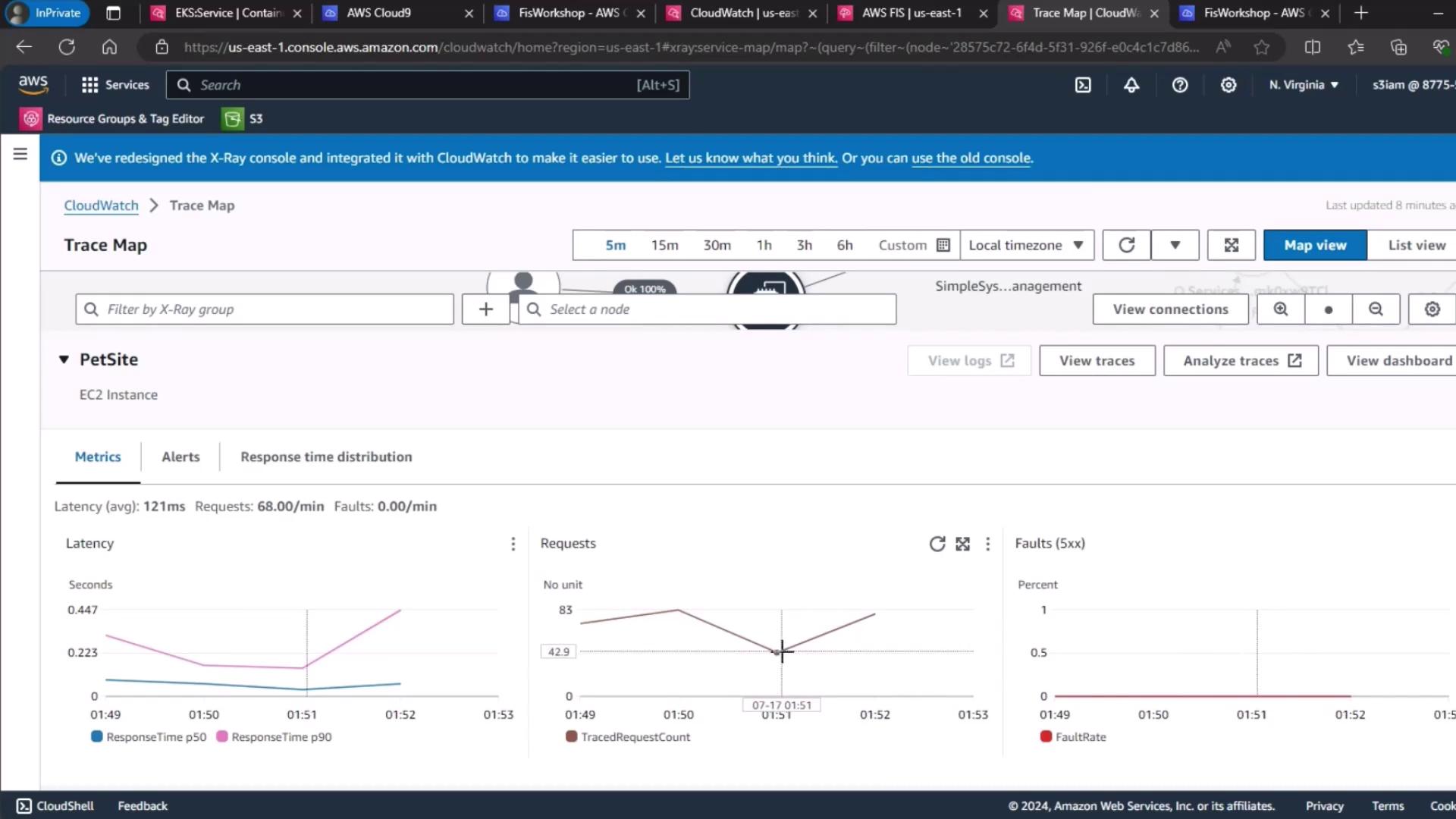

1. PetSite Trace Map

We start by examining the X-Ray service map for the PetSite service. The average latency rose from roughly 90 ms to 120 ms, and the overall request count declined slightly, which indicates that the memory fault affected service responsiveness.

| Metric | Baseline | Under Memory Stress |
|---|---|---|
| Average Latency | ~90 ms | ~120 ms |
| Request Count | Normal | Decreased |

While the increase in latency is measurable, our distributed Kubernetes architecture absorbs much of the fault without cascading failures.
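To put the latency shift in relative terms, a small helper like the one below can turn a baseline and an observed value into a percentage change. The function name is our own; the inputs in the usage example are the approximate latencies from the table above.

```python
def relative_change_pct(baseline, observed):
    """Percentage change of `observed` relative to `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (observed - baseline) / baseline * 100.0


if __name__ == "__main__":
    # Approximate average latencies from the trace map, in milliseconds.
    change = relative_change_pct(90, 120)
    print(f"Average latency changed by {change:.1f}%")
```

With the values from the table, the increase works out to roughly one third over baseline.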

2. Container Insights

Next, we review pod-level resource metrics in CloudWatch Container Insights. The table below summarizes CPU and memory utilization before and during the experiment.

| Resource | Baseline Utilization | Under Memory Stress |
|---|---|---|
| CPU Utilization | 0.29 cores | 1.57 cores |
| Memory Usage | Normal | Noticeable increase |

These results confirm the FIS memory injection was successful. No pod restarts occurred, indicating adequate headroom and resilience in our EKS cluster.
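For readers who prefer to pull these numbers programmatically rather than from the console, the sketch below builds a list of metric queries in the shape accepted by the CloudWatch `GetMetricData` API. The `ContainerInsights` namespace, the metric names, and the dimension set reflect our understanding of what Container Insights publishes for EKS pods and should be verified for your cluster. Note that `pod_cpu_utilization` is a percentage, not the core count shown in the table. The cluster, namespace, and pod names in the usage example are hypothetical.

```python
def build_pod_metric_queries(cluster, namespace, pod, period=60):
    """Build GetMetricData-style queries for one pod's Container Insights metrics."""
    dimensions = [
        {"Name": "ClusterName", "Value": cluster},
        {"Name": "Namespace", "Value": namespace},
        {"Name": "PodName", "Value": pod},
    ]
    # (metric name, statistic): averages for utilization, maximum for restarts.
    metrics = [
        ("pod_cpu_utilization", "Average"),
        ("pod_memory_utilization", "Average"),
        ("pod_number_of_container_restarts", "Maximum"),
    ]
    queries = []
    for index, (name, stat) in enumerate(metrics):
        queries.append({
            "Id": f"m{index}",
            "MetricStat": {
                "Metric": {
                    "Namespace": "ContainerInsights",
                    "MetricName": name,
                    "Dimensions": dimensions,
                },
                "Period": period,  # seconds per data point
                "Stat": stat,
            },
            "ReturnData": True,
        })
    return queries


if __name__ == "__main__":
    # Hypothetical identifiers; substitute the values from your own cluster.
    for query in build_pod_metric_queries("demo-cluster", "default", "petsite"):
        print(query["Id"], query["MetricStat"]["Metric"]["MetricName"])
```

The resulting list could be passed as the `MetricDataQueries` argument of a CloudWatch client call, together with a start and end time that bracket the experiment window.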

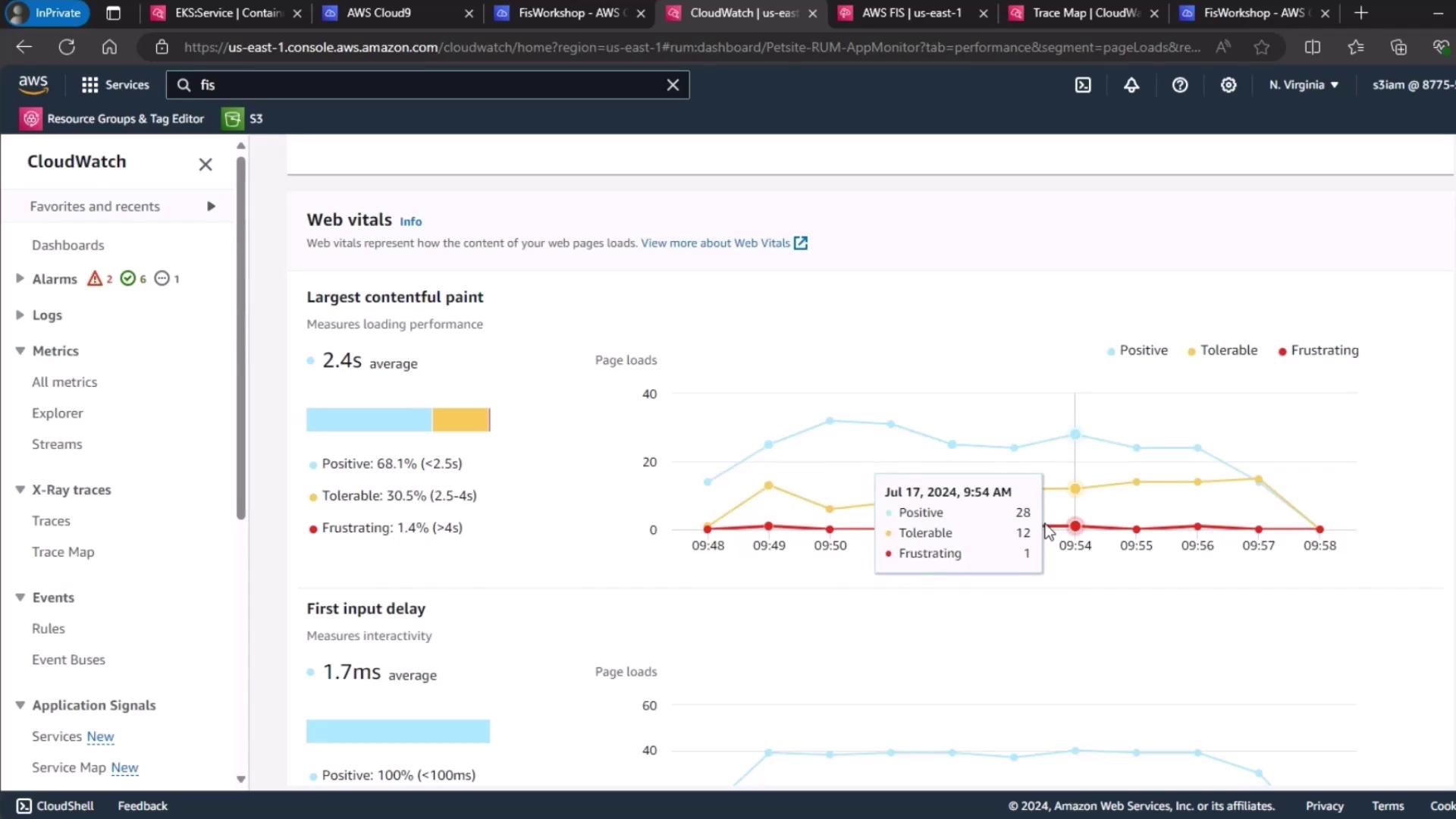

3. Real User Monitoring (RUM)

To assess real-world impact, we analyzed CloudWatch RUM web vitals. The proportion of “frustrating” user experiences inched up from 1.3% to 1.4%, still within our acceptable threshold.

Note

Set your own RUM thresholds based on application SLAs. A small increase in “frustrating” sessions may be acceptable if overall availability remains high.
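One way to make such a threshold explicit is a small pass/fail check that an experiment report can run automatically. The sketch below is only an illustration: the function name and both limits (a 2.0% ceiling and a 0.5 percentage point allowed increase) are hypothetical values, not thresholds from this demo.

```python
def frustrating_share_ok(baseline_pct, observed_pct, max_pct, max_increase_pts):
    """Return True if the share of frustrating sessions stays within limits.

    max_pct:          absolute ceiling for the observed share, in percent.
    max_increase_pts: allowed rise over baseline, in percentage points.
    """
    increase = observed_pct - baseline_pct
    return observed_pct <= max_pct and increase <= max_increase_pts


if __name__ == "__main__":
    # 1.3 and 1.4 are the RUM figures reported above; the limits are made up.
    ok = frustrating_share_ok(1.3, 1.4, max_pct=2.0, max_increase_pts=0.5)
    print("within threshold" if ok else "threshold exceeded")
```

Checking both the absolute level and the change from baseline avoids passing an experiment that starts from an already poor baseline.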

Conclusion

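During the AWS FIS memory stress test, average latency rose from about 90 ms to about 120 ms and CPU usage climbed from 0.29 to 1.57 cores, yet the share of “frustrating” sessions moved only from 1.3% to 1.4% and no pods restarted. For this workload, the result suggests that a well-architected, distributed Kubernetes cluster on Amazon EKS can absorb this level of memory pressure with minimal end-user impact.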

Links and References

- AWS Fault Injection Simulator (FIS) Documentation

- Amazon EKS Overview

- CloudWatch Container Insights

- AWS X-Ray Developer Guide

- CloudWatch Real User Monitoring (RUM)
