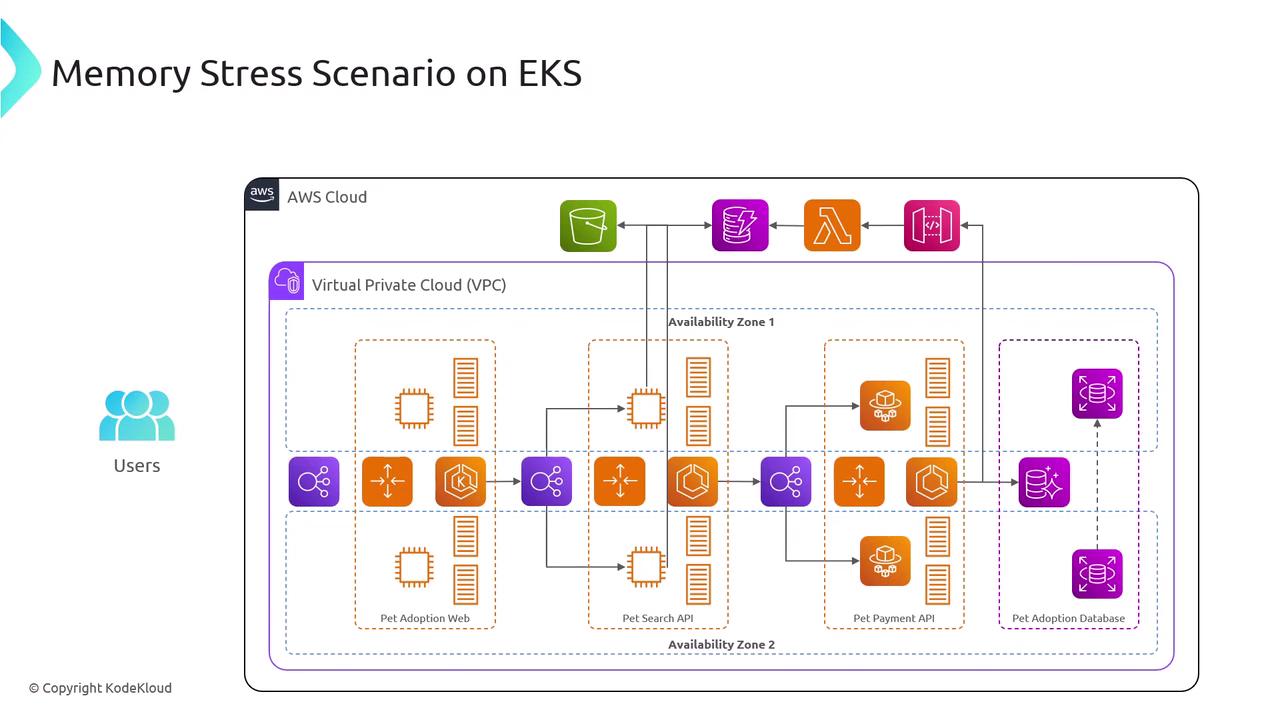

Architecture Overview

| Resource | Purpose | Example Command |
|---|---|---|
| EKS Cluster | Hosts and orchestrates Kubernetes workloads | `eksctl create cluster --name pet-adopt-cluster` |
| VPC & Subnets | Network isolation and multi-AZ deployment | Custom VPC with public/private subnets |
| AWS FIS Experiment | Injects faults to test resilience | `aws fis start-experiment --cli-input-json file://experiment.json` |
| CloudWatch Metrics | Monitors memory, CPU, and application health | Automatically integrated with EKS |
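The `aws fis start-experiment` command in the table reads its request from `experiment.json`. As a minimal sketch, that file could be generated as shown below. It assumes an FIS experiment template already exists. The template ID `EXT-PLACEHOLDER`, the `Project` tag, and both helper function names are hypothetical and only serve as illustration. `experimentTemplateId` and `tags` are fields of the FIS StartExperiment request.

```python
import json
from pathlib import Path
from typing import Dict, Optional


def build_start_experiment_input(
    template_id: str, tags: Optional[Dict[str, str]] = None
) -> dict:
    """Build a request body for `aws fis start-experiment --cli-input-json`."""
    if not template_id:
        raise ValueError("template_id must not be empty")
    request = {"experimentTemplateId": template_id}
    if tags:
        # Copy the tags so later changes by the caller do not alter the request.
        request["tags"] = dict(tags)
    return request


def write_experiment_file(request: dict, path: str = "experiment.json") -> Path:
    """Write the request body as pretty-printed JSON and return the file path."""
    target = Path(path)
    target.write_text(json.dumps(request, indent=2) + "\n", encoding="utf-8")
    return target


if __name__ == "__main__":
    # Hypothetical values: replace with your own template ID and tags.
    body = build_start_experiment_input("EXT-PLACEHOLDER", {"Project": "pet-adopt"})
    print(f"Wrote {write_experiment_file(body)}")
```

Running the script writes `experiment.json` to the current directory, which can then be passed to the CLI command from the table.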

Experiment Design

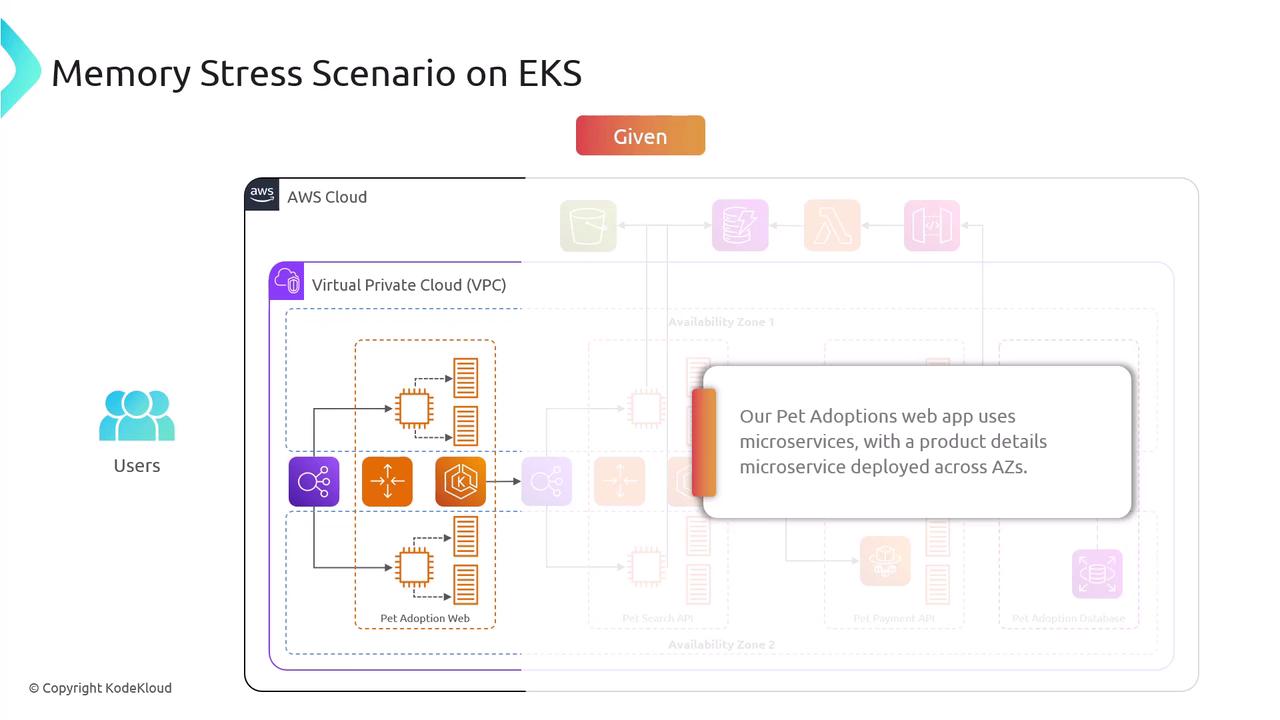

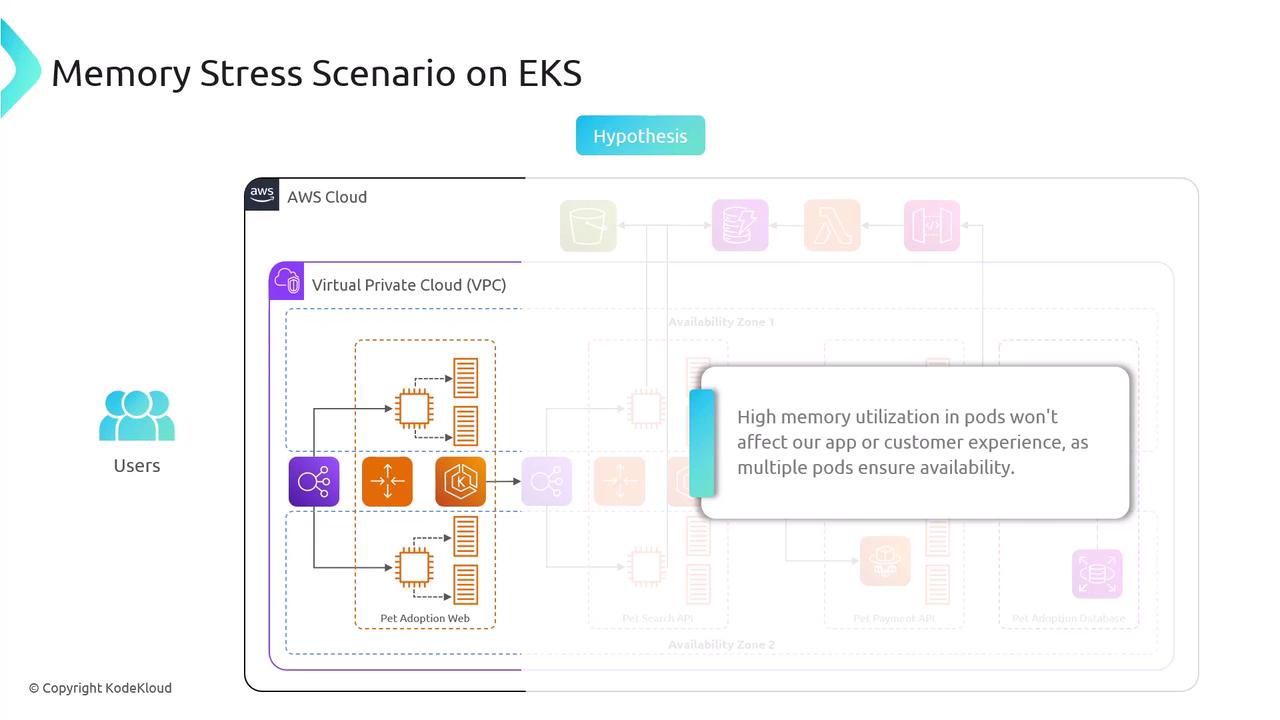

To ensure a structured approach, we define the Given (current state) and the Hypothesis (expected outcome under failure conditions).

Given

Hypothesis

- An existing EKS cluster with worker nodes across at least two Availability Zones

- IAM permissions for `eks:*`, `fis:*`, and CloudWatch metrics

- AWS CLI configured for your target region
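The requirement that worker nodes span at least two Availability Zones can be checked before starting an experiment. The sketch below is one possible approach, not a prescribed step: it assumes the nodes carry the standard Kubernetes label `topology.kubernetes.io/zone` and reads the output of `kubectl get nodes -o json` from standard input. The function names and the script name `check_zones.py` are hypothetical.

```python
import json
import sys

# Well-known Kubernetes label that records a node's zone.
ZONE_LABEL = "topology.kubernetes.io/zone"


def zones_from_node_list(node_list: dict) -> set:
    """Collect the distinct zones found in a `kubectl get nodes -o json` document."""
    zones = set()
    for node in node_list.get("items", []):
        labels = node.get("metadata", {}).get("labels", {})
        zone = labels.get(ZONE_LABEL)
        if zone:
            zones.add(zone)
    return zones


def spans_enough_zones(node_list: dict, minimum: int = 2) -> bool:
    """Return True when the nodes cover at least `minimum` distinct zones."""
    return len(zones_from_node_list(node_list)) >= minimum


if __name__ == "__main__":
    nodes = json.load(sys.stdin)
    found = sorted(zones_from_node_list(nodes))
    print(f"Zones found: {found}")
    # Exit non-zero so the check can gate a script or pipeline step.
    sys.exit(0 if spans_enough_zones(nodes) else 1)
```

A possible invocation is `kubectl get nodes -o json | python check_zones.py`, which exits with a non-zero status when fewer than two zones are found.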