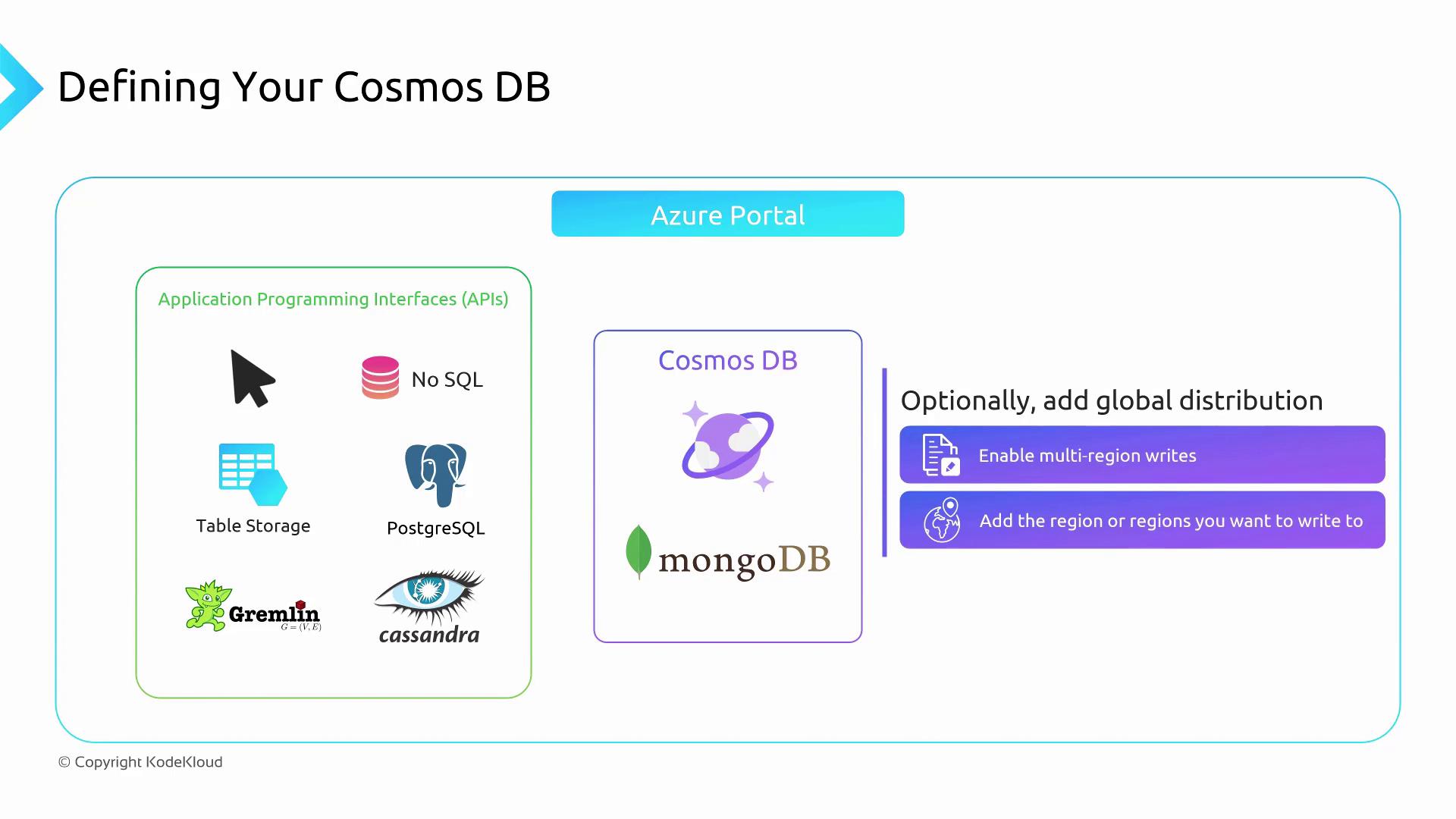

1. Create a Cosmos DB Account

- Sign in to the Azure portal.

- Go to Azure Cosmos DB and click + Create.

- Enter a unique account name, then select your subscription, resource group, and preferred region.

- Choose the API for your workload. Options include:

  - Core (SQL)

  - MongoDB

  - Cassandra

  - Gremlin

  - Table

  - Azure Cosmos DB for PostgreSQL

You can select only one API per account. For JSON-like documents or MongoDB migrations, choose MongoDB.

- (Optional) Enable Multi-region writes to allow write operations in all selected regions.

- Add additional regions for global distribution and high availability.

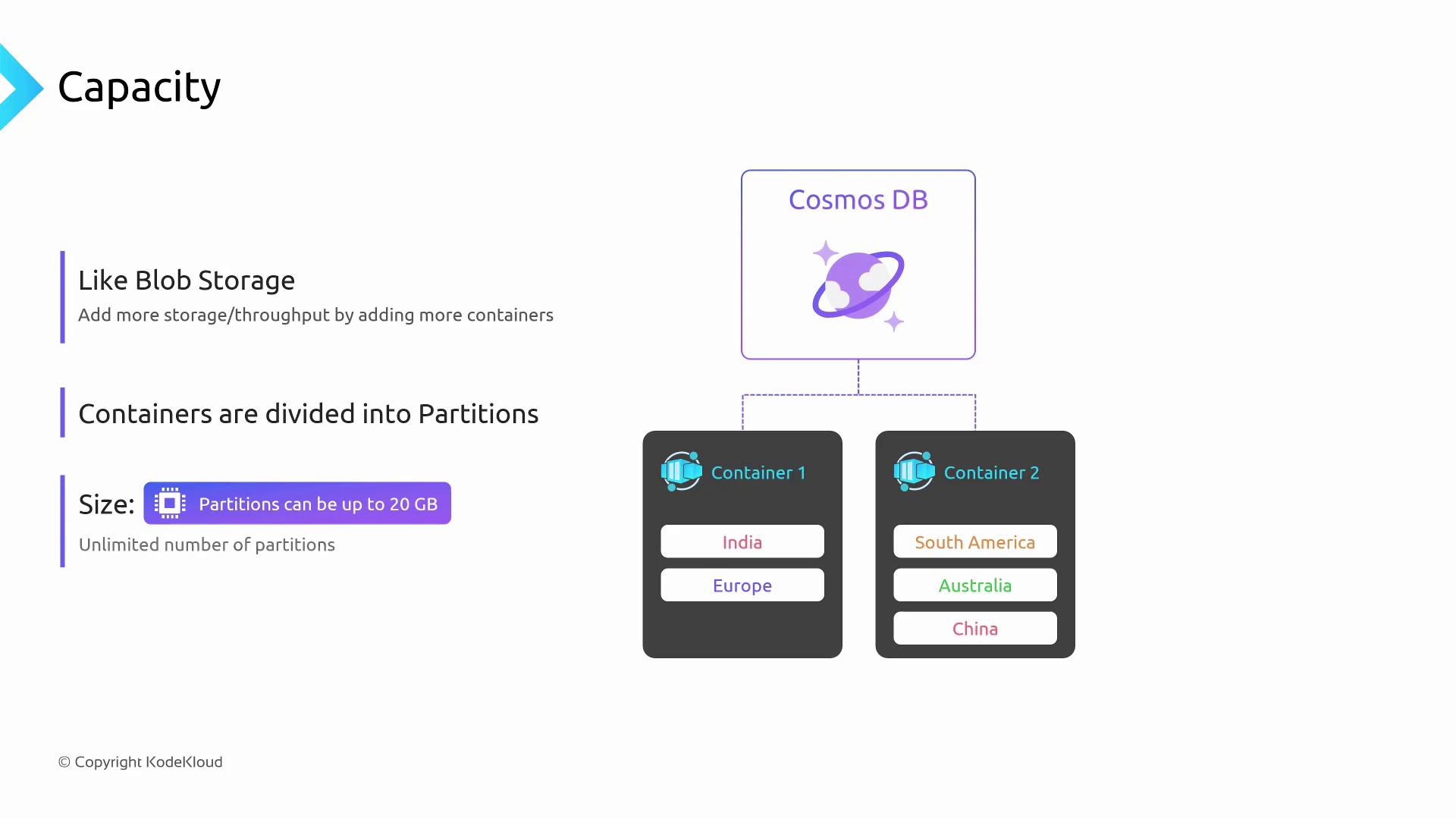

2. Scale Storage with Containers & Partitions

Azure Cosmos DB distributes your data across containers and partitions for virtually unlimited storage and throughput:

- Container: A logical namespace for your items (documents, rows, edges). Use multiple containers to isolate workloads.

- Partition: Items that share a partition key value form a logical partition, which can hold up to 20 GB. Cosmos DB automatically distributes logical partitions across physical partitions. Proper partition key design ensures balanced data distribution.

| Concept | Description |
|---|---|
| Container | Namespace for items; throughput is provisioned at the container or database level |
| Partition | Logical partition of up to 20 GB per partition key value; placement on physical partitions is managed automatically by Cosmos DB |
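The relationship between partition key values and partitions can be pictured with a small sketch. It is only an illustration under stated assumptions: MD5 stands in for the service's internal hash function, modulo placement stands in for its hash-range assignment, and `PHYSICAL_PARTITIONS`, `physical_partition_for`, and `place_items` are hypothetical names, not part of any Cosmos DB SDK.

```python
import hashlib
from collections import defaultdict

PHYSICAL_PARTITIONS = 4  # hypothetical count; Cosmos DB picks and changes this itself


def physical_partition_for(partition_key: str, partitions: int = PHYSICAL_PARTITIONS) -> int:
    """Map a partition key value to a physical partition via a stable hash.

    MD5 and the modulo step are stand-ins for the service's internal scheme.
    """
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partitions


def place_items(items, key_property):
    """Group items by physical partition, keeping each logical partition together."""
    placement = defaultdict(lambda: defaultdict(list))
    for item in items:
        key = item[key_property]
        placement[physical_partition_for(key)][key].append(item)
    return placement


orders = [
    {"id": "1", "userId": "alice", "total": 30},
    {"id": "2", "userId": "bob", "total": 12},
    {"id": "3", "userId": "alice", "total": 7},
]
placement = place_items(orders, "userId")
```

Because the hash depends only on the key value, both of alice's orders land in the same logical partition, and that logical partition lives on exactly one physical partition.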

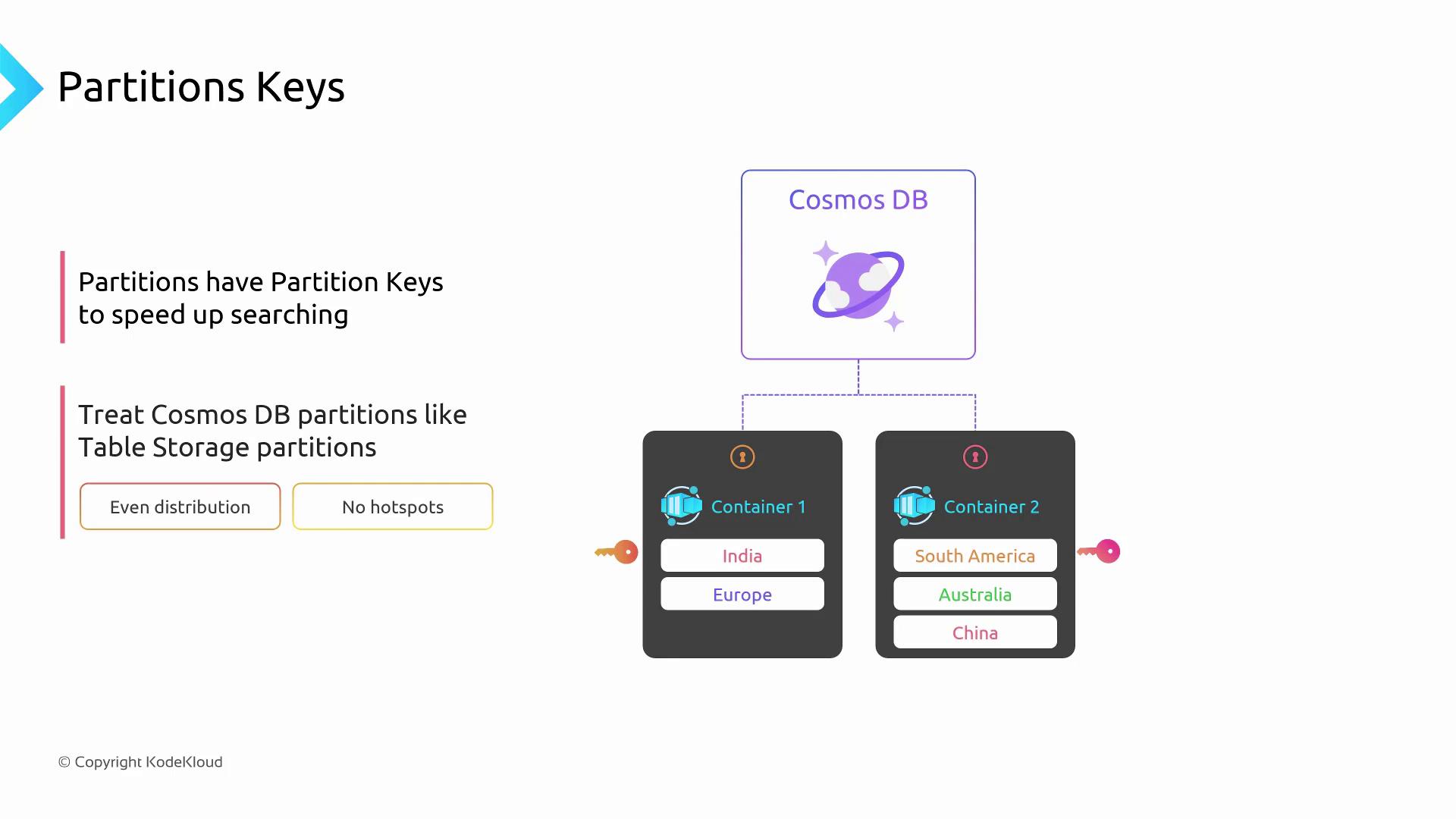

3. Design Effective Partition Keys

A well-chosen partition key is crucial for performance and cost optimization:

- Use a property that your queries filter on frequently.

- Ensure high cardinality and even distribution to avoid “hot” partitions.

Select a key with many unique values (e.g., `userId` or `orderId`) to spread traffic and storage evenly.
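One way to compare candidate keys before committing to one is to measure cardinality and skew on a sample of your data. The sketch below does this with the standard library; the sample data, the `country` property, and the `key_stats` helper are hypothetical and exist only for illustration.

```python
from collections import Counter


def key_stats(items, key_property):
    """Return (distinct key count, share of items held by the most common key)."""
    counts = Counter(item[key_property] for item in items)
    largest = counts.most_common(1)[0][1]
    return len(counts), largest / len(items)


# Hypothetical sample: 1,000 orders from 200 users, 90% of them in one country.
orders = [
    {"orderId": f"o{i}", "userId": f"u{i % 200}", "country": "US" if i % 10 else "CA"}
    for i in range(1000)
]

for candidate in ("country", "userId", "orderId"):
    distinct, top_share = key_stats(orders, candidate)
    print(f"{candidate}: {distinct} distinct values, hottest key holds {top_share:.1%}")
# country: 2 distinct values, hottest key holds 90.0%
# userId: 200 distinct values, hottest key holds 0.5%
# orderId: 1000 distinct values, hottest key holds 0.1%
```

In this sample, `country` would concentrate 90% of the items under a single key value, while `userId` and `orderId` spread them evenly.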

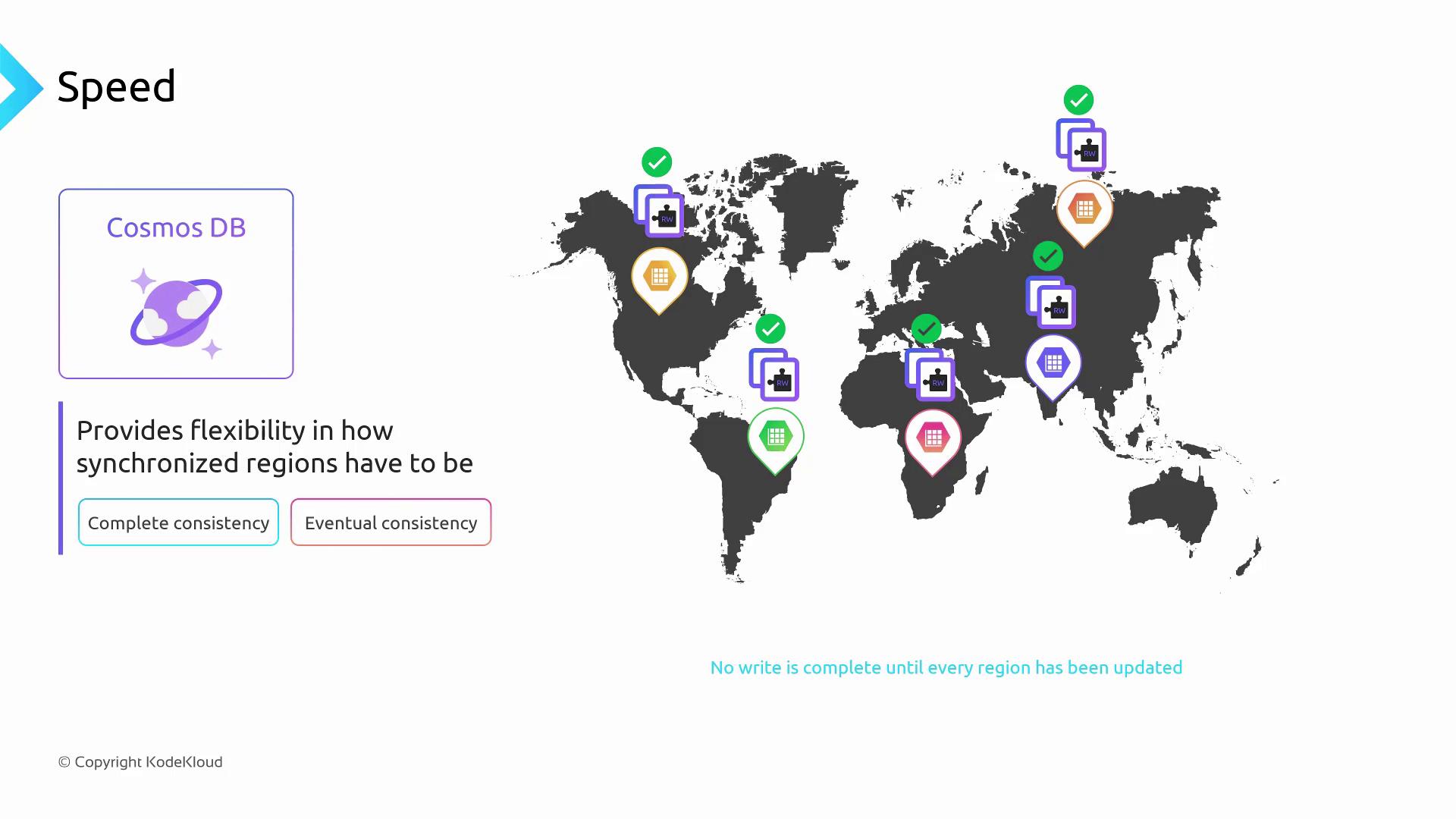

4. Configure Consistency Levels

Azure Cosmos DB provides five consistency levels that trade latency and availability against how fresh reads are:

| Level | Latency | Failure Impact | Guarantee |
|---|---|---|---|
| Strong | High | High | Reads always see the latest committed write |
| Bounded Staleness | Medium | Moderate | Reads lag behind writes by at most a fixed number of versions or a time window |
| Session | Low | Low | Read-your-writes and monotonic reads/writes within a session |
| Consistent Prefix | Low | Low | Reads never see out-of-order writes |
| Eventual | Lowest | Lowest | Reads may be stale until replicas catch up |
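The difference between strong, session, and eventual reads can be illustrated with a toy model. This is a deliberately simplified sketch, not how Cosmos DB implements replication: `ToyReplicatedStore` is a hypothetical class with one primary and one lagging secondary, and the write version plays the role of a session token.

```python
from typing import Optional


class ToyReplicatedStore:
    """One primary and one lagging secondary holding a single value."""

    def __init__(self) -> None:
        self.primary_version = 0
        self.primary_value: Optional[str] = None
        self.secondary_version = 0
        self.secondary_value: Optional[str] = None

    def write(self, value: str) -> int:
        """Apply a write on the primary and return its version as a session token."""
        self.primary_version += 1
        self.primary_value = value
        return self.primary_version

    def replicate(self) -> None:
        """Let the secondary catch up with the primary."""
        self.secondary_version = self.primary_version
        self.secondary_value = self.primary_value

    def read_strong(self) -> Optional[str]:
        # Simplification: always served from the up-to-date primary.
        return self.primary_value

    def read_eventual(self) -> Optional[str]:
        # May return stale data if replication has not happened yet.
        return self.secondary_value

    def read_session(self, session_token: int) -> Optional[str]:
        # Use the secondary only if it has caught up to the caller's own writes.
        if self.secondary_version >= session_token:
            return self.secondary_value
        return self.primary_value


store = ToyReplicatedStore()
store.write("v1")
store.replicate()
token = store.write("v2")  # the secondary has not seen this write yet

print(store.read_strong())        # v2
print(store.read_session(token))  # v2
print(store.read_eventual())      # v1
```

The session read returns the caller's own latest write even though the secondary is behind, while the eventual read returns the older value until replication catches up.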

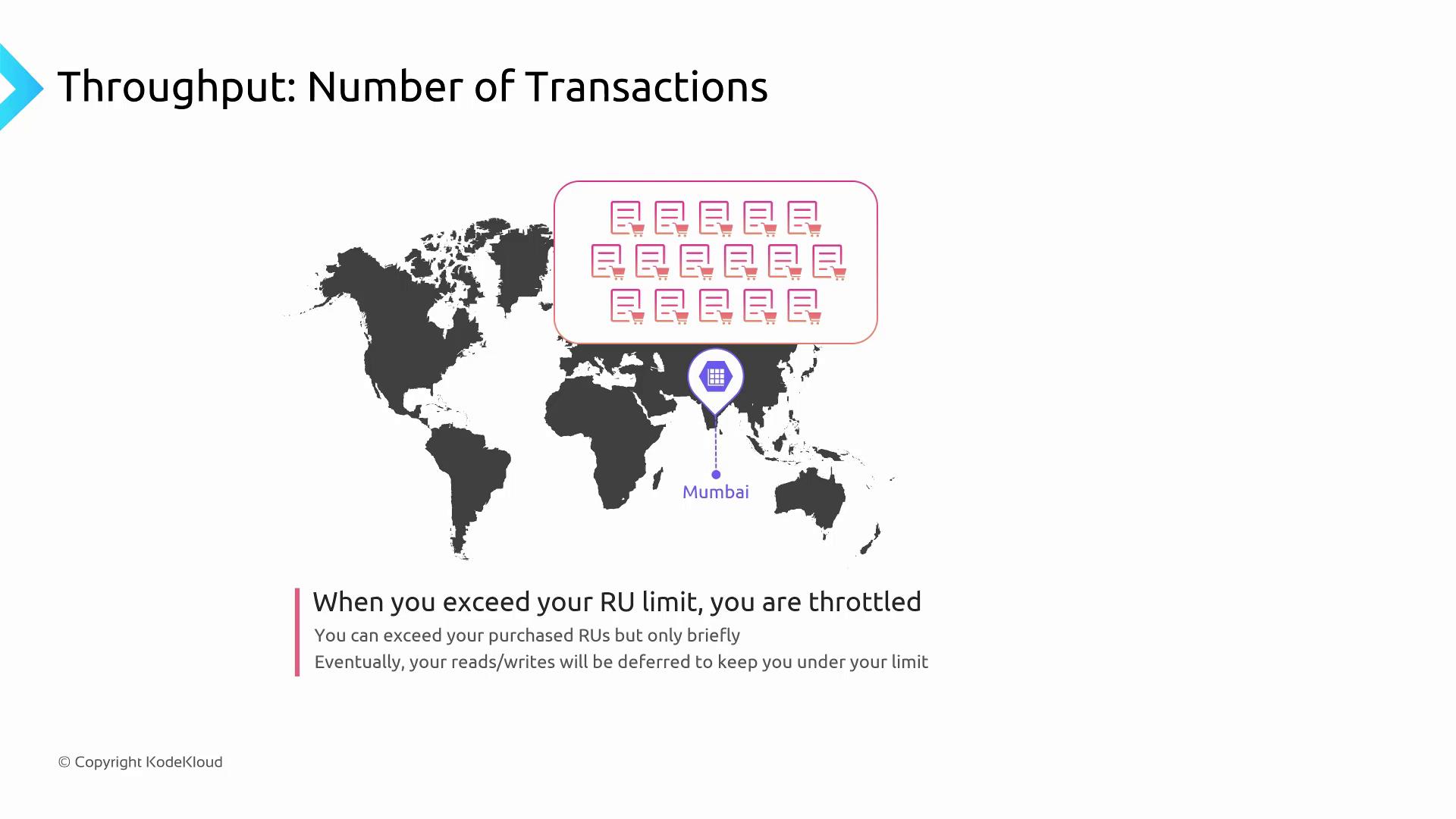

5. Provision Throughput & Manage Request Units (RUs)

Throughput in Cosmos DB is expressed in Request Units per second (RU/s):

- If operations exceed your provisioned RU/s, Cosmos DB returns HTTP 429 (throttled) errors.

- The Cosmos DB SDKs retry throttled requests automatically based on your retry policy, which can increase response times.

Monitor RU consumption in Azure Monitor. Adjust your retry policy and increase RUs if you observe frequent HTTP 429 errors.
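To show why retries on HTTP 429 add latency, here is a minimal sketch of a retry loop with exponential backoff. The SDKs already handle this for you, so treat it as an illustration only: `ThrottledError`, `call_with_retries`, and `flaky_write` are hypothetical names, and the simulated operation stands in for a real request.

```python
import random
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for an HTTP 429 response."""

    def __init__(self, retry_after_s: float) -> None:
        super().__init__("429 Too Many Requests")
        self.retry_after_s = retry_after_s


def call_with_retries(operation, max_attempts=5, base_delay_s=0.01, sleep=time.sleep):
    """Run operation(), retrying on ThrottledError with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ThrottledError as err:
            if attempt == max_attempts:
                raise  # give up and surface the throttling to the caller
            backoff = base_delay_s * 2 ** (attempt - 1)
            # Wait at least as long as the server asked, plus a little jitter.
            sleep(max(err.retry_after_s, backoff) + random.uniform(0, base_delay_s))


# Simulated operation that is throttled twice before succeeding.
attempts = {"count": 0}


def flaky_write():
    attempts["count"] += 1
    if attempts["count"] <= 2:
        raise ThrottledError(retry_after_s=0.01)
    return "created"


result = call_with_retries(flaky_write)
print(result, "after", attempts["count"], "attempts")  # created after 3 attempts
```

Every throttled attempt adds a wait before the next try, which is why frequent 429 responses show up as higher response times even when requests eventually succeed.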

Estimating & Allocating RUs

- Profile your workload: reads/writes per second and item sizes.

- Use the Azure Cosmos DB capacity calculator for accurate RU estimates.

- Provision at:

  - Database level: shared across all containers

  - Container level: dedicated throughput per container

| Container Name | Provisioned RU/s | Use Case |
|---|---|---|
| shipping-orders | 800 | High-traffic transactions |
| customer-profiles | 100 | Low-volume user lookups |
| inventory | 100 | Periodic stock updates |
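The profiling step can be turned into a rough first estimate before you consult the capacity calculator. The sketch below is a back-of-the-envelope calculation under stated assumptions: the per-operation costs (about 1 RU for a 1 KB point read and about 5 RU for a 1 KB write) are approximations, cost is assumed to scale linearly with item size, and `estimate_ru_per_second` and the 20% headroom are hypothetical choices. Replace the constants with request charges measured from your own workload.

```python
import math

# Rough per-operation costs for a 1 KB item; treat these as assumptions and
# replace them with request charges measured from your own workload.
READ_RU_PER_KB = 1.0
WRITE_RU_PER_KB = 5.0


def estimate_ru_per_second(reads_per_s, writes_per_s, item_size_kb, headroom=0.2):
    """Estimate RU/s, assuming cost scales linearly with item size."""
    read_cost = READ_RU_PER_KB * item_size_kb
    write_cost = WRITE_RU_PER_KB * item_size_kb
    raw = reads_per_s * read_cost + writes_per_s * write_cost
    # Add headroom for spikes and round up to the next multiple of 100 RU/s.
    return math.ceil(raw * (1 + headroom) / 100) * 100


# Hypothetical workload: 300 reads/s and 50 writes/s of 2 KB items.
print(estimate_ru_per_second(300, 50, 2))  # 1400
```

Queries, indexing policy, and consistency level also affect the real charge, so use a figure like this only as a starting point and adjust it against observed RU consumption.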