Demo: Setting Up a Kafka Cluster and Kafka UI Using Docker

In this tutorial, you'll learn how to spin up a single-broker Apache Kafka cluster with Docker and inspect it through an open-source Kafka UI. By the end, you'll be able to view and manage topics, brokers, and partitions from a browser.

Prerequisites

- Docker installed on your machine

- A code editor or terminal of your choice

1. Create a Dedicated Docker Network

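Isolate your Kafka components on a custom bridge network. Containers on a user-defined network can reach each other by container name, which is how the Kafka UI will find the broker later: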

docker network create kafka-net
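Running `docker network create` a second time fails because the network already exists. A minimal sketch of an idempotent variant follows; the helper name `ensure_network` is hypothetical, and it relies only on `docker network inspect` returning a non-zero status for an unknown network.

```shell
# Create the named Docker network only if it does not exist yet.
# ensure_network is a hypothetical helper name, not part of Docker.
ensure_network() {
  name="$1"
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    docker network create "$name" >/dev/null && echo "network $name created"
  fi
}
```

Calling `ensure_network kafka-net` is then safe to repeat, for example at the top of a setup script.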

2. Launch the Kafka Cluster

We'll use the lensesio/fast-data-dev Docker image, which bundles ZooKeeper, a Kafka broker, Schema Registry, REST Proxy, Kafka Connect, and a web UI in a single container.

docker run --rm -d \
  --network kafka-net \
  -p 2181:2181 \
  -p 3030:3030 \
  -p 9092:9092 \
  -p 8081:8081 \
  -p 8082:8082 \
  -e ADV_HOST=kafka-cluster \
  --name kafka-cluster \
  lensesio/fast-data-dev

| Port | Service |
|---|---|
| 2181 | ZooKeeper |
| 3030 | fast-data-dev web UI (includes the Schema Registry UI) |
| 9092 | Kafka broker |
| 8081 | Schema Registry |
| 8082 | REST Proxy |

When you run this command for the first time, Docker will pull the image:

Unable to find image 'lensesio/fast-data-dev:latest' locally
latest: Pulling from lensesio/fast-data-dev
31.43MB/31.43MB
...
79b6f845fed: Download complete

Once started, verify the container is running:

docker container ls
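Reading the `docker container ls` table by eye works, but the same check can be scripted. The sketch below is one possible approach: the helper names `is_running` and `missing_ports` are hypothetical, and it assumes `docker port` prints one line per published port in the form `9092/tcp -> 0.0.0.0:9092`.

```shell
# Succeeds only if the named container exists and is in the running state.
is_running() {
  [ "$(docker container inspect -f '{{.State.Running}}' "$1" 2>/dev/null)" = "true" ]
}

# Prints each container port from the argument list that `docker port`
# does not report as published. Prints nothing when all are published.
# Usage: missing_ports <container> <port>...
missing_ports() {
  container="$1"; shift
  published="$(docker port "$container")"
  for port in "$@"; do
    printf '%s\n' "$published" | grep -q "^${port}/tcp" || echo "$port"
  done
}
```

For the container started above, `is_running kafka-cluster && missing_ports kafka-cluster 2181 3030 9092 8081 8082` should print nothing if every port from the table is published.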

3. Deploy the Kafka UI

We’ll add Kafka UI by Provectus Labs to visualize and manage your cluster through a web interface.

docker run --rm -d \
  --network kafka-net \
  -p 7000:8080 \
  -e DYNAMIC_CONFIG_ENABLED=true \
  --name kafka-ui \
  provectuslabs/kafka-ui

Note

The DYNAMIC_CONFIG_ENABLED environment variable lets you add and edit Kafka cluster connections from the UI at runtime, without restarting the container.

Confirm both containers are up:

docker container ls

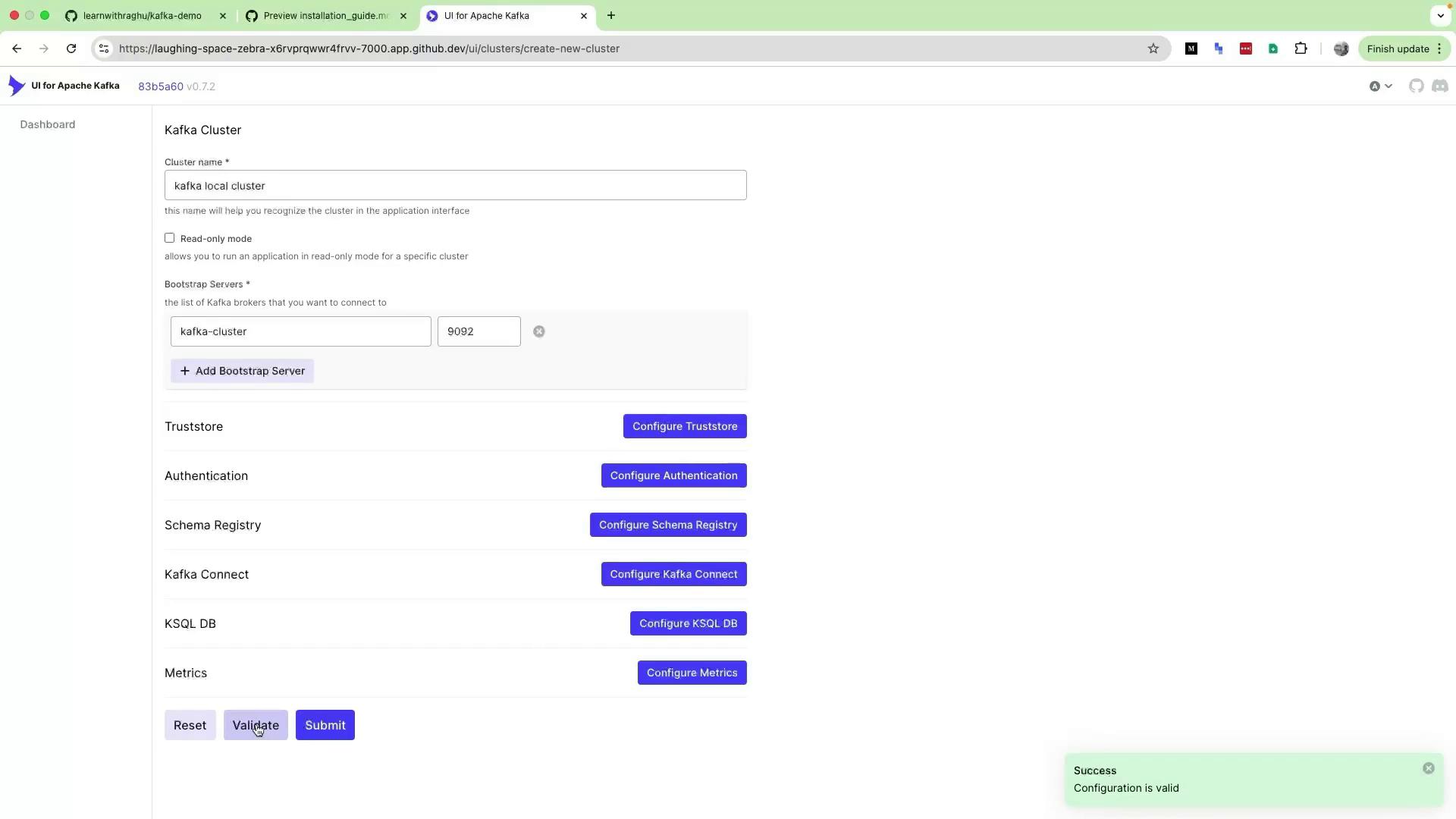

4. Configure Your Cluster in the UI

- Open your browser at http://localhost:7000

- On the initial setup page, enter:

  - Cluster Name: e.g., Kafka Local Cluster

  - Broker: kafka-cluster:9092

- Click Validate to test connectivity.

- Once validated, click Submit.

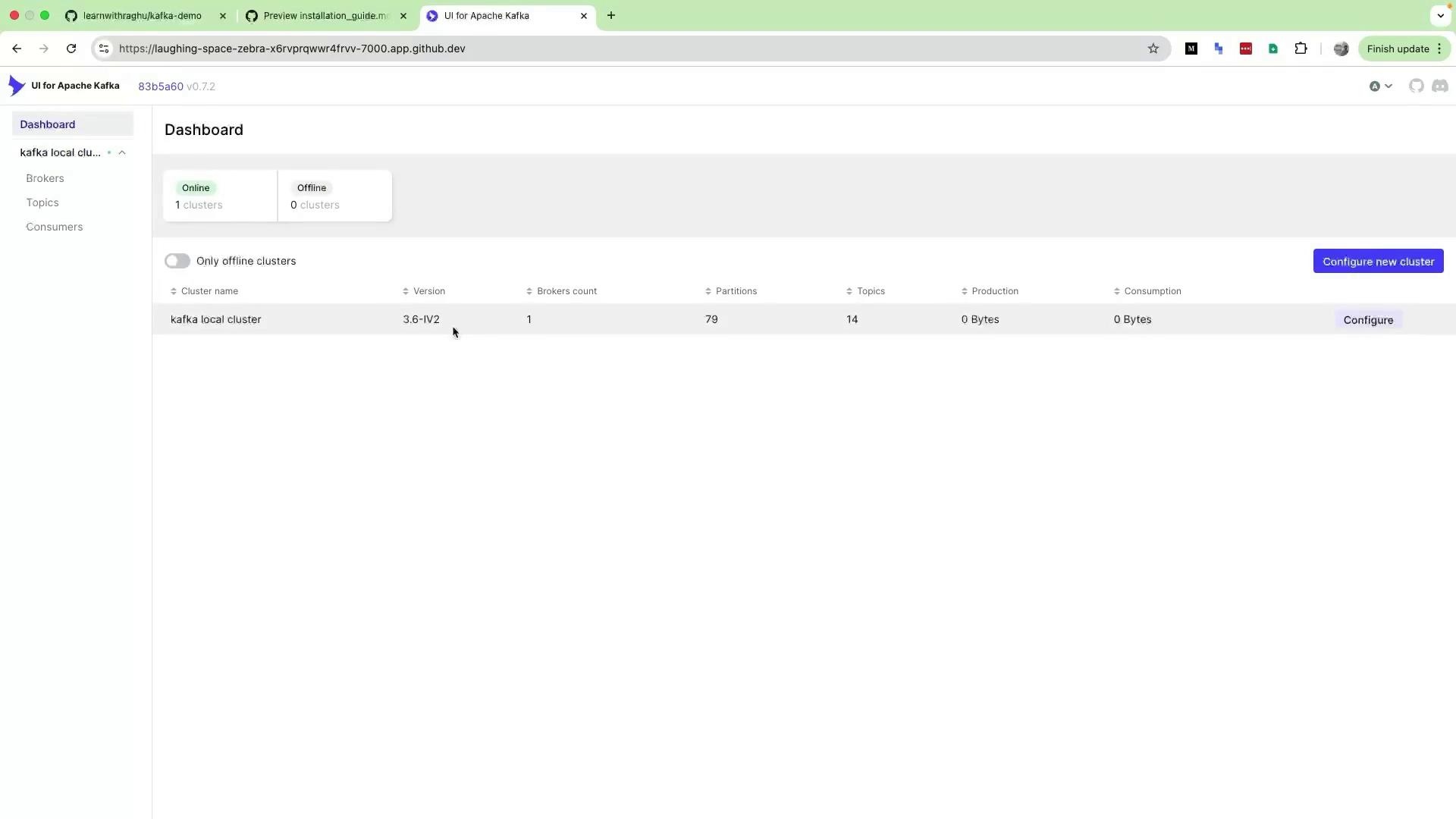
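The setup form is not the only way to register a cluster. Kafka UI also reads cluster definitions from environment variables at startup, so the same values can be passed on the command line. The sketch below wraps that in a hypothetical helper, `start_kafka_ui`; it assumes the `KAFKA_CLUSTERS_0_NAME` and `KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS` variables described in the Kafka UI documentation and reuses the network, port, and container name from step 3.

```shell
# Start Kafka UI with one cluster preconfigured through environment variables.
# $1 = display name shown in the UI, $2 = bootstrap server as host:port.
# start_kafka_ui is a hypothetical helper name.
start_kafka_ui() {
  docker run --rm -d \
    --network kafka-net \
    -p 7000:8080 \
    -e KAFKA_CLUSTERS_0_NAME="$1" \
    -e KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS="$2" \
    -e DYNAMIC_CONFIG_ENABLED=true \
    --name kafka-ui \
    provectuslabs/kafka-ui
}
```

A call such as `start_kafka_ui "Kafka Local Cluster" kafka-cluster:9092` would replace the command from step 3. Because it reuses the name kafka-ui, stop any running instance first with `docker stop kafka-ui`.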

After submission, refresh the page. You should see your cluster listed on the dashboard.

5. Explore Cluster Details

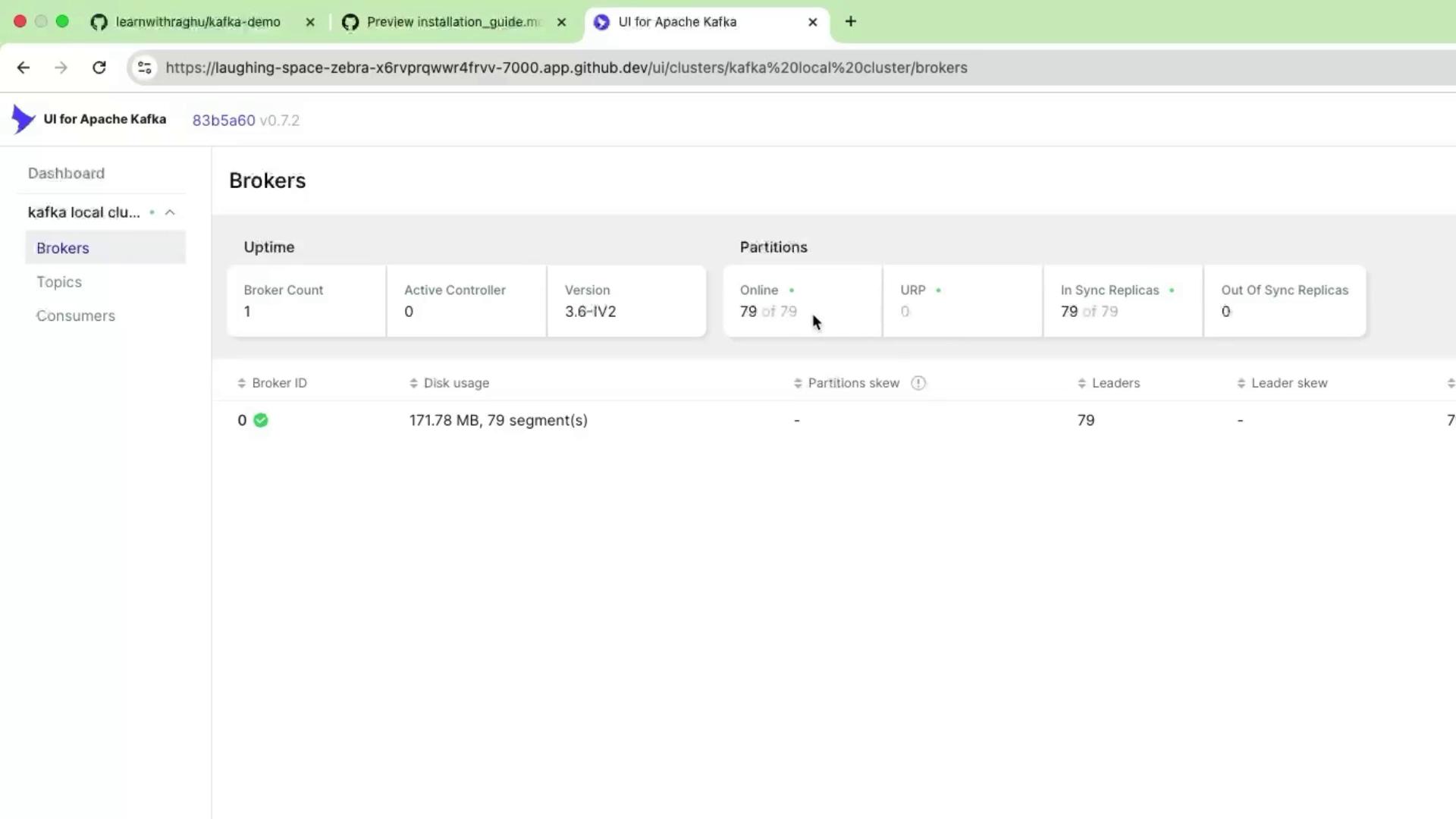

Brokers

Select Brokers from the sidebar to view:

- Broker count and IDs

- Controller status

- Kafka version

- Partition and replica status

Topics

Click Topics to browse all existing topics, including demo and system topics created by fast-data-dev.
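To cross-check what the Topics page shows, the same list can be pulled from the broker itself. This is a sketch under assumptions: it presumes the fast-data-dev image exposes the `kafka-topics` CLI on the container's PATH, and the helper name `list_user_topics` is hypothetical. Topics whose names start with an underscore (such as `__consumer_offsets`) are filtered out as internal.

```shell
# List topic names from the broker, hiding internal topics that start with "_".
# $1 = container name, $2 = bootstrap server as host:port.
# Assumes the kafka-topics CLI is available inside the container.
list_user_topics() {
  docker exec "$1" kafka-topics --bootstrap-server "$2" --list | grep -v '^_'
}
```

With the containers from this demo, `list_user_topics kafka-cluster kafka-cluster:9092` should print the same non-system topics that the UI lists.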

Conclusion

With one Docker network and two docker run commands, you now have a local Kafka cluster and a web UI to manage it. Brokers and topics can be inspected from the browser, which reduces the need for Kafka CLI commands during day-to-day development.
