Event Streaming with Kafka

Foundations of Event Streaming

What is Event Streaming

Event streaming is the practice of capturing, storing, and processing events as they occur. An event is a discrete record of a state change or action. Platforms such as Apache Kafka keep events in a durable, high-throughput log and deliver them to subscribers, which supports reactive architectures, real-time analytics, and communication between distributed services.

Key Concepts

- Event: A record of an occurrence (often a key-value pair) such as a user action, sensor reading, or system update.

- Stream: An ordered, append-only sequence of events.

- Stream Processing: The continuous transformation, enrichment, or routing of events as they arrive.
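The three concepts above can be sketched in a few lines of plain Python. This is only an in-memory illustration, not Kafka's API: the `Event` and `Stream` classes, the `enrich_fast_readings` function, and the `speed_kmh` field are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Event:
    """A record of an occurrence, modeled as a key-value pair."""
    key: str
    value: dict


class Stream:
    """An ordered, append-only sequence of events (in-memory stand-in for a log)."""

    def __init__(self) -> None:
        self._events: list[Event] = []

    def append(self, event: Event) -> int:
        """Add an event at the end and return its position (offset)."""
        self._events.append(event)
        return len(self._events) - 1

    def __iter__(self) -> Iterator[Event]:
        return iter(self._events)


def enrich_fast_readings(events: Iterable[Event], limit_kmh: float) -> Iterator[Event]:
    """Stream processing: pass on only readings above the limit, with a flag added."""
    for event in events:
        if event.value.get("speed_kmh", 0) > limit_kmh:
            yield Event(event.key, {**event.value, "alert": "speeding"})


stream = Stream()
stream.append(Event("car-1", {"speed_kmh": 48}))
stream.append(Event("car-2", {"speed_kmh": 131}))
alerts = list(enrich_fast_readings(stream, limit_kmh=120))
print(alerts)
```

The stream exposes no update or delete method, which is what "append-only" means in practice: consumers can rely on earlier positions never changing.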

Note

Event streaming differs from batch processing by handling each event as it arrives rather than collecting data into periodic chunks. This low-latency approach powers use cases like fraud detection, monitoring, and live dashboards, where a result that arrives hours later is of little use.
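The difference can be illustrated with a toy fraud check. The sketch below assumes a hypothetical list of payment amounts and a made-up threshold. The batch function needs the whole chunk before it can answer, while the streaming function raises its first alert before later events have been read.

```python
from typing import Iterable, Iterator

amounts = [20.0, 35.0, 900.0, 15.0]  # hypothetical payment amounts


def batch_flag(batch: list[float], threshold: float) -> list[float]:
    """Batch style: wait for the whole chunk, then scan it once."""
    return [amount for amount in batch if amount > threshold]


def stream_flag(events: Iterable[float], threshold: float) -> Iterator[float]:
    """Streaming style: decide on each event as soon as it is seen."""
    for amount in events:
        if amount > threshold:
            yield amount  # downstream can react before later events are read


batch_result = batch_flag(amounts, threshold=500.0)

source = iter(amounts)
first_alert = next(stream_flag(source, threshold=500.0))
remaining = list(source)  # events not yet consumed when the alert fired

print(batch_result, first_alert, remaining)
```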

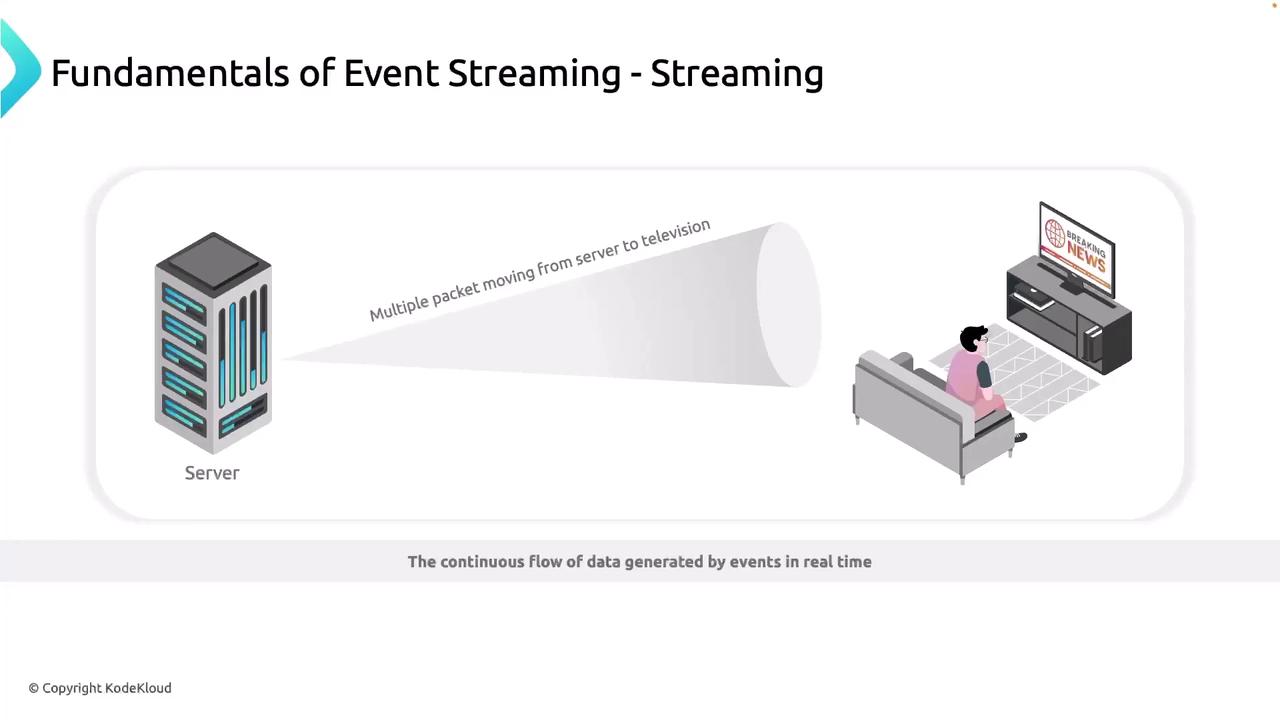

How It Works: Live News Example

Imagine you’re watching live news on your TV. Behind the scenes:

- A news server generates packets of data—each packet is an event (e.g., breaking news, weather alert).

- These events are published continuously to a streaming platform (like Kafka).

- Your TV client subscribes to the news topic and renders each packet in real time.

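In this scenario, each video frame or text bulletin is an event flowing through a durable log. Because the log retains events, a client that connects late or reconnects can read from an earlier position instead of missing what was published in the meantime.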
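The publish and subscribe flow in this example can be sketched with an in-memory log. The `Topic` and `Subscriber` classes below are hypothetical stand-ins rather than Kafka client classes. They only show how a retained log plus a per-client read position lets a late subscriber catch up.

```python
class Topic:
    """In-memory stand-in for a topic: an append-only list of events."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.log: list[str] = []

    def publish(self, event: str) -> None:
        self.log.append(event)


class Subscriber:
    """Tracks its own read position, so the topic keeps no per-client state."""

    def __init__(self, topic: Topic, from_beginning: bool = True) -> None:
        self.topic = topic
        self.offset = 0 if from_beginning else len(topic.log)

    def poll(self) -> list[str]:
        """Return events published since the last poll and advance the offset."""
        new_events = self.topic.log[self.offset:]
        self.offset = len(self.topic.log)
        return new_events


news = Topic("news")
news.publish("breaking news")
tv = Subscriber(news)  # subscribes after the first bulletin was published
news.publish("weather alert")

first_poll = tv.poll()   # includes the bulletin published before subscribing
second_poll = tv.poll()  # nothing new since the previous poll
print(first_poll, second_poll)
```

Passing `from_beginning=False` would make the subscriber start at the end of the log and see only events published after it joined.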

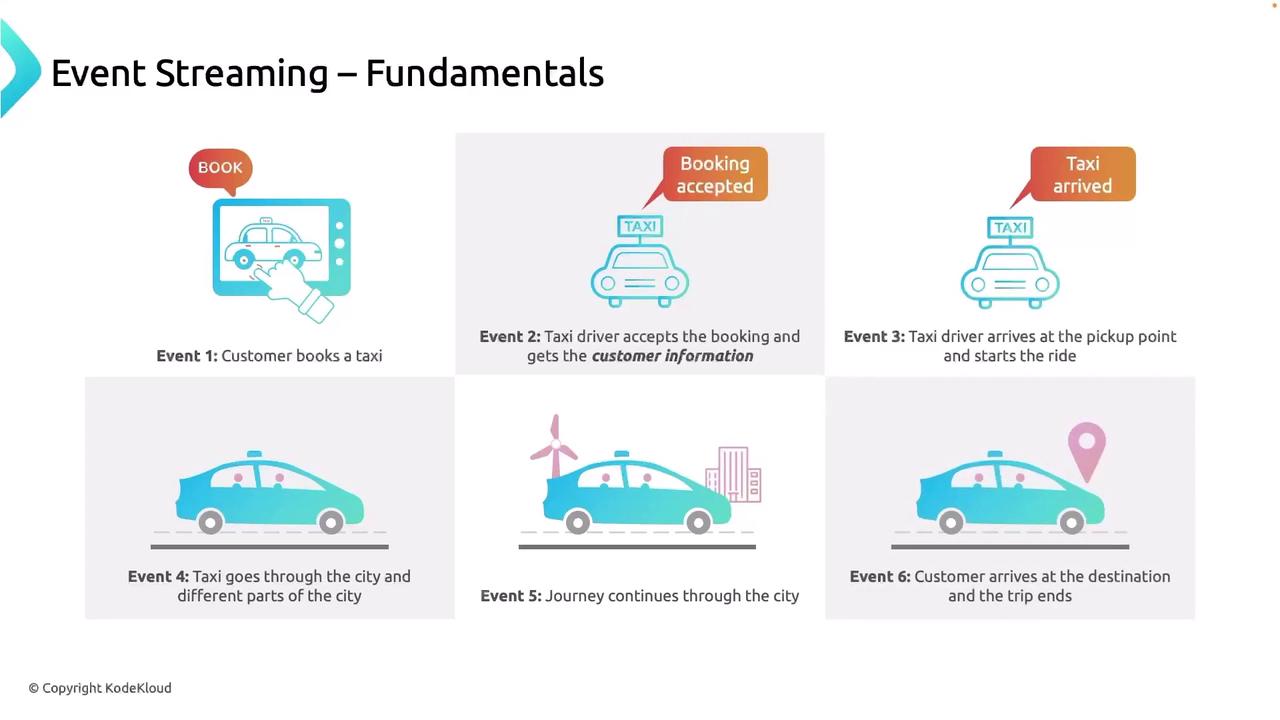

Real-World Use Case: Taxi Booking App

A taxi-hailing app uses event streaming to coordinate riders, drivers, and billing services:

| Step | Event Type | Description |
|---|---|---|
| 1. Request Ride | ride_requested | Customer selects pickup/drop-off and sends a ride_requested event. |
| 2. Broadcast to Drivers | ride_offered | The platform emits ride_offered to nearby drivers. |
| 3. Driver Accepts | ride_accepted | A driver returns ride_accepted, updating the customer’s UI with ETA and driver info. |
| 4. Arrival & Start | arrival, trip_start | Driver arrives (arrival), passenger boards, then trip_start triggers billing. |
| 5. Telemetry During Trip | telemetry | Continuous events like GPS coordinates, speed, and traffic data are streamed for analytics. |
| 6. Trip Completion | trip_end | On drop-off, trip_end fires. The system calculates fare, charges the wallet, and pays out. |
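One way to picture how a downstream service consumes this sequence is as a fold over the ride's events. The sketch below is an assumption-heavy illustration: the `NEXT_EVENTS` table, the `replay` function, and the strict one-after-another ordering are invented for the example, and a real platform would likely allow more paths (cancellations, several drivers receiving ride_offered, and so on).

```python
# Allowed next event types for each event type, following the table's order.
NEXT_EVENTS = {
    None: {"ride_requested"},
    "ride_requested": {"ride_offered"},
    "ride_offered": {"ride_accepted"},
    "ride_accepted": {"arrival"},
    "arrival": {"trip_start"},
    "trip_start": {"telemetry", "trip_end"},
    "telemetry": {"telemetry", "trip_end"},
    "trip_end": set(),
}


def replay(events: list[dict]) -> dict:
    """Fold a ride's events into a summary, rejecting out-of-order events."""
    last = None
    summary = {"status": None, "telemetry_points": 0, "billing_started": False}
    for event in events:
        kind = event["eventType"]
        if kind not in NEXT_EVENTS[last]:
            raise ValueError(f"{kind!r} cannot follow {last!r}")
        if kind == "trip_start":
            summary["billing_started"] = True  # trip_start triggers billing
        elif kind == "telemetry":
            summary["telemetry_points"] += 1
        summary["status"] = kind
        last = kind
    return summary


ride = [
    {"eventType": kind}
    for kind in [
        "ride_requested", "ride_offered", "ride_accepted", "arrival",
        "trip_start", "telemetry", "telemetry", "trip_end",
    ]
]
summary = replay(ride)
print(summary)
```

Because the summary is derived purely from the events, replaying the same events always rebuilds the same state.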

Benefits of Event Streaming

| Benefit | Impact |
|---|---|
| Scalability | Partitioned logs enable horizontal scale for high-throughput loads. |
| Durability | Events persist on disk, so they survive failures and can be replayed within the retention window. |
| Decoupling | Producers and consumers operate independently, improving agility. |
| Real-Time Insights | Instant analytics on live data for monitoring and alerting. |
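The scalability row depends on partitioning: events are spread across partitions, and events that share a key land in the same partition so their relative order is kept. A minimal sketch of that idea follows. It uses CRC32 from the standard library purely as a stand-in for a stable hash. Kafka's own default partitioner uses a different hash function, so the partition numbers here will not match a real cluster. The `partition_for` helper and the sample events are hypothetical.

```python
import zlib

NUM_PARTITIONS = 3  # illustrative partition count


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition with a stable hash (CRC32 as a stand-in)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


partitions: list[list[dict]] = [[] for _ in range(NUM_PARTITIONS)]
events = [
    {"userId": "1234", "eventType": "ride_requested"},
    {"userId": "5678", "eventType": "ride_requested"},
    {"userId": "1234", "eventType": "ride_accepted"},
]
for event in events:
    partitions[partition_for(event["userId"])].append(event)

# All events for one user sit in a single partition, in publish order.
home = partitions[partition_for("1234")]
user_1234_types = [e["eventType"] for e in home if e["userId"] == "1234"]
print(user_1234_types)
```

Ordering is only guaranteed within a partition in this model, which is why choosing the key (here the user ID) matters.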

Getting Started with Kafka

- Install Kafka

  Follow the Apache Kafka quickstart to set up a single-node cluster.

- Create a Topic

      kafka-topics.sh --create --topic events --bootstrap-server localhost:9092 --partitions 3 --replication-factor 1

- Produce Events

      kafka-console-producer.sh --topic events --bootstrap-server localhost:9092
      > {"eventType":"ride_requested","userId":"1234","pickup":"A","dropoff":"B"}

- Consume Events

      kafka-console-consumer.sh --topic events --from-beginning --bootstrap-server localhost:9092
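Typing JSON by hand at the producer prompt gets tedious, so a small script can generate the events instead. The sketch below uses only the Python standard library and prints one JSON object per line, which is the shape the console producer reads from standard input. The `to_line` helper, the `driverId` field, and the second sample event are assumptions made for illustration.

```python
import json
import sys


def to_line(event_type: str, user_id: str, **fields: str) -> str:
    """Serialize one event as a single-line JSON string."""
    return json.dumps({"eventType": event_type, "userId": user_id, **fields})


def main() -> None:
    lines = [
        to_line("ride_requested", "1234", pickup="A", dropoff="B"),
        to_line("ride_accepted", "1234", driverId="d-77"),  # hypothetical field
    ]
    # One event per line, so each line becomes one message.
    sys.stdout.write("\n".join(lines) + "\n")


if __name__ == "__main__":
    main()
```

Saved under a name such as make_events.py (a hypothetical filename), the output could be piped into the console producer, for example: python make_events.py | kafka-console-producer.sh --topic events --bootstrap-server localhost:9092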
