GCP Cloud Digital Leader Certification

Container orchestration in GCP

Cloud Run

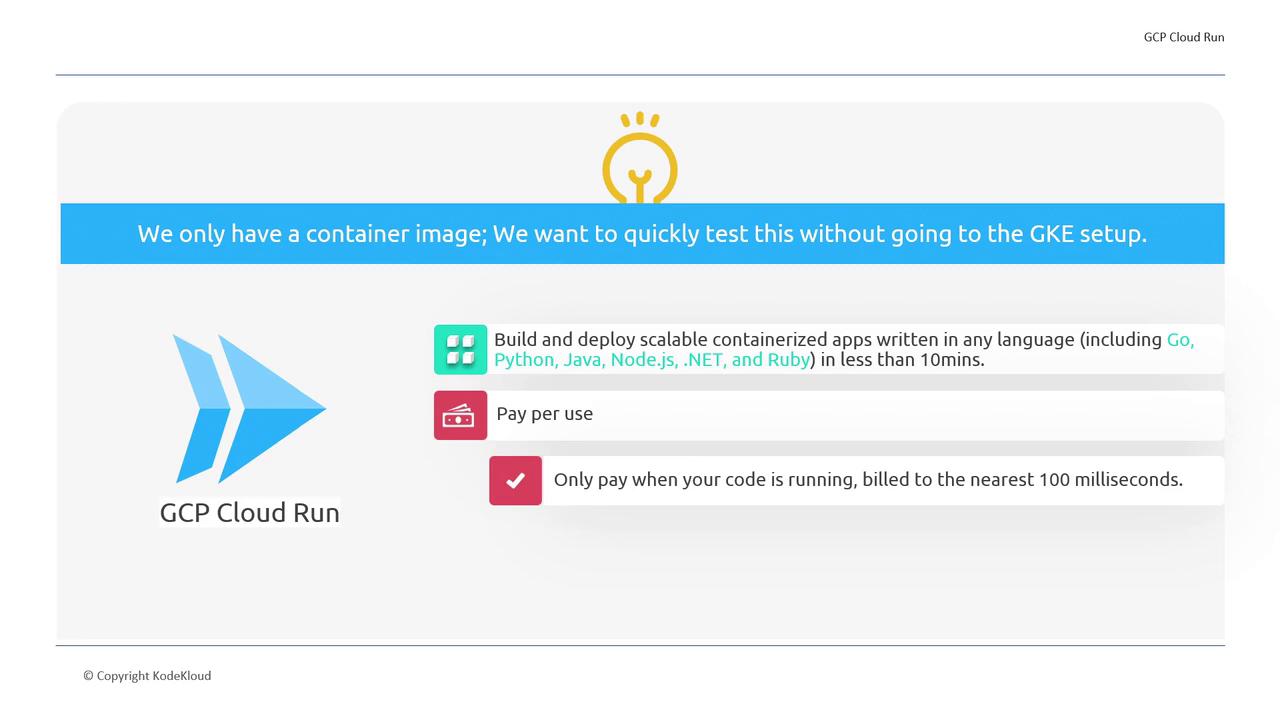

Cloud Run provides an efficient alternative for testing and running containerized applications without the overhead and expense of setting up a full Google Kubernetes Engine (GKE) cluster. This serverless service allows you to deploy applications quickly, test their performance, and pay only for the resources you use—making it ideal for development, testing, and scaling workloads.

Benefits of Cloud Run include:

- Quick deployment of containerized applications written in any programming language, as long as they are packaged as a container image.

- A pay-as-you-go billing model: with the default request-based billing, charges apply only while an instance is actively handling requests.

- Elimination of infrastructure management, as the underlying resources are fully abstracted.

- Easy integration with other Google Cloud services such as load balancing and logging.
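The pay-as-you-go point can be made concrete with a simplified model of request-based billing for a single instance. This sketch assumes you are billed only for the time during which at least one request is in flight, so overlapping requests on the same instance are not counted twice. The function name `busy_seconds` and the sample timings are hypothetical, and the model deliberately ignores actual prices, instance startup time, billing rounding, minimum instances, and the free tier.

```python
from typing import List, Optional, Tuple

# (start_seconds, end_seconds) of one request; hypothetical sample data below.
Interval = Tuple[float, float]


def busy_seconds(requests: List[Interval]) -> float:
    """Simplified model: total time one instance spends handling at least one request.

    Overlapping requests are merged, so concurrent requests do not add extra time.
    """
    total = 0.0
    current_start: Optional[float] = None
    current_end: Optional[float] = None
    for start, end in sorted(requests):
        if current_end is None or start > current_end:
            # A gap before this request: close out the previous busy period.
            if current_end is not None:
                total += current_end - current_start
            current_start, current_end = start, end
        else:
            # Overlaps the current busy period: extend it if this request ends later.
            current_end = max(current_end, end)
    if current_end is not None:
        total += current_end - current_start
    return total


# Three requests within one minute; the first two overlap.
requests = [(0.0, 2.0), (1.0, 3.0), (10.0, 11.0)]
print(busy_seconds(requests))  # 4.0
```

Under this model, the instance in the example is busy for 4 seconds out of a 60-second window, and the remaining idle time is not charged.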

Note

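Cloud Run is especially useful for evaluating application performance without paying for idle resources: by default it scales down to zero instances when no requests arrive, whereas a GKE cluster keeps incurring charges for its running resources even when they sit idle.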

How Does Cloud Run Work?

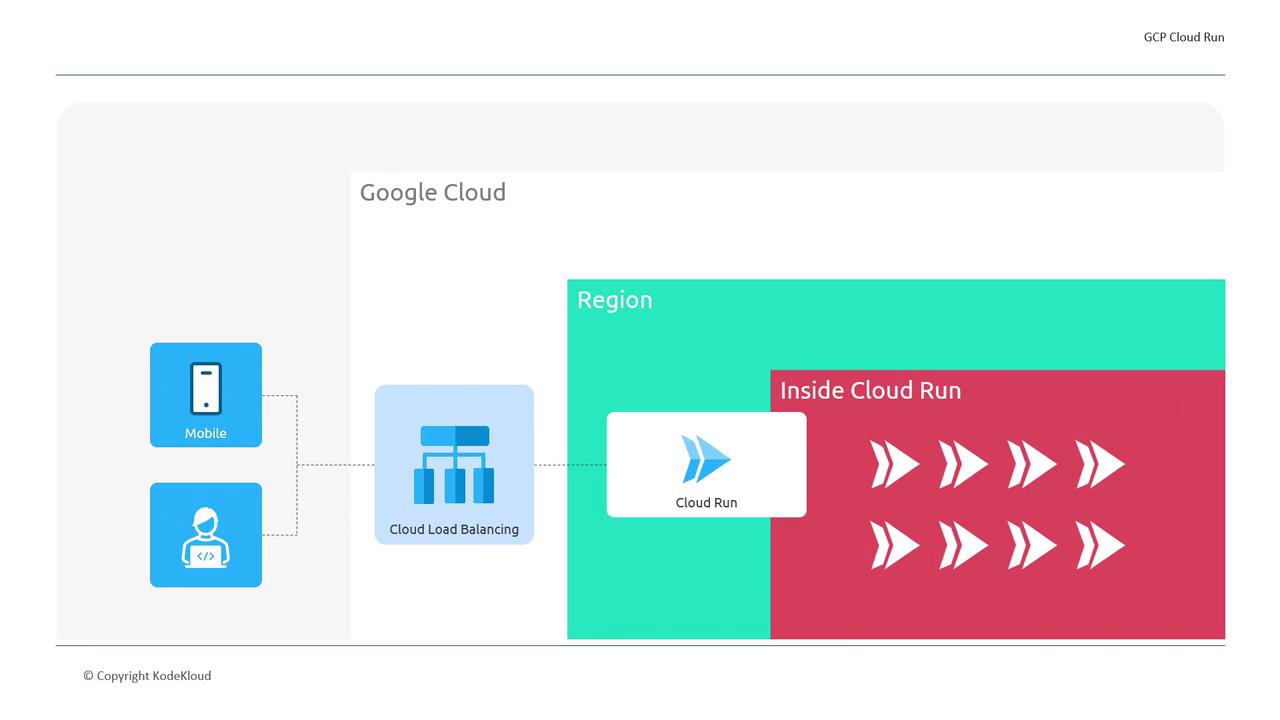

Cloud Run is a scalable, serverless platform that dynamically provisions container instances in response to incoming requests. Although its user interface may resemble that of GKE, it simplifies operations by handling scaling and resource allocation automatically.
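For those incoming requests to reach your code, the container has to listen on `0.0.0.0` at the port given in the `PORT` environment variable, which Cloud Run sets (8080 by default). The sketch below is a minimal Python service that follows this contract using only the standard library. The helper names `resolve_port` and `make_server`, the handler, and the response text are illustrative, and the code would still need to be packaged as a container image (for example with a Dockerfile) before deploying.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Mapping, Optional


def resolve_port(env: Optional[Mapping[str, str]] = None) -> int:
    """Return the port to listen on: Cloud Run's PORT variable, else 8080 for local runs."""
    env = os.environ if env is None else env
    return int(env.get("PORT", "8080"))


class HelloHandler(BaseHTTPRequestHandler):
    """Illustrative handler that answers every GET with a short plain-text body."""

    def do_GET(self) -> None:
        body = b"Hello from a container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int, host: str = "0.0.0.0") -> HTTPServer:
    """Bind the server; 0.0.0.0 listens on all interfaces so routed traffic reaches it."""
    return HTTPServer((host, port), HelloHandler)
```

The container's entrypoint would then call `make_server(resolve_port()).serve_forever()` to start accepting requests.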

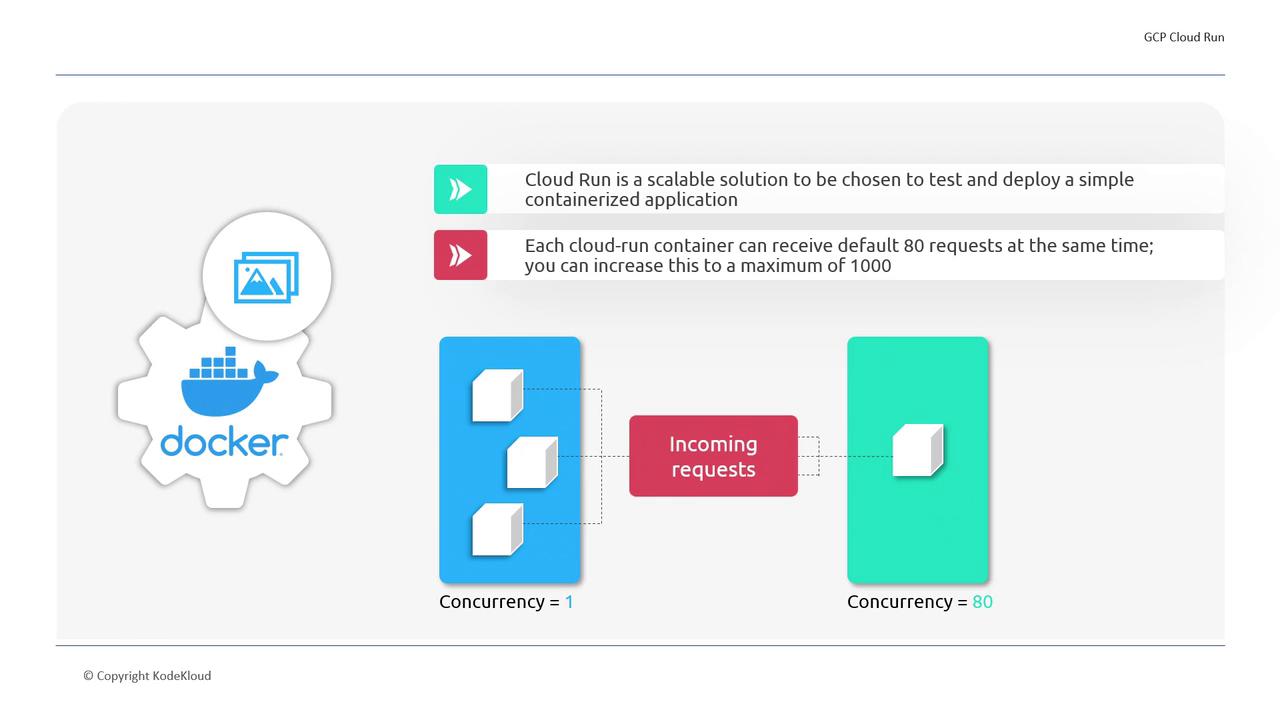

When a request arrives, Cloud Run starts container instances as needed. The concurrency setting controls how many requests a single instance may handle at once: with concurrency set to 1, each instance processes one request at a time; with concurrency set to 80, one instance can process up to 80 simultaneous requests. When incoming requests exceed what the running instances can handle at that limit, Cloud Run automatically creates additional instances to absorb the load.
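The relationship between concurrency and instance count can be approximated with ceiling division: the minimum number of instances is the number of in-flight requests divided by the concurrency limit, rounded up. This is a simplified sketch, not Cloud Run's actual autoscaler, which also weighs factors such as CPU utilization, instance startup, and any maximum-instances limit you configure. The function name `instances_needed` is hypothetical.

```python
def instances_needed(in_flight: int, concurrency: int) -> int:
    """Smallest instance count that keeps every instance at or below
    `concurrency` simultaneous requests (simplified model)."""
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    if in_flight <= 0:
        # No traffic: with no minimum instances configured, the service can scale to zero.
        return 0
    # Integer ceiling division, equivalent to ceil(in_flight / concurrency).
    return -(-in_flight // concurrency)


# Compare the two concurrency settings from the text for 200 simultaneous requests.
for concurrency in (1, 80):
    print(f"concurrency={concurrency}: {instances_needed(200, concurrency)} instance(s)")
```

For 200 simultaneous requests, this prints 200 instances for concurrency 1 and 3 instances for concurrency 80: a higher concurrency setting lets fewer instances absorb the same traffic, at the cost of more simultaneous work per instance.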

This scalable approach lets your applications respond to traffic spikes without manual intervention. It also fits naturally into a microservices architecture, where individual containerized services can be deployed and managed independently.

The diagram above illustrates how Cloud Run manages request handling and scaling under different concurrency settings.

Warning

Before migrating to Cloud Run for production workloads, ensure that the serverless model fits your application's performance requirements, as the abstraction could limit certain advanced configuration options available in GKE.

In summary, Cloud Run offers a streamlined path to deploy container images, evaluate application performance, and handle dynamic scaling—all while minimizing ongoing resource costs. This makes it an excellent choice for developers seeking a flexible, cost-effective deployment platform on Google Cloud Platform.

Thank you for reading.
