Create ArgoCD Project

In this guide, you'll learn how to create a custom ArgoCD project that enforces specific restrictions on source repositories and cluster-level resources. We begin by reviewing the default project configuration, then move on to creating and configuring a custom project, and finally deploy and synchronize an application to validate the restrictions.

Reviewing the Default Project

Before implementing a custom project, it is important to understand how the default project is configured. The default project is highly permissive: it allows deployments to any destination cluster and namespace, with no restrictions on source repositories or resource kinds.

Run the following command to list the projects:

argocd proj list

The output shows that the default project accepts any source (*) and any destination (*,*), with no restrictions on cluster-level or namespace-level resources:

NAME     DESCRIPTION  DESTINATIONS  SOURCES  CLUSTER-RESOURCE-WHITELIST  NAMESPACE-RESOURCE-BLACKLIST  SIGNATURE-KEYS  ORPHANED-RESOURCES
default               *,*           *        */*                         <none>                        <none>          disabled

Note

The default project does not limit which clusters or namespaces can be deployed to, nor does it restrict source repositories. This open configuration may not be suitable for every production environment.
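To make the note above concrete, the following sketch prints the spec that corresponds to the wildcard columns in the table (sources *, destinations *,*, cluster whitelist */*). It is an illustration based on the default project definition in the ArgoCD documentation, not output captured from this cluster; the variable name DEFAULT_SPEC is only a placeholder.

```shell
# Spec of a fully permissive project, matching the wildcard columns above.
# Illustrative only: compare it with the live object using
#   kubectl -n argocd get appproj default -o yaml
DEFAULT_SPEC=$(cat <<'EOF'
spec:
  sourceRepos:
  - '*'
  destinations:
  - namespace: '*'
    server: '*'
  clusterResourceWhitelist:
  - group: '*'
    kind: '*'
EOF
)

printf '%s\n' "$DEFAULT_SPEC"
```

Each wildcard here is a setting that the custom project in this guide replaces with an explicit value.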

Checking Applications and Projects

You may also want to verify the current applications and projects using the Kubernetes CLI within the argocd namespace. The commands below use k as a shell alias for kubectl.

To list all applications:

k -n argocd get applications

Output:

NAME                 SYNC STATUS   HEALTH STATUS
solar-system-app-2   Synced        Healthy

To list the projects available in ArgoCD:

k -n argocd get appproj

Output:

NAME      AGE
default   102m

Creating a Custom Project

Next, we will create a custom project that restricts the allowed source repositories and controls which cluster-level resources can be deployed. You can create the project using either the UI or the CLI. Before it exists, argocd proj list and k -n argocd get appproj show only the default project, and solar-system-app-2 is the only application.

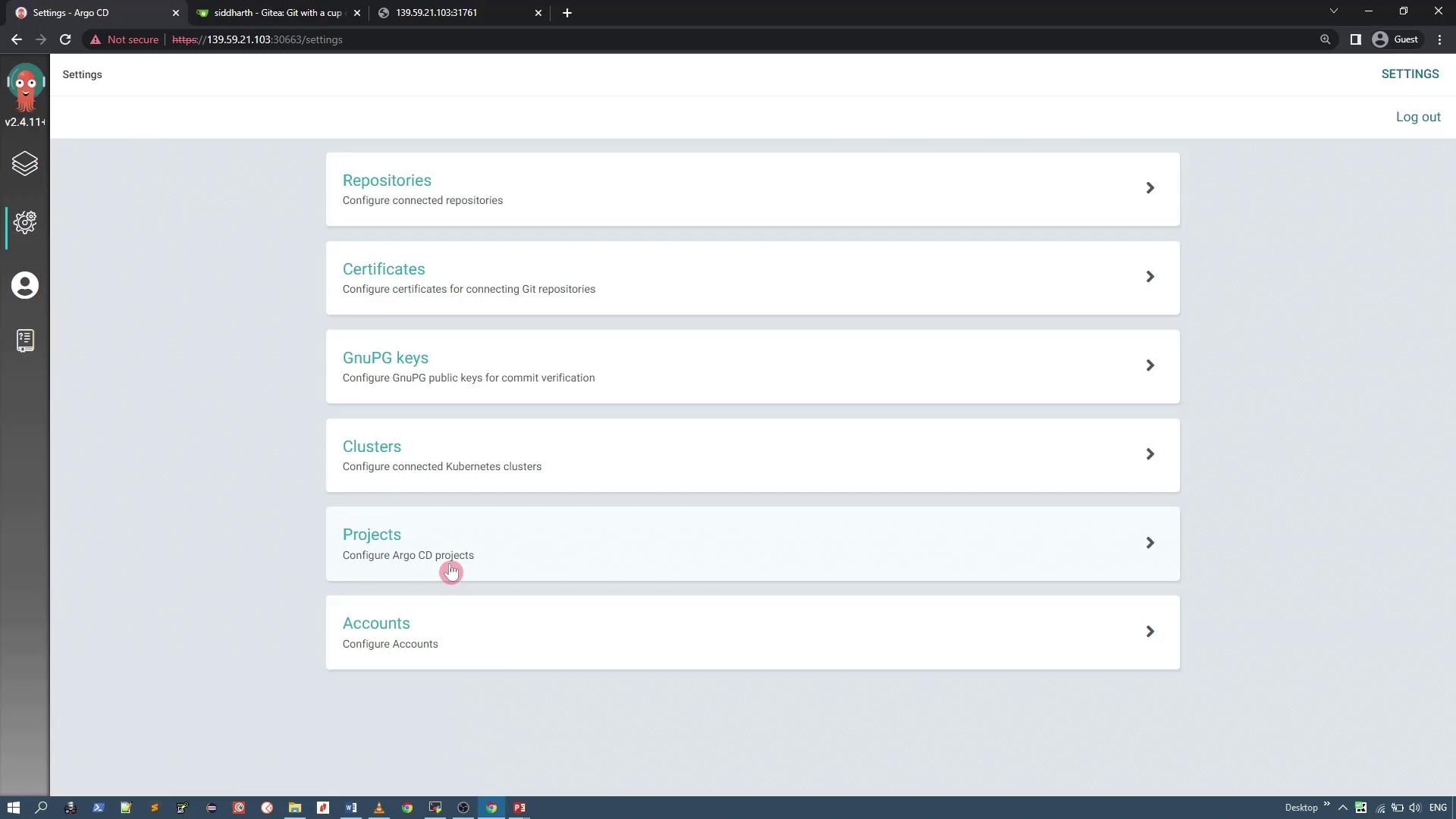

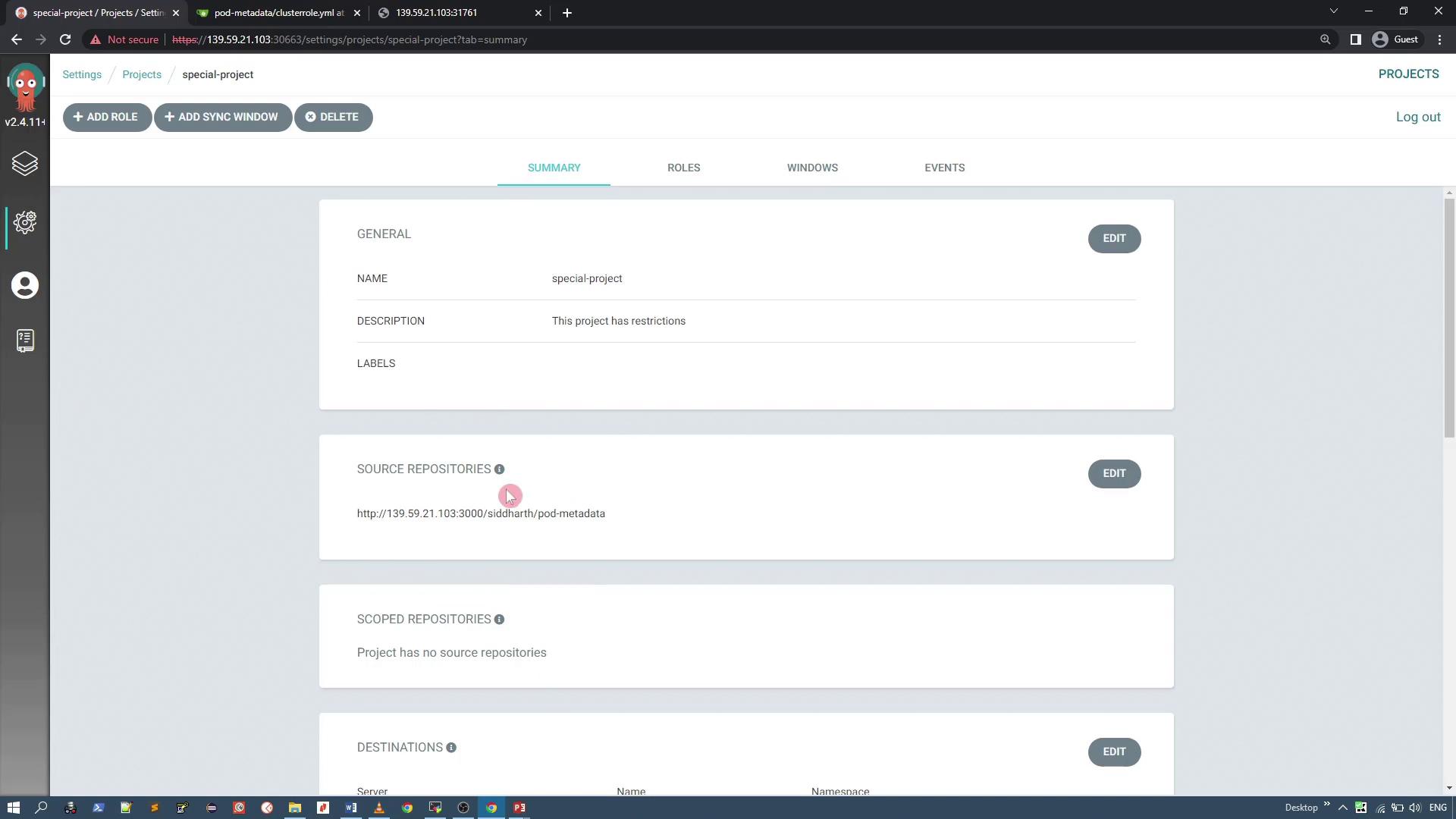

Configuring the Custom Project via the UI

To start, open the ArgoCD user interface and navigate to the Projects configuration via the sidebar. You will see the default project configuration listed. Click on "Create a new project" to begin setting up your custom project, for instance named "special-project". Provide a clear description outlining the restrictions that will be applied.

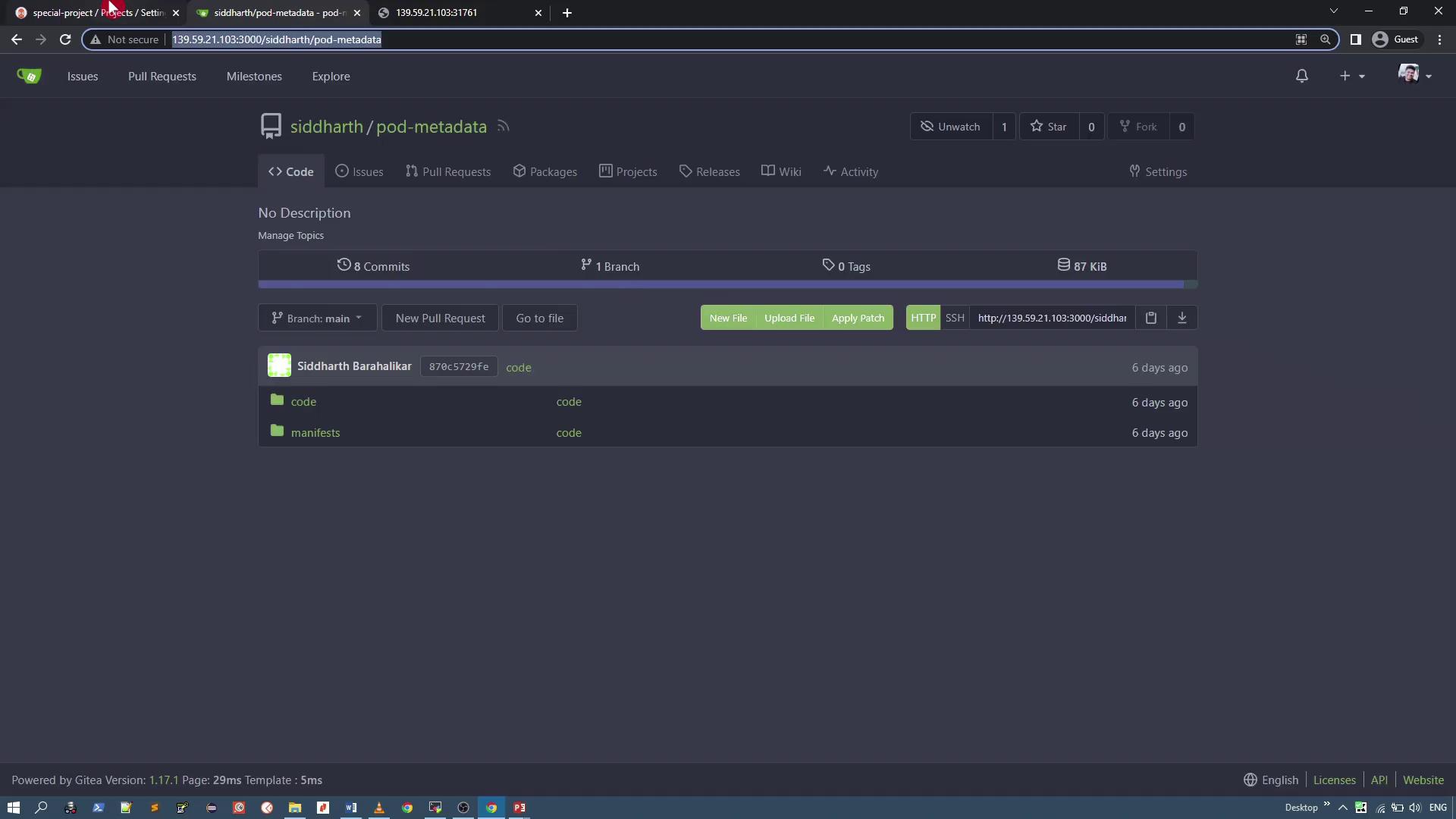

Setting Source Repository Restrictions

Within the project settings, limit the allowed source repositories by replacing the default wildcard (*) with the URL of the Git repository that holds the pod-metadata manifests (http://139.59.21.103:3000/siddharth/pod-metadata in this example):

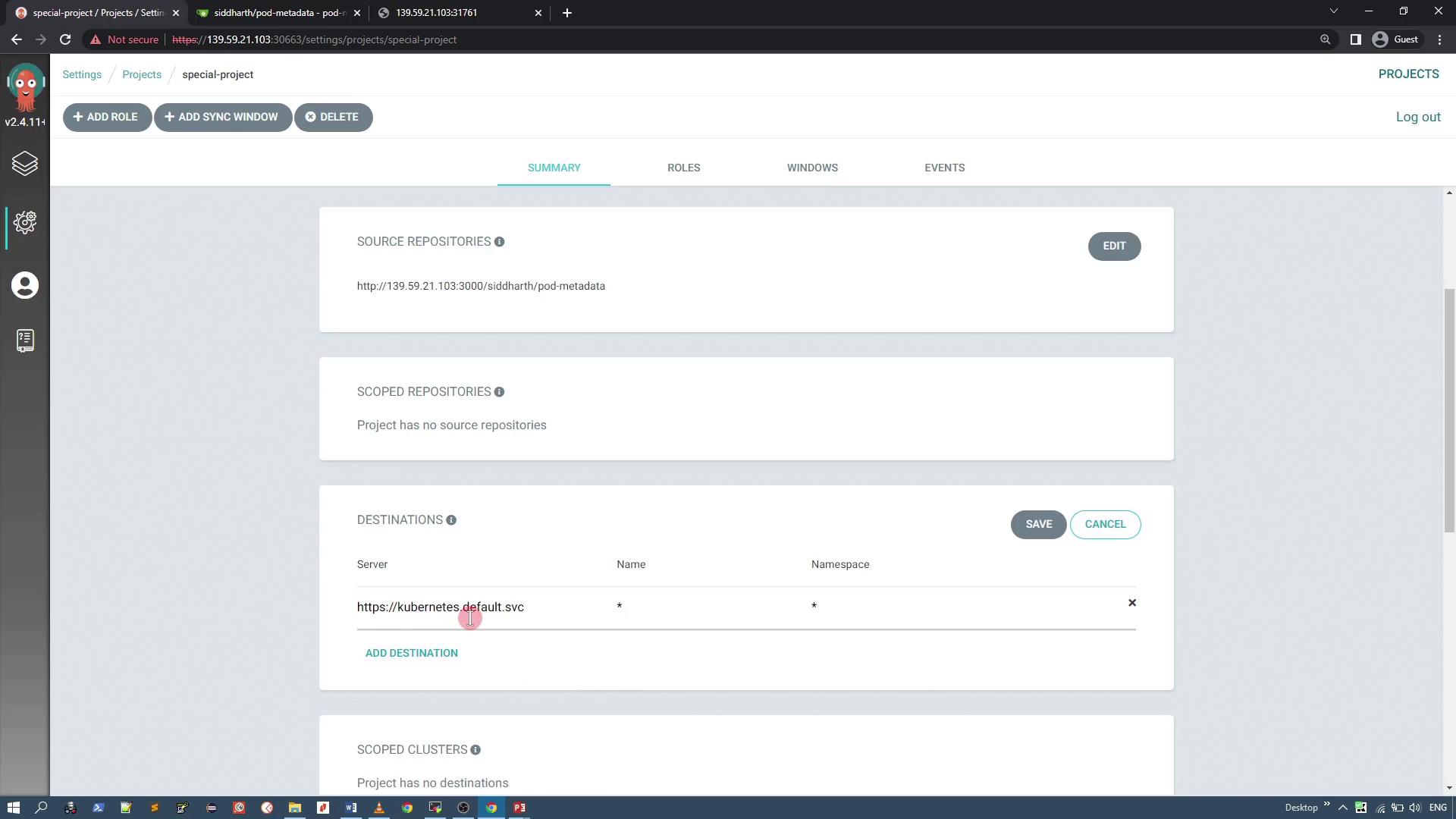

Defining Deployment Destinations

Under the Destinations section, restrict deployments to the current cluster by specifying its server URL (e.g., https://kubernetes.default.svc). You may keep the namespace wildcard (*) to allow flexible namespace usage.

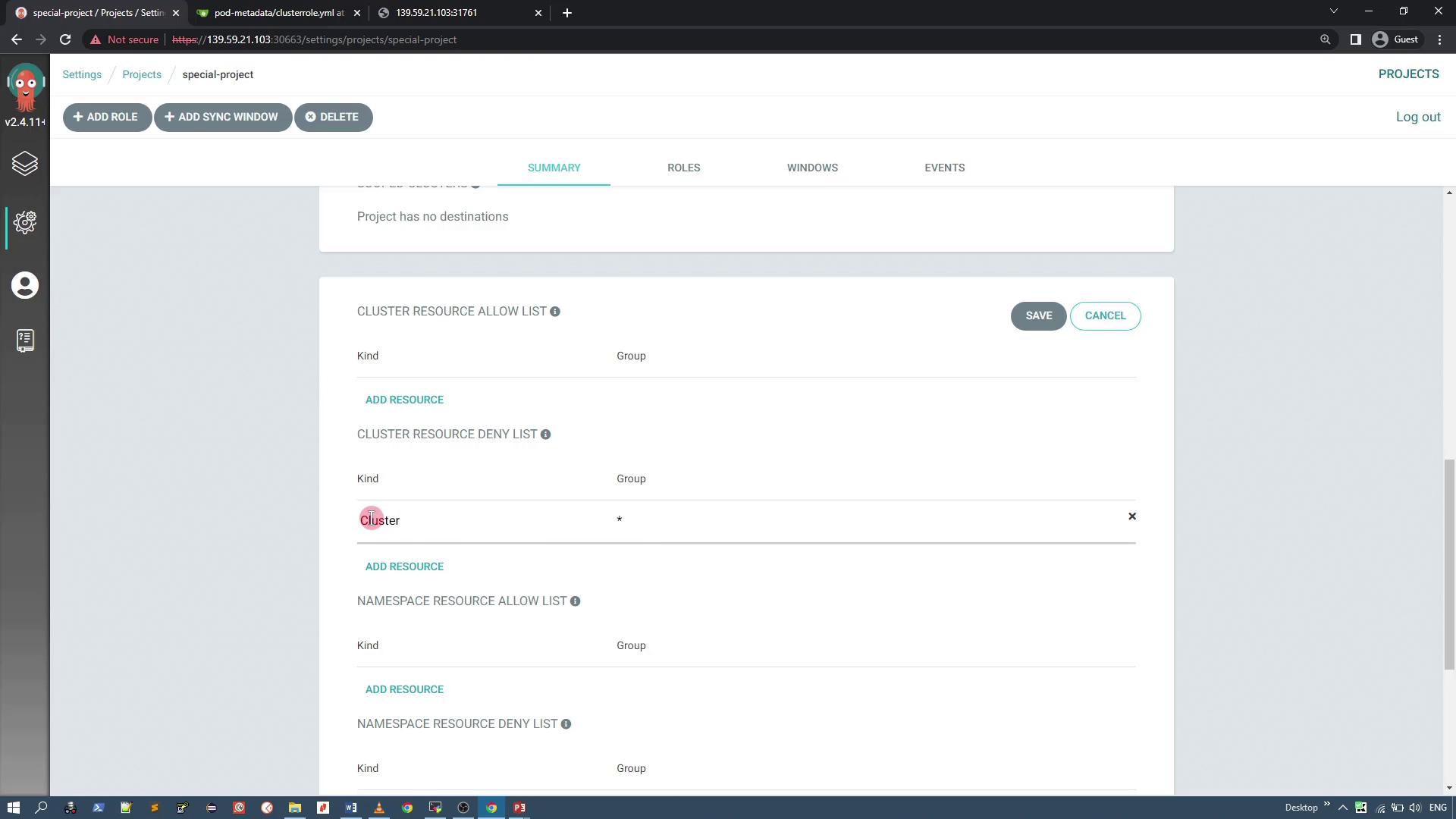
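The UI steps so far (naming the project, restricting the source repository, restricting the destination) can also be sketched with the argocd CLI. This is a minimal sketch, assuming the CLI is installed and already logged in to the ArgoCD server; the variable names are illustrative, and the guard only skips the call when the CLI is not available.

```shell
# Values taken from this guide; the variable names themselves are illustrative.
PROJECT="special-project"
REPO_URL="http://139.59.21.103:3000/siddharth/pod-metadata"
DEST_SERVER="https://kubernetes.default.svc"

if command -v argocd >/dev/null 2>&1; then
  # --src and --dest may be repeated; --dest takes "server,namespace".
  argocd proj create "$PROJECT" \
    --description "This project has restrictions" \
    --src "$REPO_URL" \
    --dest "$DEST_SERVER,*"
else
  echo "argocd CLI not found; nothing was created" >&2
fi
```

The namespace part of --dest is left as a wildcard, matching the choice made in the UI.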

Configuring Resource Denial

To enhance security, configure the project to deny certain cluster-level resources. In this example, we restrict ClusterRole resources to prevent unauthorized full-access permissions. Suppose you have a manifest for a ClusterRole resource as shown below:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  creationTimestamp: null
  name: pod-master
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - "*"

Add an entry to the project’s deny list for ClusterRole resources. When editing, select "ClusterRole" as the kind and leave the group field empty.

After saving your settings, review the new project summary in the UI.
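The same project can also be kept declaratively as an AppProject manifest, which fits a GitOps workflow. The sketch below writes such a manifest to a file; the file name is an assumption, and the field values mirror what this guide configures in the UI. Note that the empty group copies the setting used in this guide, while ClusterRole is served by the rbac.authorization.k8s.io API group, so that value is the one to try if the deny rule does not match in your ArgoCD version.

```shell
# Write an AppProject manifest equivalent to the UI configuration.
# The file name special-project.yaml is an arbitrary choice.
cat > special-project.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: special-project
  namespace: argocd
spec:
  description: This project has restrictions
  sourceRepos:
  - http://139.59.21.103:3000/siddharth/pod-metadata
  destinations:
  - server: https://kubernetes.default.svc
    namespace: '*'
  clusterResourceBlacklist:
  # Empty group as used in this guide; ClusterRole itself belongs to the
  # rbac.authorization.k8s.io group, which may be needed here instead.
  - group: ''
    kind: ClusterRole
EOF

# To apply it (requires cluster access):
#   kubectl apply -f special-project.yaml
```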

Verifying the Custom Project via CLI

After configuration, verify that the new "special-project" has been created alongside the default project using:

argocd proj list

Expected output:

NAME             DESCRIPTION                    DESTINATIONS                      SOURCES                                           CLUSTER-RESOURCE-WHITELIST  NAMESPACE-RESOURCE-BLACKLIST  SIGNATURE-KEYS  ORPHANED-RESOURCES
default                                         *,*                               *                                                 */*                         <none>                        <none>          disabled
special-project  This project has restrictions  https://kubernetes.default.svc,*  http://139.59.21.103:3000/siddharth/pod-metadata  <none>                      <none>                        <none>          disabled

To view detailed configuration in YAML format, run:

argocd proj get special-project -o yaml

Below is an example snippet of the YAML output:

metadata:
  creationTimestamp: "2022-09-23T15:44:52Z"
  generation: 4
  name: special-project
  namespace: argocd
spec:
  clusterResourceBlacklist:
  - group: ''
    kind: 'ClusterRole'
  description: This project has restrictions
  destinations:
  - name: in-cluster
    namespace: '*'
    server: https://kubernetes.default.svc
  sourceRepos:
  - http://139.59.21.103:3000/siddharth/pod-metadata
status: {}

Rerun argocd proj get special-project -o yaml after any later update to confirm that the change took effect.

Important

Ensure that all fields are correctly populated. For instance, the empty group in the ClusterRole deny entry must appear in the YAML as a quoted empty string ('').

Creating and Testing an Application with the Custom Project

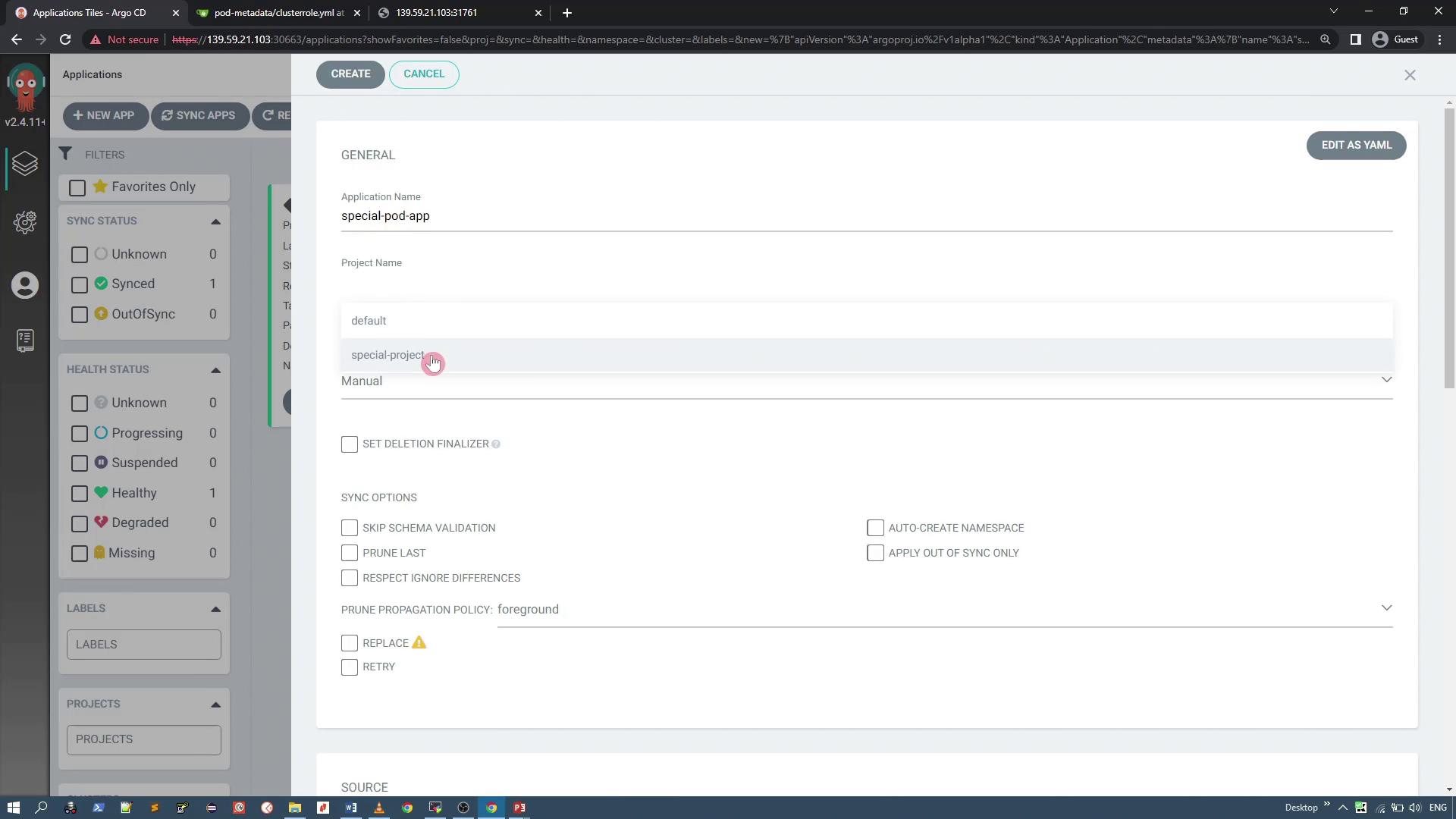

Now, create an application associated with the "special-project" custom project. When creating the application (e.g., "special-pod-app"), verify that the connected repository is correct. Set the sync policy to manual and enable automatic namespace creation so that the target namespace is created if it does not already exist.

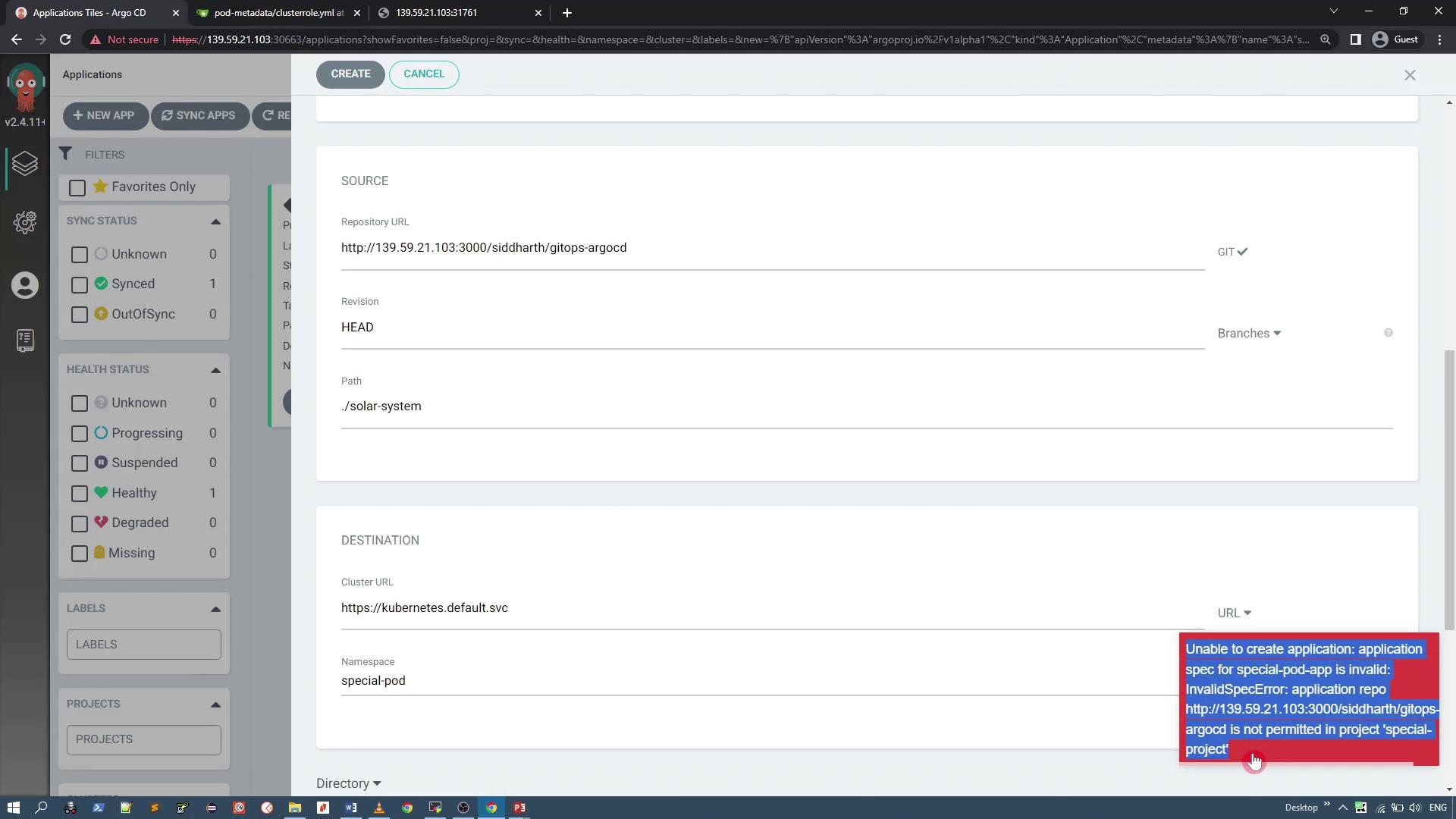

If you try deploying an application from a disallowed repository, an error will appear similar to the following:

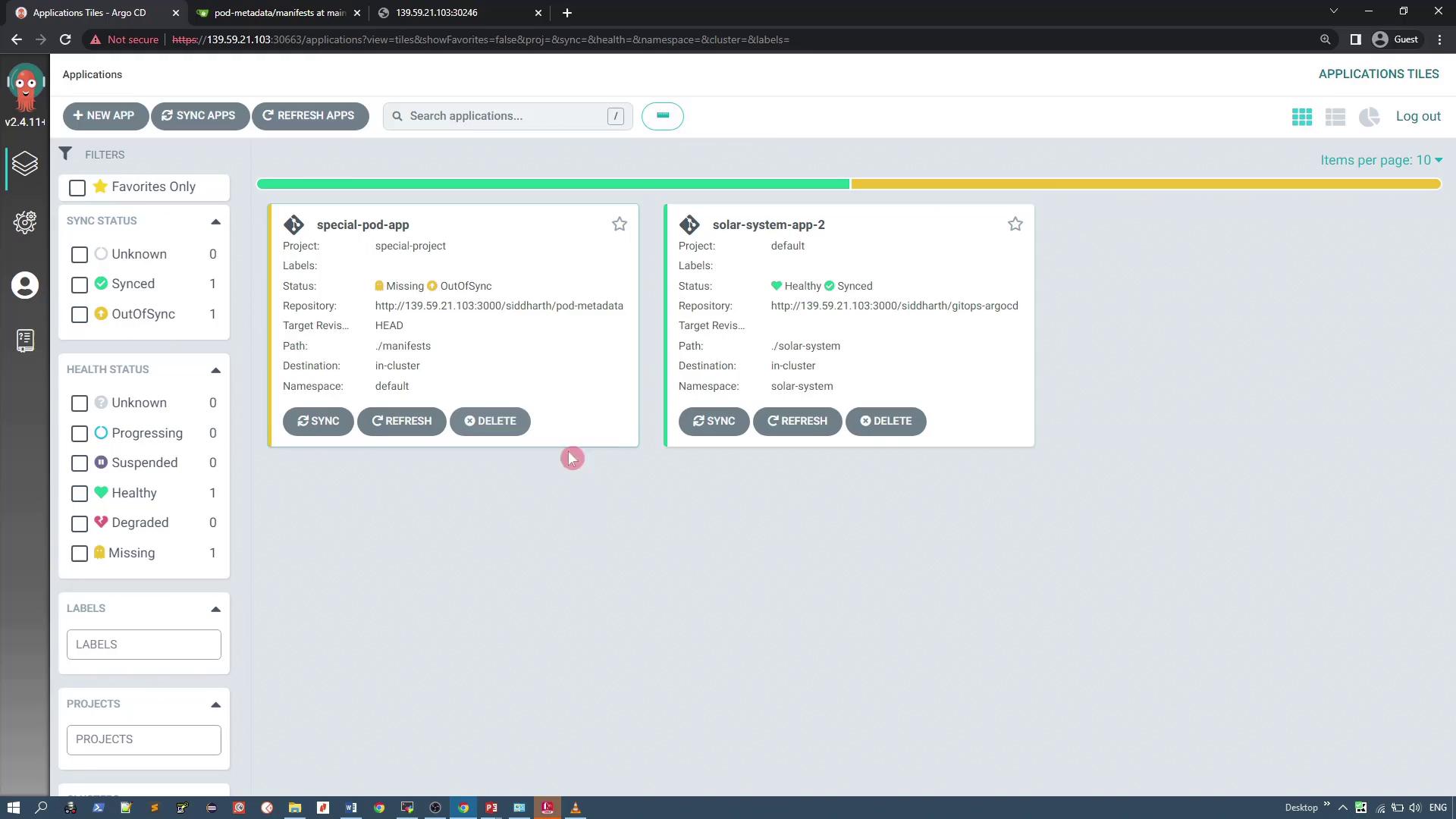

Once you select the approved pod metadata repository and set the application manifest path (e.g., "manifest"), click "Create." The application should now appear in the dashboard.

The ArgoCD dashboard will display both "special-pod-app" and the previously existing "solar-system-app-2":

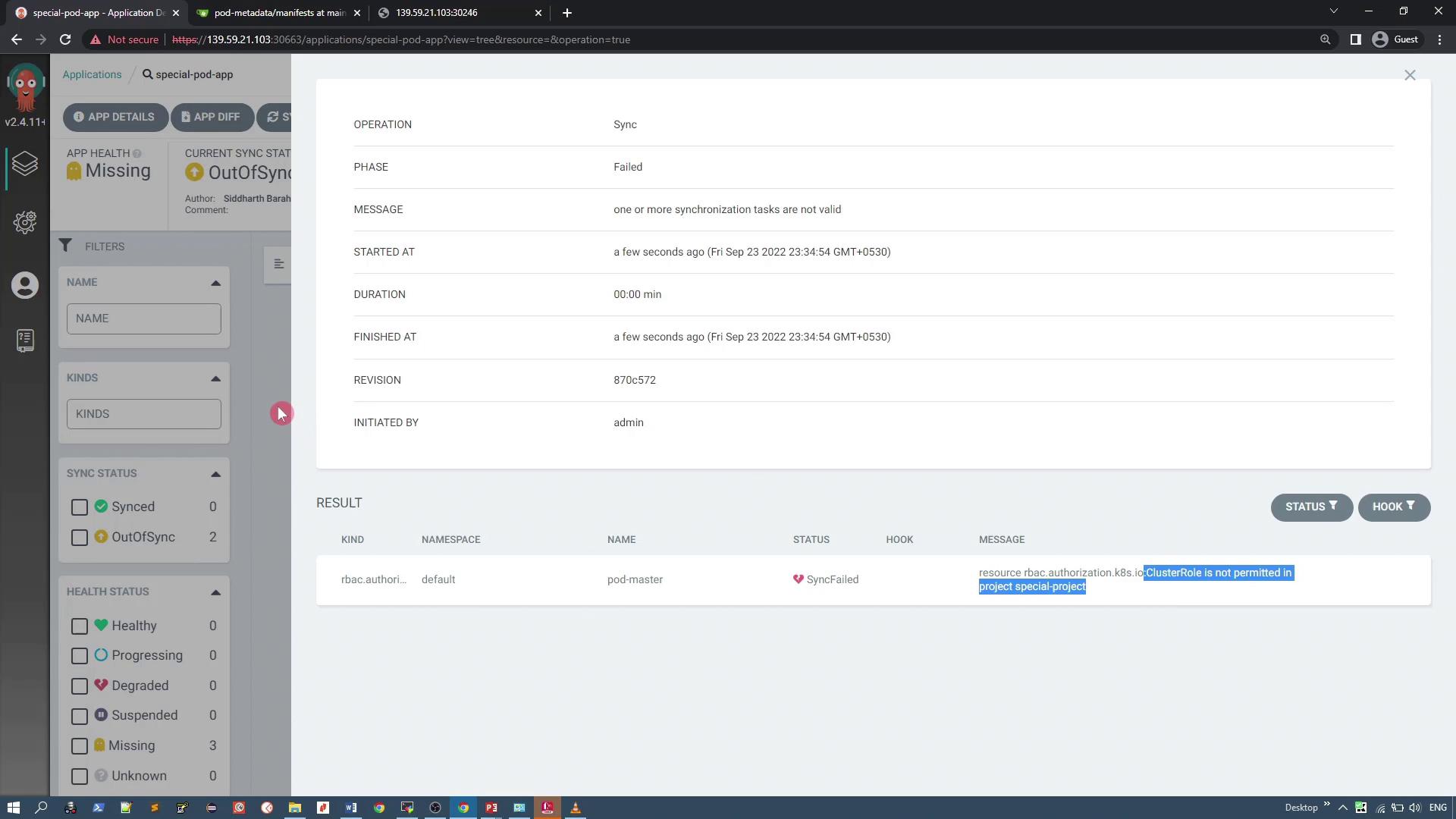
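For reference, the application created in the UI could also be described as an Application manifest. The sketch below writes one to a file. The file name, the targetRevision of HEAD, and the destination namespace default (taken from the service manifest shown later in this guide) are assumptions; leaving out an automated sync policy keeps syncing manual.

```shell
# Write an Application manifest bound to the restricted project.
# File name, targetRevision, and destination namespace are assumptions.
cat > special-pod-app.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: special-pod-app
  namespace: argocd
spec:
  project: special-project
  source:
    repoURL: http://139.59.21.103:3000/siddharth/pod-metadata
    path: manifest
    targetRevision: HEAD
  destination:
    server: https://kubernetes.default.svc
    namespace: default
  syncPolicy:
    # No "automated" block, so the application syncs only on request.
    syncOptions:
    - CreateNamespace=true
EOF

# To apply it (requires cluster access):
#   kubectl apply -f special-pod-app.yaml
```

If repoURL pointed at a repository that the project does not list under its sources, ArgoCD would be expected to reject the application, just as the UI does.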

Synchronizing the Application and Handling Denied Resources

To deploy the application, click "Sync" for "special-pod-app." During synchronization, ArgoCD will try to apply all resources defined in the repository—including a ClusterRole resource. Since the custom project denies ClusterRole resources, the sync will fail with an error indicating that the resource is not permitted.

To resolve this, deselect the ClusterRole resource during the sync operation. This will deploy the remaining resources (the deployment and service) successfully. After resynchronizing, "special-pod-app" should deploy the pod metadata application and expose it via the configured service.

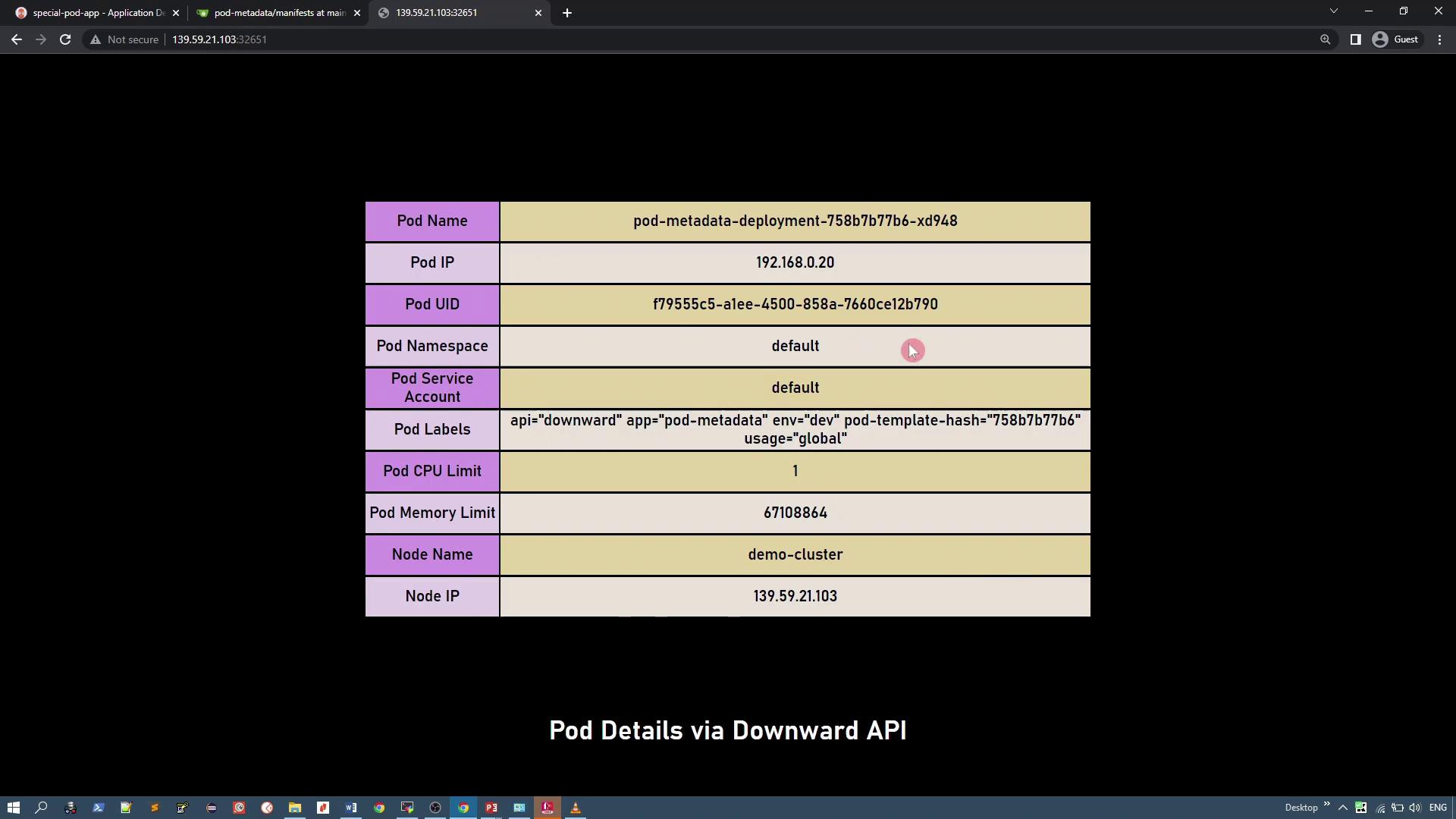
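Deselecting the ClusterRole in the UI corresponds to a selective sync on the command line. The following is a sketch, assuming the argocd CLI is installed and logged in. The service name comes from the manifest in this guide, but the deployment name is hypothetical because the guide does not state it; adjust it to the name in your repository.

```shell
APP="special-pod-app"
SERVICE="pod-metadata-service"
DEPLOYMENT="pod-metadata"   # hypothetical name, not given in this guide

if command -v argocd >/dev/null 2>&1; then
  # --resource takes GROUP:KIND:NAME; the group is blank for core kinds
  # such as Service. The ClusterRole is simply not listed.
  argocd app sync "$APP" \
    --resource "apps:Deployment:$DEPLOYMENT" \
    --resource ":Service:$SERVICE"
else
  echo "argocd CLI not found; sync skipped" >&2
fi
```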

Application Details

Upon successful synchronization, the application deploys a simple PHP application that displays pod details (such as pod IP, pod name, UID, CPU requests, etc.) using the Kubernetes Downward API.

For reference, here is part of the deployment manifest:

replicas: 1
revisionHistoryLimit: 10
selector:
  matchLabels:
    app: pod-metadata
strategy:
  rollingUpdate:
    maxSurge: 25%
    maxUnavailable: 25%
  type: RollingUpdate
template:
  metadata:
    labels:
      api: downward
      app: pod-metadata
      env: dev
      usage: global
  spec:
    containers:
    - name: test-container
      env:
      - name: MY_NODE_IP
        valueFrom:
          fieldRef:
            fieldPath: status.hostIP
      - name: MY_POD_ID
        valueFrom:
          fieldRef:
            fieldPath: status.podIP
      - name: MY_POD_UID
        valueFrom:
          fieldRef:
            fieldPath: metadata.uid
      - name: MY_CPU_REQUEST
        valueFrom:
          resourceFieldRef:
            containerName: test-container
            resource: requests.cpu
      - name: MY_CPU_LIMIT
        valueFrom:
          resourceFieldRef:
            containerName: test-container
            resource: limits.cpu

The following service manifest exposes the application via a node port (e.g., 32651):

apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"pod-metadata"},"name":"pod-metadata-service","namespace":"default"},"spec":{"clusterIP":"10.107.42.62","clusterIPs":["10.107.42.62"],"externalTrafficPolicy":"Cluster","internalTrafficPolicy":"Cluster","ipFamilies":["IPv4"],"ipFamilyPolicy":"SingleStack","ports":[{"nodePort":32651,"port":80,"protocol":"TCP","targetPort":80}],"selector":{"app":"pod-metadata"}}}
  creationTimestamp: "2022-09-23T18:05:38Z"
  labels:
    app: pod-metadata
  name: pod-metadata-service
  namespace: default
spec:
  clusterIP: 10.107.42.62
  clusterIPs:
  - 10.107.42.62
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - nodePort: 32651
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: pod-metadata

After deployment, access the application via node port 32651 to view pod details, including pod name, IP, UID, host IP, and other metadata retrieved via the Downward API.
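A quick way to check the exposed page from a terminal is to request it with curl. This is only a sketch: NODE_IP is a placeholder that you would set to the address of one of your cluster nodes, and the fallback value is there solely so the snippet runs without it.

```shell
# NODE_IP is a placeholder; export it with a real node address first.
NODE_IP="${NODE_IP:-127.0.0.1}"
NODE_PORT=32651
URL="http://${NODE_IP}:${NODE_PORT}/"

if command -v curl >/dev/null 2>&1; then
  # -f fails on HTTP errors, -sS hides progress but still shows errors.
  curl -fsS --max-time 5 "$URL" || echo "could not reach $URL" >&2
else
  echo "curl not found; open $URL in a browser instead" >&2
fi
```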

Summary

In this lesson, you have learned how to:

- Review the default ArgoCD project configuration.

- Create a custom project with restrictions on source repositories and cluster-level resource deployment.

- Configure project settings via the UI by whitelisting an approved repository and denying ClusterRole resources.

- Deploy an application using the custom project, address synchronization errors due to denied resources, and verify a successful deployment of allowed resources.

Implementing project restrictions in ArgoCD allows you to enforce fine-grained control over application deployments, ensuring that only authorized resources and repositories are used.

Thank you.
