HPA Multiple Metrics

Welcome to this guide on using the Kubernetes Horizontal Pod Autoscaler (HPA) with both built-in resource metrics and custom metrics. Combining CPU utilization with application-specific indicators, such as request rate or transaction count, lets your workloads scale on the signals that actually track demand during spikes.

Use Case: E-commerce Platform

Consider a high-traffic e-commerce site where shoppers browse items, add them to carts, and complete purchases. Traffic surges during promotions make CPU and memory alone insufficient signals. You need application metrics (e.g., requests per second, cart size) to scale effectively.

Microservices and Independent Scaling

Your platform runs three microservices:

- Catalog Service: Handles product browsing

- Cart Service: Manages cart operations

- Checkout Service: Processes transactions

Each is a separate Deployment. To know when to scale the Catalog Service, monitor both CPU utilization and incoming request rate.

Exposing Custom Metrics with Prometheus

- Instrument your application to expose HTTP request metrics (e.g., active_http_requests).

- Deploy Prometheus in-cluster to scrape those metrics.

Note

Ensure your service exports metrics in the Prometheus text format, typically on a /metrics endpoint, using a client library (e.g., prometheus-client for Python).
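To make the exposition format concrete, here is a minimal sketch of a gauge that tracks in-flight requests and renders itself as Prometheus text. It uses only the Python standard library, and the `ActiveRequestGauge` class is a hypothetical helper written for illustration. In a real service you would most likely rely on a Prometheus client library instead of hand-rolling this.

```python
import threading


class ActiveRequestGauge:
    """Thread-safe gauge for in-flight HTTP requests (hypothetical helper)."""

    def __init__(self, name: str, help_text: str) -> None:
        self.name = name
        self.help_text = help_text
        self._value = 0
        self._lock = threading.Lock()

    def inc(self) -> None:
        # Call when a request starts.
        with self._lock:
            self._value += 1

    def dec(self) -> None:
        # Call when a request finishes.
        with self._lock:
            self._value -= 1

    def render(self) -> str:
        """Return the gauge in the Prometheus text exposition format."""
        with self._lock:
            value = self._value
        return (
            f"# HELP {self.name} {self.help_text}\n"
            f"# TYPE {self.name} gauge\n"
            f"{self.name} {value}\n"
        )


gauge = ActiveRequestGauge("active_http_requests", "In-flight HTTP requests")
gauge.inc()  # request 1 starts
gauge.inc()  # request 2 starts
gauge.dec()  # request 1 finishes
print(gauge.render(), end="")
# Output:
# # HELP active_http_requests In-flight HTTP requests
# # TYPE active_http_requests gauge
# active_http_requests 1
```

The string returned by `render()` is what the service would serve to Prometheus on each scrape. A gauge fits this metric because the number of active requests goes down as well as up.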

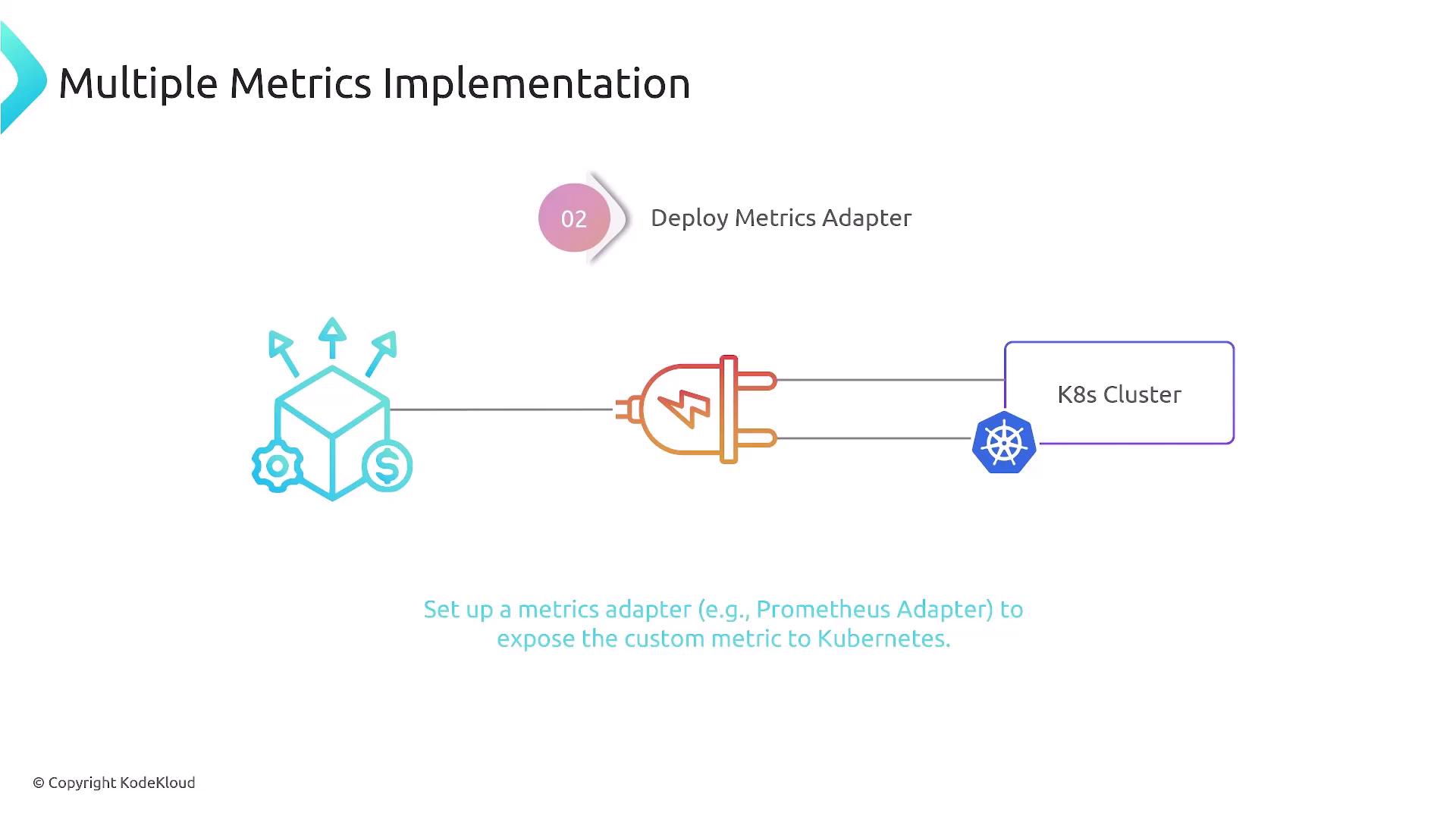

Next, install a metrics adapter, such as the Prometheus Metrics Adapter (the prometheus-adapter project), so Kubernetes can query custom metrics from Prometheus through the custom metrics API (custom.metrics.k8s.io).

Configuring HPA with Multiple Metrics

Below is a sample HorizontalPodAutoscaler manifest that scales the backend-service Deployment by:

- CPU utilization (70% average)

- Active HTTP requests per pod (100 average)

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: backend-service-hpa
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: backend-service
      minReplicas: 2
      maxReplicas: 10
      metrics:
        - type: Resource
          resource:
            name: cpu
            target:
              type: Utilization
              averageUtilization: 70
        - type: Pods
          pods:
            metric:
              name: active_http_requests
            target:
              type: AverageValue
              averageValue: "100"

| Metric Type | Source | Aim |
|---|---|---|
| Resource | Kubernetes Metrics Server | Scale by CPU utilization (70% avg) |
| Pods | Prometheus Metrics Adapter | Scale by HTTP requests (100 avg/pod) |

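The HPA controller computes a desired replica count for each metric independently and scales to the largest of those counts, so the most demanding metric determines the result (within the minReplicas and maxReplicas bounds).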
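The selection logic can be sketched in a few lines of Python. This is a simplified model based on the formula in the Kubernetes documentation, desiredReplicas = ceil(currentReplicas × currentValue / targetValue), with the default 10% tolerance. The function names and sample numbers are illustrative assumptions, and the real controller also accounts for missing metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_value, target_value, tolerance=0.1):
    """Replica count proposed by a single metric (simplified model)."""
    ratio = current_value / target_value
    # Inside the tolerance band the controller leaves the count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


def hpa_recommendation(current_replicas, metrics, min_replicas, max_replicas):
    """Take the largest per-metric proposal, then clamp to the HPA bounds.

    `metrics` maps a metric name to a (current_value, target_value) pair.
    """
    proposals = [
        desired_replicas(current_replicas, current, target)
        for current, target in metrics.values()
    ]
    return max(min_replicas, min(max_replicas, max(proposals)))


# Assumed sample state: 4 replicas, CPU below target, request load well above it.
metrics = {
    "cpu_utilization_percent": (60, 70),   # proposes ceil(4 * 60/70)  = 4
    "active_http_requests": (180, 100),    # proposes ceil(4 * 180/100) = 8
}
print(hpa_recommendation(4, metrics, min_replicas=2, max_replicas=10))  # prints 8
```

In this example CPU alone would keep the Deployment at 4 replicas, while the request metric pushes it to 8. That gap is the case the second metric is meant to catch.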

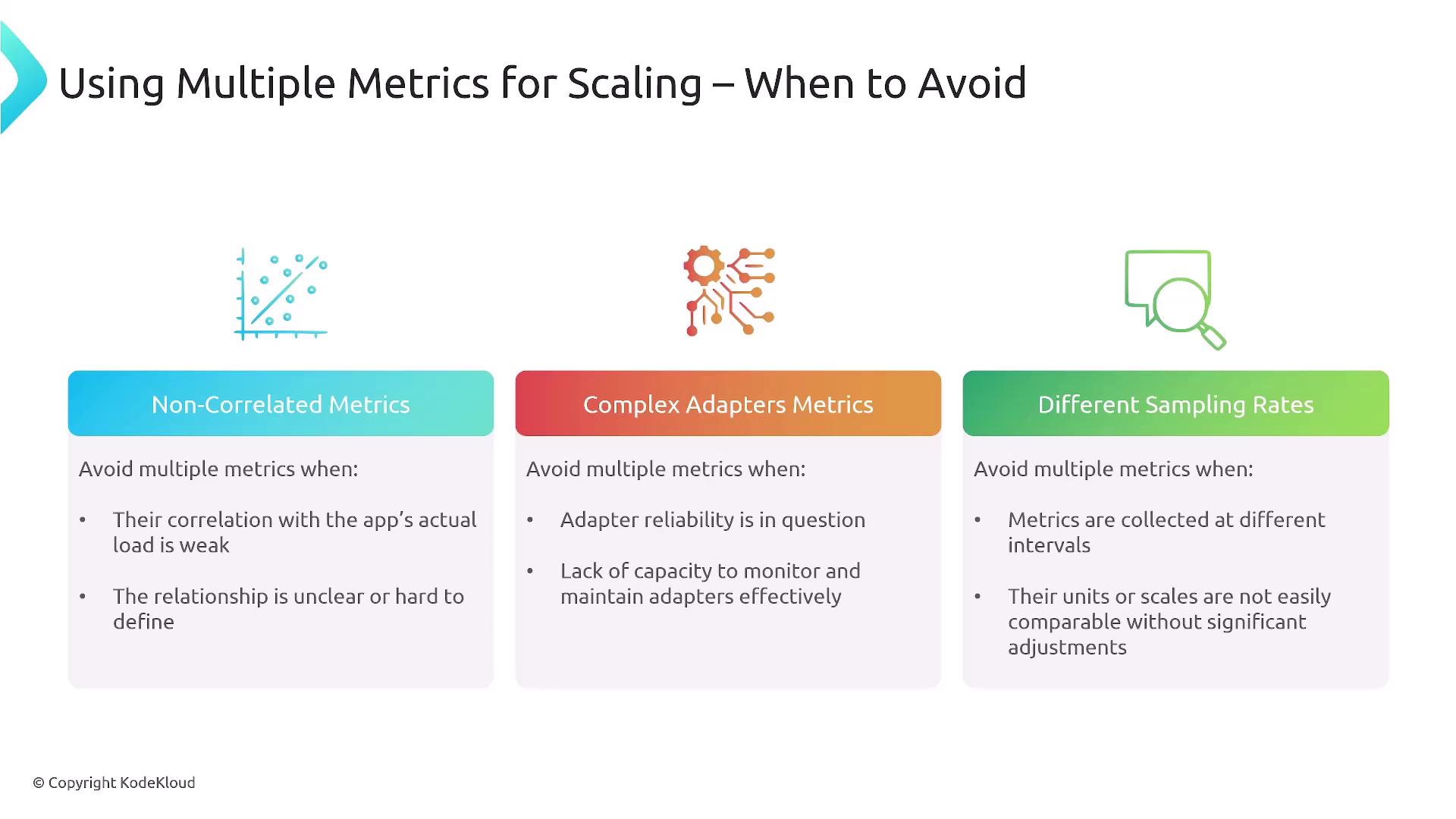

When to Avoid Multiple Metrics

While powerful, multiple-metric scaling adds complexity. Think twice before using it if you are dealing with:

- Metrics that are poorly correlated with real load

- Unreliable or hard-to-maintain adapters

- Disparate sampling rates or units

Warning

Mixing metrics with different collection intervals (e.g., 1s vs. 10m) can cause erratic scaling. Validate correlation before production.

Balancing simplicity and accuracy is key. With the right metrics and adapters, HPA can keep your microservices responsive and cost-efficient.
