Recap of Kubernetes Network Policies

By default, Kubernetes pods communicate without restrictions, which can expose applications to unintended traffic flows. Network policies let you explicitly permit or deny the following traffic flows:

- Pod-to-Pod

- Pod-to-Service

- Pod-to-Namespace

Network policies enhance cluster security by defining clear ingress and egress rules. Always start with a default deny posture in production.

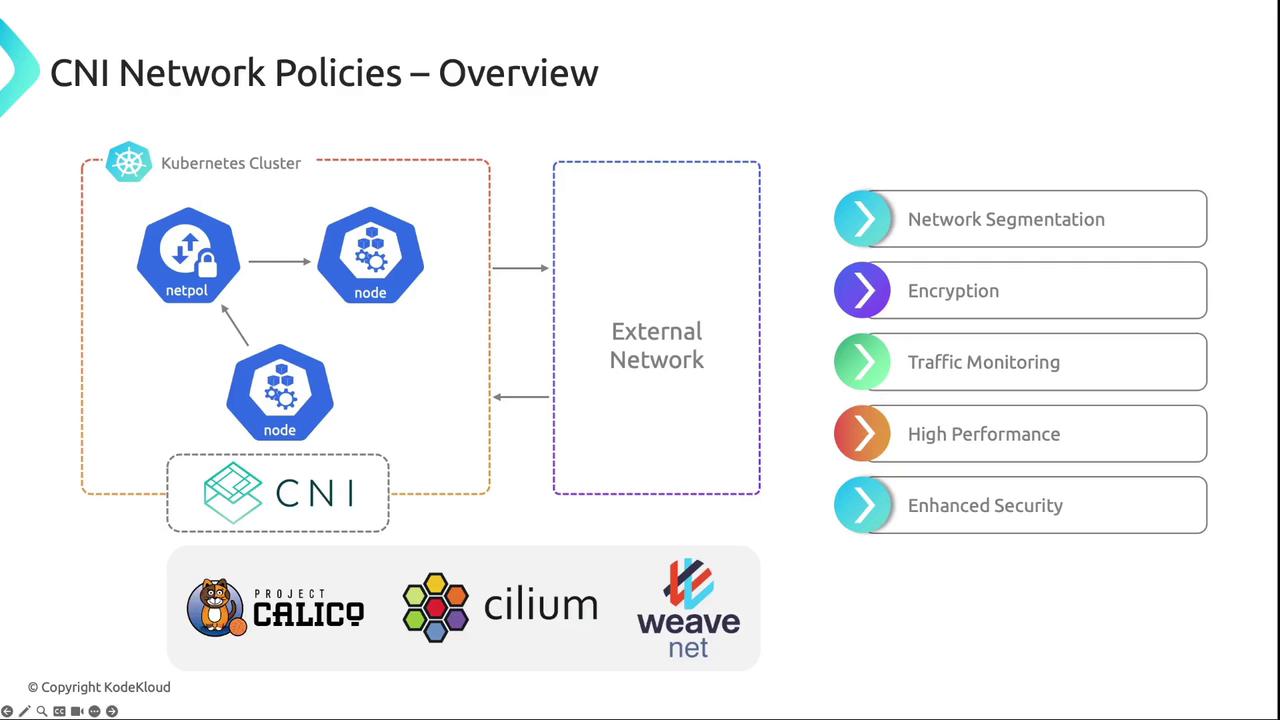
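As a minimal sketch of the default deny posture mentioned above, the standard Kubernetes `NetworkPolicy` below selects every pod in one namespace and allows nothing in either direction. The policy name and the `demo` namespace are placeholders chosen for illustration, not values from this article.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all      # hypothetical name
  namespace: demo             # hypothetical namespace
spec:
  podSelector: {}             # empty selector matches every pod in the namespace
  policyTypes:                # listing both types with no rules denies both directions
    - Ingress
    - Egress
```

With this in place, each workload needs an additional policy that allows the specific traffic it requires. Enforcement depends on the cluster running a CNI plugin that implements network policies.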

Built-In vs. CNI-Specific Network Policies

Kubernetes ships with basic network policy support, but many CNI plugins extend capabilities with advanced features:

| Feature | Built-In Policy | CNI Plugin Extensions |
|---|---|---|
| Layer 7 Filtering | ✖️ | ✅ HTTP, gRPC, Kafka rules |
| Encryption & Segmentation | ✖️ | ✅ mTLS, IPsec |
| Rate Limiting & Whitelisting | ✖️ | ✅ IP whitelisting/blacklisting, QoS |
| Traffic Monitoring & Analytics | Limited | ✅ Real-time metrics & logs |
| Multi-Cluster Policy Scope | ✖️ | ✅ Global policy management |

Key Benefits of CNI Network Policies

| Benefit | Description |
|---|---|
| Advanced Traffic Control | Fine-grained L3–L7 rules across pods, nodes, external targets |
| Enhanced Security | Intrusion detection, IP whitelisting, rate limiting |
| Performance Optimization | Low-latency, high-throughput networking |
| Extended Scope | Policy enforcement beyond cluster boundaries |
| Customization | Organization-specific rule definitions |
| Segmentation & QoS | Network isolation plus traffic prioritization |
| Real-Time Monitoring | Anomaly detection and live metrics |

Cilium: Our CNI of Choice

Cilium leverages eBPF for efficient enforcement of Layer 3, 4, and 7 policies with minimal performance overhead:

- Layer 7 Visibility (HTTP, gRPC, Kafka)

- Protocol-Aware Filtering (methods, paths, headers)

- Service Mesh Integrations (Istio, Linkerd)

- Multi-Cluster Policy Consistency

- Rich eBPF-Powered Troubleshooting & Metrics

Policy Enforcement Modes

Cilium follows a whitelist model: once a policy selects a pod, traffic in the affected direction is dropped unless a rule permits it. Rules fall into these categories:

- Ingress Policies: Allow traffic into pods based on source IPs, ports, or L7 rules

- Egress Policies: Allow pods to initiate traffic to specified destinations

- Default Deny: Any traffic not explicitly allowed will be blocked
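The default-deny behavior can be sketched with a policy that selects pods but allows nothing. In the example below, the empty egress rule puts the selected pods into egress default deny without permitting any destination. The policy name and the `role=restricted` label are hypothetical.

```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: deny-all-egress       # hypothetical name
spec:
  endpointSelector:
    matchLabels:
      role: restricted        # hypothetical label selecting the pods to lock down
  egress:
    - {}                      # empty rule: enables egress enforcement, allows nothing
```

Replacing `egress` with `ingress` applies the same idea to incoming traffic.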

Rule Structure

Every Cilium policy uses an endpoint selector to target pods via labels. Rules are then built from:

- `fromEndpoints`/`toEndpoints`: Label selectors for source/destination pods

- ports & protocols: Restrict to TCP/UDP ports

- Layer 7 rules: HTTP methods, paths, headers

- CIDR blocks: Allow or exclude specific IP ranges

Example: Comprehensive CiliumNetworkPolicy (L3–L7)

- Ingress: Only HTTP GET on `/public` from pods with `app=frontend`.

- Egress: Only TCP port 3306 to pods with `app=database`.

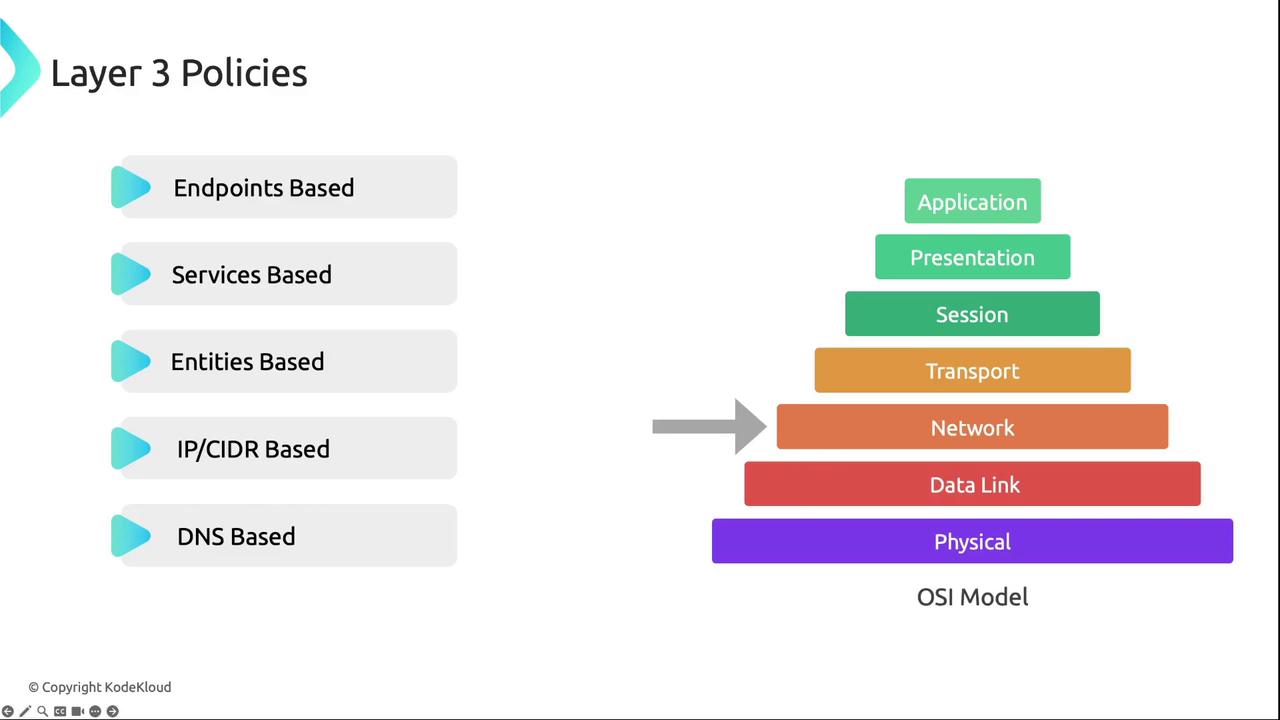
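A policy matching the ingress and egress rules described above might look like the following sketch. The article does not name the pods the policy applies to or the HTTP port, so the `app=backend` selector, port 80, and the policy name are assumptions made for illustration.

```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: l3-l7-example             # hypothetical name
spec:
  endpointSelector:
    matchLabels:
      app: backend                # assumption: the protected pods are not named in the text
  ingress:
    - fromEndpoints:
        - matchLabels:
            app: frontend         # L3: only frontend pods may connect
      toPorts:
        - ports:
            - port: "80"          # assumption: HTTP served on port 80
              protocol: TCP
          rules:
            http:
              - method: "GET"     # L7: only GET requests
                path: "/public"   # L7: only this path
  egress:
    - toEndpoints:
        - matchLabels:
            app: database         # L3: only database pods as destination
      toPorts:
        - ports:
            - port: "3306"        # L4: TCP port 3306 only
              protocol: TCP
```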

Layer 3 Policies

Layer 3 policies define network-layer connectivity without deep packet inspection:

- Endpoints-based: Select pods by labels

- Entities-based: Match built-in identities like `host` or `world`

- DNS-based: Use runtime-resolved DNS names (honoring TTLs)

Examples

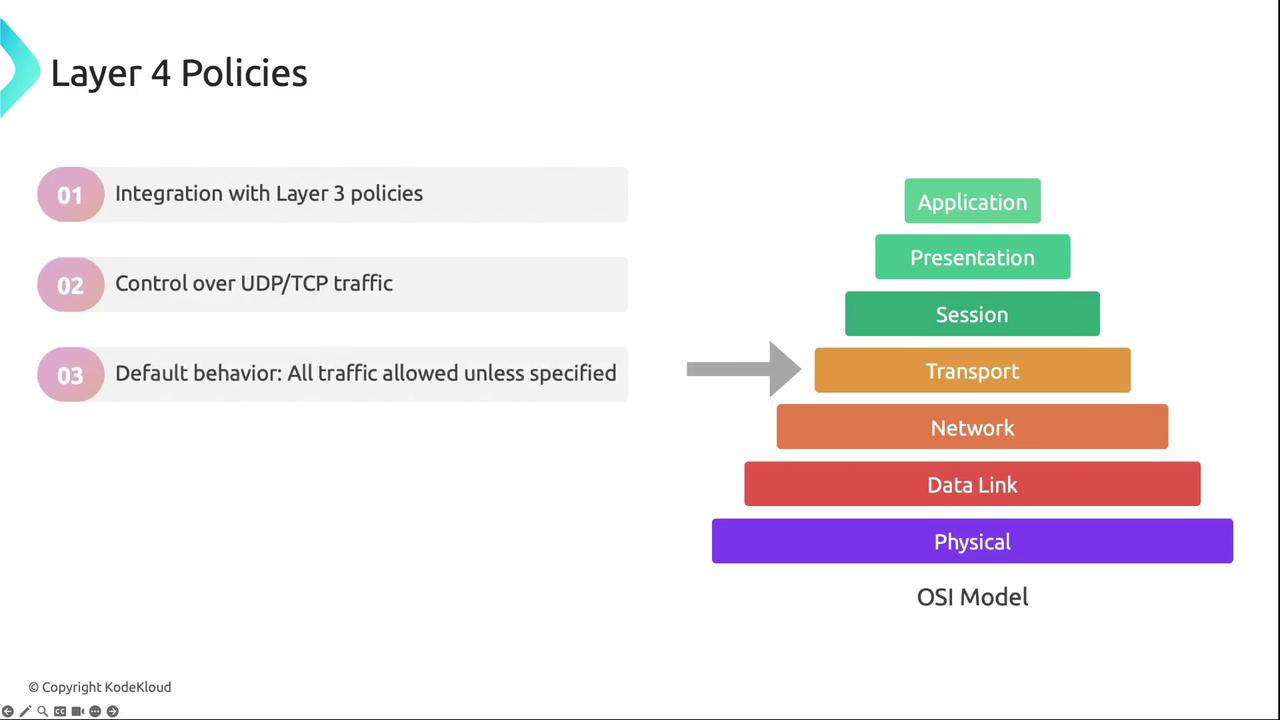
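The three Layer 3 selector styles can be sketched as separate policies. All names, labels, and the domain `example.com` are placeholders. The DNS-based policy also allows DNS lookups through `kube-dns` with a DNS rule, which this sketch assumes is how Cilium learns the resolved IPs in the cluster.

```yaml
# Endpoints-based: backend pods accept traffic only from frontend pods.
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: l3-endpoints-example      # hypothetical name
spec:
  endpointSelector:
    matchLabels:
      role: backend               # hypothetical label
  ingress:
    - fromEndpoints:
        - matchLabels:
            role: frontend        # hypothetical label
---
# Entities-based: allow ingress from the local node (host) and egress to
# destinations outside the cluster (world).
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: l3-entities-example       # hypothetical name
spec:
  endpointSelector:
    matchLabels:
      role: backend
  ingress:
    - fromEntities:
        - host
  egress:
    - toEntities:
        - world
---
# DNS-based: allow egress only to IPs resolved for example.com.
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: l3-fqdn-example           # hypothetical name
spec:
  endpointSelector:
    matchLabels:
      role: backend
  egress:
    # Allow DNS queries to kube-dns and let Cilium inspect the responses.
    - toEndpoints:
        - matchLabels:
            "k8s:io.kubernetes.pod.namespace": kube-system
            "k8s:k8s-app": kube-dns
      toPorts:
        - ports:
            - port: "53"
              protocol: ANY
          rules:
            dns:
              - matchPattern: "*"
    # Allow traffic to the addresses the name resolved to.
    - toFQDNs:
        - matchName: "example.com"   # placeholder domain
```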

Layer 4 Policies

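Layer 4 rules govern transport-layer connectivity (TCP/UDP). If no Layer 4 rule applies to a pod, it can use all ports and protocols, including ICMP. Once any Layer 4 rule applies, Cilium blocks ICMP unless it is explicitly permitted or related to an allowed connection.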

Example: Restrict Egress to TCP Port 80

This policy lets pods labeled `app=myService` send traffic only over TCP port 80.

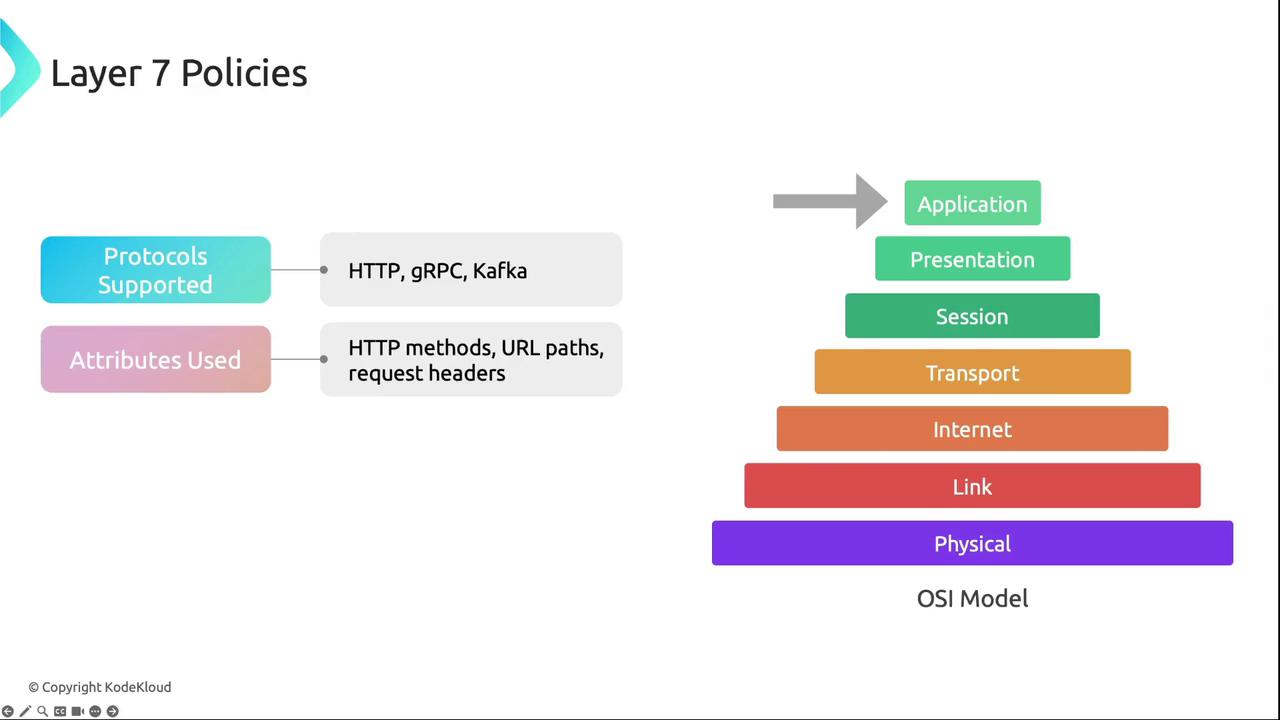
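A sketch of such a rule is shown below. It places no restriction on the Layer 3 destination, only on the port and protocol. The policy name is a placeholder.

```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: l4-egress-port-80     # hypothetical name
spec:
  endpointSelector:
    matchLabels:
      app: myService          # pods the policy applies to
  egress:
    - toPorts:
        - ports:
            - port: "80"      # only this destination port
              protocol: TCP   # only TCP
```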

Layer 7 Policies

Layer 7 policies enable application-layer inspection and enforcement for HTTP, gRPC, Kafka, and more.

Example: HTTP Methods, Paths & Headers

This example allows only:

- HTTP GET requests to `/path1`

- HTTP PUT requests to `/path2` only if `X-My-Header: true` is present

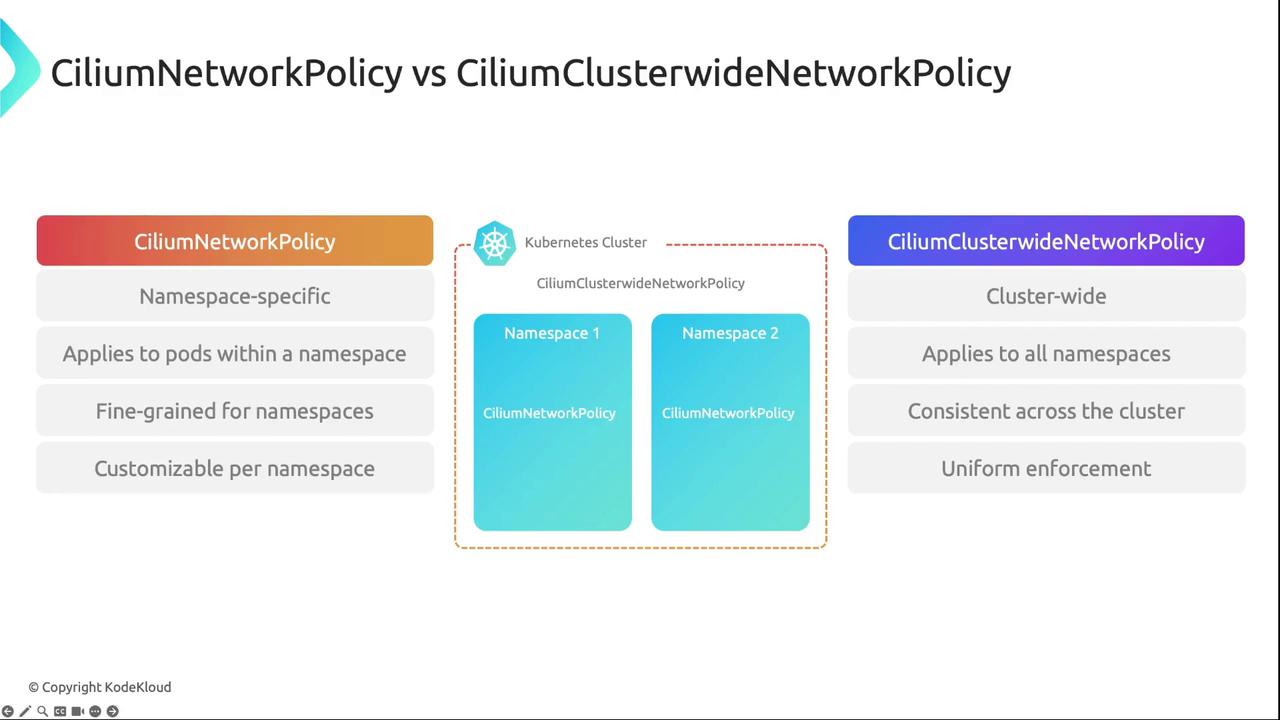
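The HTTP rules described above could be written as in the following sketch. The article does not state which pods or port the policy covers, so the `app=myService` selector, port 80, and the policy name are assumptions. Cilium treats `path` as a regular expression, hence the trailing `$` to avoid matching longer paths.

```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: l7-http-example           # hypothetical name
spec:
  endpointSelector:
    matchLabels:
      app: myService              # assumption: selector not given in the text
  ingress:
    - toPorts:
        - ports:
            - port: "80"          # assumption: HTTP served on port 80
              protocol: TCP
          rules:
            http:
              - method: GET
                path: "/path1$"   # regex anchored at the end
              - method: PUT
                path: "/path2$"
                headers:
                  - "X-My-Header: true"   # request must carry this header
```

Requests on this port that match none of the listed rules are rejected at the application layer rather than silently dropped at the network layer.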

Namespace vs. Cluster-Wide Policies

Cilium supports two scope levels:

| Resource Type | Scope |
|---|---|
| CiliumNetworkPolicy | Single Namespace |
| CiliumClusterwideNetworkPolicy | All Namespaces |

Combining namespace-specific and cluster-wide policies ensures both granular control and consistent, global security enforcement.
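For comparison with the namespaced examples, a cluster-wide policy has the same `spec` layout but carries no `metadata.namespace`, so its selectors match pods in every namespace. The sketch below uses hypothetical labels and a hypothetical name.

```yaml
apiVersion: cilium.io/v2
kind: CiliumClusterwideNetworkPolicy
metadata:
  name: clusterwide-ingress-example   # hypothetical name; note: no namespace field
spec:
  endpointSelector:
    matchLabels:
      tier: protected                 # hypothetical label, matched in all namespaces
  ingress:
    - fromEndpoints:
        - matchLabels:
            tier: trusted             # hypothetical label for permitted sources
```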

Now that we’ve covered the theory behind CNI network policies, explore our hands-on Cilium policy lab to see them in action.