LangChain

Building Agents

What are Agents

In this final lesson, we bring together prompt engineering, memory management, tool integration, and retrieval-augmented generation (RAG) to build a complete LangChain agent. You’ll see how to orchestrate Large Language Models (LLMs) with external tools and memory stores in one cohesive workflow.

Prerequisites

- Prompt engineering best practices

- Short-term and long-term memory strategies

- Integrating external tools (APIs, Python REPL, search)

- Retrieval-Augmented Generation for grounding responses

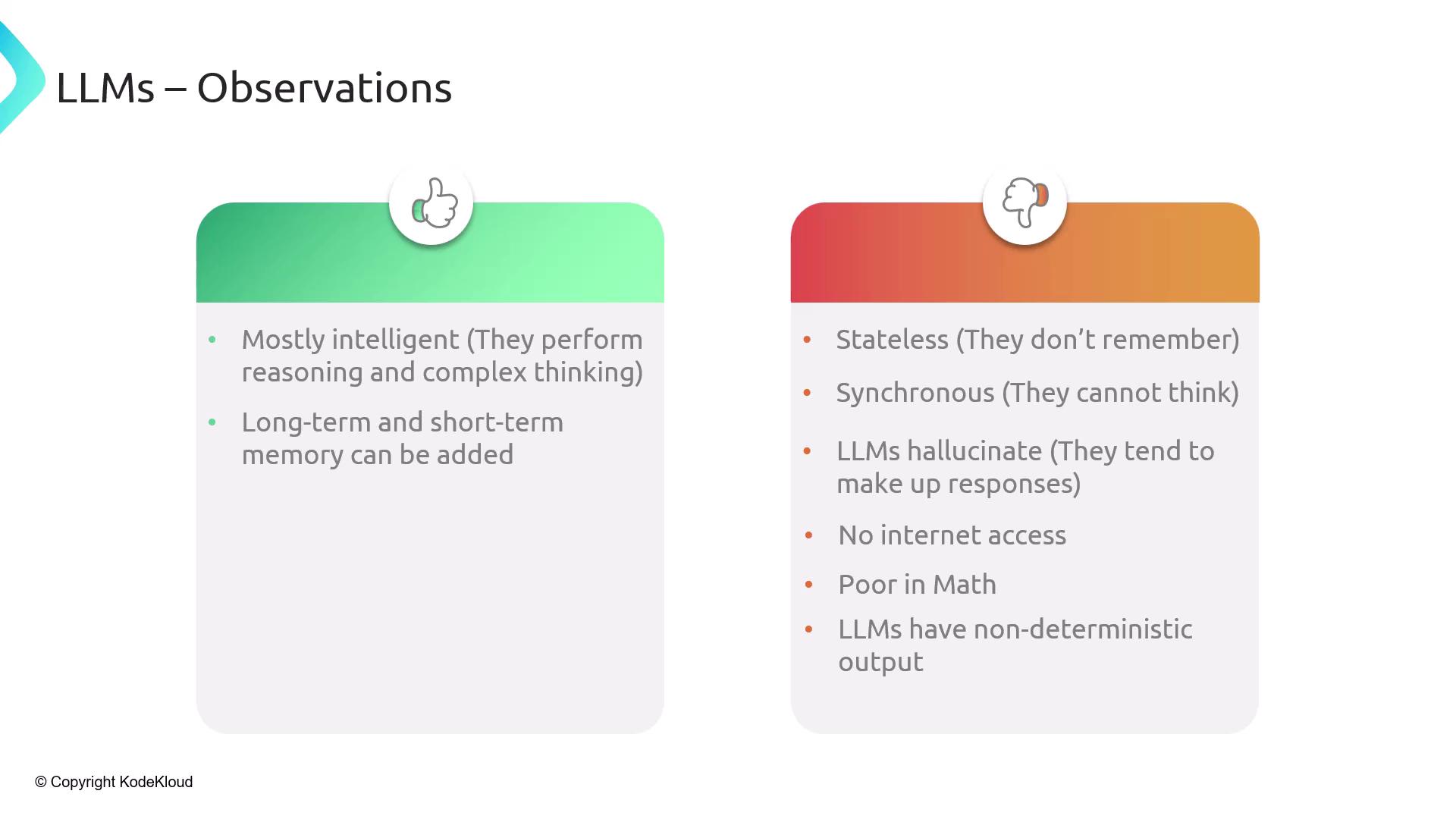

Observations on Large Language Models (LLMs)

LLMs power modern AI but come with both strengths and limitations:

- Statelessness: No built-in memory across calls

- Synchronous execution: Single-turn prompt processing

- Varying intelligence: GPT-4 vs. smaller models

- Hallucination risk: Inventing unsupported details

- No internet access: Stuck with training data cutoff

- Weak math: Simple arithmetic often fails

- Non-deterministic: Outputs and formats can change

Addressing LLM Limitations

LangChain modules help overcome these challenges:

- Memory: Short-term scratchpads & long-term stores

- Grounding: RAG with vector stores and document retrieval

- Real-time data: API/tool integrations (e.g., search, weather)

- Accurate math: Python REPL tool for calculations

- Consistent output: Prompt templates + output parsers

When you need all these in one workflow, agents are the solution.
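To make the "consistent output" item concrete, here is a minimal sketch of a prompt template paired with an output parser. It uses only the Python standard library and is not LangChain's own template or parser API. The names `PROMPT`, `build_prompt`, and `parse_reply`, and the `answer` and `confidence` fields, are assumptions made for illustration.

```python
import json
from string import Template

# Hypothetical template: the JSON shape requested here is illustrative only.
PROMPT = Template(
    "Answer the question below.\n"
    'Reply with JSON only, in the form {"answer": "<text>", "confidence": <0-1>}.\n'
    "Question: $question"
)

def build_prompt(question: str) -> str:
    """Fill the template so every call sends the same instructions."""
    return PROMPT.substitute(question=question)

def parse_reply(raw: str) -> dict:
    """Validate the model's reply and return it as a dict."""
    data = json.loads(raw)  # raises a ValueError subclass on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    missing = {"answer", "confidence"} - data.keys()
    if missing:
        raise ValueError(f"reply is missing fields: {sorted(missing)}")
    return data
```

The template fixes what the model is asked for, and the parser rejects any reply that does not match, so downstream code never sees free-form text.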

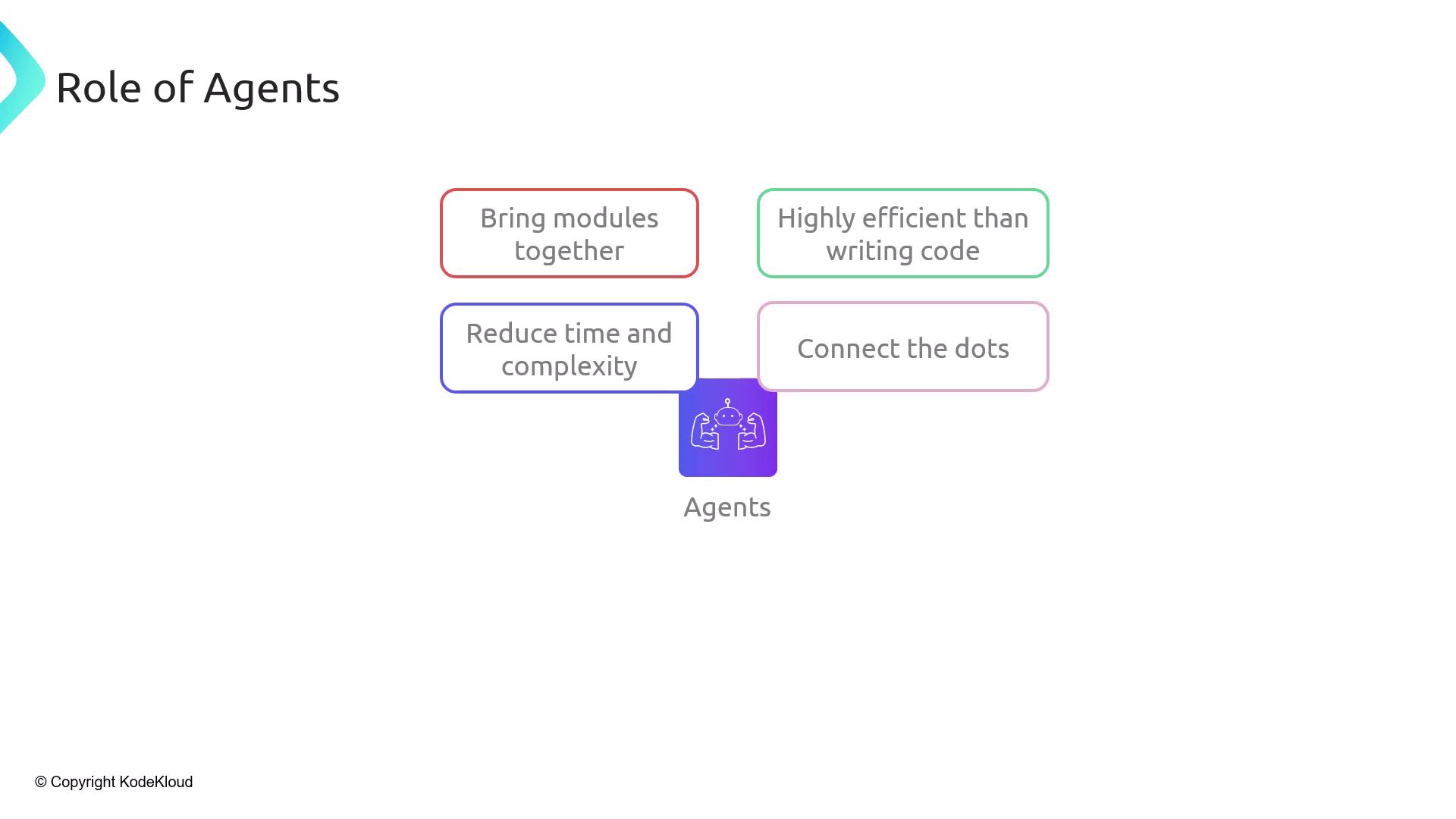

Introducing LangChain Agents

Agents are orchestration layers that combine LLMs with tools, memory, and custom code to handle multi-step tasks.

Note

An agent acts as a controller: it queries the LLM for required data, invokes external tools, updates memory, and composes the final structured response.

With agents, you can:

- Expose functions in any language as REST endpoints

- Seamlessly integrate Python, Java, or custom libraries

- Scale from simple chatbots to multi-step pipelines

Advantages of Agents

By unifying LangChain modules, agents offer:

- Modularity: Plug-and-play tools, memory, and parsers

- Flexibility: Adapt to domains like healthcare, service, or education

- Efficiency: Automated prompt engineering & formatting

- Scalability: From quick scripts to enterprise systems

Agents also bridge user intent and LLM reasoning across sectors.

Agents vs. LLMs: A Comparison

| Limitation of LLMs | Agent Enhancement |
|---|---|
| Stateless | Long-term & short-term memory |
| Synchronous | Asynchronous workflows |
| Variable intelligence | Chain-of-thought reasoning |
| Hallucination | Grounded with contextual data |
| No internet access | Web browsing & API invocation |
| Poor at math | Python REPL calculations |
| Unpredictable output | Structured output via parsers |
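As an illustration of handing arithmetic to code instead of the model, the sketch below defines a small calculator tool. It is a restricted stand-in and not the Python REPL tool itself: it accepts only numbers and the four basic operators, which avoids running arbitrary code. The function name `calculate` is an assumption made for this example.

```python
import ast
import operator

# Only these operators are allowed; anything else is rejected.
_BINARY = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY = {ast.USub: operator.neg, ast.UAdd: operator.pos}

def calculate(expression: str) -> float:
    """Evaluate a plain arithmetic expression without calling eval()."""
    def _eval(node):
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY:
            return _BINARY[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY:
            return _UNARY[type(node.op)](_eval(node.operand))
        raise ValueError("unsupported expression")

    return float(_eval(ast.parse(expression, mode="eval").body))
```

An agent would pass the model's requested expression to `calculate` and feed the numeric result back, so the model never has to do the arithmetic itself.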

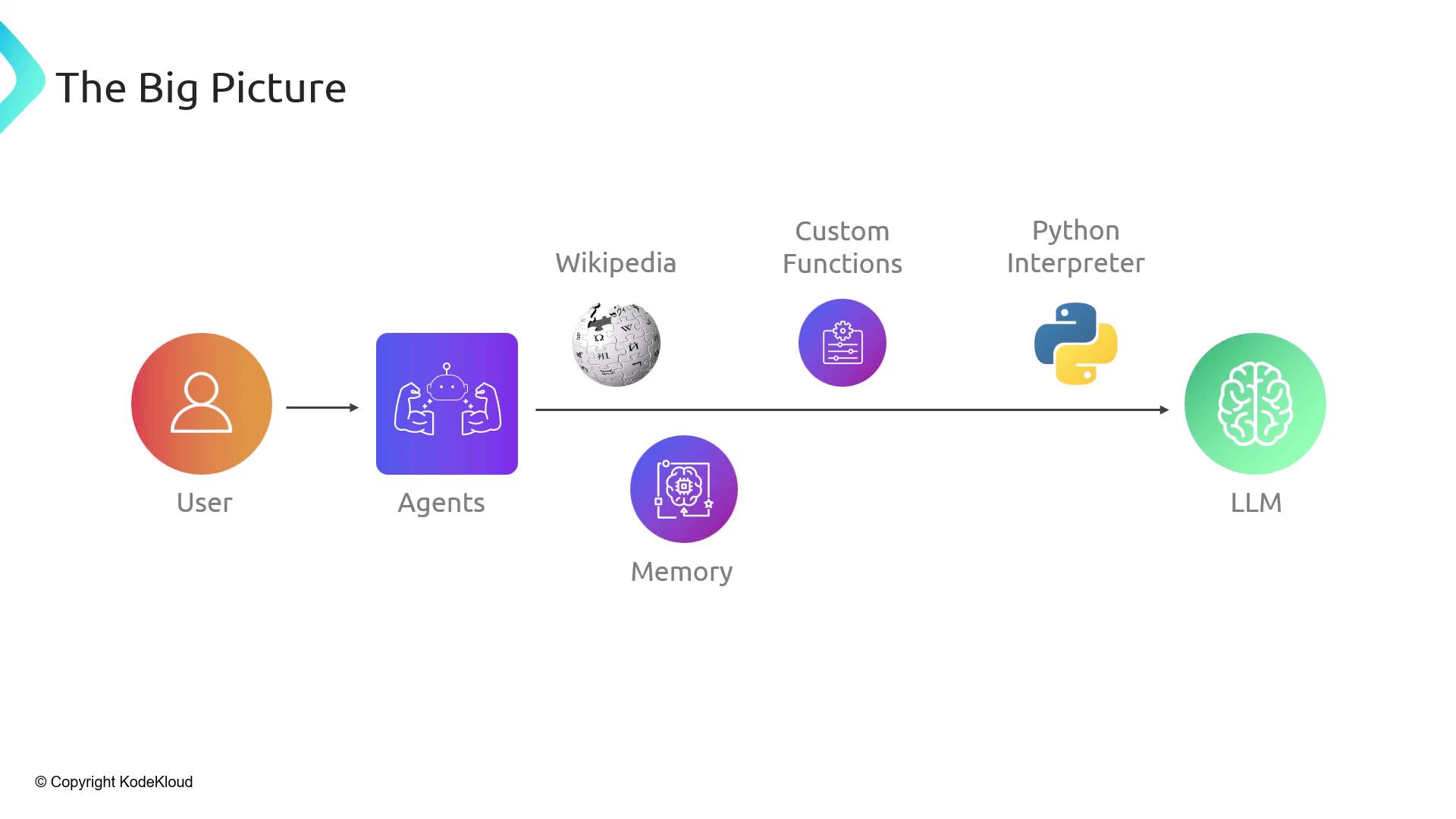

The Big Picture Architecture

An agent orchestrates end-to-end AI workflows:

- User sends a query

- Agent analyzes intent and decides on tools/memory

- Tools (Wikipedia, search APIs, custom functions, Python) fetch data

- LLM processes prompts with context and returns structured output

Agents leverage both scratchpads and databases (e.g., Redis) and automate advanced prompting patterns like chain-of-thought and ReAct (Reason + Act).
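The split between a scratchpad and a database can be sketched as follows. This is a simplified illustration, not a LangChain memory class: a plain dict stands in for a store such as Redis, and the class and method names (`AgentMemory`, `note`, `remember`, `recall`, `context`, `finish_task`) are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class AgentMemory:
    # Long-term store: a plain dict standing in for a database such as Redis.
    long_term: dict = field(default_factory=dict)
    # Short-term scratchpad: intermediate notes for the current task only.
    scratchpad: list = field(default_factory=list)

    def note(self, text: str) -> None:
        """Record an intermediate step for the current task."""
        self.scratchpad.append(text)

    def remember(self, key: str, value: str) -> None:
        """Persist a fact that should survive across tasks."""
        self.long_term[key] = value

    def recall(self, key: str, default: str = "") -> str:
        return self.long_term.get(key, default)

    def context(self) -> str:
        """Render both memories as text that could be prepended to a prompt."""
        facts = "\n".join(f"{k}: {v}" for k, v in self.long_term.items())
        notes = "\n".join(self.scratchpad)
        return f"Known facts:\n{facts}\n\nScratchpad:\n{notes}"

    def finish_task(self) -> None:
        """Clear the scratchpad; long-term facts are kept."""
        self.scratchpad.clear()
```

Keeping the two apart means working notes can be discarded after each task while user-level facts remain available for the next conversation.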

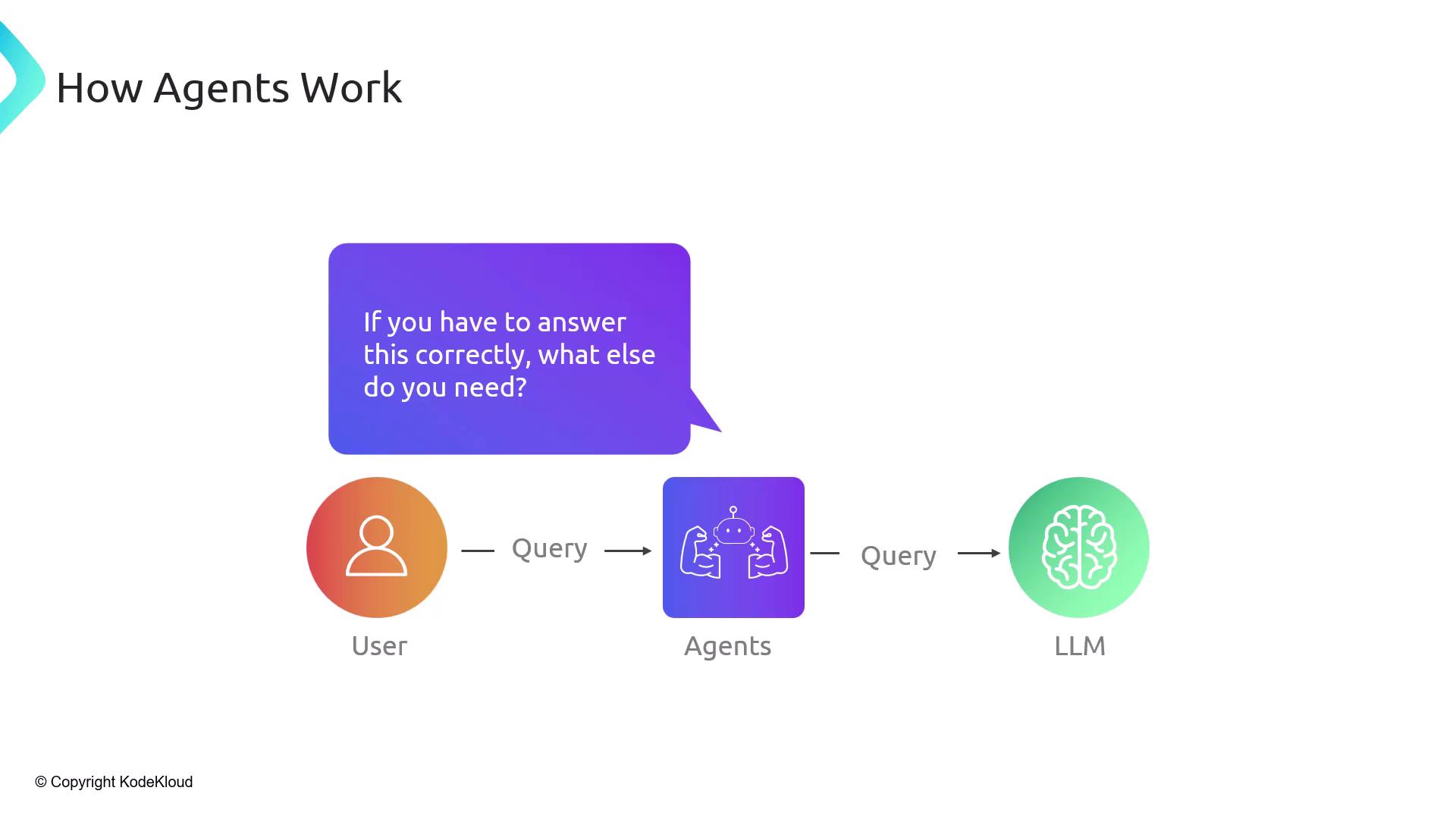

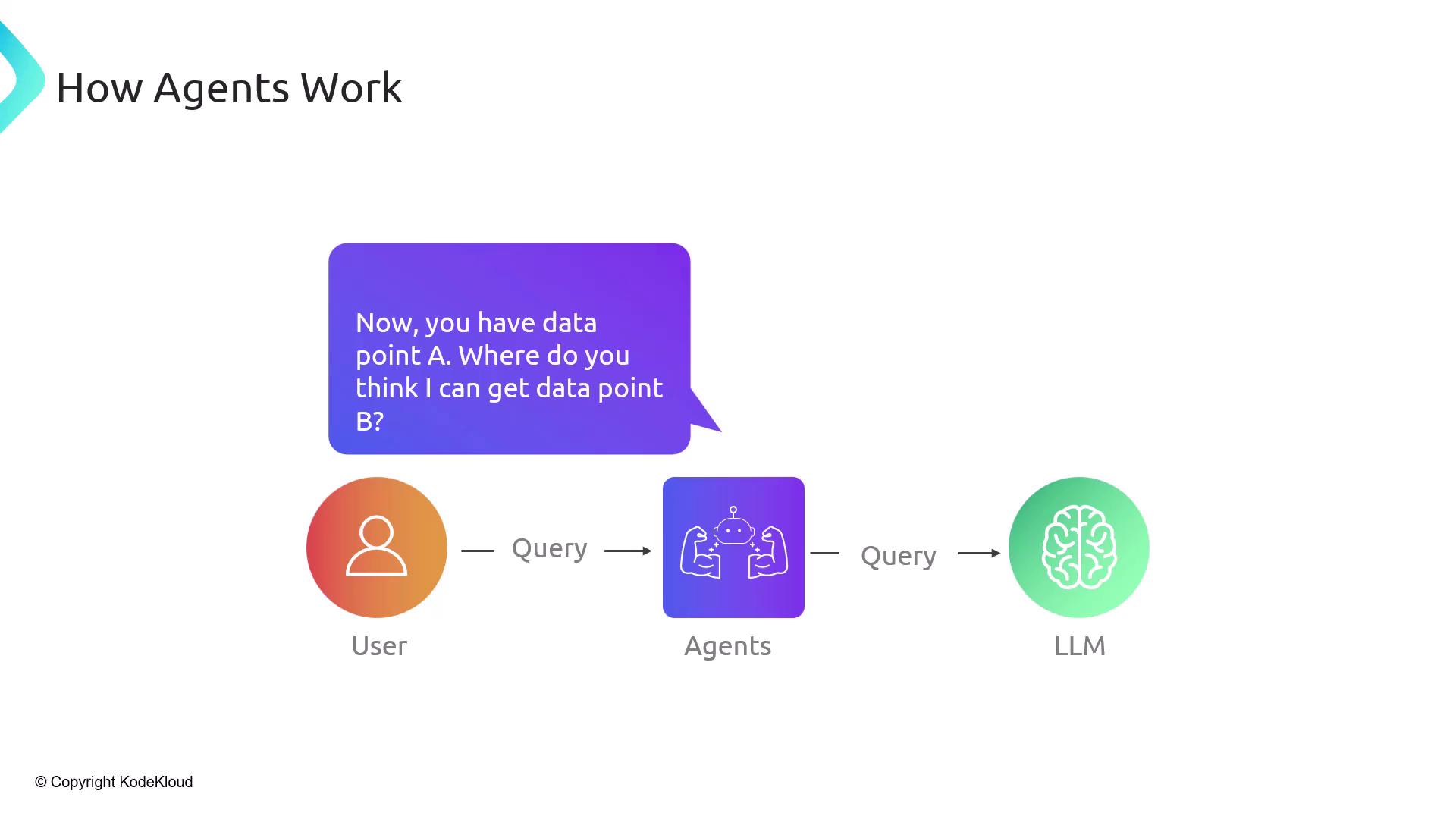

How Agents Work

At runtime, the agent uses the LLM as a reasoning engine with external support:

- Agent asks the LLM: "What else do you need to answer this?"

- LLM lists data points (A, B, C)

- Agent invokes tools (search, Wikipedia, Python REPL) to gather data

- New data is fed back to the LLM iteratively

- Loop continues until the LLM returns the final result
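The loop above can be sketched in plain Python. This is a simplified illustration under stated assumptions, not LangChain's agent executor: the model is represented by any callable that returns either `{"tool": ..., "input": ...}` or `{"final": ...}`, and `run_agent`, `fake_llm`, and `fake_lookup` are hypothetical names. The scripted `fake_llm` stands in for a real model so the example runs offline.

```python
from typing import Callable

def run_agent(llm: Callable[[str], dict], tools: dict, question: str,
              max_steps: int = 5) -> str:
    """Ask the model, run the tool it requests, feed the result back, repeat."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        decision = llm(transcript)
        if "final" in decision:
            return decision["final"]
        name, tool_input = decision["tool"], decision["input"]
        tool = tools.get(name)
        observation = tool(tool_input) if tool else f"unknown tool {name!r}"
        # Append the observation so the next model call can see it.
        transcript += f"\nTool {name}({tool_input!r}) -> {observation}"
    raise RuntimeError("agent stopped: step limit reached without a final answer")

# Stand-ins so the sketch runs without a model or network access.
def fake_llm(transcript: str) -> dict:
    if "-> " not in transcript:
        return {"tool": "lookup", "input": "capital of France"}
    return {"final": "The capital of France is " + transcript.rsplit("-> ", 1)[1]}

def fake_lookup(query: str) -> str:
    return {"capital of France": "Paris"}.get(query, "no result")

answer = run_agent(fake_llm, {"lookup": fake_lookup},
                   "What is the capital of France?")
print(answer)  # The capital of France is Paris
```

The `max_steps` limit is a design choice worth keeping in any real agent: without it, a model that never produces a final answer would loop indefinitely.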

Agents can run autonomously for tasks such as customer support, counseling, or academic guidance.

Warning

Always secure API keys and protect sensitive data when configuring memory stores and external tools.
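One common way to follow this advice is to read keys from environment variables instead of hard-coding them, and to mask them before logging. The sketch below assumes that approach; the helper names `load_api_key` and `mask` and the variable name `EXAMPLE_TOOL_API_KEY` are hypothetical.

```python
import os

def load_api_key(name: str) -> str:
    """Read a secret from the environment and fail fast if it is missing."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"environment variable {name} is not set")
    return value

def mask(secret: str, visible: int = 4) -> str:
    """Return a log-safe form showing only the last few characters."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```

For example, `mask(load_api_key("EXAMPLE_TOOL_API_KEY"))` could be written to a log to confirm which key is in use without exposing it.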

Agent Use Cases

- Customer Support Agent: FAQs, troubleshooting, escalation

- Student Guide Agent: Step-by-step problem solving

- Travel Coordinator Agent: Itinerary planning, booking

- Meeting Scheduler Agent: Calendar integration, invites

These examples are just the beginning. In the next lessons, we’ll build and deploy real-world agents end to end.
