Messages in ChatModel

Chat models exchange structured messages rather than raw strings. Whether you’re building a conversational AI assistant or integrating a chatbot into your application, understanding how those messages are typed, ordered, and sent to the model is what makes the model's responses predictable and accurate.

Table of Contents

- Core Message Types

- Message Workflow

- Statefulness and LLMs

- Implementing in LangChain

- Links and References

Core Message Types

When working with a chat model, all communication is framed as a sequence of messages, each of which is one of three types:

| Message Type | Purpose | Example |
|---|---|---|
| System | Establishes persona or global settings | “Act like a physics teacher.” |
| Human | Captures user input or inquiries | “Explain Newton’s laws.” |
| AI | Contains the model’s generated response | “Newton’s first law states…” |

Note

System and human messages together form the prompt sent to the model. AI messages are the model’s replies based on that prompt sequence.

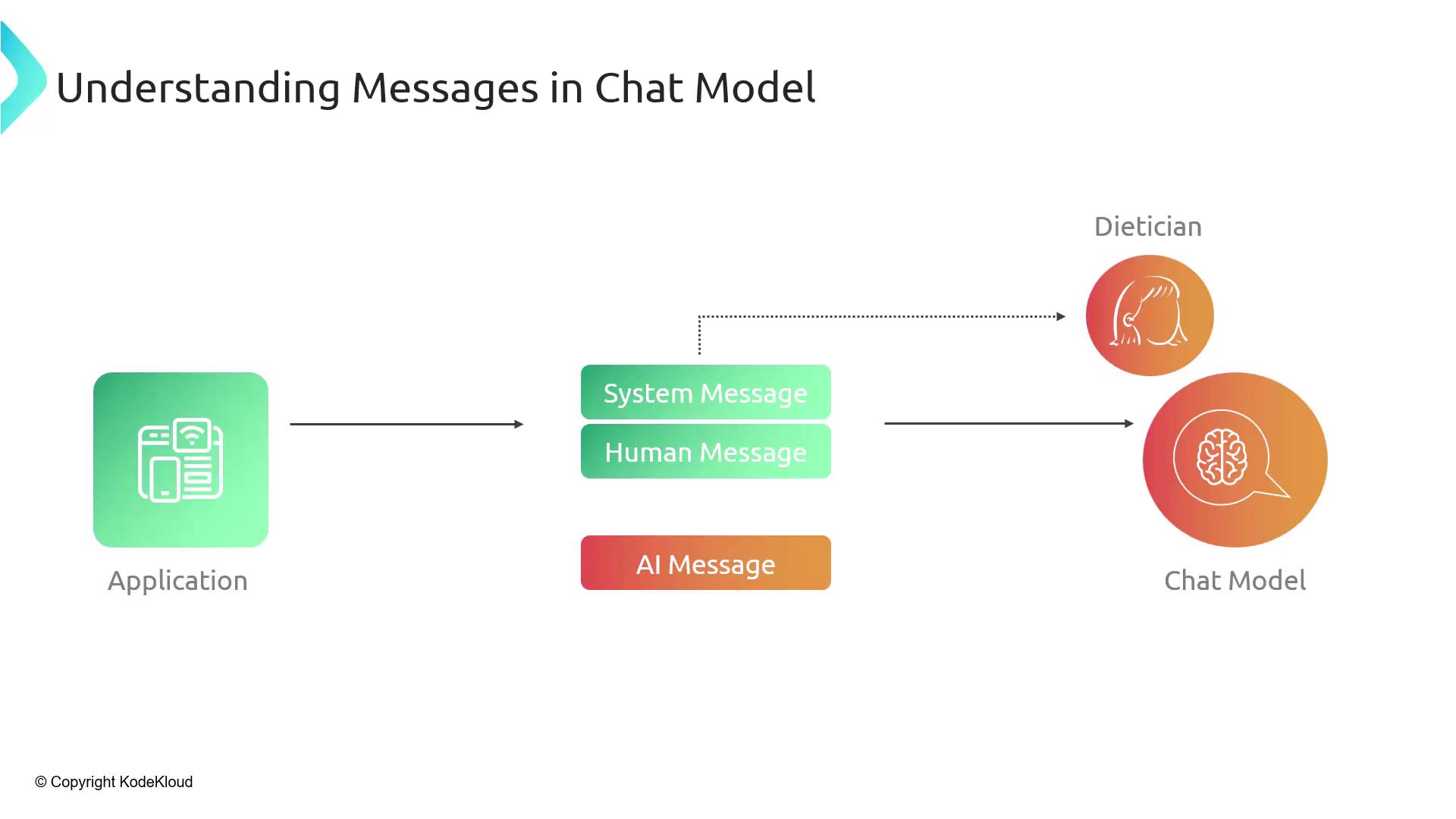
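To make the three types concrete, here is a minimal sketch that models them as plain JavaScript objects. The `role` and `content` field names and the `splitPromptAndReplies` helper are assumptions made for illustration; they are not LangChain's own classes.

```javascript
// Hypothetical plain-object stand-ins for the three message types.
const conversation = [
  { role: "system", content: "Act like a physics teacher." },
  { role: "human", content: "Explain Newton's laws." },
  { role: "ai", content: "Newton's first law states..." },
];

// Separate the prompt (system + human messages) from the model's replies (ai messages).
function splitPromptAndReplies(messages) {
  const promptMessages = messages.filter((m) => m.role !== "ai");
  const replies = messages.filter((m) => m.role === "ai");
  return { promptMessages, replies };
}

const { promptMessages, replies } = splitPromptAndReplies(conversation);
console.log(promptMessages.map((m) => m.role).join(", ")); // system, human
console.log(replies.length); // 1
```

The split mirrors the note above: system and human messages go to the model, and AI messages come back from it.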

Message Workflow

The steps below outline, in order, how your application composes and processes these messages before, during, and after calling the chat model API:

- Initialize System Message

- Accept Human Message

- Invoke Chat Model

- Receive AI Message

- Render Response to User
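The five steps above can be sketched as a single function. This is an illustration only: `fakeChatModel` is a hypothetical stub that stands in for a real chat model API call, and `handleTurn` is an assumed name.

```javascript
// Hypothetical stub standing in for a real chat model API call.
async function fakeChatModel(messages) {
  const lastHuman = messages.filter((m) => m.role === "human").pop();
  return { role: "ai", content: `Echo: ${lastHuman.content}` };
}

async function handleTurn(systemText, userText, model) {
  // 1. Initialize the system message.
  const system = { role: "system", content: systemText };
  // 2. Accept the human message.
  const human = { role: "human", content: userText };
  // 3. Invoke the chat model with the ordered prompt.
  // 4. Receive the AI message.
  const ai = await model([system, human]);
  // 5. Hand the response text back to the caller for rendering.
  return ai.content;
}

const rendered = handleTurn(
  "Act like a physics teacher.",
  "Explain Newton's laws.",
  fakeChatModel
);
rendered.then((text) => console.log(text)); // Echo: Explain Newton's laws.
```

Passing the model in as a parameter keeps the workflow testable without network access; a real client could be swapped in for the stub.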

Statefulness and LLMs

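Large language models (LLMs) do not maintain memory across separate sessions, or even across separate API calls: the model sees only the messages included in the current request. This means every new conversation must include its system message to preserve context, and each follow-up request should resend the earlier turns that still matter.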

Warning

If you omit the system message at the start of a session, the model will have no persona or configuration, leading to unpredictable or generic responses.
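A minimal sketch of what statelessness means for application code: the application, not the model, owns the history and resends it on every call. `statelessModel` is a hypothetical stub whose output depends only on the messages it receives, and `Conversation` is an assumed helper class.

```javascript
// Hypothetical stateless model stub: it only "knows" what is in `messages`.
function statelessModel(messages) {
  const system = messages.find((m) => m.role === "system");
  const persona = system ? system.content : "no persona";
  const humanTurns = messages.filter((m) => m.role === "human").length;
  return { role: "ai", content: `[${persona}] reply to turn ${humanTurns}` };
}

// The application keeps the history and sends all of it with each request.
class Conversation {
  constructor(systemText) {
    this.messages = [{ role: "system", content: systemText }];
  }

  send(userText, model) {
    this.messages.push({ role: "human", content: userText });
    const reply = model(this.messages); // full history on every call
    this.messages.push(reply);
    return reply.content;
  }
}

const convo = new Conversation("You are a friendly dietician.");
const first = convo.send("What is a balanced meal plan?", statelessModel);
const second = convo.send("Make it vegetarian.", statelessModel);

// The same follow-up sent alone loses both the persona and the earlier turn.
const noContext = statelessModel([
  { role: "human", content: "Make it vegetarian." },
]).content;

console.log(first); // [You are a friendly dietician.] reply to turn 1
console.log(second); // [You are a friendly dietician.] reply to turn 2
console.log(noContext); // [no persona] reply to turn 1
```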

Implementing in LangChain

LangChain simplifies prompt construction by treating each message as an object. Here’s a basic example in JavaScript:

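```javascript
// Import paths assume a recent LangChain JS release with the
// @langchain/openai and @langchain/core packages installed.
import { ChatOpenAI } from "@langchain/openai";
import { SystemMessage, HumanMessage } from "@langchain/core/messages";

// Reads the API key from the OPENAI_API_KEY environment variable.
const chat = new ChatOpenAI({ temperature: 0.7 });

// The prompt: a system message (persona) followed by the user's question.
const messages = [
  new SystemMessage("You are a friendly dietician."),
  new HumanMessage("What is a balanced meal plan for weight loss?"),
];

// invoke() supersedes the older call() method and resolves to an AIMessage.
const response = await chat.invoke(messages);
console.log(response.content); // text of the AIMessage (the diet plan)
```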

Use this pattern to chain multiple messages, inject context, or adjust personas dynamically.
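As a rough sketch of those three uses, the helper below assembles one request from a persona, optional injected context, and prior turns. It uses plain objects rather than LangChain classes so it runs without dependencies; `buildMessages` and its parameter names are hypothetical.

```javascript
// Hypothetical helper: assemble the message list for a single request.
function buildMessages({ persona, context = [], priorTurns = [], question }) {
  // Injected context is appended to the system message.
  const contextText =
    context.length > 0 ? `\n\nContext:\n${context.join("\n")}` : "";
  return [
    { role: "system", content: persona + contextText },
    ...priorTurns, // earlier human/ai messages, oldest first
    { role: "human", content: question },
  ];
}

const priorTurns = [
  { role: "human", content: "What is a balanced meal plan for weight loss?" },
  { role: "ai", content: "Aim for vegetables, lean protein, and whole grains." },
];

// Same history, different persona and extra context for the follow-up.
const followUp = buildMessages({
  persona: "You are a strict sports nutritionist.",
  context: ["The user is vegetarian."],
  priorTurns,
  question: "How much protein do I need?",
});

console.log(followUp.length); // 4
console.log(followUp.map((m) => m.role).join(", ")); // system, human, ai, human
```

With LangChain, the same shape would be expressed with `SystemMessage`, `HumanMessage`, and `AIMessage` instances in place of the plain objects.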

Links and References

Explore these resources for deeper dives into conversational AI, deployment best practices, and scaling your chat application.
