
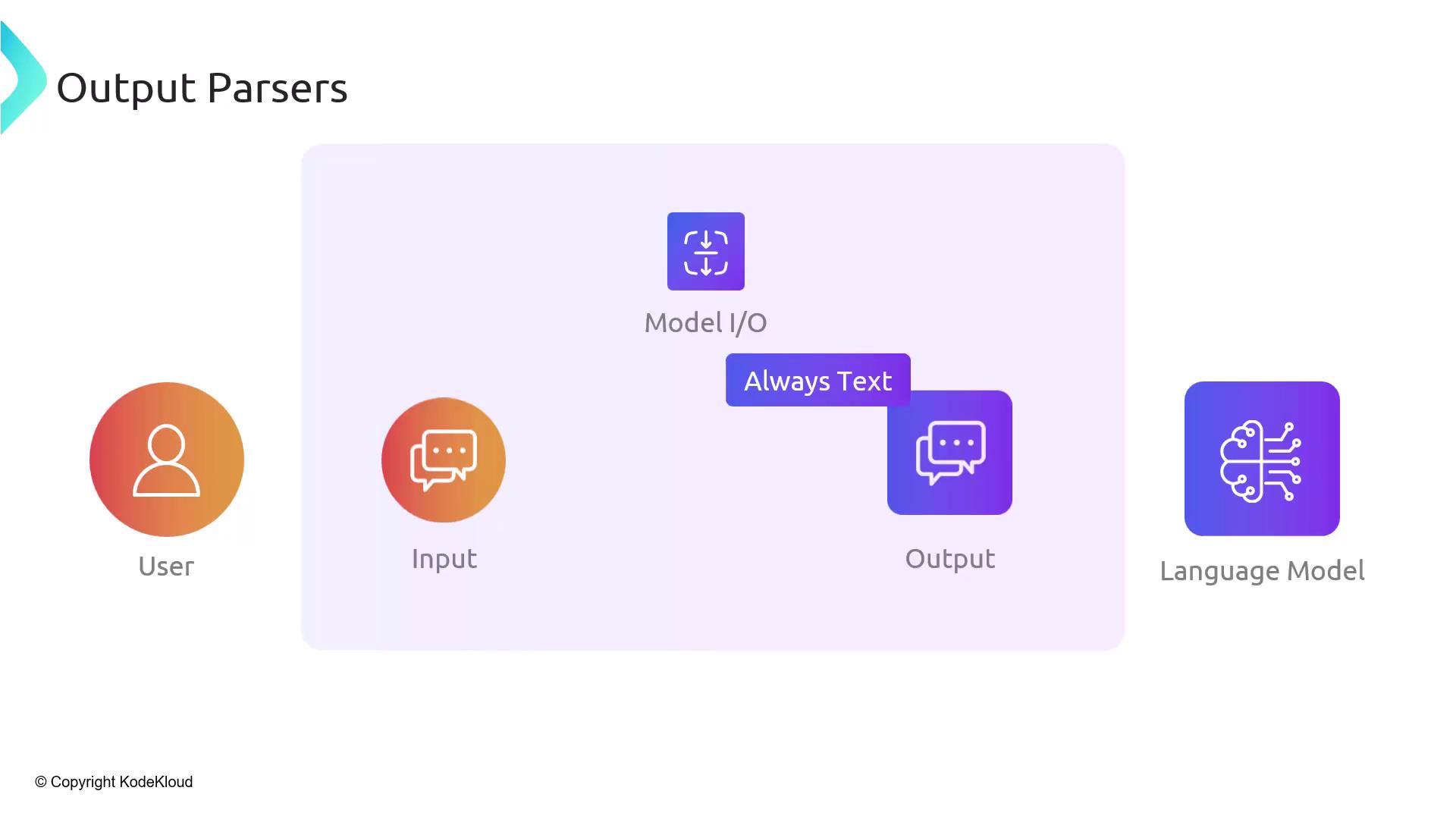

Parsing Model Output

In this lesson, we focus on transforming a language model’s plain-text responses into structured formats such as JSON, XML, YAML, or CSV. Although large language models (LLMs) always return text, downstream applications typically need data in a predictable schema. By embedding clear formatting instructions in your prompt and passing the response through LangChain’s OutputParser, you can automate this conversion end to end.

Why Structured Output Matters

- Interoperability: Structured data (JSON, XML, YAML) integrates seamlessly with APIs and databases.

- Reliability: Reduces parsing errors and unexpected values at runtime.

- Maintainability: Clear schemas make it easier to validate and extend your data model.

Note

Large language models always return text. To work with objects, you need to parse and validate that text.
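A minimal illustration using only Python's standard library: the model's reply arrives as a string, and `json.loads` turns it into a dictionary you can work with. The `raw_reply` value is a made-up stand-in for a real model response.

```python
import json

# Hypothetical model reply: to Python this is only a str
raw_reply = '{"name": "Alice", "age": 30}'

data = json.loads(raw_reply)  # parse: str -> dict

# Validate before use: the fields we rely on must exist with the expected types
if not isinstance(data.get("name"), str) or not isinstance(data.get("age"), int):
    raise ValueError(f"unexpected shape: {data!r}")

print(type(raw_reply).__name__, "->", type(data).__name__)  # str -> dict
print(data["age"] + 1)  # 31
```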

How LangChain’s OutputParser Works

LangChain’s OutputParser automates both prompt construction and response transformation:

- Prompt Construction: You define a schema, and the parser generates format instructions that you place inside a PromptTemplate (optionally alongside example responses). The model is then told exactly which structure (e.g., JSON with specific fields) to produce.

- Response Transformation: After receiving the text output, the parser converts it into your target data type (e.g., a Python dict, an XML DOM, or a YAML mapping) and raises an OutputParserException when the text cannot be parsed into the expected structure.
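To make the two steps concrete, here is a simplified, hypothetical re-implementation in plain Python. It is not LangChain's code: `build_format_instructions` and `parse_response` are illustrative names, and asking for a Markdown-fenced JSON block is an assumption that mirrors the style of instructions StructuredOutputParser produces.

```python
import json
import re

FENCE = "`" * 3  # three backticks, built this way so the example renders cleanly

def build_format_instructions(schema: dict[str, str]) -> str:
    """Step 1 (prompt construction): tell the model which JSON keys to return."""
    fields = ",\n".join(f'    "{name}": <{desc}>' for name, desc in schema.items())
    return (
        f"Return a Markdown code snippet starting with {FENCE}json and ending with {FENCE}:\n"
        f"{FENCE}json\n{{\n{fields}\n}}\n{FENCE}"
    )

def parse_response(text: str, schema: dict[str, str]) -> dict:
    """Step 2 (response transformation): pull out the JSON block and check its keys."""
    match = re.search(FENCE + r"(?:json)?\s*(.*?)\s*" + FENCE, text, re.DOTALL)
    payload = match.group(1) if match else text  # fall back to the whole reply
    data = json.loads(payload)  # raises json.JSONDecodeError on malformed text
    missing = [key for key in schema if key not in data]
    if missing:
        raise ValueError(f"response is missing keys: {missing}")
    return data

schema = {"title": "Title of the article", "tags": "List of relevant tags"}
print(build_format_instructions(schema))

reply = f'Sure!\n{FENCE}json\n{{"title": "Parsing LLM Output", "tags": ["langchain", "json"]}}\n{FENCE}'
print(parse_response(reply, schema))
# {'title': 'Parsing LLM Output', 'tags': ['langchain', 'json']}
```

The regular expression tolerates chatty text around the fenced block, which is a common failure mode when a model "explains" its answer before giving it.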

```python
from langchain.output_parsers import ResponseSchema, StructuredOutputParser
from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI  # example provider; any LangChain chat model or LLM works

# The model to call (assumes langchain-openai is installed and OPENAI_API_KEY is set)
llm = ChatOpenAI(temperature=0)

# 1. Define the schema you expect
schemas = [
    ResponseSchema(name="title", description="Title of the article"),
    ResponseSchema(name="tags", description="List of relevant tags"),
]

# 2. Create an output parser from the schema
parser = StructuredOutputParser.from_response_schemas(schemas)

# 3. Build a prompt that embeds the parser's format instructions
template = """
Generate an article summary:

{format_instructions}

Article:
\"\"\"
{article_text}
\"\"\"
"""

prompt = PromptTemplate(
    template=template,
    input_variables=["article_text"],
    # partial_variables maps placeholder names to values, so it must be a dict
    partial_variables={"format_instructions": parser.get_format_instructions()},
)

# 4. Run the chain (prompt -> model -> parser); the result is a Python dict
chain = prompt | llm | parser
result = chain.invoke({"article_text": "...your content here..."})
```

Common Output Formats

| Format | Description | Example |
|---|---|---|
| JSON | Widely used, machine-readable | `{ "name": "Alice", "age": 30 }` |
| XML | Markup-based, verbose | `<person><name>Alice</name></person>` |
| YAML | Human-friendly, indentation-based | `name: Alice` |
| CSV | Tabular data, comma-separated | `name,age\nAlice,30` |
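Most examples in the table can be turned into Python data with the standard library alone, as this sketch shows. YAML is the exception: the standard library has no YAML parser, so that row would need a third-party package such as PyYAML (`yaml.safe_load`), which is left out here to keep the block dependency-free.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# JSON -> dict
person_json = json.loads('{ "name": "Alice", "age": 30 }')

# XML -> element tree; read the text of the <name> child
person_xml = ET.fromstring("<person><name>Alice</name></person>")
xml_name = person_xml.findtext("name")

# CSV -> list of dicts, one per data row; every value arrives as a string
rows = list(csv.DictReader(io.StringIO("name,age\nAlice,30")))

print(person_json)  # {'name': 'Alice', 'age': 30}
print(xml_name)     # Alice
print(rows)         # [{'name': 'Alice', 'age': '30'}]
```

Note the CSV row: `age` comes back as the string `'30'`, so tabular output usually needs an explicit type-conversion step after parsing.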

Benefits of Using OutputParser

- Predictability: Checks each response against your schema, so malformed data is rejected instead of passed downstream.

- Error Handling: Raises a descriptive OutputParserException as soon as a response cannot be parsed, so failures surface early.

- Extensibility: Easily swap or update schemas without rewriting parsing logic.
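Parsing failures are easiest to manage when they are caught in one place. The sketch below is hypothetical: `safe_parse`, `REQUIRED_KEYS`, and the `{"ok", "data", "error"}` result shape are illustrative choices, and `json.loads` stands in for a LangChain parser (when wrapping `parser.parse`, you would catch LangChain's OutputParserException).

```python
import json

REQUIRED_KEYS = ("title", "tags")  # hypothetical schema for this example

def safe_parse(text: str) -> dict:
    """Parse a model reply and return a structured result instead of raising."""
    try:
        data = json.loads(text)  # json.JSONDecodeError is a subclass of ValueError
        if not isinstance(data, dict):
            raise ValueError(f"expected a JSON object, got {type(data).__name__}")
        missing = [key for key in REQUIRED_KEYS if key not in data]
        if missing:
            raise ValueError(f"missing keys: {missing}")
        return {"ok": True, "data": data, "error": None}
    except ValueError as exc:
        # The error text can be logged or sent back to the model in a retry prompt
        return {"ok": False, "data": None, "error": str(exc)}

print(safe_parse('{"title": "Hello", "tags": ["a"]}')["ok"])  # True
print(safe_parse("Sure! The title is Hello.")["ok"])          # False
print(safe_parse('{"title": "Hello"}')["error"])              # missing keys: ['tags']
```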

Warning

Always include the format instructions from StructuredOutputParser (the string returned by get_format_instructions()) in your prompt. Omitting them can lead to inconsistent model responses and parsing failures.

Next Steps

- Experiment with custom ResponseSchema definitions for your use case.

- Validate parsed output against JSON Schema or your own validators.

- Integrate the parser into your production pipeline for reliable data ingestion.
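For the "your own validators" option, a small typed wrapper can check the parsed dictionary before the rest of the pipeline sees it. This is a sketch with a hypothetical `ArticleSummary` class matching the `title`/`tags` schema from the lesson's example; validating against a JSON Schema would instead typically use a third-party package such as `jsonschema`. Because a ResponseSchema's `type` defaults to `"string"`, the sketch also accepts tags returned as one comma-separated string.

```python
from dataclasses import dataclass

@dataclass
class ArticleSummary:
    """Hypothetical typed target for the title/tags schema."""
    title: str
    tags: list[str]

    @classmethod
    def from_parsed(cls, data: dict) -> "ArticleSummary":
        title, tags = data.get("title"), data.get("tags")
        if not isinstance(title, str) or not title.strip():
            raise ValueError("title must be a non-empty string")
        # Accept "a, b" as well as ["a", "b"], since fields default to strings
        if isinstance(tags, str):
            tags = [t.strip() for t in tags.split(",") if t.strip()]
        if not isinstance(tags, list) or not all(isinstance(t, str) for t in tags):
            raise ValueError("tags must be a list of strings")
        return cls(title=title.strip(), tags=tags)

summary = ArticleSummary.from_parsed({"title": "Parsing LLM Output", "tags": "langchain, json"})
print(summary)  # ArticleSummary(title='Parsing LLM Output', tags=['langchain', 'json'])
```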

