Overview of LangChain Libraries

| Library Layer | Description |
|---|---|
| LangChain Core | Defines runtime, interfaces, base abstractions, and LCEL. |
| LangChain Community | Offers adapters and integrations for third-party services. |
| LangChain (Primary) | Provides pre-built chains, agents, and retrieval patterns. |

LangChain is built in layers. Each layer depends on the one below it, ensuring a consistent API and runtime across all integrations.

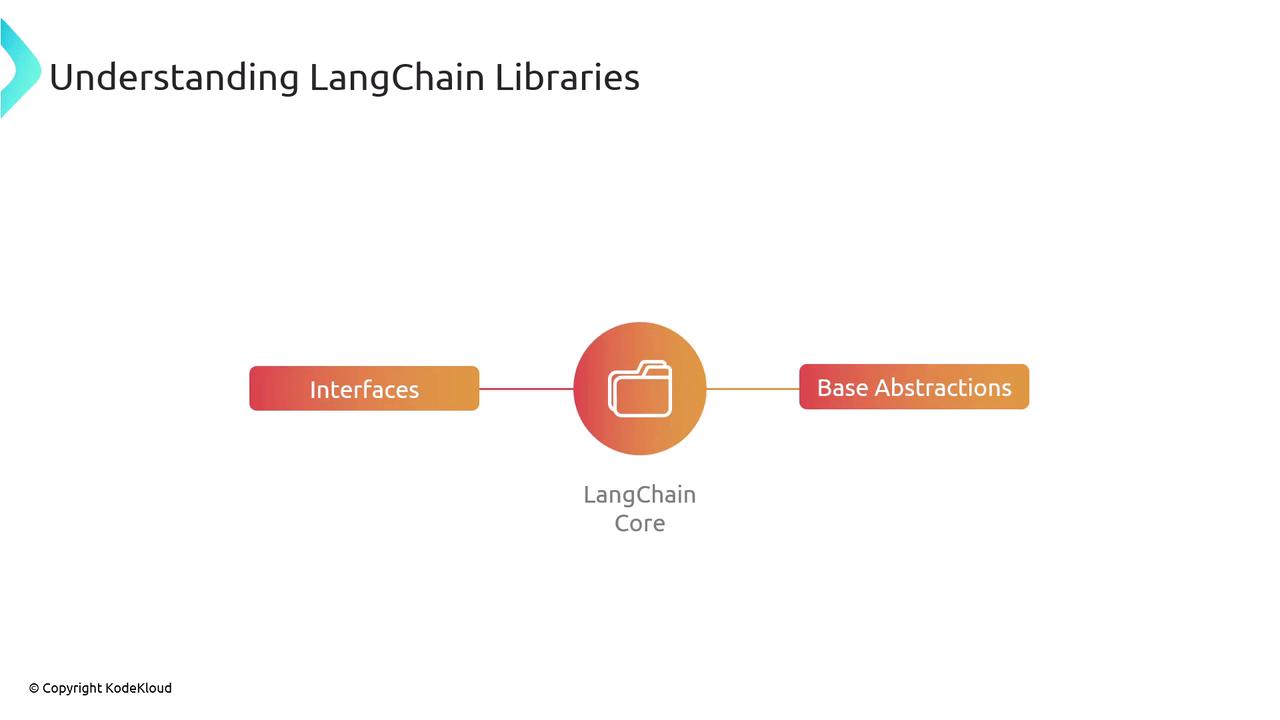

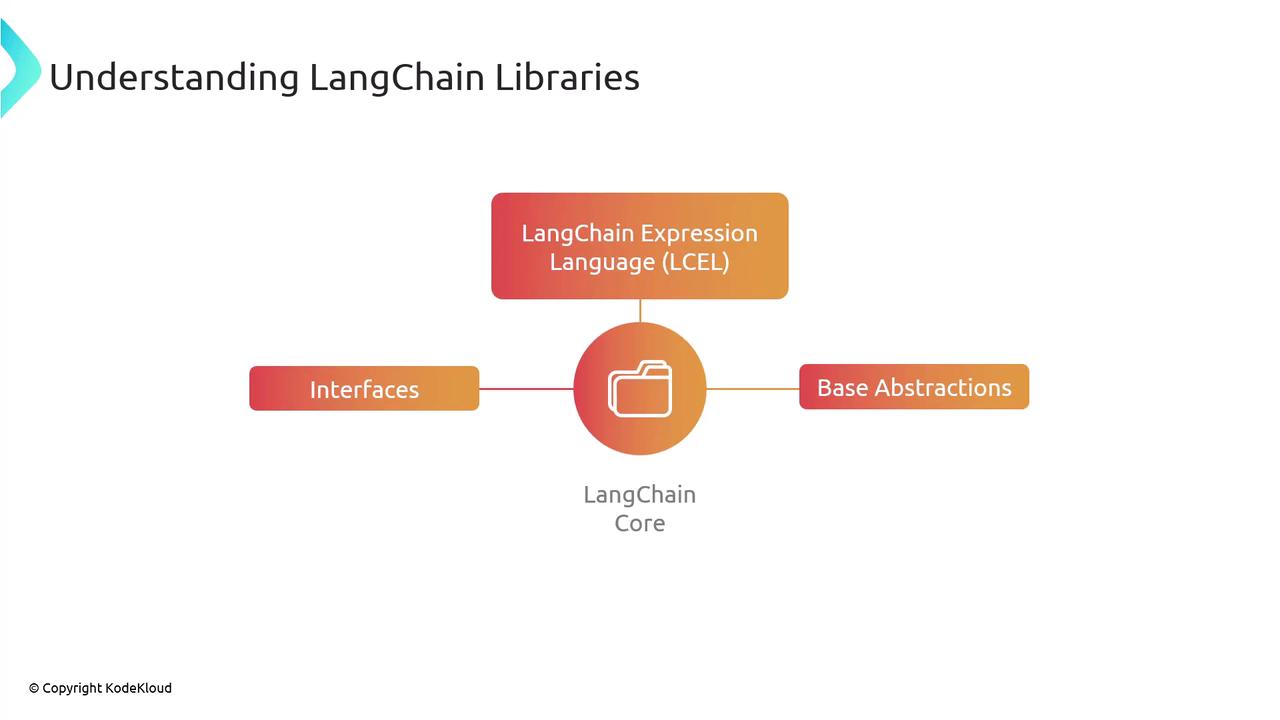

1. LangChain Core

LangChain Core is the foundation that powers chains, agents, and tools. It exposes:

- Interfaces: Abstract classes for LLMs, vector retrievers, and memory modules.
- Base Abstractions: Core types and classes that define behavior for downstream libraries.
- LCEL (LangChain Expression Language): A minimal DSL for composing prompts and orchestrating chains.
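The composition idea behind LCEL can be sketched in plain Python. The snippet below is a toy illustration only: the `Step` class and the `fake_model` stage are hypothetical stand-ins, not part of LangChain. In `langchain_core` the analogous interface is `Runnable`, which also supports `|` for chaining and `invoke` for execution.

```python
from typing import Any, Callable


class Step:
    """Toy composable unit; NOT the real langchain_core Runnable."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        # Run this step on a single input.
        return self.func(value)

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new step that feeds a's output into b.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Hypothetical three-stage pipeline: prompt -> fake model -> output parser.
prompt = Step(lambda topic: f"Write one sentence about {topic}.")
fake_model = Step(lambda text: {"content": text.upper()})  # stands in for an LLM call
parser = Step(lambda message: message["content"])

chain = prompt | fake_model | parser
result = chain.invoke("vector stores")
print(result)
```

Because every stage shares the same `invoke` contract, any stage can be swapped (for example, replacing `fake_model` with a real provider integration) without touching the rest of the pipeline.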

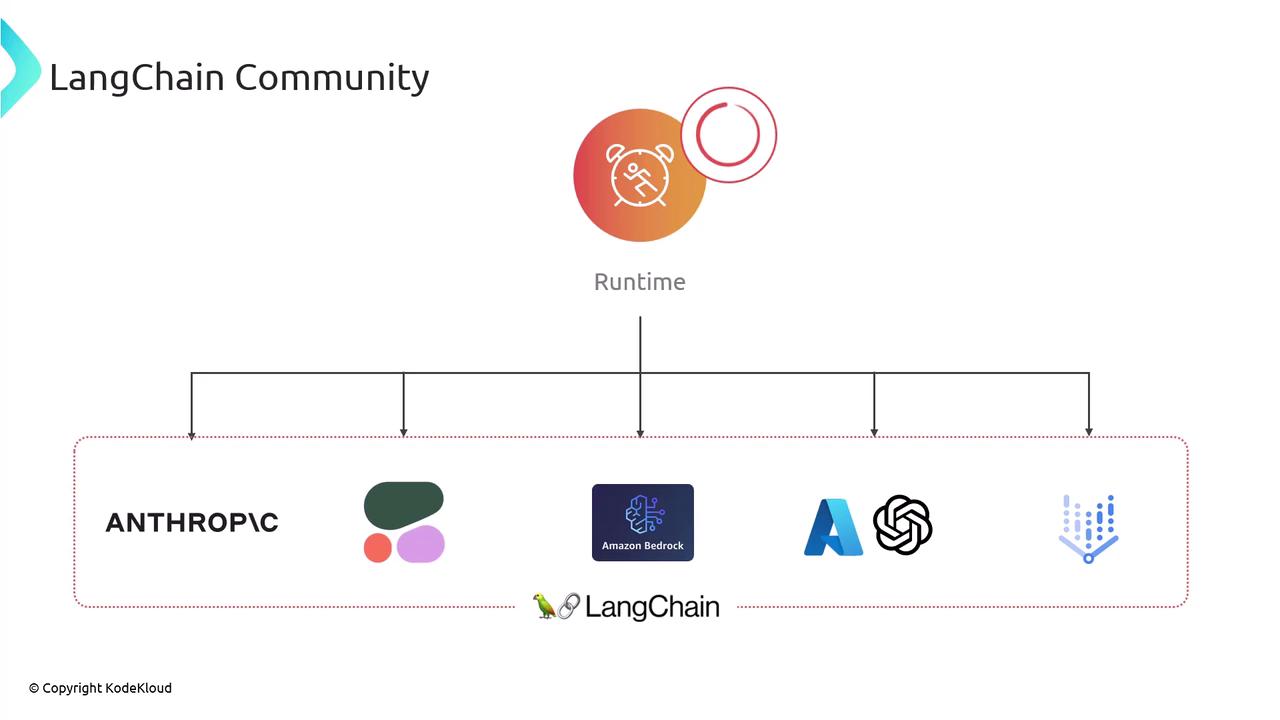

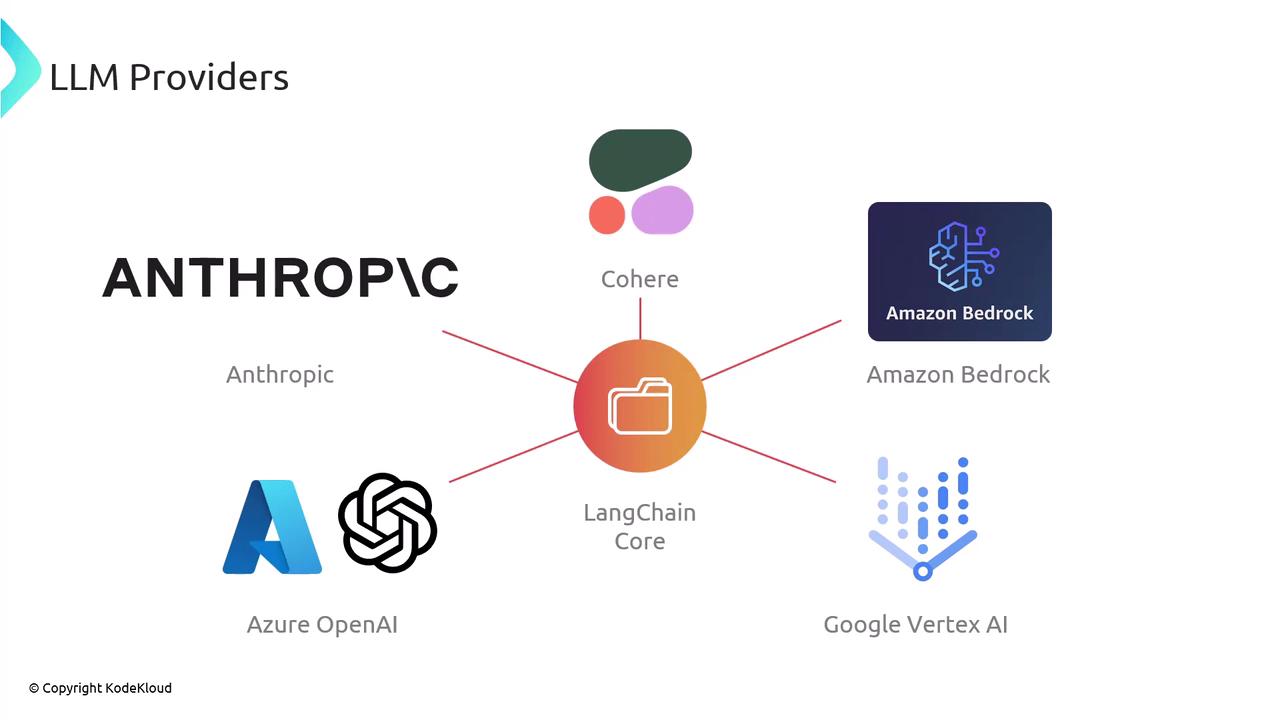

2. LangChain Community

The Community layer builds on Core by providing concrete implementations for popular AI services:

| LLM Provider | LangChain Integration |
|---|---|
| OpenAI | langchain-openai |
| Anthropic | langchain-anthropic |
| Cohere | langchain-cohere |
| Amazon Bedrock | langchain-aws |
| Azure OpenAI | langchain-openai (Azure classes such as `AzureChatOpenAI`) |
| Google Vertex AI | langchain-google-vertexai |

Beyond LLM providers, the Community layer also covers:

- Vector databases (e.g., Pinecone, Weaviate)

- Retriever plugins for semantic search

- Document loaders for PDF, CSV, and more

- Specialized tooling (e.g., SQL agents, Python REPL)

Installing a community package version that is incompatible with your LangChain Core release can lead to version conflicts. Always match the package version with your LangChain Core release.
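One way to spot a mismatch early is to list the installed versions side by side. The following is a minimal sketch using only the standard library; the helper name `installed_versions` is hypothetical, and the package list is an assumption you should adjust to your own project.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Return {package: version string, or None if not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            # The distribution is not present in this environment.
            found[name] = None
    return found


# Names are pip distribution names; extend with the integrations you use.
report = installed_versions(["langchain-core", "langchain-community", "langchain"])
for name, ver in report.items():
    print(f"{name}: {ver or 'not installed'}")
```

The output only reports what is installed. Which versions are compatible with each other still has to be checked against each package's declared dependencies or release notes.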

3. LangChain (Primary Library)

The Primary Library leverages Core and Community to provide high-level building blocks:

- Chains: Pre-built workflows for summarization, Q&A, translation, and more.
- Agents: Frameworks that enable dynamic decision-making using tools.
- Retrieval Strategies: Caching, streaming, and hybrid search patterns.