What Is a Tool?

A tool in LangChain is a configurable wrapper around external functionality: APIs, custom functions, or runtimes like a Python REPL. Instead of relying solely on indexed documents or prompts, tools enable:

- Real-time data retrieval (e.g., stock quotes, weather forecasts)

- Dynamic computation or code execution

- Integration with proprietary or third-party services
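To make the "wrapper" idea concrete, here is a minimal, library-agnostic sketch in plain Python. It is not LangChain's API: the `Tool` class, its fields, and `count_words` are hypothetical names chosen for illustration. The point is only that a tool pairs a callable with a name and a description the LLM can use to decide when to call it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Tool:
    """Hypothetical stand-in for a tool: a named, described callable."""

    name: str
    description: str  # text the model reads to decide when the tool applies
    func: Callable[[str], str]

    def run(self, tool_input: str) -> str:
        # Delegate to the wrapped function; the wrapper adds only metadata.
        return self.func(tool_input)


def count_words(text: str) -> str:
    """Example of 'external functionality': any ordinary function works."""
    return str(len(text.split()))


word_count_tool = Tool(
    name="word_count",
    description="Count the words in a piece of text.",
    func=count_words,
)

if __name__ == "__main__":
    print(word_count_tool.run("tools wrap external functionality"))
```

The same shape applies whether the wrapped function does local computation, as here, or calls out to an API.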

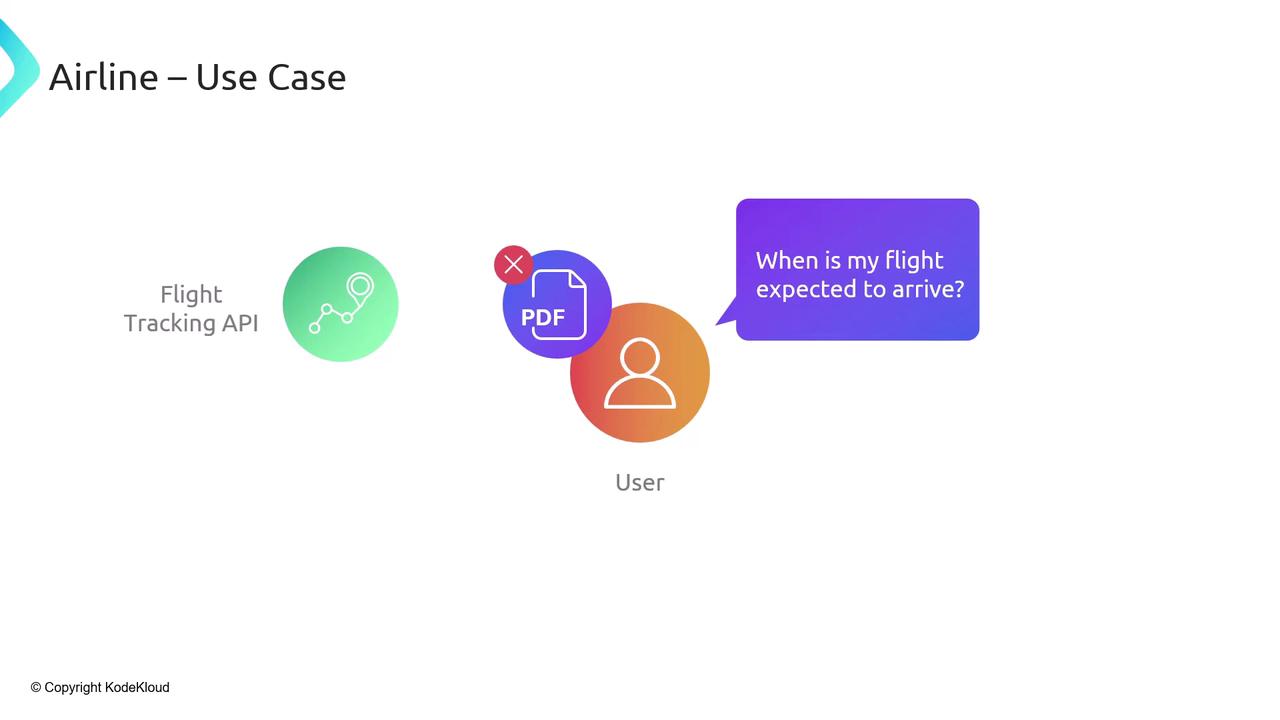

Real-World Example: Airline Chatbot

Imagine an airline support chatbot:

- Baggage Policy: The policy is stored in a PDF, already indexed in your vector database. You use RAG to retrieve and inject the policy text into the prompt, and the LLM answers.

- Flight Arrival Time: The expected arrival isn’t in static documents, so you must call a flight-tracking API for live data. This is exactly where tools come in.
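The flight-arrival case can be sketched as a single function that a tool would wrap. Everything here is an assumption for illustration: the flight number `XY123`, the `_FAKE_FLIGHT_FEED` dictionary, and `get_flight_status` are hypothetical, and the dictionary stands in for a real flight-tracking API so the example runs without network access.

```python
# Hypothetical stand-in for a live flight-tracking API. In a real system this
# lookup would be an HTTP request to whichever provider you use.
_FAKE_FLIGHT_FEED = {
    "XY123": {"status": "delayed", "eta": "18:45"},
    "XY456": {"status": "on time", "eta": "09:10"},
}


def get_flight_status(flight_number: str) -> str:
    """Return a short, LLM-readable status line for one flight."""
    key = flight_number.strip().upper()
    record = _FAKE_FLIGHT_FEED.get(key)
    if record is None:
        # Return a message instead of raising, so the model can relay it.
        return f"No live data found for flight {key}."
    return f"Flight {key} is {record['status']}; expected arrival {record['eta']}."


if __name__ == "__main__":
    print(get_flight_status("xy123"))
```

Returning plain text rather than a raw payload is a design choice in this sketch: the result is injected into the conversation, so it should be something the LLM can quote directly.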

Fetching Live Data with Tools

By plugging a flight-tracking API into your chain, the LLM can request and display up-to-the-minute flight status. You can also stream updates or combine multiple APIs in one workflow.

Built-In Tools in LangChain

LangChain ships with a variety of ready-to-use tools:

- Wikipedia: Fetch article summaries or full pages

- Search: Perform live web searches

- YouTube: Retrieve and summarize video transcripts

- Python REPL: Run snippets of code for computation

- Custom Functions: Wrap any proprietary or third-party API

You can extend these tools with your own wrappers or SDKs to integrate specialized services.
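When several tools sit side by side, something has to map the model's request to the right one. The sketch below shows one way to do that with a plain dictionary, assuming the model emits a request shaped like `{"tool": ..., "input": ...}`. That request shape, the `TOOLS` registry, `dispatch`, and the `calculator` tool are all hypothetical. The calculator uses Python's `ast` module as a restricted stand-in for a code-execution tool, rather than running arbitrary code.

```python
import ast
import operator

# Arithmetic operators the calculator is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculate(expression: str) -> str:
    """Evaluate +, -, *, / over numeric literals without using eval()."""

    def _eval(node: ast.AST):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        raise ValueError("unsupported expression")

    return str(_eval(ast.parse(expression, mode="eval").body))


# Hypothetical registry: tool name -> callable taking and returning a string.
TOOLS = {
    "calculator": calculate,
    "uppercase": lambda text: text.upper(),
}


def dispatch(request: dict) -> str:
    """Run the tool named in the request, or report that it is unknown."""
    tool = TOOLS.get(request.get("tool", ""))
    if tool is None:
        return f"Unknown tool: {request.get('tool')!r}"
    return tool(request.get("input", ""))


if __name__ == "__main__":
    print(dispatch({"tool": "calculator", "input": "2 * (3 + 4)"}))
```

Adding a new integration in this sketch means adding one entry to `TOOLS`. The dispatch logic does not change.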

When to Use RAG vs. Tools

| Capability | RAG (Pre-Indexed) | Tools (Real-Time) |
|---|---|---|
| Data Source | Static documents (PDFs, articles) | Live APIs, streaming endpoints, custom runtimes |
| Latency | Batch-driven (indexing over hours/days) | Synchronous, real-time |
| Use Cases | FAQs, policy lookup, historical data | Flight tracking, stock quotes, on-the-fly compute |
| Integration Complexity | Vector database + retriever + LLM | API client + tool wrapper + LLM |

Don’t use RAG for live data—indexed documents can’t provide up-to-the-minute information. Instead, plug in a real-time tool.

Putting It All Together

Returning to our airline chatbot:

- Baggage Policy → RAG (static, indexed)

- Flight Status → Tools (real-time API calls)
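The split above can be expressed as a small routing function. This is a deliberately naive sketch: in practice the LLM or agent framework usually decides when to call a tool, and the keyword list `LIVE_DATA_HINTS` and the `route` function here are hypothetical stand-ins for that decision.

```python
# Hypothetical keywords suggesting the question needs live data.
LIVE_DATA_HINTS = ("arrival", "arrive", "delayed", "status", "gate")


def route(question: str) -> str:
    """Return 'tool' for questions about live data, otherwise 'rag'."""
    lowered = question.lower()
    if any(hint in lowered for hint in LIVE_DATA_HINTS):
        return "tool"  # answer from a real-time API call
    return "rag"  # answer from pre-indexed documents


if __name__ == "__main__":
    for q in ("What is the baggage policy?", "When does flight XY123 arrive?"):
        print(f"{q} -> {route(q)}")
```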