Ollama REST API Introduction

In this tutorial, you’ll learn how to launch and interact with Ollama’s REST API. We’ll cover:

- Running the API server locally

- Sending requests via HTTP

- Interpreting responses for seamless integration into your applications

Why Use the Ollama REST API?

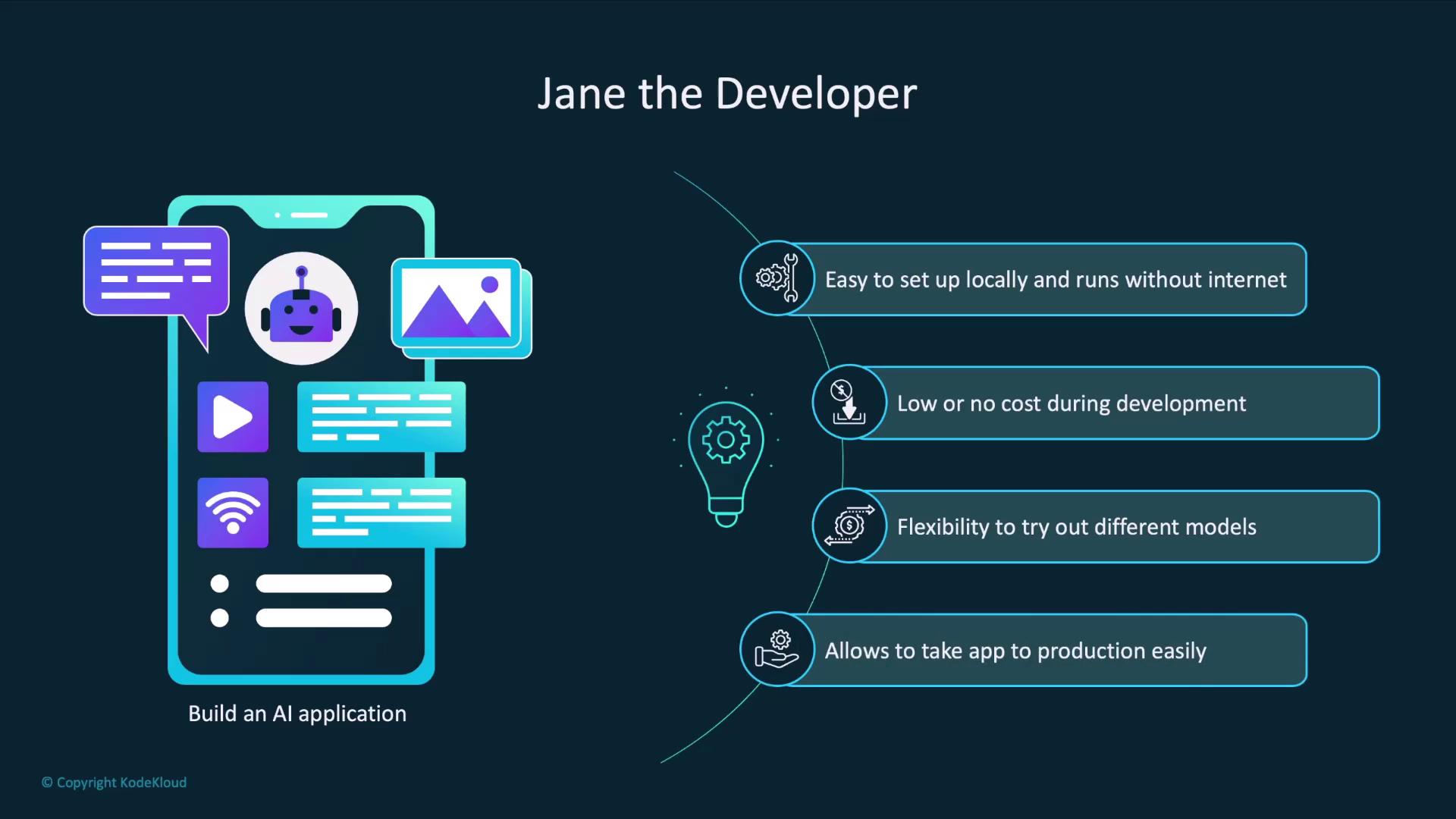

Imagine you’re Jane, a developer building an AI-powered app. Your goals include:

- Quick local setup without internet access

- Zero costs during experimentation

- Easy swapping of LLM models

- A simple transition to production with hosted APIs

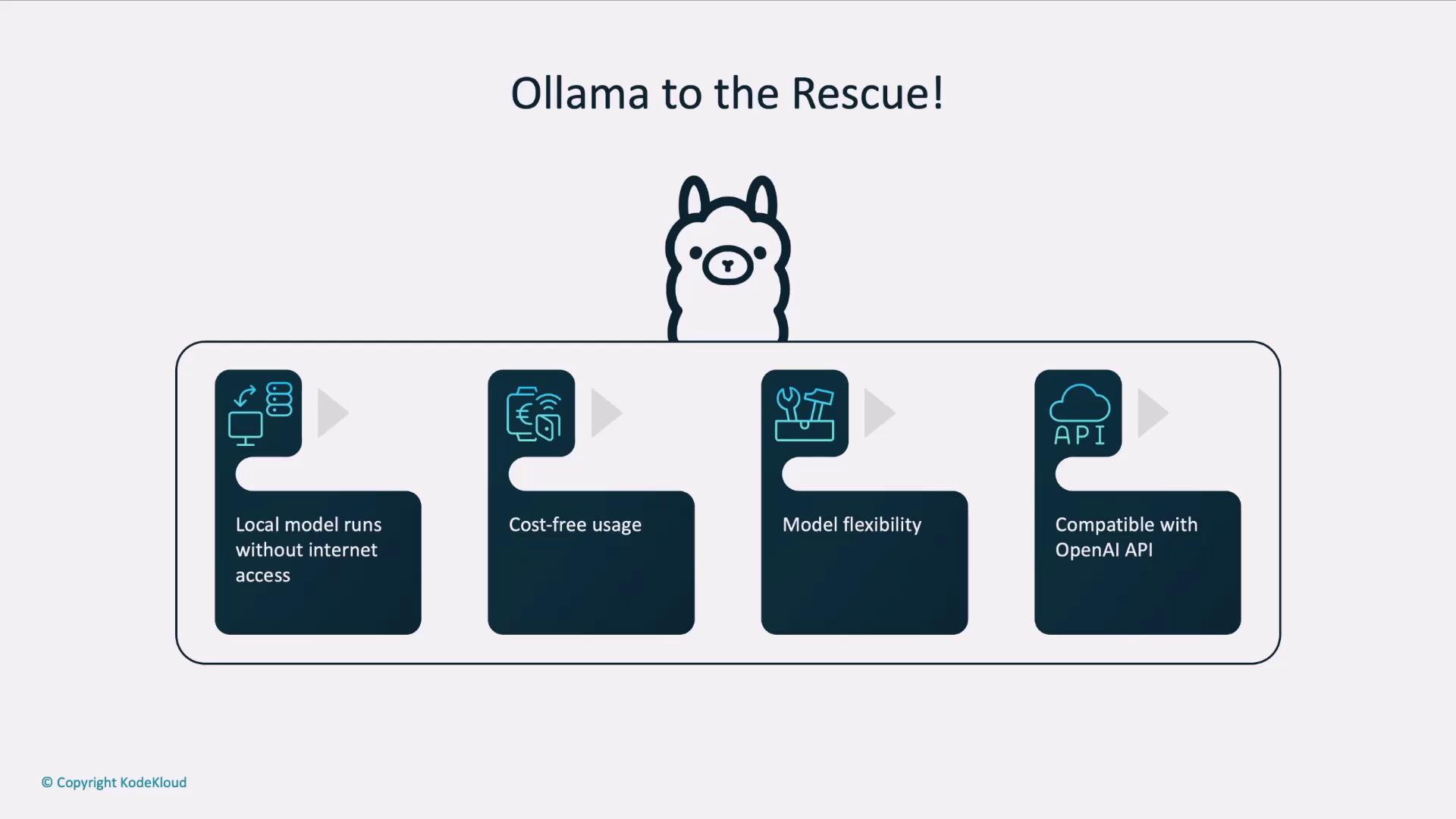

Ollama checks all these boxes:

| Benefit | Description |
|---|---|
| Offline Usage | Run models locally without internet after pulling them once. |
| Free & No Sign-Up | No credit card required to explore and prototype. |
| Model Flexibility | Compare and switch between different LLMs with a single CLI command. |
| Production Compatibility | Swap your local endpoint for the OpenAI API when you’re ready to scale. |

Tip

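When it’s time for production, code written against Ollama’s OpenAI-compatible endpoints (served under /v1) needs little more than a new base URL, API key, and model name to point at OpenAI’s API. Requests to Ollama’s native endpoints, such as /api/generate, use a different schema and would need to be adapted.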
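As a rough illustration of that swap, the sketch below keeps the backend details in one configuration table and builds the same OpenAI-style chat request for either target. It assumes the app talks to Ollama through its OpenAI-compatible /v1 endpoint rather than the native /api routes. The `BACKENDS` table, the helper names, and the hosted model name are illustrative placeholders, not part of the original tutorial.

```python
import json
import os
import urllib.request

# Assumption: both backends accept OpenAI-style chat completion requests.
# Ollama ignores the API key locally; the hosted model name is a placeholder.
BACKENDS = {
    "local": {
        "base_url": "http://localhost:11434/v1",
        "api_key": "ollama",
        "model": "llama3.2",
    },
    "hosted": {
        "base_url": "https://api.openai.com/v1",
        "api_key": os.environ.get("OPENAI_API_KEY", ""),
        "model": "gpt-4o-mini",
    },
}


def build_chat_request(backend: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the chosen backend."""
    cfg = BACKENDS[backend]
    body = json.dumps({
        "model": cfg["model"],
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        url=f"{cfg['base_url']}/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {cfg['api_key']}",
        },
        method="POST",
    )


def chat(backend: str, prompt: str) -> str:
    """Send the request and return the first choice's text."""
    with urllib.request.urlopen(build_chat_request(backend, prompt)) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Calling `chat("local", "Hello")` requires a running Ollama server with the model pulled. Switching to `chat("hosted", "Hello")` changes only the configuration entry, which is the point of keeping the base URL and credentials out of the application logic.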

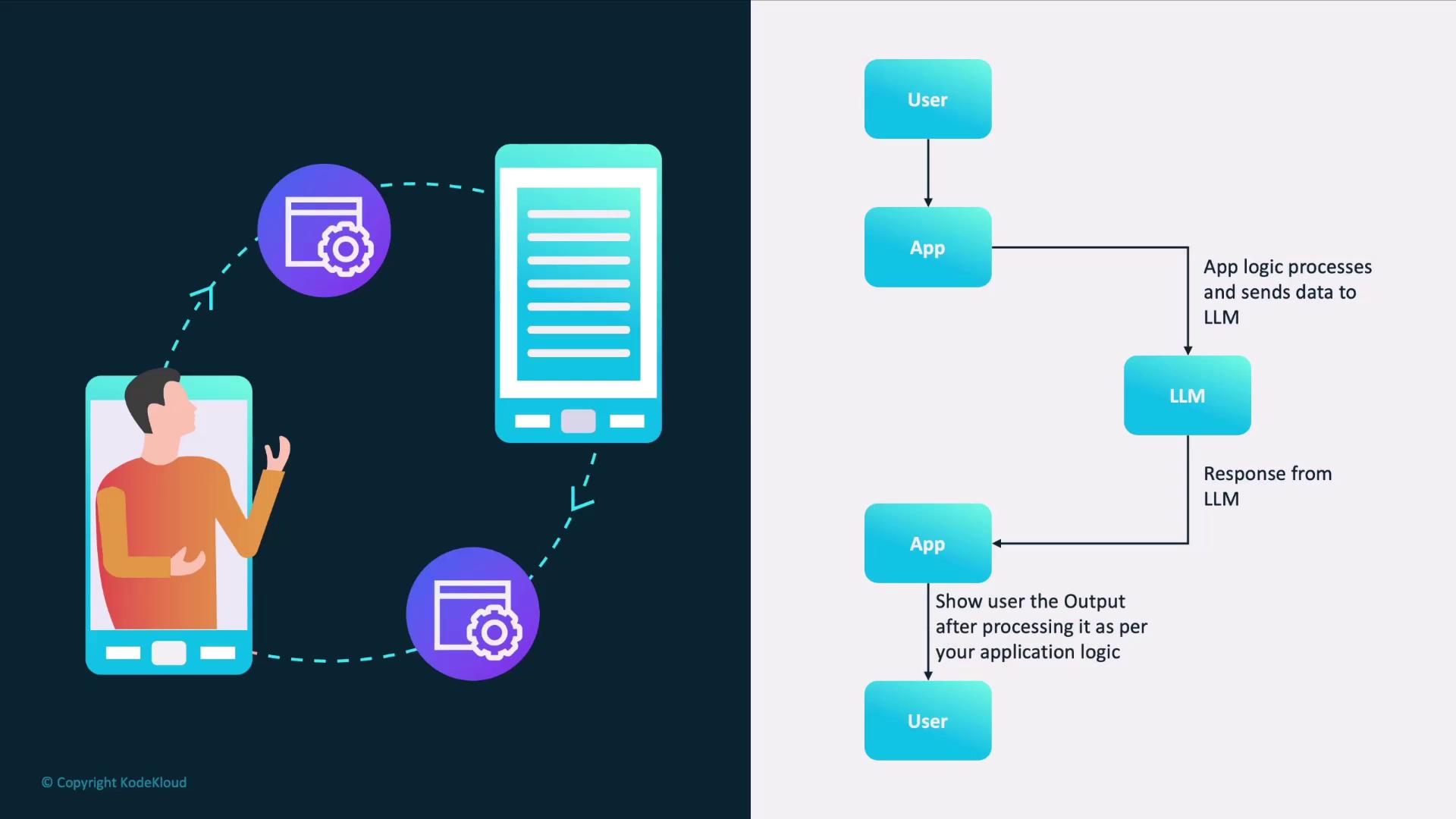

How an AI Application Interacts with an LLM

A typical AI workflow involves:

- User submits input to your app.

- App pre-processes the text (e.g., cleaning the input or filling a prompt template).

- App sends a request to the LLM endpoint.

- LLM generates and returns a response.

- App post-processes the output (e.g., formatting).

- App displays results to the user.

To implement this flow, you need a REST endpoint for both requests and responses. That’s exactly what ollama serve provides.
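The workflow above can be sketched as a small pipeline in which the LLM call is just a function passed in from outside. This is a minimal sketch, not a prescribed design: the function names and the prompt template are hypothetical, and `fake_llm` stands in for a real HTTP call so the flow can be run without a server.

```python
from typing import Callable


def preprocess(user_input: str) -> str:
    """Normalize whitespace and wrap the input in a (hypothetical) prompt template."""
    cleaned = " ".join(user_input.split())
    return f"Answer concisely: {cleaned}"


def postprocess(raw_output: str) -> str:
    """Tidy the model output before it is shown to the user."""
    return raw_output.strip()


def handle_request(user_input: str, call_llm: Callable[[str], str]) -> str:
    """Run one request through pre-processing, the LLM call, and post-processing."""
    prompt = preprocess(user_input)
    raw = call_llm(prompt)
    return postprocess(raw)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM endpoint, used only for local experiments."""
    return f"  (model reply to: {prompt})\n"
```

Because `handle_request` only depends on a callable, the stand-in can later be replaced by a function that posts to the Ollama endpoint without touching the rest of the pipeline.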

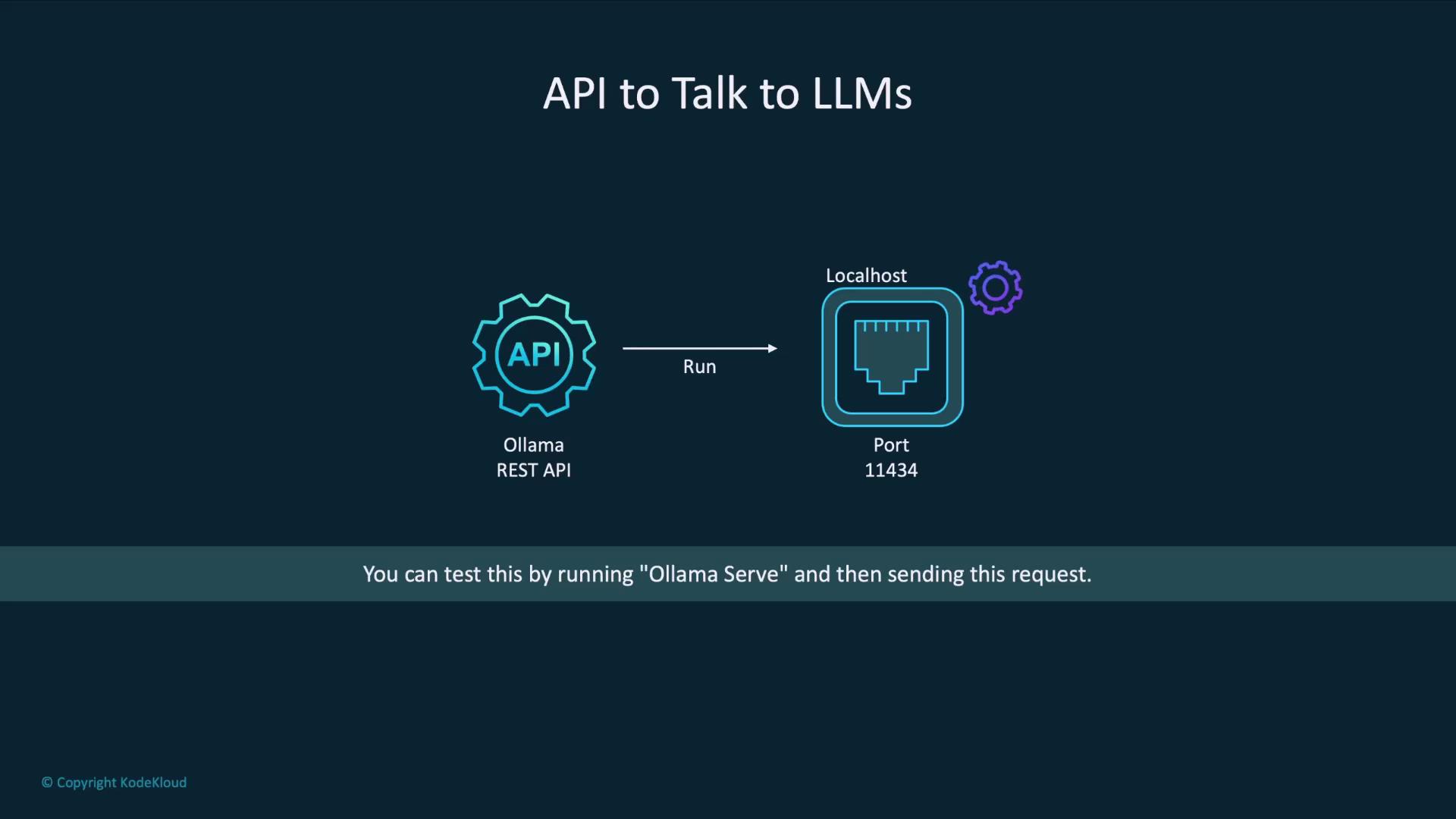

Getting Started: Launching the Ollama Server

By default, Ollama’s REST API runs on port 11434. Start the server with:

    ollama serve

Once the service is up, you can send HTTP requests to http://localhost:11434/api.

Warning

Ensure port 11434 is not used by another service. If it is, stop that process or bind Ollama to a different address by setting the OLLAMA_HOST environment variable, for example: OLLAMA_HOST=127.0.0.1:11435 ollama serve.
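One way to check whether something is already listening on the port before starting the server is a quick TCP connection attempt. The helper below is a hypothetical convenience function written for this tutorial, using only Python's standard `socket` module.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0
```

For example, `port_in_use(11434)` can be called before launching the server. A `True` result may simply mean that Ollama is already running, so it is worth confirming which process owns the port before stopping anything.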

Example: Generating a Poem with curl

Here’s how to call the llama3.2 model to compose a poem:

    curl http://localhost:11434/api/generate -d '{
      "model": "llama3.2",
      "prompt": "Compose a poem on LLMs",
      "stream": false
    }'

Sample JSON response:

    {
      "model": "llama3.2",
      "created_at": "2025-01-08T06:19:15.039927Z",
      "response": "In silicon halls, where data reigns\nA new breed of mind, with logic gains\n...A future where language, is a tool for all\nNot a gatekeeper, that stands at the wall\nSo let us nurture these models with care\nAnd guide them gently, through the digital air\nFor in their potential, we find our own\nA world of wonder, where knowledge is sown.",
      "done": true,
      "done_reason": "stop"
    }
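The same request can also be sent from application code. The sketch below mirrors the curl call using only Python's standard library. The helper names and the timeout value are assumptions made for illustration, and it expects the server to be running on the default port with llama3.2 already pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build the same POST request as the curl example, with streaming disabled."""
    body = json.dumps({
        "model": model,
        "prompt": prompt,
        "stream": False,
    }).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3.2", timeout: float = 120.0) -> dict:
    """Send the request and return the decoded JSON payload."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)
```

With the server up, `generate("Compose a poem on LLMs")["response"]` returns the generated text. Local generation can be slow on modest hardware, which is why the sketch uses a generous timeout.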

Response Fields

| Field | Description |
|---|---|
| model | The name of the model that generated the output. |
| created_at | ISO 8601 timestamp of when the response was created. |
| response | The generated text from the model. |
| done | Boolean indicating whether generation has completed. |
| done_reason | The reason generation stopped (e.g., stop, length). |

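Additional diagnostic fields also appear in the payload, including token counts (prompt_eval_count, eval_count) and timing metrics reported in nanoseconds (total_duration, load_duration, prompt_eval_duration, eval_duration). They are useful for performance tuning, but most applications can safely ignore them.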
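As a small example of putting the diagnostic fields to use, the sketch below extracts the generated text and derives a tokens-per-second figure. It assumes the payload includes `eval_count` (tokens generated) and `eval_duration` (in nanoseconds), and treats both as optional. The `summarize` helper is hypothetical.

```python
def summarize(payload: dict) -> dict:
    """Pull the main fields out of a generate response and estimate throughput."""
    eval_count = payload.get("eval_count")
    eval_duration_ns = payload.get("eval_duration")

    tokens_per_second = None
    if eval_count and eval_duration_ns:
        # eval_duration is assumed to be in nanoseconds.
        tokens_per_second = eval_count / (eval_duration_ns / 1e9)

    return {
        "text": payload.get("response", ""),
        "finished": payload.get("done", False),
        "done_reason": payload.get("done_reason"),
        "tokens_per_second": tokens_per_second,
    }
```

A `done_reason` of length rather than stop suggests the output was cut off by a token limit, which an application may want to surface to the user or handle by retrying with different settings.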

Next Steps

You’ve now set up the Ollama REST API and tested a simple generate endpoint. In the following lessons, we’ll explore:

- Streaming responses for real-time applications

- Custom prompts and system messages

- Advanced endpoints for embeddings, classifications, and more
