Concurrent Programming

Welcome to this comprehensive lesson on concurrent programming with Rust. In this guide, you'll learn how Rust handles concurrency and how to leverage its powerful features to build efficient, responsive applications. We'll begin by discussing the importance of concurrency, compare sequential and concurrent programming, and then dive into threads, processes, and asynchronous programming in Rust. You'll also discover the differences between concurrency and parallelism.

By the end of this lesson, you will have a solid understanding of concurrent programming concepts and the know-how to apply them effectively in your Rust projects.

Motivation for Concurrency

Modern applications must handle multiple tasks simultaneously. For instance, while you type or browse the internet, your device can play music in the background and download files without any lag. This smooth multitasking experience is essential not only for desktop and mobile applications but also for server-side applications handling numerous client requests concurrently.

Consider a music application, which seamlessly performs multiple tasks:

- Streams music data from a server.

- Processes data into a playable format.

- Sends the audio to the system's sound hardware.

- Interacts with the user interface to respond to actions like play, pause, or skip, and updates UI elements such as song titles and progress bars.

Achieving smooth performance in such an application requires effective concurrent programming.

Sequential Execution

Sequential execution is the default programming model where each instruction is executed one after the other. Consider the following example:

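```rust
do_a_thing();       // runs to completion first
println!("hello!"); // starts only after do_a_thing returns
do_another_thing(); // starts only after the message is printed
```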

Here, each function call completes before the next one begins. Sequential execution is easy to reason about, but it can be inefficient, especially when a call such as println! performs blocking I/O (here, writing to standard output). While the operation is in progress, the thread simply waits and the program makes no progress, so CPU time that could have gone to other work is wasted.

I/O operations such as file reads or network requests usually take far longer than in-memory computations. In a sequential model, the processor waits for each of them to complete, which reduces overall system efficiency and responsiveness.
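To make the cost concrete, here is a minimal sketch that uses std::thread::sleep as a stand-in for a blocking I/O call. This is an assumption for illustration: real code would read a file or wait on a socket. The helper names simulated_io and run_sequentially are hypothetical. Because each call must finish before the next begins, the total time is at least the sum of the individual delays.

```rust
use std::thread;
use std::time::{Duration, Instant};

// Hypothetical stand-in for a blocking I/O call such as a network request:
// the calling thread is simply put to sleep for `delay`.
fn simulated_io(delay: Duration) {
    thread::sleep(delay);
}

// Runs the simulated calls one after another and returns the wall-clock time.
// Each call must complete before the next one starts.
fn run_sequentially(delays: &[Duration]) -> Duration {
    let start = Instant::now();
    for &delay in delays {
        simulated_io(delay);
    }
    start.elapsed()
}

fn main() {
    let delays = [Duration::from_millis(100); 3];
    // Expect at least ~300 ms: the delays add up, and the thread does
    // nothing else while it waits.
    println!("sequential: {:?}", run_sequentially(&delays));
}
```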

Processes and Threads

Operating systems provide processes and threads as fundamental concurrency constructs:

- Processes: Running instances of programs, each with its own isolated memory space.

- Threads: Smaller units of execution within a process; all threads in a process share its memory space.

The OS schedules these threads and processes on CPU cores, enabling them to run concurrently.
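Because threads live inside one process, they can share data directly. Here is a minimal sketch using only the standard library: several threads increment one counter, with Arc providing shared ownership and Mutex preventing lost updates. The function name shared_counter is hypothetical. Separate processes, by contrast, do not share memory by default and need explicit inter-process communication to exchange data.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

// Spawns `workers` threads in the same process. They all update one counter
// living in the process's shared memory; Arc shares ownership of it and the
// Mutex serialises access so that no increment is lost.
fn shared_counter(workers: usize, increments: usize) -> usize {
    let counter = Arc::new(Mutex::new(0usize));
    let mut handles = Vec::with_capacity(workers);
    for _ in 0..workers {
        let counter = Arc::clone(&counter);
        handles.push(thread::spawn(move || {
            for _ in 0..increments {
                *counter.lock().unwrap() += 1;
            }
        }));
    }
    for handle in handles {
        handle.join().expect("worker thread panicked");
    }
    let total = *counter.lock().unwrap();
    total
}

fn main() {
    println!("total = {}", shared_counter(4, 1_000)); // prints "total = 4000"
}
```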

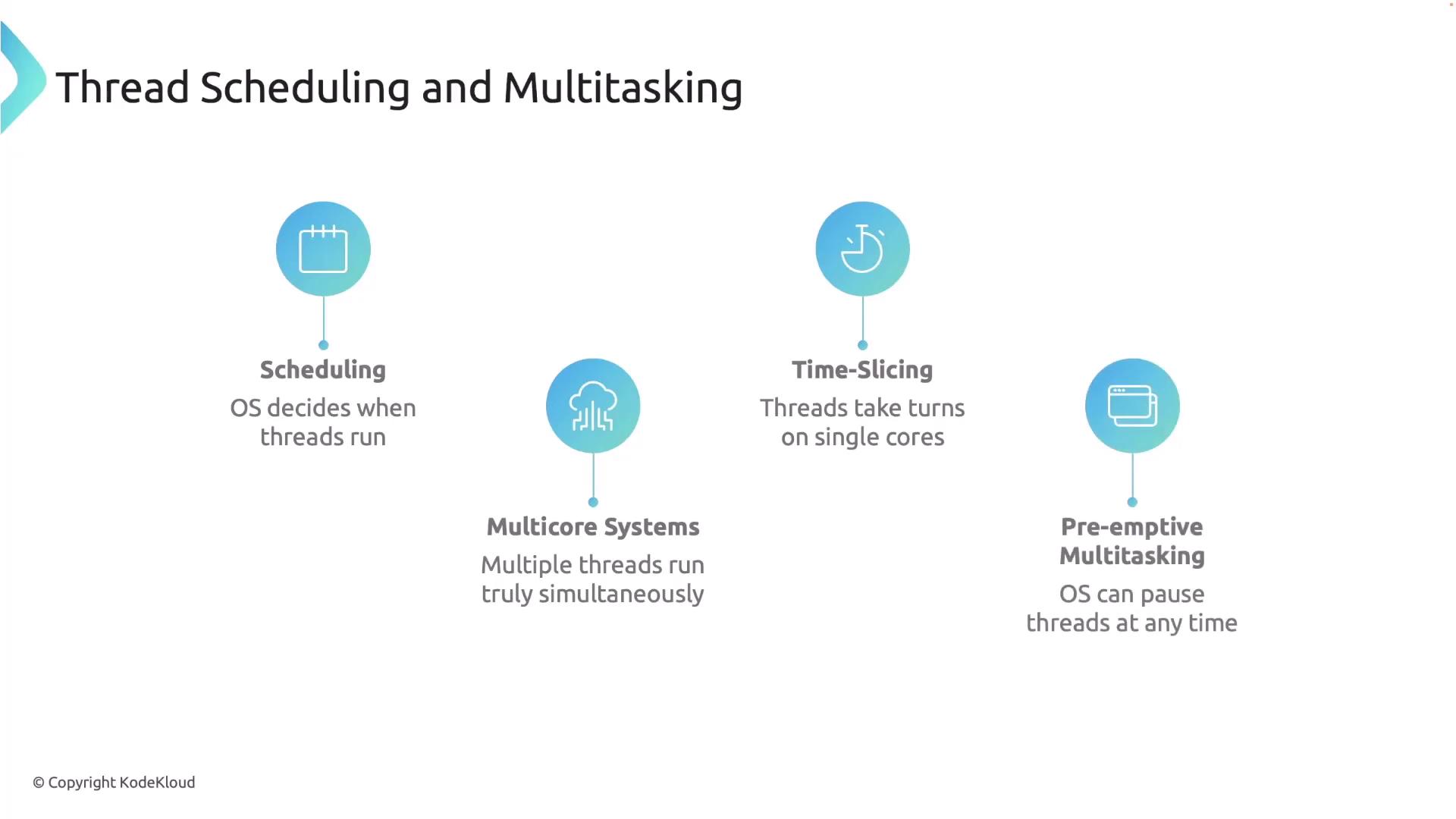

Thread Scheduling and Multitasking

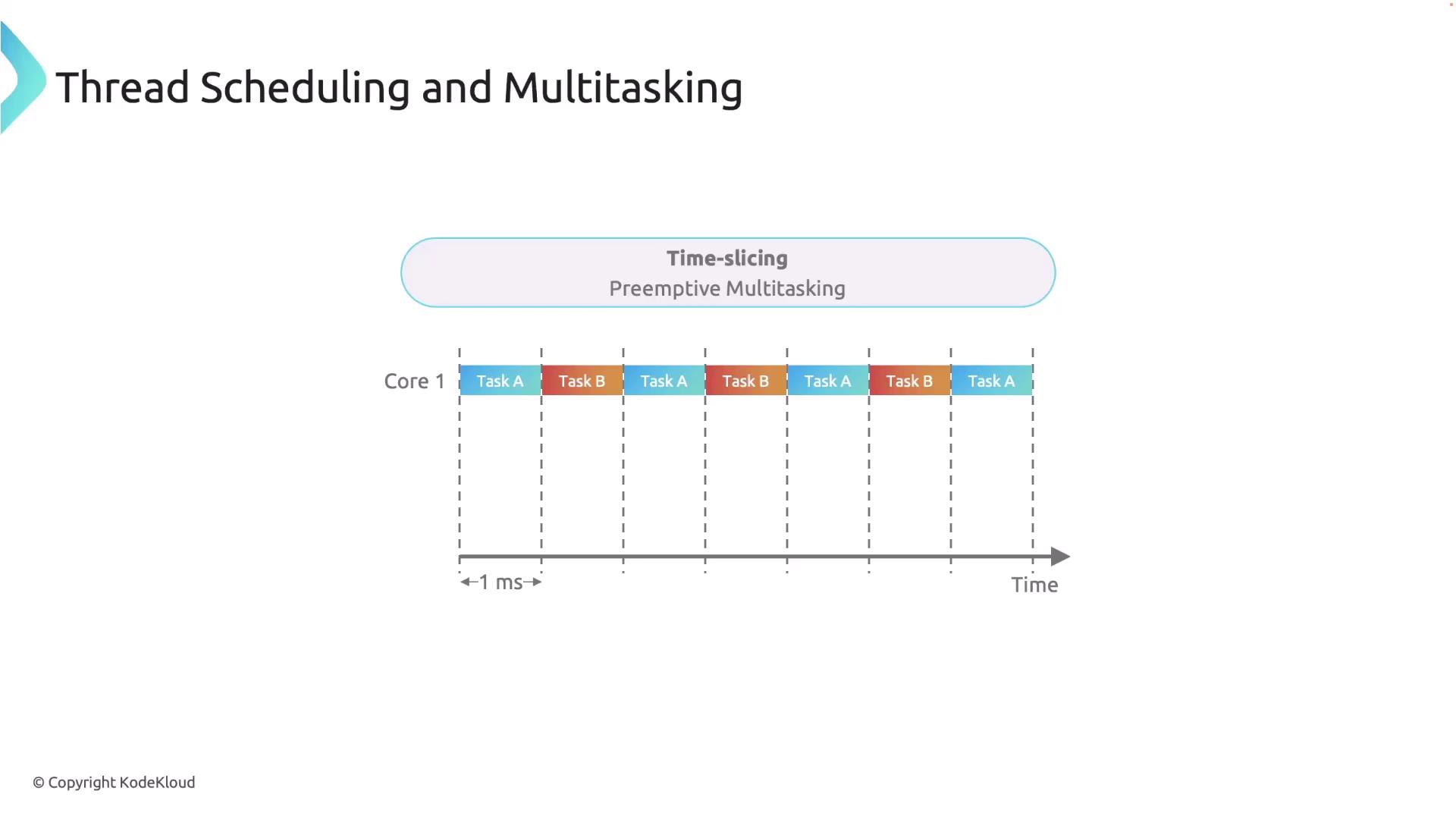

Thread scheduling is how an OS decides the execution order and duration of threads. On a multicore system, multiple threads can run at the same time. However, if there are more threads than available cores, the OS employs:

- Time-slicing: Allocating short time intervals to each thread on a core.

- Preemptive multitasking: Interrupting a running thread (for example, when its time slice expires) to switch to another, so that no single thread can monopolize a core.

Modern multicore processors allow true parallel execution, and even on a single core, rapid time-slicing creates the illusion of simultaneous operations.
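Here is a short sketch, assuming only the standard library. It asks the OS how many threads the machine can run in parallel (std::thread::available_parallelism), then spawns twice that many CPU-bound threads. The OS time-slices the surplus threads across the available cores, so every thread still finishes. The function name oversubscribe and the busy-loop workload are illustrative.

```rust
use std::thread;

// Spawns twice as many CPU-bound threads as the machine reports it can run
// in parallel; the OS time-slices them across the available cores.
// Returns (reported parallelism, number of threads that finished).
fn oversubscribe() -> (usize, usize) {
    // Fall back to 1 if the parallelism cannot be determined.
    let cores = thread::available_parallelism().map(|n| n.get()).unwrap_or(1);
    let handles: Vec<_> = (0..cores * 2)
        .map(|i| {
            thread::spawn(move || {
                // Busy work so each thread actually needs CPU time.
                (0..1_000_000u64).fold(i as u64, |acc, x| acc.wrapping_add(x))
            })
        })
        .collect();
    let mut finished = 0;
    for handle in handles {
        handle.join().expect("worker thread panicked");
        finished += 1;
    }
    (cores, finished)
}

fn main() {
    let (cores, finished) = oversubscribe();
    println!("{cores} cores reported, {finished} threads completed");
}
```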

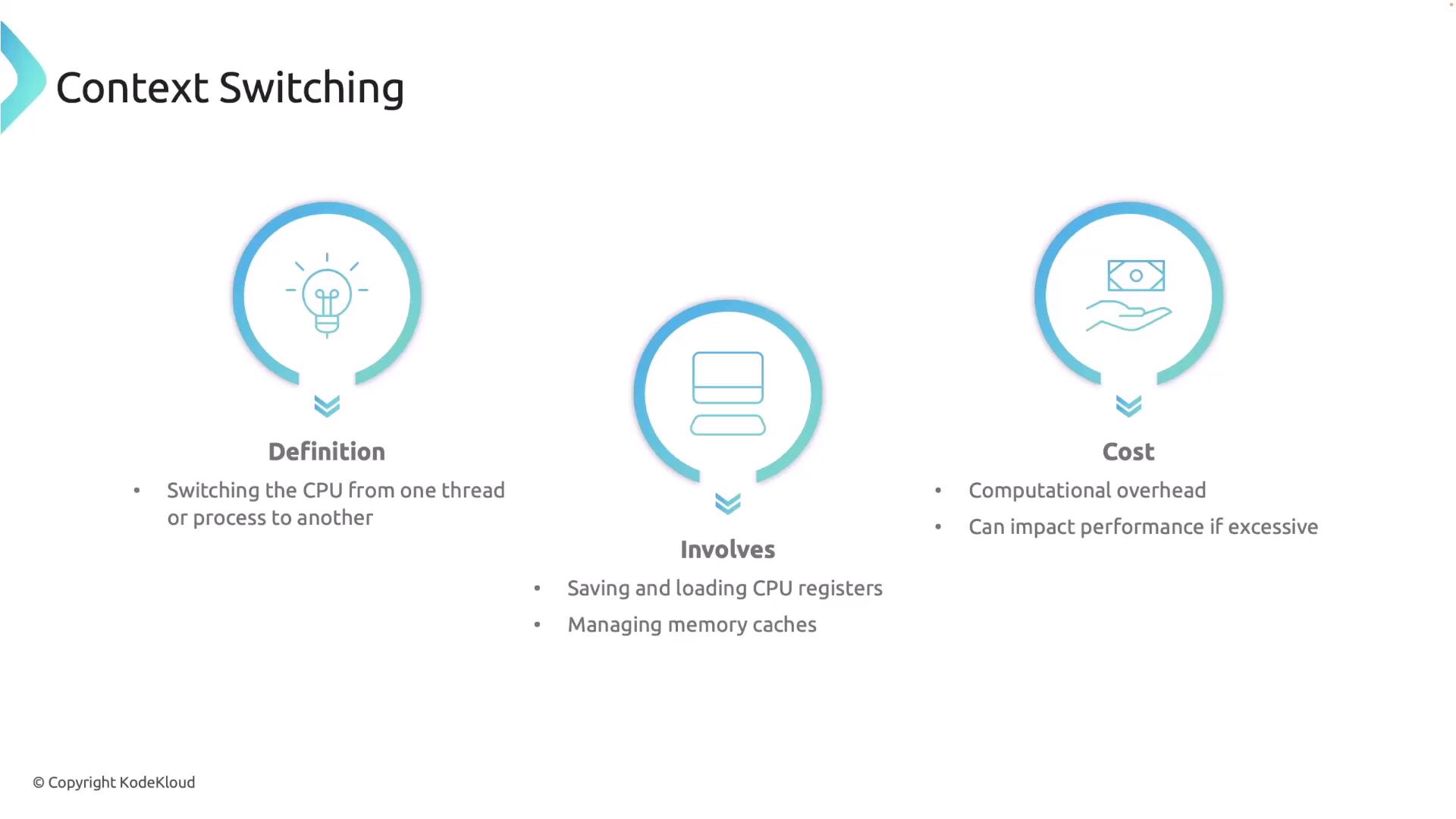

Context Switching

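Context switching occurs when the OS stops running one thread and starts another: it saves the current thread's state (such as its registers and program counter) and loads the saved state of the next thread. Context switching is essential, but it is not free. Besides the direct cost of saving and restoring state, the incoming thread often finds the CPU caches filled with the previous thread's data, so it runs more slowly until the caches warm up again.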
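Context-switch cost is hard to observe directly, but a ping-pong test gives a rough idea of it. In this test, two threads pass a token back and forth over std::sync::mpsc channels, so each round trip usually makes the OS block one thread and wake the other. This is a hedged sketch, not a benchmark: the measured time also includes channel overhead and varies widely across operating systems and hardware. The function name ping_pong is hypothetical.

```rust
use std::sync::mpsc;
use std::thread;
use std::time::Instant;

// Passes a token between two threads `rounds` times and returns its final
// value. Each `recv` blocks its thread until the other side sends, which
// usually means the OS must suspend one thread and resume the other.
fn ping_pong(rounds: u32) -> u32 {
    let (to_worker, worker_rx) = mpsc::channel::<u32>();
    let (to_main, main_rx) = mpsc::channel::<u32>();

    let worker = thread::spawn(move || {
        // Echo each token back incremented; ends when `to_worker` is dropped.
        for token in worker_rx {
            if to_main.send(token + 1).is_err() {
                break;
            }
        }
    });

    let mut token = 0;
    for _ in 0..rounds {
        to_worker.send(token).unwrap();
        token = main_rx.recv().unwrap();
    }
    drop(to_worker); // close the channel so the worker thread can exit
    worker.join().expect("worker thread panicked");
    token
}

fn main() {
    let rounds = 10_000;
    let start = Instant::now();
    let token = ping_pong(rounds);
    // Rough per-round-trip cost, including channel overhead.
    let per_round = start.elapsed() / rounds;
    println!("{token} round trips, ~{per_round:?} each");
}
```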

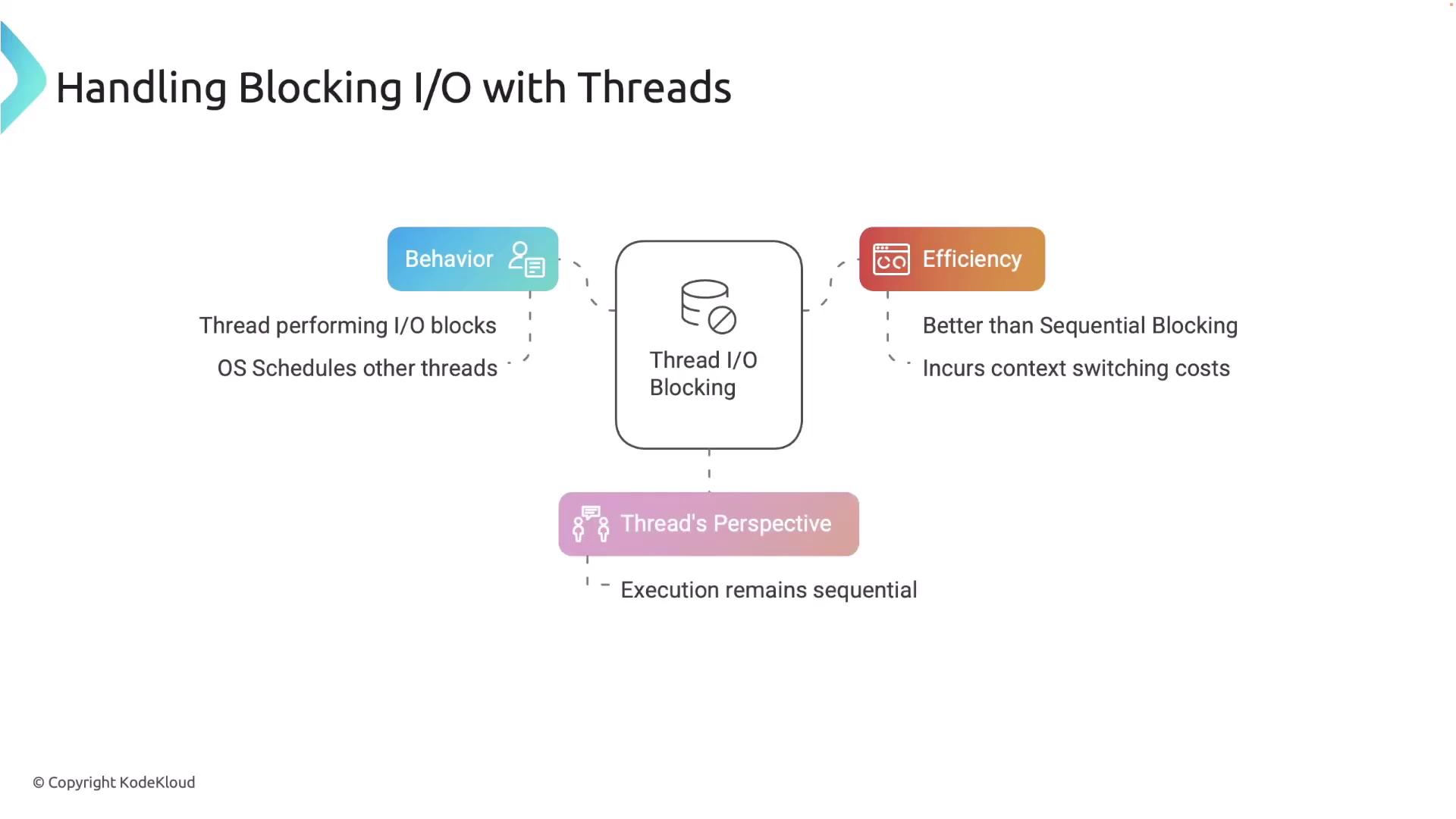

Handling Blocking I/O with Threads

When a thread encounters a blocking I/O operation, the OS pauses that thread and schedules another. This allows other threads to utilize the CPU during waiting periods, though it does introduce context switching overhead.
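Here is a minimal sketch of this behaviour, assuming std::thread::sleep as a stand-in for a blocking I/O call. Each simulated call runs on its own thread, so while one thread is blocked the others keep going. The helper run_on_threads is hypothetical. With three 100 ms waits, the total should be close to 100 ms rather than 300 ms. The price is creating, scheduling, and switching between three OS threads.

```rust
use std::thread;
use std::time::{Duration, Instant};

// Runs each simulated blocking call on its own OS thread. While one thread
// is blocked in `sleep`, the OS schedules the others, so the waits overlap.
fn run_on_threads(delays: &[Duration]) -> Duration {
    let start = Instant::now();
    let handles: Vec<_> = delays
        .iter()
        .map(|&delay| thread::spawn(move || thread::sleep(delay)))
        .collect();
    for handle in handles {
        handle.join().expect("worker thread panicked");
    }
    start.elapsed()
}

fn main() {
    let delays = [Duration::from_millis(100); 3];
    // Expect roughly the longest single delay (~100 ms), not the ~300 ms sum.
    println!("threaded: {:?}", run_on_threads(&delays));
}
```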

Limitations of Thread-Based Concurrency

While thread-based concurrency enhances responsiveness, it has notable limitations:

- High overhead from frequent context switching.

- Increased complexity when managing a large number of threads.

- Scalability issues, particularly for applications like TCP servers requiring thousands of concurrent connections.

Warning

Excessive use of threads can overwhelm the system, making thread-based models less suitable for massive concurrency scenarios.

Asynchronous Programming in Rust

Asynchronous programming offers an alternative concurrency model that is managed within the application rather than relying solely on OS threads. Instead of spawning heavy threads, async programming uses lightweight tasks that yield control voluntarily, enabling cooperative multitasking. This design minimizes overhead while efficiently handling many concurrent operations.

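Rust's async/await syntax is particularly well suited to high-performance, I/O-intensive applications. The standard library defines the Future trait but does not include an executor, so running async code in practice requires an async runtime such as Tokio.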
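To keep the example dependency-free, this sketch includes a deliberately tiny, hypothetical block_on executor. It polls a single future on the current thread and parks the thread while the future is pending. The sketch only illustrates what async fn and .await do. It is not a substitute for a real runtime.

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

// Waker that unparks the thread blocked inside `block_on`.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

// Toy executor (hypothetical, for illustration only): polls one future on
// the current thread and parks the thread whenever it is not ready yet.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(output) => return output,
            Poll::Pending => thread::park(),
        }
    }
}

// Calling an async fn only creates a future; its body runs when polled.
async fn add(a: u32, b: u32) -> u32 {
    a + b
}

async fn compute() -> u32 {
    let x = add(2, 3).await; // waits for the inner future to complete
    add(x, 10).await
}

fn main() {
    println!("{}", block_on(compute())); // prints 15
}
```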

Cooperative Multitasking

In cooperative multitasking, tasks run until they reach a point where they must wait (for example, on an I/O operation) and then yield control back to the scheduler. Unlike preemptive multitasking, nothing interrupts a task between yield points. The flip side is that a task that never yields keeps every other task on the same thread waiting, so long-running computations should yield control periodically.
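The sketch below illustrates cooperative multitasking on a single thread using only the standard library. A hypothetical YieldNow future returns Pending exactly once, which acts as an explicit yield point. A toy round-robin scheduler (run_all, also hypothetical) polls each task in turn, and a task keeps running until it reaches a yield point. Real runtimes rely on wakers to avoid busy polling; this scheduler ignores them for brevity.

```rust
use std::cell::RefCell;
use std::future::Future;
use std::pin::Pin;
use std::rc::Rc;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

// Hypothetical yield point: Pending on the first poll, Ready on the next.
struct YieldNow(bool);

impl Future for YieldNow {
    type Output = ();
    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        if self.0 {
            Poll::Ready(())
        } else {
            self.0 = true;
            cx.waker().wake_by_ref(); // ask to be polled again
            Poll::Pending
        }
    }
}

// Waker that does nothing: the scheduler below simply re-polls every task.
struct NoopWake;

impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

type Task = Pin<Box<dyn Future<Output = ()>>>;

// Single-threaded round-robin scheduler: each pass polls every task once.
// A task runs uninterrupted until it returns Pending at a yield point.
fn run_all(mut tasks: Vec<Task>) {
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    while !tasks.is_empty() {
        // Keep only the tasks that have not finished yet.
        tasks.retain_mut(|task| task.as_mut().poll(&mut cx).is_pending());
    }
}

// Hypothetical task: records a step, then voluntarily yields.
async fn worker(name: &'static str, steps: u32, log: Rc<RefCell<Vec<String>>>) {
    for i in 0..steps {
        log.borrow_mut().push(format!("{name}{i}"));
        YieldNow(false).await;
    }
}

fn demo() -> Vec<String> {
    let log = Rc::new(RefCell::new(Vec::new()));
    let mut tasks: Vec<Task> = Vec::new();
    tasks.push(Box::pin(worker("A", 3, Rc::clone(&log))));
    tasks.push(Box::pin(worker("B", 2, Rc::clone(&log))));
    run_all(tasks);
    let entries = log.borrow().clone();
    entries
}

fn main() {
    println!("{:?}", demo()); // prints ["A0", "B0", "A1", "B1", "A2"]
}
```

The interleaved output shows two tasks sharing one thread. Each task gives up control only at its own yield points.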

Asynchronous I/O

Asynchronous I/O allows tasks to initiate I/O operations without blocking execution. When an async I/O operation is started, the task yields control, the async runtime tracks the pending operation, and the task is notified (and scheduled again) once the operation completes. Meanwhile the thread is free to run other tasks, which improves CPU utilization and application throughput.
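The mechanism can be sketched with the standard library alone, under clearly artificial assumptions. A hypothetical FakeRead future starts its "I/O" on a helper thread, which stands in for the operating system or the runtime's I/O driver, and immediately returns Pending. When the data is ready, the helper calls the stored Waker. A small block_on executor parks its thread until that wake-up arrives. Real runtimes such as Tokio rely on OS readiness mechanisms (for example epoll or kqueue) rather than a thread per operation.

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::{Arc, Mutex};
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};
use std::time::Duration;

// State shared between the future and the helper thread.
struct Shared {
    data: Option<String>,
    waker: Option<Waker>,
}

// Hypothetical async read; its helper thread stands in for the OS or the
// runtime's I/O driver completing the operation in the background.
struct FakeRead {
    shared: Arc<Mutex<Shared>>,
    started: bool,
    delay: Duration,
}

fn fake_read(delay: Duration) -> FakeRead {
    let shared = Arc::new(Mutex::new(Shared { data: None, waker: None }));
    FakeRead { shared, started: false, delay }
}

impl Future for FakeRead {
    type Output = String;

    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<String> {
        {
            let mut s = self.shared.lock().unwrap();
            if let Some(data) = s.data.take() {
                return Poll::Ready(data); // the operation has completed
            }
            // Remember whom to notify when the data arrives.
            s.waker = Some(cx.waker().clone());
        }
        if !self.started {
            // Initiate the "I/O" without blocking this task.
            self.started = true;
            let shared = Arc::clone(&self.shared);
            let delay = self.delay;
            thread::spawn(move || {
                thread::sleep(delay); // the operation is in flight
                let waker = {
                    let mut s = shared.lock().unwrap();
                    s.data = Some(String::from("payload"));
                    s.waker.take()
                };
                if let Some(w) = waker {
                    w.wake(); // completion notification
                }
            });
        }
        Poll::Pending // yield instead of waiting
    }
}

// Wakes the thread parked inside `block_on`.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

// Toy single-future executor: parks until the future's waker is called.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(output) => return output,
            Poll::Pending => thread::park(),
        }
    }
}

async fn handle_request() -> String {
    let data = fake_read(Duration::from_millis(50)).await;
    format!("got {data}")
}

fn main() {
    println!("{}", block_on(handle_request())); // prints "got payload"
}
```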

Comparing Blocking I/O with Threads vs. Async I/O with Tasks

| I/O Model | Description | Resource Impact |
|---|---|---|
| Blocking I/O with Threads | A thread blocks during an I/O operation, and the OS schedules another thread. | Higher resource consumption and overhead |
| Async I/O with Tasks | A task yields control when I/O is initiated; the async runtime, not the OS scheduler, decides which task runs next. | Lower overhead and high scalability |

Combining Threads and Async Programming

It is possible to combine the strengths of threads and async programming. For example, async can handle high-concurrency I/O-bound tasks while CPU-bound computations can be offloaded to separate threads. A common use case is a web server that processes HTTP requests asynchronously and delegates heavy data processing to worker threads.
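Here is a dependency-free sketch of the delegation pattern. A hypothetical offload helper runs a CPU-bound closure on a dedicated OS thread and returns a future that completes when the result is ready, so the async code only has to await it. Tokio offers a comparable facility, tokio::task::spawn_blocking. The version below is an illustration, not Tokio's implementation, and the sum_of_squares workload is likewise illustrative.

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::{Arc, Mutex};
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

// Result slot shared by the worker thread and the awaiting task.
struct Slot<T> {
    value: Option<T>,
    waker: Option<Waker>,
}

// Future returned by the hypothetical `offload` helper.
struct Offload<T> {
    slot: Arc<Mutex<Slot<T>>>,
}

// Runs `f` on a dedicated OS thread so CPU-bound work does not stall the
// async task; the returned future resolves to `f`'s result.
fn offload<T, F>(f: F) -> Offload<T>
where
    F: FnOnce() -> T + Send + 'static,
    T: Send + 'static,
{
    let slot = Arc::new(Mutex::new(Slot { value: None, waker: None }));
    let worker_slot = Arc::clone(&slot);
    thread::spawn(move || {
        let value = f();
        let waker = {
            let mut s = worker_slot.lock().unwrap();
            s.value = Some(value);
            s.waker.take()
        };
        if let Some(w) = waker {
            w.wake(); // tell the awaiting task the result is ready
        }
    });
    Offload { slot }
}

impl<T> Future for Offload<T> {
    type Output = T;

    fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<T> {
        let mut s = self.slot.lock().unwrap();
        if let Some(value) = s.value.take() {
            return Poll::Ready(value);
        }
        s.waker = Some(cx.waker().clone());
        Poll::Pending
    }
}

// Wakes the thread parked inside `block_on`.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

// Toy single-future executor standing in for a real async runtime.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(output) => return output,
            Poll::Pending => thread::park(),
        }
    }
}

// Illustrative CPU-bound job.
fn sum_of_squares(n: u64) -> u64 {
    (1..=n).map(|x| x * x).sum()
}

async fn handle_job() -> u64 {
    // The async side only awaits; the loop itself runs on the worker thread.
    offload(|| sum_of_squares(1_000)).await
}

fn main() {
    println!("{}", block_on(handle_job())); // prints 333833500
}
```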

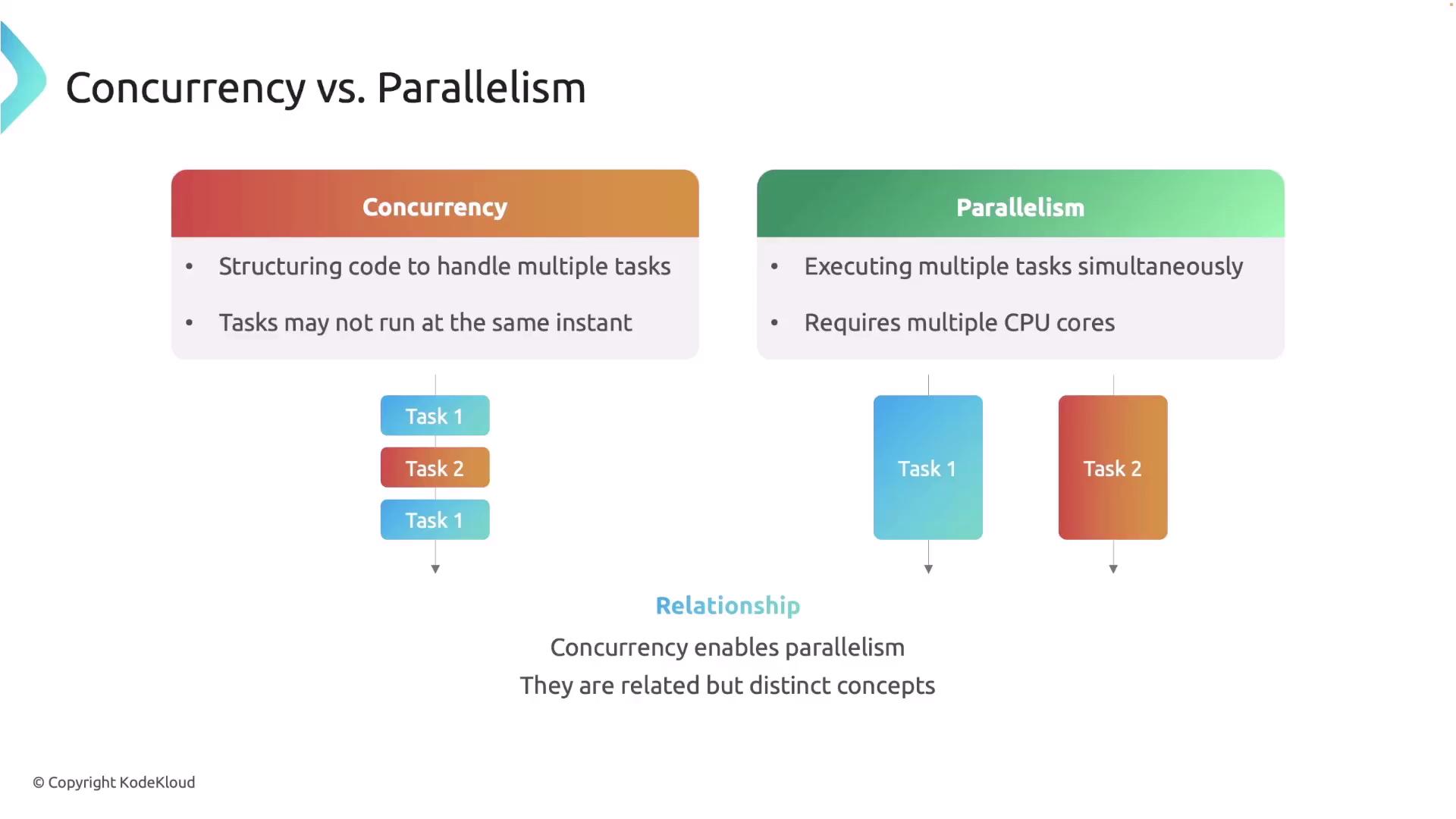

Concurrency vs. Parallelism

Understanding the distinction between concurrency and parallelism is key to effective program design:

- Concurrency involves structuring your code to manage multiple tasks—tasks that may interleave, but do not necessarily run simultaneously.

- Parallelism means running multiple tasks at exactly the same time, which requires multiple CPU cores.

Concurrency is a design strategy that can enable parallelism, but the two concepts are distinct.

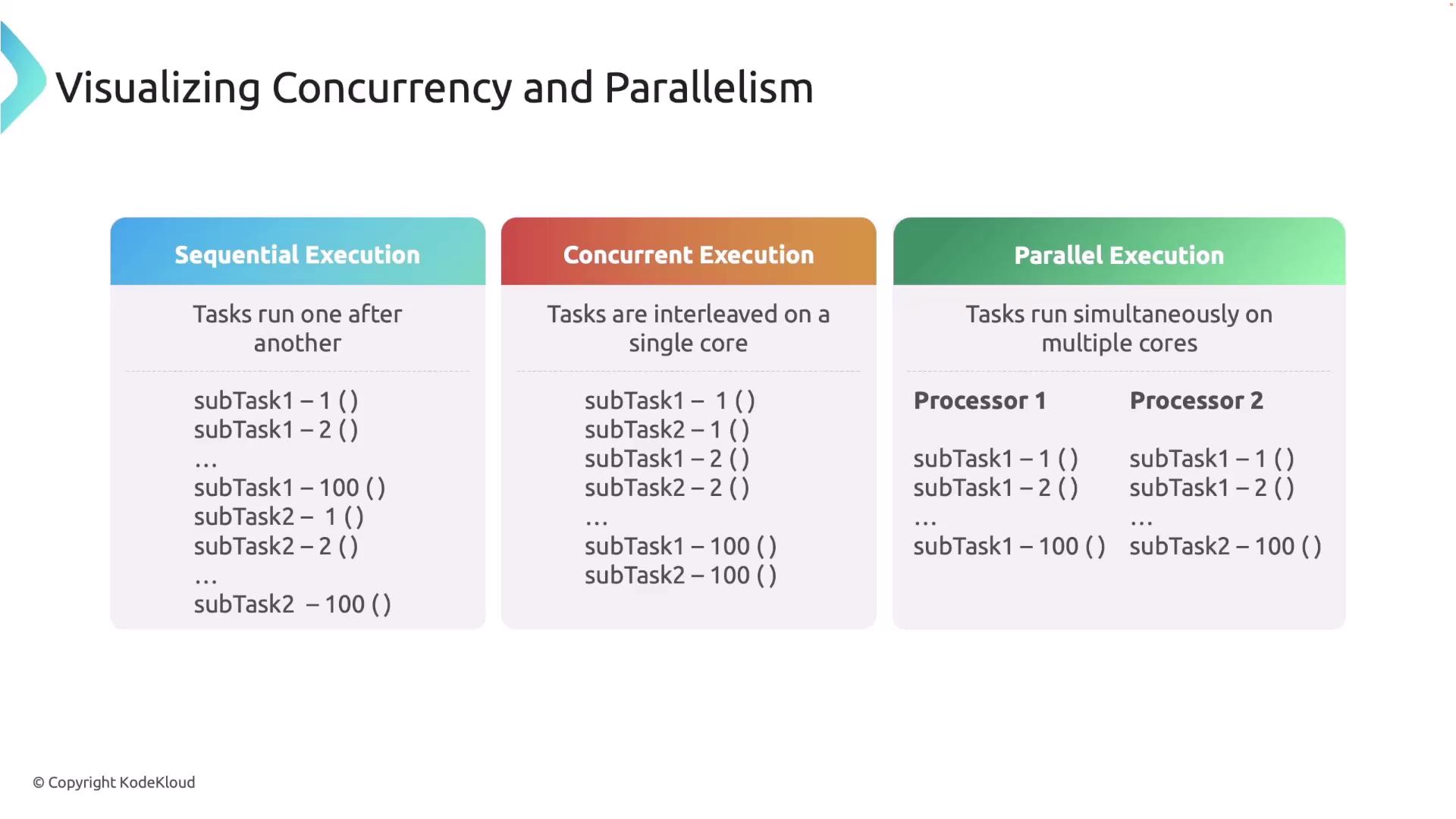

Visualizing Execution Modes

- Sequential Execution: Tasks run one after the other.

- Concurrent Execution: Tasks are interleaved on a single core.

- Parallel Execution: Tasks run simultaneously on multiple cores.

In Rust’s async programming model, constructs such as async functions and await allow operations to be handled without blocking the entire thread, ensuring continuous responsiveness. Parallel execution, on the other hand, depends on hardware resources like multiple CPU cores to truly run tasks at the same time.
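To contrast sequential and parallel execution, this sketch sums the same slice in two ways using only the standard library. The first version runs on one thread. The second splits the slice into chunks and sums each chunk on its own scoped thread (std::thread::scope). Whether the chunks truly run at the same time depends on how many cores are available; on a single core, the OS would merely interleave them. The function names are hypothetical.

```rust
use std::thread;

// Sequential: a single thread walks the whole slice.
fn sum_sequential(data: &[u64]) -> u64 {
    data.iter().sum()
}

// Parallel: split the slice into chunks and sum each chunk on its own scoped
// thread. `thread::scope` guarantees the threads finish before it returns,
// which is what allows them to borrow `data`.
fn sum_parallel(data: &[u64], n_threads: usize) -> u64 {
    let n = n_threads.max(1);
    // Chunk size rounded up; at least 1 because `chunks(0)` would panic.
    let chunk = ((data.len() + n - 1) / n).max(1);
    thread::scope(|s| {
        let handles: Vec<_> = data
            .chunks(chunk)
            .map(|part| s.spawn(move || part.iter().sum::<u64>()))
            .collect();
        handles.into_iter().map(|h| h.join().unwrap()).sum::<u64>()
    })
}

fn main() {
    let data: Vec<u64> = (1..=1_000_000).collect();
    let workers = thread::available_parallelism().map(|n| n.get()).unwrap_or(1);
    assert_eq!(sum_sequential(&data), sum_parallel(&data, workers));
    println!("sum = {}", sum_parallel(&data, workers)); // prints "sum = 500000500000"
}
```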

Combining Concurrency and Parallelism

Both threads and async tasks contribute to achieving high performance through a blend of concurrency and parallelism:

- Concurrency: Achieved by structuring and interleaving tasks within your code.

- Parallelism: Facilitated by the scheduler—either the OS for threads or the async runtime for tasks—leveraging multiple CPU cores simultaneously.

For example, Tokio's multi-threaded runtime schedules async tasks across a pool of worker threads, combining efficient I/O handling with parallel execution.

By integrating these two paradigms—concurrency from your code structure and parallelism from hardware capabilities—you can significantly optimize your application's performance.

Key Takeaways

Here are the key insights from this lesson:

- Concurrency: Enables efficient management of multiple tasks to improve responsiveness.

- Asynchronous Programming: Provides a lightweight concurrency model, ideal for I/O-bound operations with minimal overhead.

- Parallelism: Utilizes hardware capabilities to execute tasks simultaneously, enhancing performance for CPU-intensive operations.

- Combined Approach: Leveraging the right tools for each part of your application—threads for CPU-bound tasks and async for I/O-bound tasks—allows you to build scalable, high-performance systems in Rust.

Note

Remember to choose the appropriate concurrency model for your specific use case. Use asynchronous programming for handling numerous I/O-bound tasks, and delegate CPU-intensive tasks to threads or parallel execution frameworks.
