Kubernetes for the Absolute Beginners - Hands-on Tutorial

Kubernetes Overview

Container Orchestration

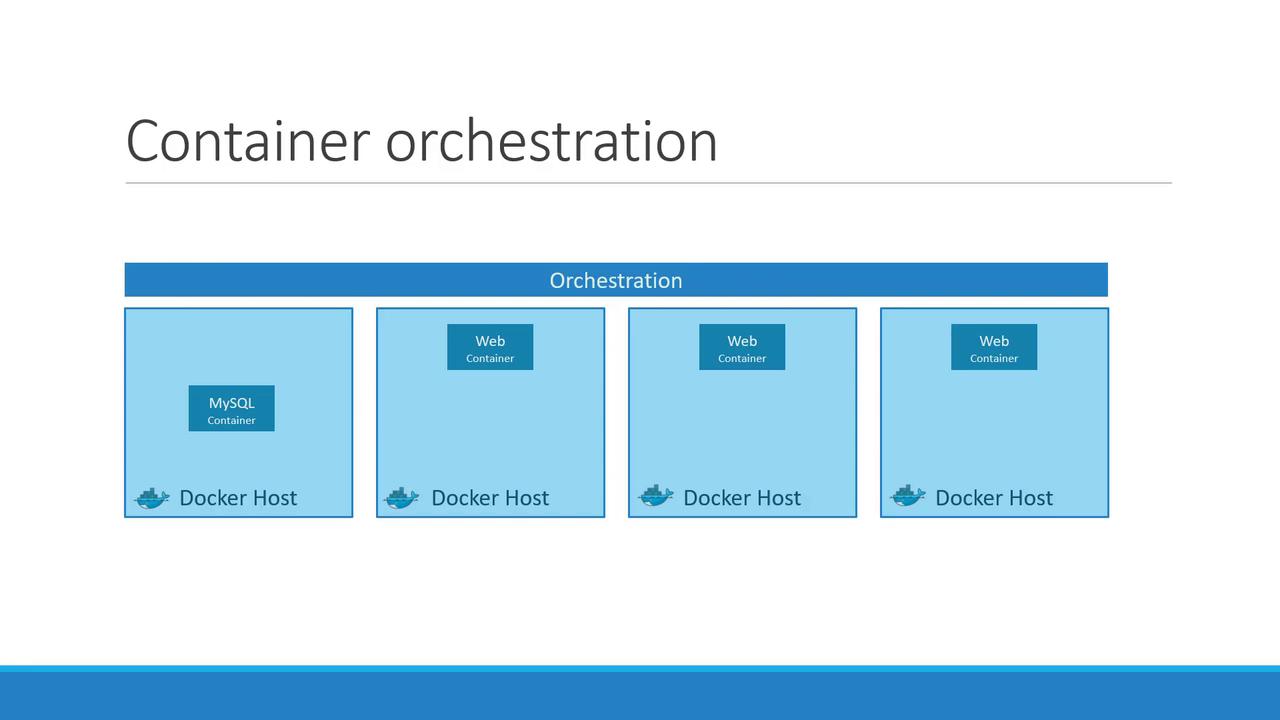

Welcome to this lesson on container orchestration. In this guide, you'll learn how containerized applications are deployed in production environments and how orchestration addresses challenges such as connectivity between containers, dependencies between services, and scaling as load changes.

Imagine your application is packaged in a Docker container and depends on other containers, such as those running a database or a messaging service, to work correctly. As user demand fluctuates, you also need a way to scale the number of running instances up and down quickly. Container orchestration platforms handle both concerns: they manage the connections between containers and automatically add or remove instances as load changes.
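To make "scale up and down as demand fluctuates" concrete, here is a minimal Python sketch of a proportional scaling rule. It follows the formula given in the Kubernetes Horizontal Pod Autoscaler documentation, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue); the function name, the min/max bounds, and the CPU figures are illustrative assumptions. The real autoscaler also ignores small deviations and smooths its decisions over time, which this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling rule, clamped to [min_replicas, max_replicas].

    current_metric is the observed average per replica (e.g. CPU %);
    target_metric is the value each replica should ideally sit at.
    The bounds are hypothetical defaults chosen for illustration.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replicas and target must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so we never drop below the floor or exceed the ceiling.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Demand spike: 3 replicas averaging 90% CPU against a 50% target.
    print(desired_replicas(3, 90.0, 50.0))  # ceil(5.4) -> 6
    # Quiet period: 6 replicas averaging 10% CPU against a 50% target.
    print(desired_replicas(6, 10.0, 50.0))  # ceil(1.2) -> 2
```

The clamp matters in practice: without a floor, an idle application could be scaled to zero, and without a ceiling a metric spike could request far more instances than the cluster can hold.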

Container orchestration automates the deployment, scaling, and management of containerized applications. Several technologies are available to fulfill these requirements. For example, Docker Swarm offers a straightforward setup process; however, it may lack some advanced features needed for highly complex applications.

Apache Mesos is another option: it is harder to configure at first, but it provides a robust set of advanced features for sophisticated architectures. Kubernetes, however, has become the industry favorite. Although its initial setup can be more involved, Kubernetes offers extensive options for customizing deployments and can manage complex architectures. It is supported by all major public cloud providers, such as Google Cloud Platform, Microsoft Azure, and AWS, and is consistently among the top-ranked projects on GitHub.

Key Advantages

Some notable benefits of container orchestration include:

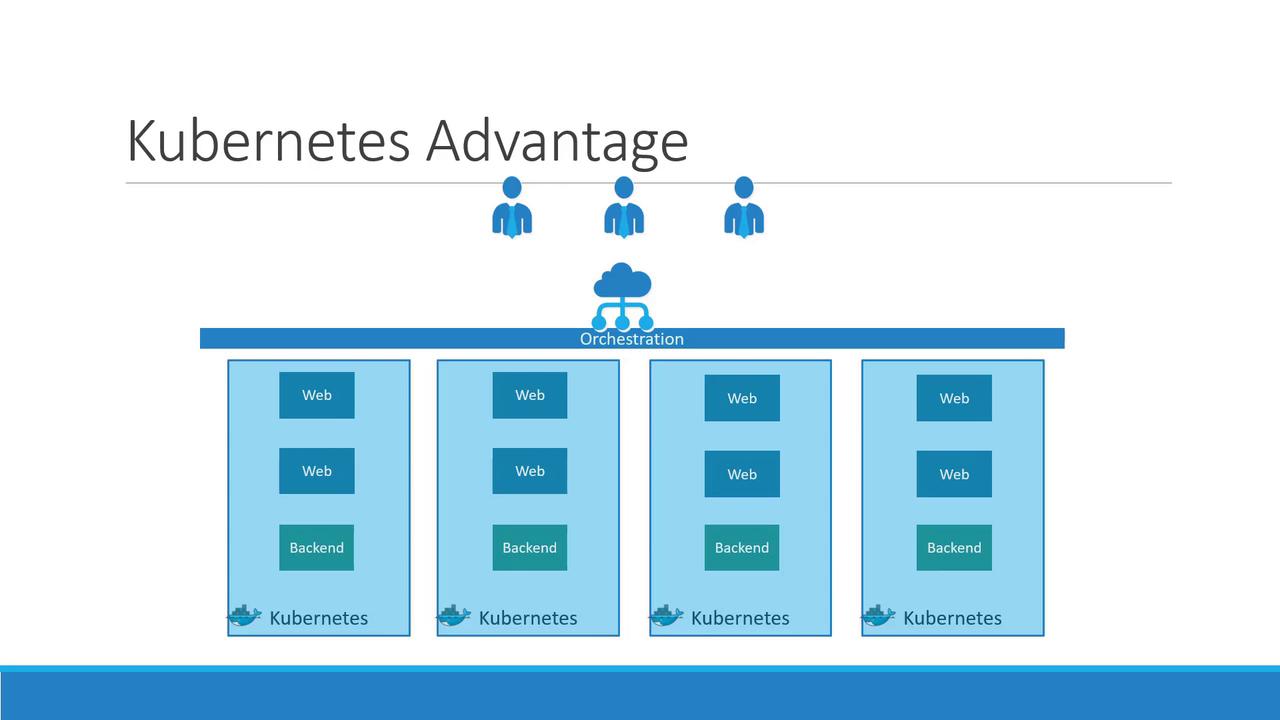

- High Availability: Multiple instances of your application run on different nodes, so a hardware failure on one node does not take the whole service down.

- Load Balancing: Distributes user traffic evenly across containers, optimizing resource utilization.

- Seamless Scaling: Quickly deploy additional application instances during high-demand periods, and scale back down when demand decreases.

- Declarative Configuration: Change resources or scale the number of nodes by editing declarative configuration files, without taking the application down.
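Round-robin is one simple way to spread user traffic evenly across containers: each new request goes to the next instance in a fixed rotation. The sketch below is an illustrative choice of strategy, not a description of how any particular Kubernetes component balances traffic; the class name and backend names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation so each gets an equal share."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("need at least one backend")
        # cycle() repeats the backend list endlessly, in order.
        self._rotation = cycle(backends)

    def pick(self) -> str:
        """Return the backend that should receive the next request."""
        return next(self._rotation)


if __name__ == "__main__":
    lb = RoundRobinBalancer(["web-1", "web-2", "web-3"])
    print([lb.pick() for _ in range(6)])
    # ['web-1', 'web-2', 'web-3', 'web-1', 'web-2', 'web-3']
```

Round-robin assumes every request costs roughly the same; when requests vary widely in cost, strategies such as "fewest active connections" tend to balance load better.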
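Declarative configuration means you state the desired outcome (for example, "run three copies of this container") and the orchestrator keeps working to make reality match it. Kubernetes controllers follow this desired-versus-actual idea; the toy loop below only sketches the principle in plain Python. The `reconcile` function, the in-memory list standing in for running containers, and the `web-N` naming scheme are hypothetical and do not reflect the Kubernetes API.

```python
from itertools import count

# Hypothetical ID source for newly "started" containers.
_next_id = count(1)


def reconcile(desired: int, running: list[str]) -> list[str]:
    """Return a new list of running instances whose length equals `desired`.

    Starts instances when there are too few and stops the newest ones
    when there are too many. The input list is left unmodified.
    """
    current = list(running)
    while len(current) < desired:
        current.append(f"web-{next(_next_id)}")  # "start" a container
    while len(current) > desired:
        current.pop()                            # "stop" the newest one
    return current


if __name__ == "__main__":
    state: list[str] = []
    state = reconcile(3, state)   # initial rollout
    print(state)                  # ['web-1', 'web-2', 'web-3']
    state.remove("web-2")         # simulate a container or node failure
    state = reconcile(3, state)   # the next pass replaces the lost instance
    print(state)                  # ['web-1', 'web-3', 'web-4']
    state = reconcile(1, state)   # scale down by editing the desired count
    print(state)                  # ['web-1']
```

Because the loop only compares "what should exist" with "what does exist", the same code handles initial deployment, failure recovery, and scaling; you never issue a separate "restart" or "add one" command.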

Kubernetes is a powerful container orchestration platform built to manage the deployment and scaling of hundreds or even thousands of containers across a clustered environment. It supports dynamic resource management and facilitates the deployment of complex, multi-service architectures without interrupting your applications.
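Running containers across a cluster is also what makes high availability possible: replicas placed on different nodes survive the loss of any single node. The sketch below spreads replicas by always choosing the least-loaded node, then checks which replicas remain if one node fails. This is a simplified spreading heuristic chosen for illustration, not the actual Kubernetes scheduler, which weighs many more factors; the function, node, and replica names are hypothetical.

```python
def spread_replicas(replicas: int, nodes: list[str]) -> dict[str, str]:
    """Place each replica on the node that currently hosts the fewest.

    Ties go to the node listed first, so the result is deterministic.
    Replica names ("app-0", "app-1", ...) are hypothetical.
    """
    if not nodes:
        raise ValueError("need at least one node")
    load = {node: 0 for node in nodes}
    placement: dict[str, str] = {}
    for i in range(replicas):
        node = min(nodes, key=lambda n: load[n])  # least-loaded node wins
        placement[f"app-{i}"] = node
        load[node] += 1
    return placement


def survivors(placement: dict[str, str], failed_node: str) -> list[str]:
    """Replicas still running after `failed_node` goes down."""
    return [name for name, node in placement.items() if node != failed_node]


if __name__ == "__main__":
    plan = spread_replicas(5, ["node-a", "node-b", "node-c"])
    print(plan)
    # {'app-0': 'node-a', 'app-1': 'node-b', 'app-2': 'node-c',
    #  'app-3': 'node-a', 'app-4': 'node-b'}
    print(survivors(plan, "node-b"))  # ['app-0', 'app-2', 'app-3']
```

With five replicas on three nodes, losing any one node still leaves at least three replicas serving traffic, which is the property the "High Availability" point above relies on.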

In upcoming articles, we will explore the architecture of Kubernetes in more detail, diving deeper into its concepts and capabilities.

Thank you for reading, and we look forward to guiding you through the next lesson.
