AI-900: Microsoft Certified Azure AI Fundamentals

Azure NLP Services

Conversational Language Understanding

In this article, we explore the fundamental components that empower conversational AI systems—such as Azure's Language Understanding (LUIS) and the Azure Bot Service—to interpret and respond to natural language inputs. This guide breaks down the process into three essential elements: Utterance, Intent, and Entity.

Utterance

An utterance is the spoken or typed input provided by a user. For instance, when a user says "Set a timer for 10 minutes," this complete input is processed as an utterance. Conversational AI systems analyze such inputs to understand the user's requirements.

Intent

The intent represents the underlying purpose or goal behind the user’s utterance. In the previous example, the intent is "set timer." The system identifies this intent to decide on the appropriate course of action.

Entity

An entity provides specific details extracted from an utterance. In our timer example, the phrase "10 minutes" is an entity that specifies the duration. By extracting entities, the system can execute the user's request with greater precision.

How Conversational AI Works

The methodology behind processing user input in conversational AI follows these steps:

- Recognize the Utterance: Capture the complete user input.

- Classify the Intent: Determine the user's goal (for instance, setting a timer).

- Extract Entities: Identify and extract particular pieces of information (e.g., "10 minutes").

- Generate a Response: Utilize the recognized intent and entities to perform an action or provide a suitable response, such as initiating the timer.
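
The four steps above can be sketched as a toy pipeline. This is only an illustration: the regular expressions stand in for a trained model, and the names `Analysis`, `INTENT_PATTERNS`, `DURATION`, `analyze`, and `respond` are hypothetical, not part of any Azure service.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Analysis:
    utterance: str                      # step 1: the full user input
    intent: str                         # step 2: the classified goal
    entities: dict = field(default_factory=dict)  # step 3: extracted details


# Hypothetical keyword rules standing in for a trained intent classifier.
INTENT_PATTERNS = {
    "SetTimer": re.compile(r"\bset (a|the) timer\b", re.IGNORECASE),
    "CancelTimer": re.compile(r"\b(cancel|stop) (the|my) timer\b", re.IGNORECASE),
}

# Hypothetical pattern standing in for a learned entity extractor.
DURATION = re.compile(r"\b\d+\s+(?:second|minute|hour)s?\b", re.IGNORECASE)


def analyze(utterance: str) -> Analysis:
    # Classify the intent: the first matching rule wins, otherwise "None".
    intent = "None"
    for name, pattern in INTENT_PATTERNS.items():
        if pattern.search(utterance):
            intent = name
            break
    # Extract entities: here, only a duration phrase.
    entities = {}
    match = DURATION.search(utterance)
    if match:
        entities["Duration"] = match.group(0)
    return Analysis(utterance, intent, entities)


def respond(analysis: Analysis) -> str:
    # Step 4: combine intent and entities into an action or reply.
    if analysis.intent == "SetTimer" and "Duration" in analysis.entities:
        return f"Starting a timer for {analysis.entities['Duration']}."
    if analysis.intent == "SetTimer":
        return "How long should the timer run?"
    if analysis.intent == "CancelTimer":
        return "Timer cancelled."
    return "Sorry, I did not understand that."


result = analyze("Set a timer for 10 minutes")
print(result.intent, result.entities)
print(respond(result))
```

A real conversational model learns these mappings from labeled examples instead of hand-written rules, which is what the Language Studio walkthrough later in this article covers.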

Note

Accurately identifying the intent and entities is crucial for the system to deliver precise actions.

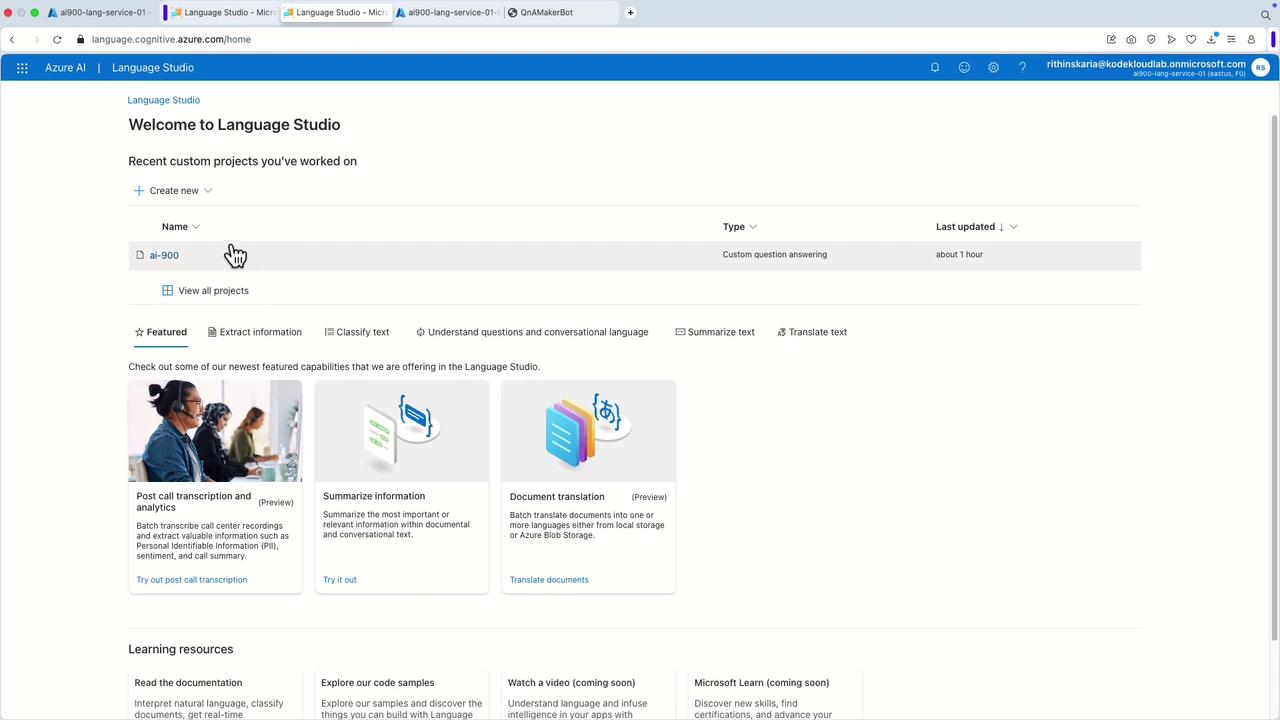

Working with LUIS in Language Studio

Follow these steps to create, train, test, and deploy a conversational language understanding project using Azure Language Studio:

1. Creating a New Project

- In Language Studio, click on "Create New" and choose "Conversational Language Understanding."

2. Project Setup

- Name your project (e.g., "LUIS AI 900").

- Click "Next" and then "Create." You will then be directed to the intents section.

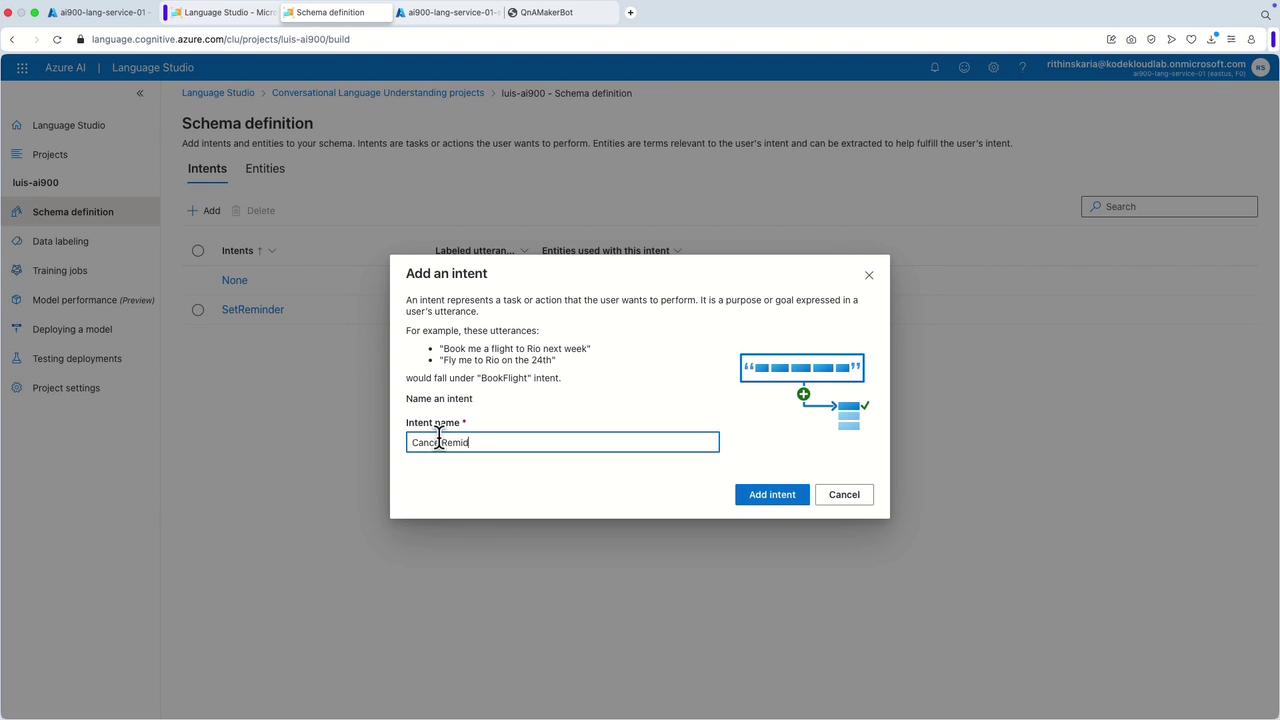

3. Defining Intents

- In the intents section, add a new intent by navigating to the Schema Definition.

- Create an intent called "Set Reminder."

- You can add additional intents such as "Cancel Reminder," "Set Alarm," "Modify Alarm," "Cancel Alarm," or "Set Recurring Alarm" as required.

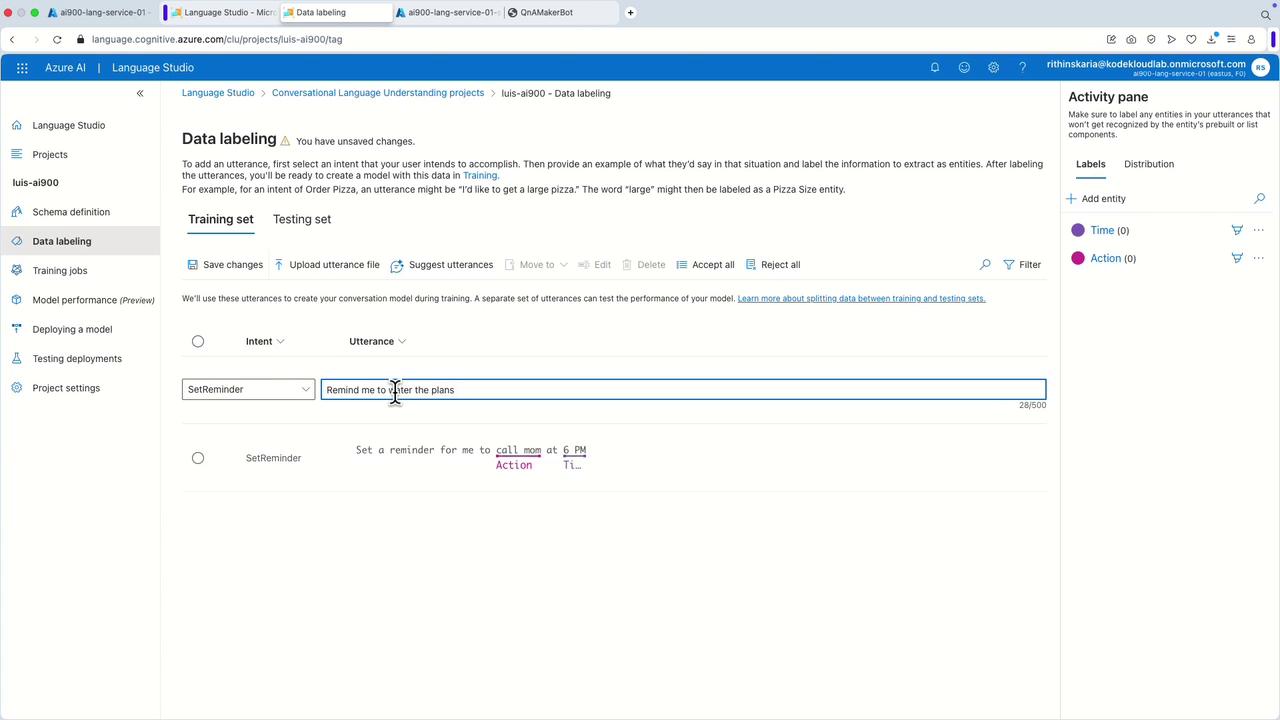

4. Labeling the Data

Once the intents are defined, start labeling your training data. For example, for the "Set Reminder" intent, you might include an utterance like:

"Set a reminder for me to call mom at 6 p.m."

Define the extracted entities:

- Action: "call mom"

- Time: "6 p.m."

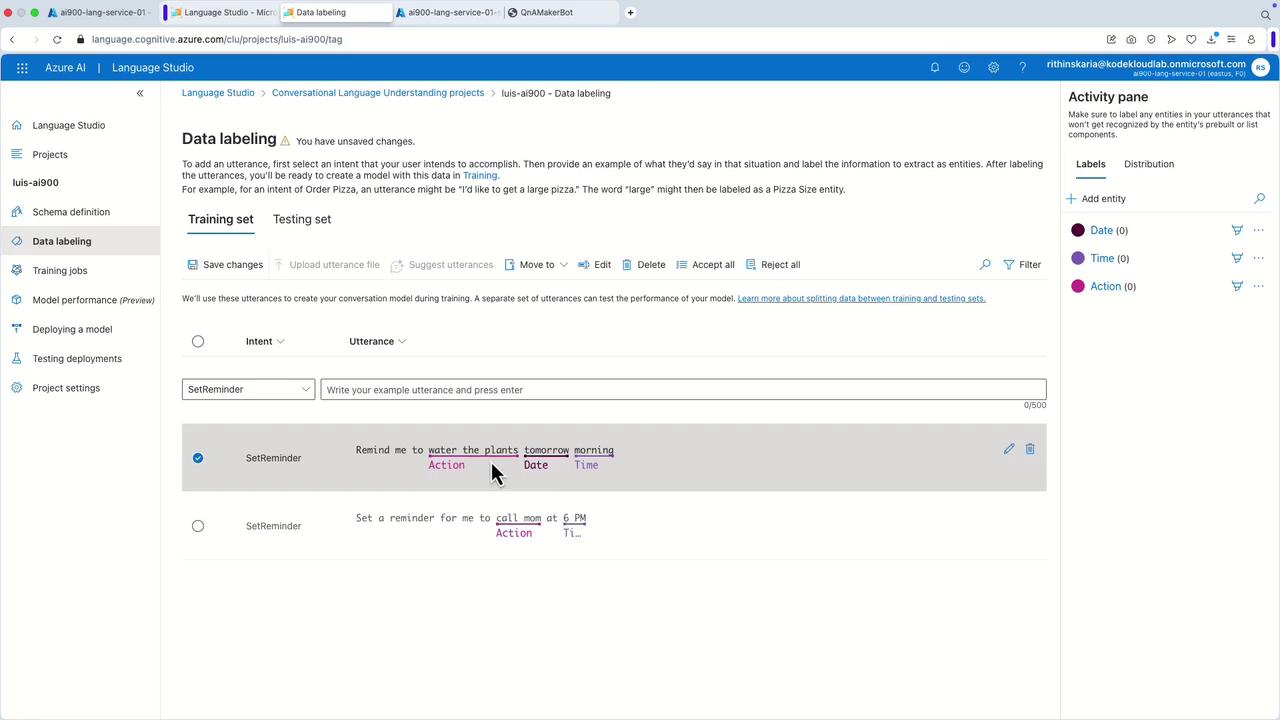

Enhance your model’s robustness with additional examples. For instance:

"Remind me to order the plants tomorrow morning."

For this utterance:

- Action: "order the plants"

- Time: "morning"

- Date: (optional, e.g., "tomorrow")
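
Labeling an entity amounts to marking a span of the utterance. The sketch below records each label as a character offset and length. The helper `label_utterance` is hypothetical, and the field names (`category`, `offset`, `length`) are an assumption loosely modeled on span-style label files; check the Language Studio export format before relying on them.

```python
def label_utterance(text, intent, entity_phrases):
    """Build one labeled example.

    entity_phrases maps an entity category to the exact phrase in `text`.
    Each phrase is stored as a character span (offset + length).
    """
    entities = []
    for category, phrase in entity_phrases.items():
        offset = text.find(phrase)  # first occurrence only
        if offset == -1:
            raise ValueError(f"{phrase!r} not found in utterance")
        entities.append(
            {"category": category, "offset": offset, "length": len(phrase)}
        )
    return {"text": text, "intent": intent, "entities": entities}


example = label_utterance(
    "Set a reminder for me to call mom at 6 p.m.",
    "Set Reminder",
    {"Action": "call mom", "Time": "6 p.m."},
)

# Each span can be sliced back out of the utterance to verify the label.
for entity in example["entities"]:
    start = entity["offset"]
    end = start + entity["length"]
    print(entity["category"], "->", example["text"][start:end])
```

Storing spans instead of bare strings keeps the label tied to a specific position, which matters when the same word appears more than once in an utterance.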

5. Additional Example – Cancel Reminder

Define an utterance such as:

"Cancel my reminder to call Dad tonight."

Here, the intent is "Cancel Reminder" and the entity "Action" corresponds to "call Dad," while "tonight" serves as the time label.

6. Testing Intents

Create sample utterances for other intents such as "Set Alarm." For example:

"Set an alarm for 9 a.m."

In this case, label "set an alarm" as the action and "9 a.m." as the time.

Click "Save Changes" after labeling to proceed with training.

7. Training and Deployment

- Navigate to the training jobs section and start a new training job (e.g., name it "LUIS01").

- Choose the free tier with the default settings.

- Once training is complete, deploy the model by adding a new deployment (naming it "LUIS01" and selecting the trained model).

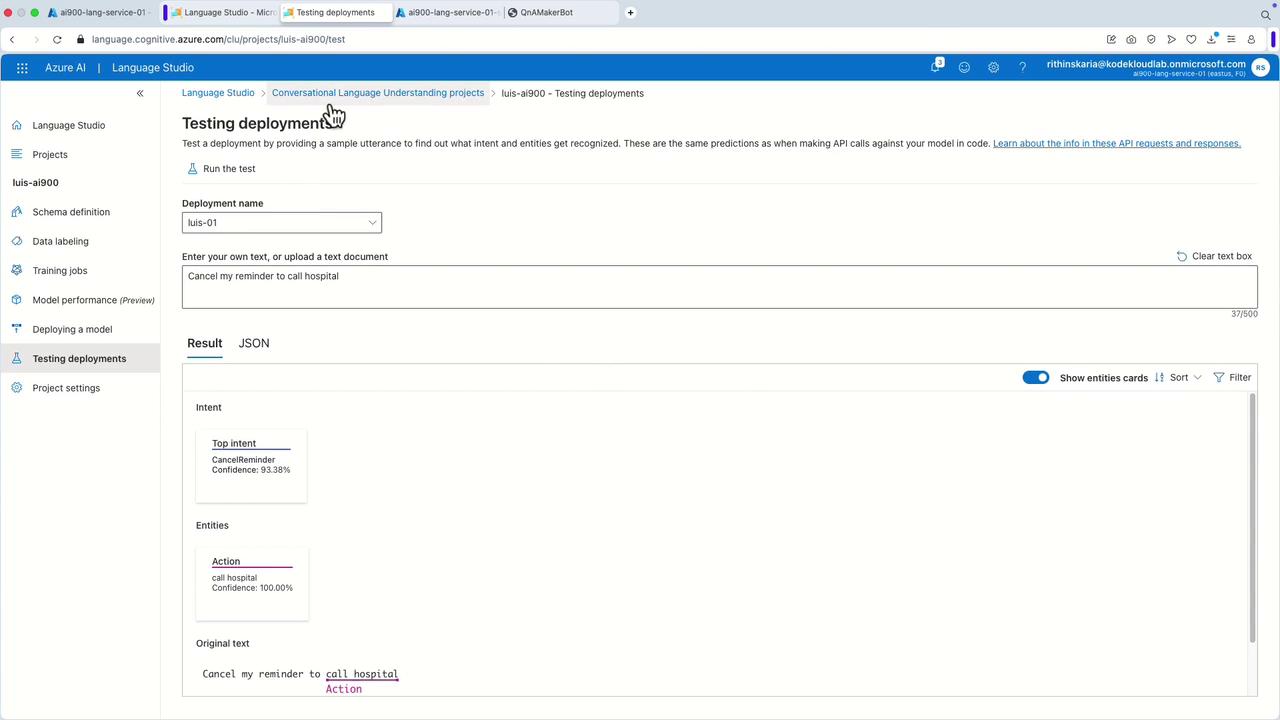

8. Testing the Deployed Model

Test your deployed model using sample utterances:

Example 1: "Set an alarm for 11 a.m."

The model should recognize the intent "Set Alarm" with a high confidence score and extract the time "11 a.m."

Example 2: "Cancel all meetings for tomorrow."

The system should detect the "Cancel Reminder" intent, though it might not capture any entities if they are not precisely defined.

Example 3: "Cancel my reminder to call the hospital."

Here, the "Action" entity should be extracted as "call the hospital," matching the phrase in the utterance.
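
When a deployed model is queried from code instead of the test pane, the same three pieces of information come back as structured data. The sketch below reads the top intent, its confidence score, and the entities from a response dictionary. The response shape (`result`, `prediction`, `topIntent`, `intents`, `entities`, `confidenceScore`) is an assumption modeled on the conversational language understanding runtime response and should be verified against the official reference. The `sample` value is hand-written for illustration and is not real service output.

```python
def summarize_prediction(response, min_confidence=0.5):
    """Pull the top intent, its score, and the entities out of a response dict."""
    prediction = response["result"]["prediction"]
    top_intent = prediction["topIntent"]
    # Map every returned intent to its confidence score.
    scores = {
        item["category"]: item["confidenceScore"]
        for item in prediction["intents"]
    }
    confidence = scores.get(top_intent, 0.0)
    # Entities may be absent when nothing was extracted.
    entities = [
        (entity["category"], entity["text"])
        for entity in prediction.get("entities", [])
    ]
    return {
        "intent": top_intent,
        "confidence": confidence,
        "confident": confidence >= min_confidence,  # hypothetical threshold
        "entities": entities,
    }


# Hand-written sample in the assumed response shape (illustrative values only).
sample = {
    "result": {
        "query": "Set an alarm for 11 a.m.",
        "prediction": {
            "topIntent": "Set Alarm",
            "intents": [
                {"category": "Set Alarm", "confidenceScore": 0.9},
                {"category": "Cancel Alarm", "confidenceScore": 0.1},
            ],
            "entities": [
                {"category": "Time", "text": "11 a.m.", "offset": 17, "length": 7},
            ],
        },
    }
}

print(summarize_prediction(sample))
```

The `min_confidence` threshold is a design choice for the calling application: below it, a bot might ask the user to rephrase instead of acting on a weak guess.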

Tip

Test your model regularly with varied utterances to catch misclassified intents and missed entities early.
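
Repeated testing is easier with a small harness that runs a fixed set of utterances and reports mismatches. The sketch below is a minimal example: `evaluate` is a hypothetical helper, and `stub_predict` is a stand-in for a call to the deployed model, written with simple keyword rules so the example runs offline.

```python
def evaluate(predict, cases):
    """Run each (utterance, expected_intent) pair through `predict`.

    Returns the fraction predicted correctly and a list of failures as
    (utterance, expected, actual) tuples.
    """
    failures = []
    for utterance, expected in cases:
        actual = predict(utterance)
        if actual != expected:
            failures.append((utterance, expected, actual))
    accuracy = (len(cases) - len(failures)) / len(cases) if cases else 0.0
    return accuracy, failures


def stub_predict(utterance):
    # Hypothetical stand-in for querying the deployed model.
    text = utterance.lower()
    if text.startswith("cancel"):
        return "Cancel Reminder"
    if "alarm" in text:
        return "Set Alarm"
    return "Set Reminder"


cases = [
    ("Set an alarm for 11 a.m.", "Set Alarm"),
    ("Cancel my reminder to call the hospital.", "Cancel Reminder"),
    ("Remind me to order the plants tomorrow morning.", "Set Reminder"),
    ("Cancel my alarm for 9 a.m.", "Cancel Alarm"),
]

accuracy, failures = evaluate(stub_predict, cases)
print(f"accuracy: {accuracy:.2f}")
for utterance, expected, actual in failures:
    print(f"MISMATCH: {utterance!r} expected {expected!r}, got {actual!r}")
```

The last case fails on purpose with this stub: it shows the kind of confusion between similar intents ("Cancel Reminder" versus "Cancel Alarm") that varied test utterances are meant to surface.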

These detailed steps demonstrate the process of creating, training, testing, and deploying a conversational AI understanding project using Language Studio. This workflow allows the system to accurately identify user intents and extract relevant entities from utterances, ensuring precise responses to natural language commands.

Up next, we will explore how speech integration enhances this conversational AI workflow.
