AI-900: Microsoft Certified Azure AI Fundamentals

Azure NLP Services

Speech Recognition and Synthesis

In this lesson, we explore two core capabilities of the Azure Speech service: speech recognition (speech-to-text) and speech synthesis (text-to-speech). Together, they let developers build interactive, accessible applications that move between spoken and written language.

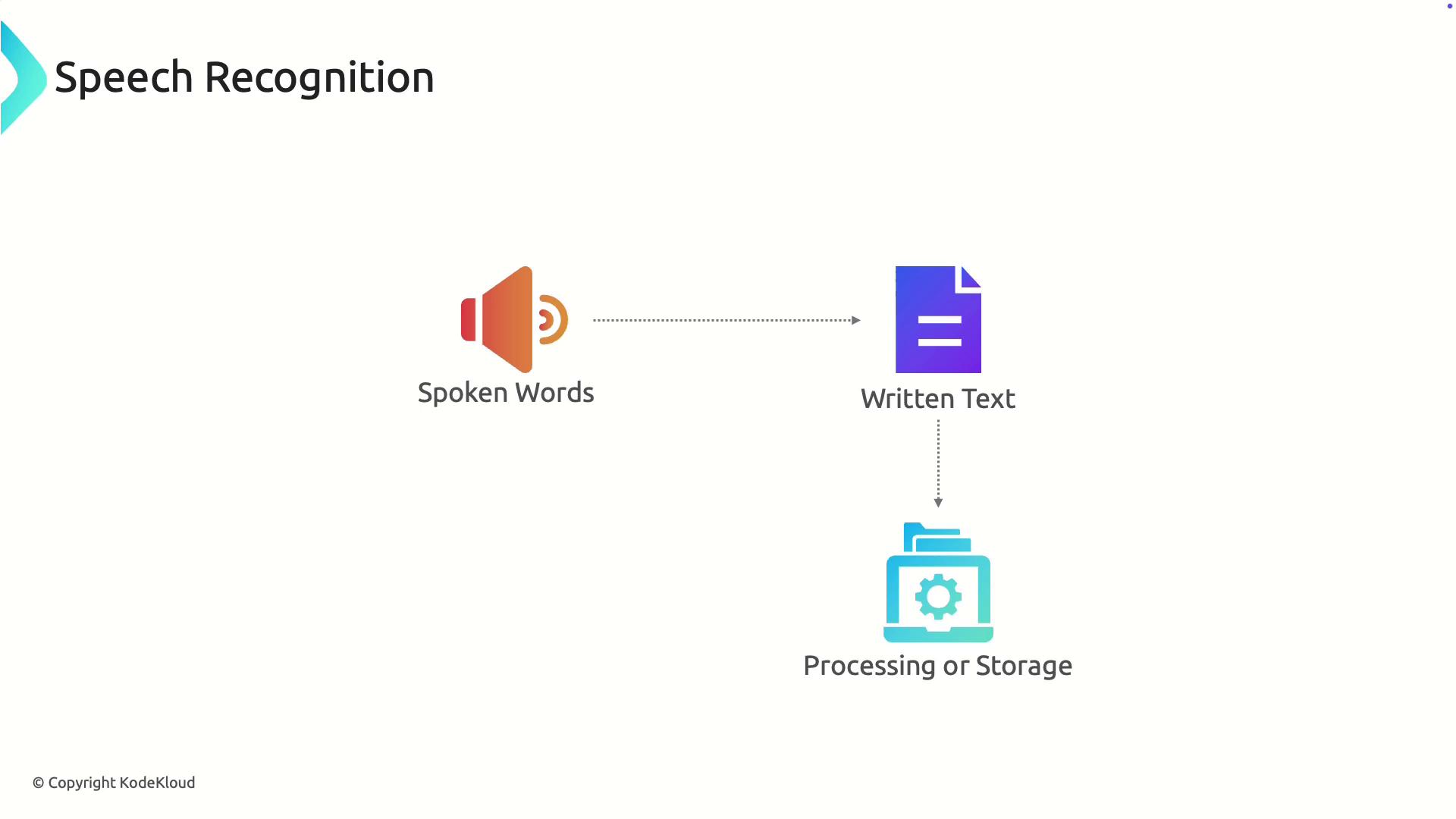

Speech Recognition

Speech recognition, or speech-to-text, converts spoken language into written text. The process begins with capturing audio input—such as voice commands, conversations, or dictation—and processing it into text for storage, analysis, or further action.
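To make the audio-in, text-out flow concrete, here is a minimal Python sketch that prepares a speech-to-text request for a short WAV clip and reads the recognized text from a response. It assumes the Speech service REST endpoint for short audio and its simple JSON response format (`RecognitionStatus`, `DisplayText`). The function names, the region, and the key are placeholders introduced for illustration, and the request is only built, not sent.

```python
import json
import urllib.parse
import urllib.request


def build_recognition_request(audio_bytes, key, region, language="en-US"):
    """Build (but do not send) a speech-to-text request for a short WAV clip."""
    query = urllib.parse.urlencode({"language": language, "format": "simple"})
    # Assumed endpoint shape for the short-audio REST API; verify for your resource.
    url = (
        f"https://{region}.stt.speech.microsoft.com"
        f"/speech/recognition/conversation/cognitiveservices/v1?{query}"
    )
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "audio/wav; codecs=audio/pcm; samplerate=16000",
        "Accept": "application/json",
    }
    return urllib.request.Request(url, data=audio_bytes, headers=headers, method="POST")


def extract_text(response_body):
    """Return the recognized text, or None if recognition did not succeed."""
    result = json.loads(response_body)
    if result.get("RecognitionStatus") != "Success":
        return None
    return result.get("DisplayText")


# Illustrative response body, not real service output.
sample = '{"RecognitionStatus": "Success", "DisplayText": "Turn on the lights."}'
print(extract_text(sample))
```

With a real key and region, passing the request to `urllib.request.urlopen` and feeding the response body to `extract_text` would complete the round trip. In practice, the Speech SDK is usually the more convenient route for microphone or streaming input.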

Key Benefits

Speech recognition enhances user accessibility and productivity. It allows users to dictate documents hands-free, making it especially valuable for individuals with mobility impairments. Additionally, customer service applications leverage this technology to transcribe conversations for sentiment analysis and issue resolution.

Speech Synthesis

Speech synthesis, also known as text-to-speech, converts written text into audible speech. This capability is essential for delivering spoken feedback, thereby enhancing accessibility for users with visual impairments and supporting interactive learning environments.
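The reverse direction can be sketched the same way. The example below wraps plain text in a minimal SSML document and prepares a text-to-speech request. It assumes the Speech service REST endpoint for synthesis, a neural voice name (`en-US-JennyNeural`), and a WAV output format. The function names, region, key, and `User-Agent` value are placeholders chosen for illustration, and the request is only built, not sent.

```python
import urllib.request
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text, voice="en-US-JennyNeural", language="en-US"):
    """Wrap plain text in a minimal SSML document for the chosen voice."""
    return (
        f'<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(language)}>"
        f"<voice name={quoteattr(voice)}>{escape(text)}</voice>"
        f"</speak>"
    )


def build_synthesis_request(text, key, region, voice="en-US-JennyNeural"):
    """Build (but do not send) a text-to-speech request that returns WAV audio."""
    # Assumed endpoint shape for the text-to-speech REST API; verify for your resource.
    url = f"https://{region}.tts.speech.microsoft.com/cognitiveservices/v1"
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/ssml+xml",
        "X-Microsoft-OutputFormat": "riff-24khz-16bit-mono-pcm",
        "User-Agent": "speech-lesson-sketch",  # hypothetical client name
    }
    body = build_ssml(text, voice).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


print(build_ssml("Turn left & continue for 200 meters."))
```

Escaping the text before embedding it matters because characters such as `&` or `<` would otherwise make the SSML invalid. Sending the request with `urllib.request.urlopen` and writing the response bytes to a `.wav` file would produce the audio.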

Real-World Applications

Applications such as navigation apps can read out directions, while educational tools may read aloud instructions to enhance comprehension and engagement. These features help create more inclusive experiences for all users.

Together, speech recognition and synthesis let users speak to an application and hear it respond, which makes the interaction feel closer to a natural conversation.

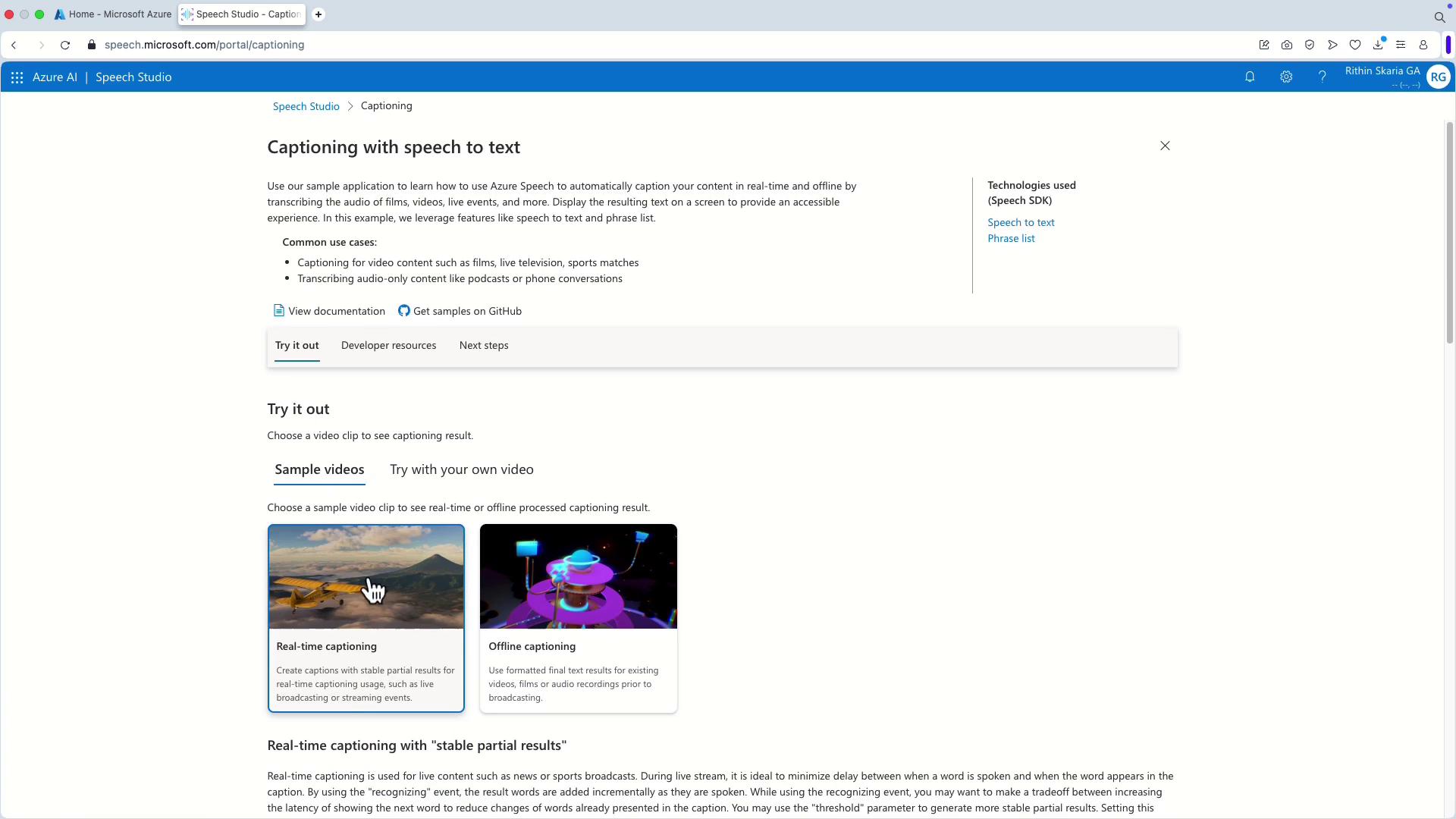

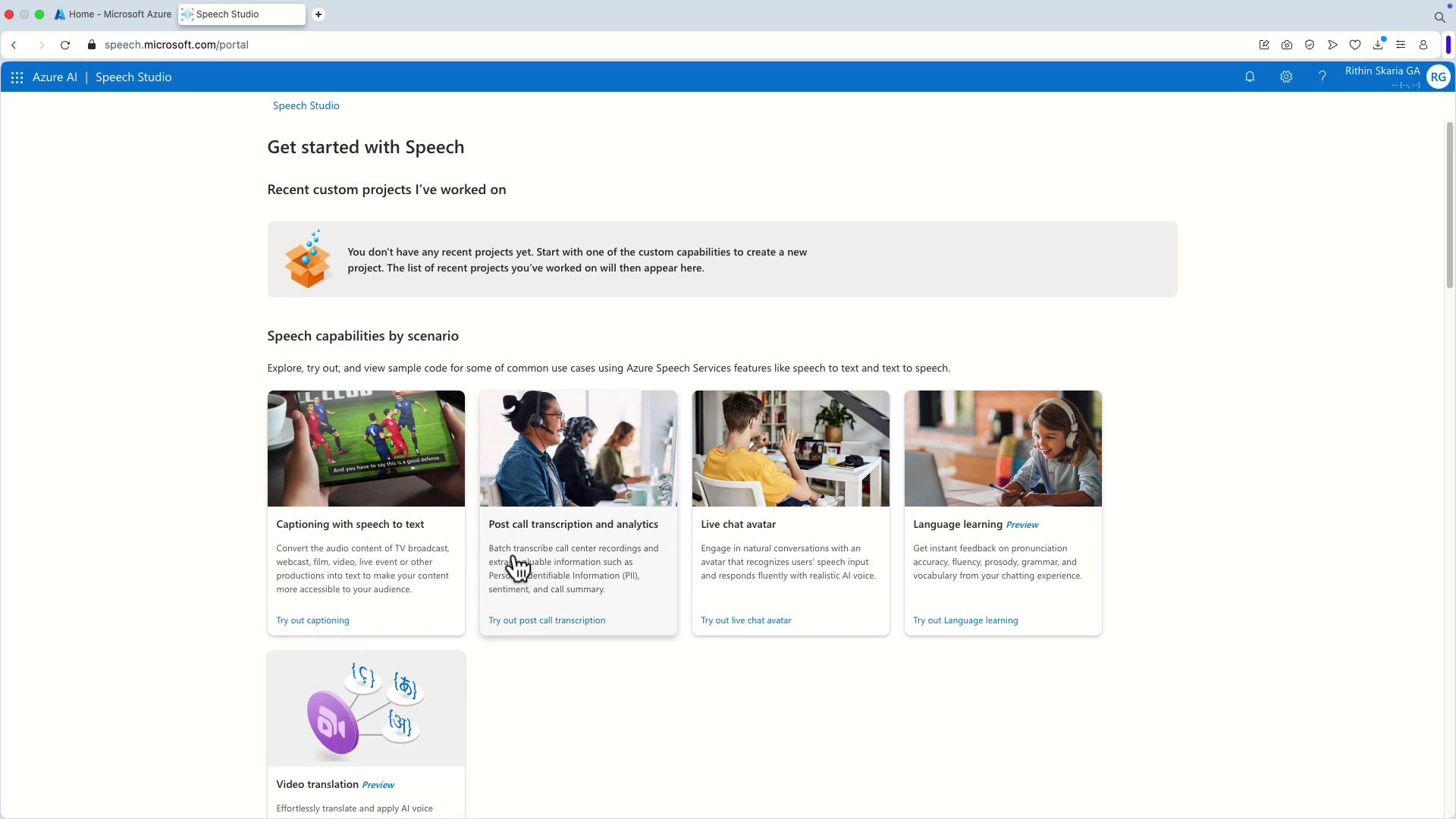

Practical Applications and Speech Studio Overview

Azure Speech Studio provides an interactive platform where you can experiment with these speech capabilities. It is designed to help you create and manage speech resources effectively, making it easier to integrate features like captioning, transcription, and interactive speech services into your applications.

Use Cases in Azure Speech Studio

| Feature | Description | Example Use Case |
|---|---|---|
| Real-Time Captioning | Converts spoken words into text on the fly | Live and offline video captioning |
| Post-Call Transcription | Analyzes transcribed conversations for insights | Customer service sentiment analysis |
| Interactive Speech Features | Provides speech output for enhanced user interaction | Live chat avatars, language learning tools |

Within Speech Studio, you can manage voice resources and experiment with real-time captioning, a feature that benefits both live events and post-event processing.
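Speech Studio handles captioning for you, but it can help to see what turning recognized speech into captions involves. The sketch below converts recognized phrases into SRT caption entries. It assumes each phrase carries `Offset` and `Duration` values in 100-nanosecond ticks alongside its `DisplayText`, as Speech service recognition results do. The helper names and the sample phrases are illustrative only.

```python
def ticks_to_srt_time(ticks):
    """Convert 100-nanosecond ticks to an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = ticks // 10_000
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    seconds, millis = divmod(rest, 1_000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d},{millis:03d}"


def to_srt(phrases):
    """Render recognized phrases as numbered SRT caption entries."""
    entries = []
    for index, phrase in enumerate(phrases, start=1):
        start = ticks_to_srt_time(phrase["Offset"])
        end = ticks_to_srt_time(phrase["Offset"] + phrase["Duration"])
        entries.append(f"{index}\n{start} --> {end}\n{phrase['DisplayText']}")
    return "\n\n".join(entries) + "\n"


# Illustrative phrases, not real service output.
phrases = [
    {"Offset": 5_000_000, "Duration": 20_000_000, "DisplayText": "Welcome everyone."},
    {"Offset": 30_000_000, "Duration": 15_000_000, "DisplayText": "Let's get started."},
]
print(to_srt(phrases))
```

For live events, the same conversion would run on each phrase as it arrives. For post-event processing, it can run once over the full transcript.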

Furthermore, the platform offers advanced post-call transcription analytics, which are particularly useful in customer service scenarios for analyzing conversations and uncovering key insights.

Speech Studio also covers other features, including live chat avatars and language learning tools, which you can explore from the same interface.

With this overview, we conclude our module on speech recognition and synthesis. Stay tuned for the next topic as we continue to explore powerful Azure services and their real-world applications.
