AWS Certified AI Practitioner

Fundamentals of Generative AI

Foundation Model Lifecycle

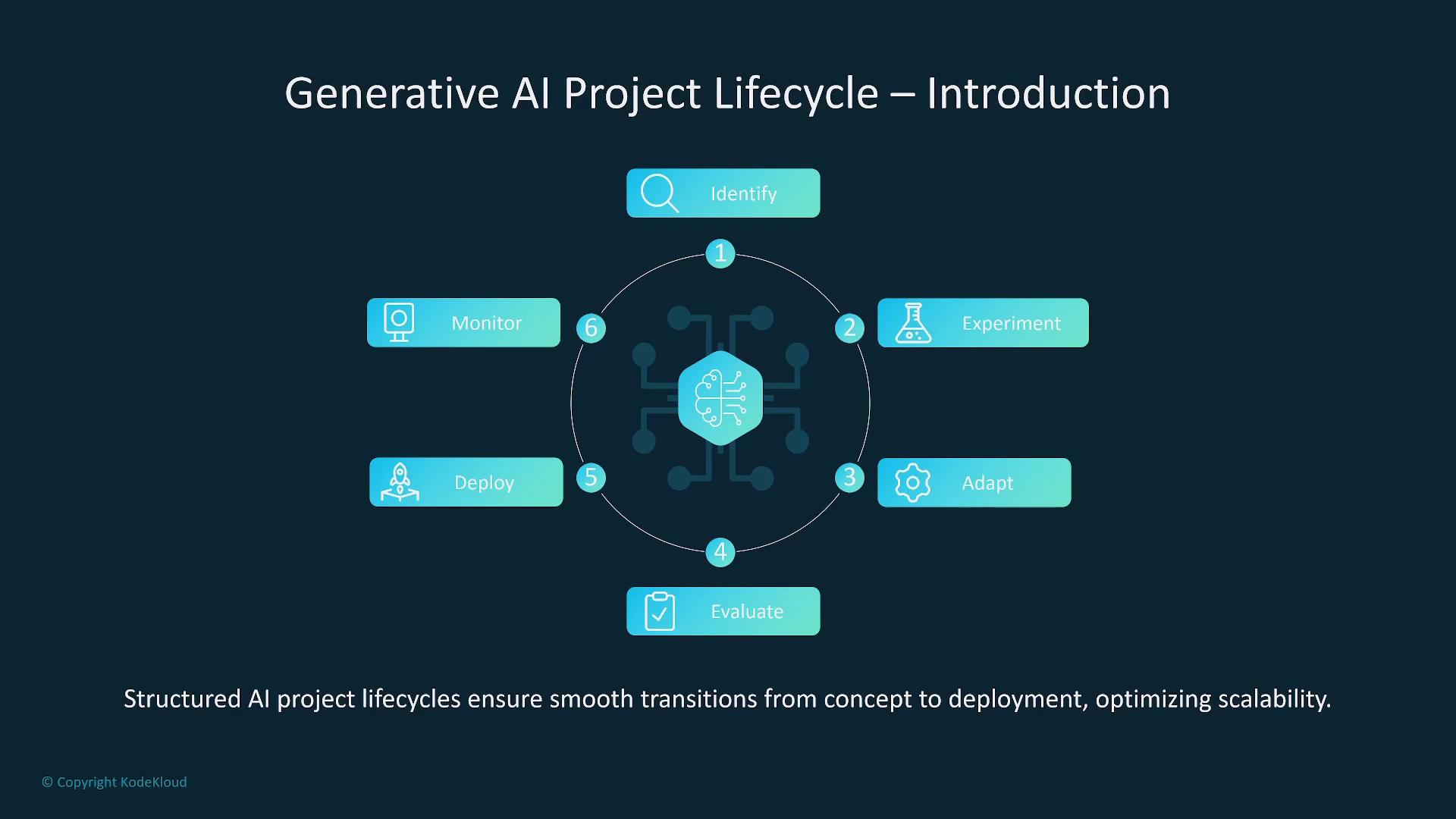

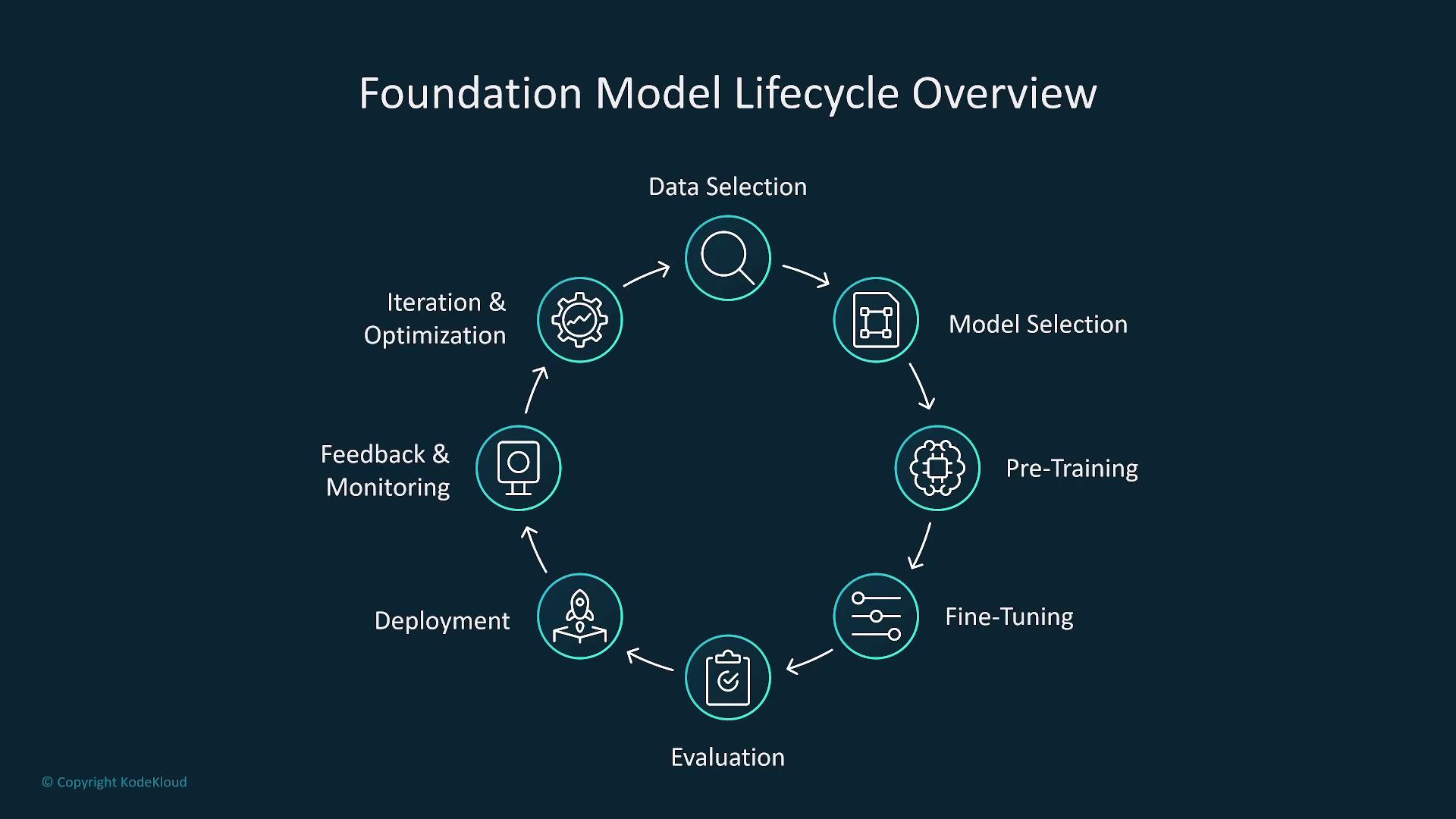

Welcome back! In this lesson, we walk through the lifecycle of generative AI foundation models, a systematic process that mirrors the traditional machine learning lifecycle. It takes a project from initial conception through deployment and monitoring, with a focus on scalability, accuracy, and continuous improvement.

Project Initiation

The lifecycle begins with problem identification and data collection. From there, teams move through experimentation and model selection, adaptation (additional training and fine-tuning), evaluation, deployment, and continuous monitoring to optimize performance.

Defining the Use Case

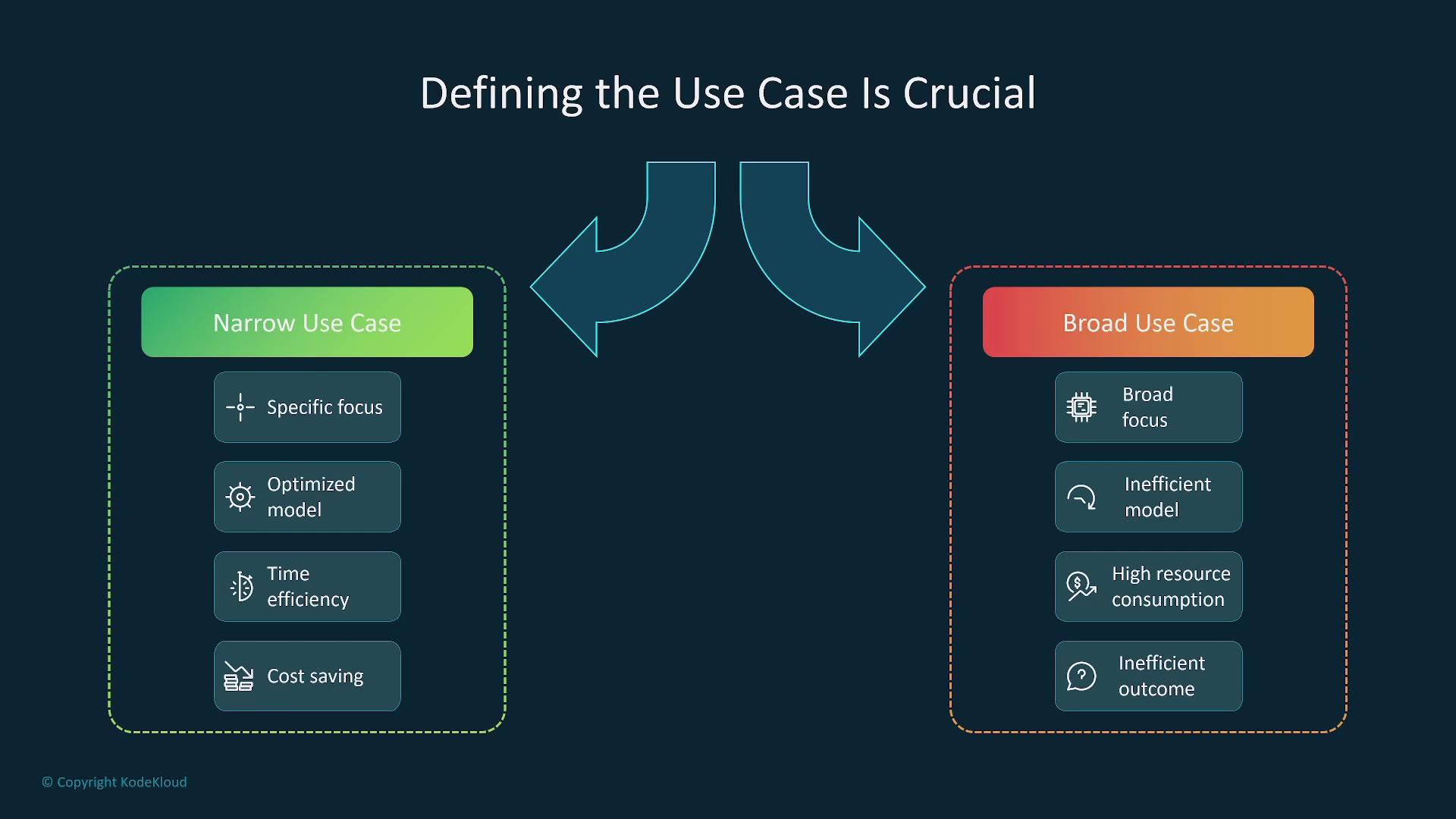

Clearly defining the use case is essential. By outlining the project objectives, you determine whether a narrow or broad model approach is most appropriate. A narrow focus can increase efficiency, optimize computational resource usage, and prevent overengineering. In many instances, deploying several specialized models yields better performance than a single, all-encompassing model.

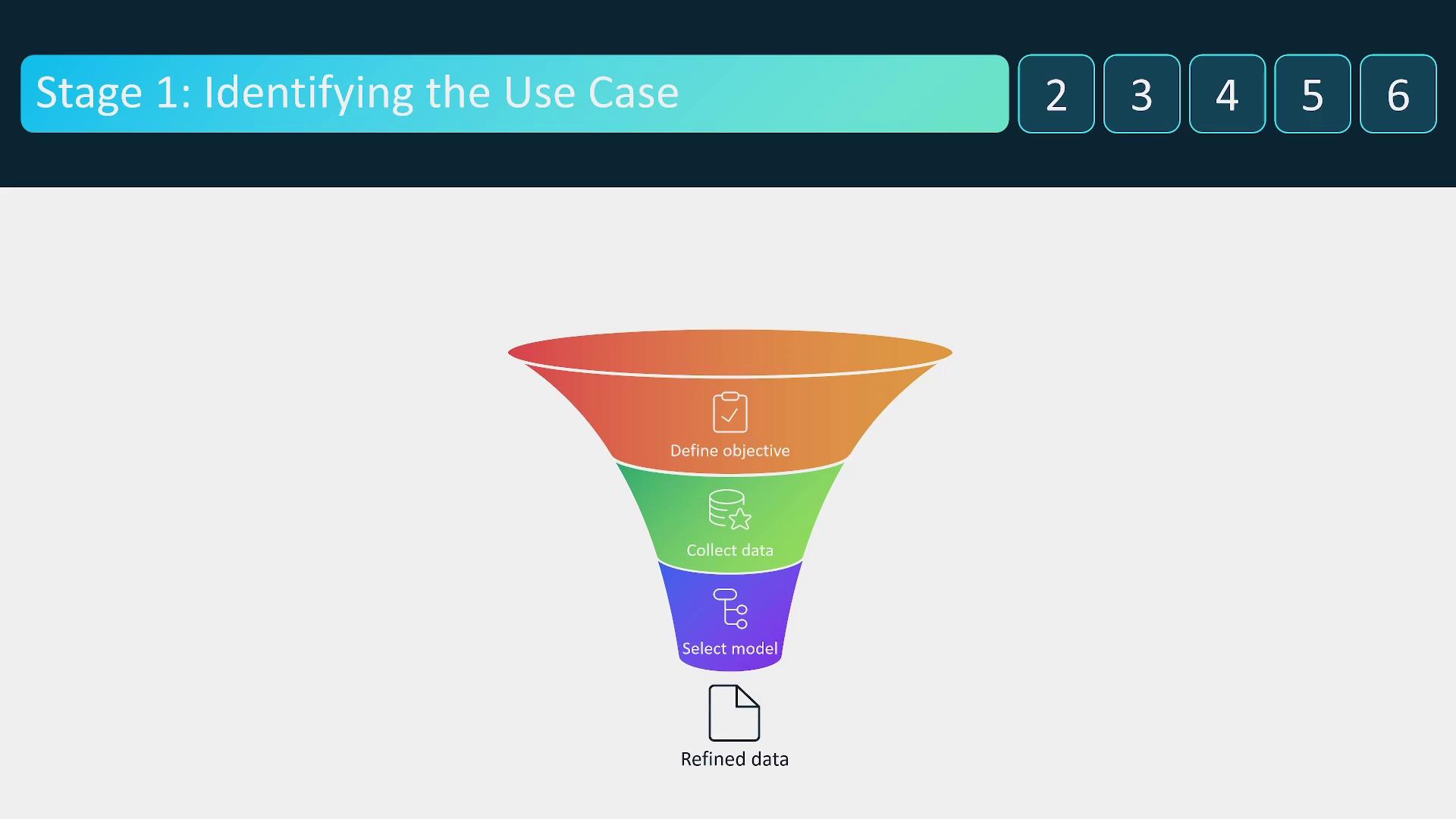

Clarifying the Use Case

Start by identifying the specific task and objectives of your project. Collect and refine the necessary data, which then serves as the critical foundation for leveraging a pre-trained model or undertaking custom model training.

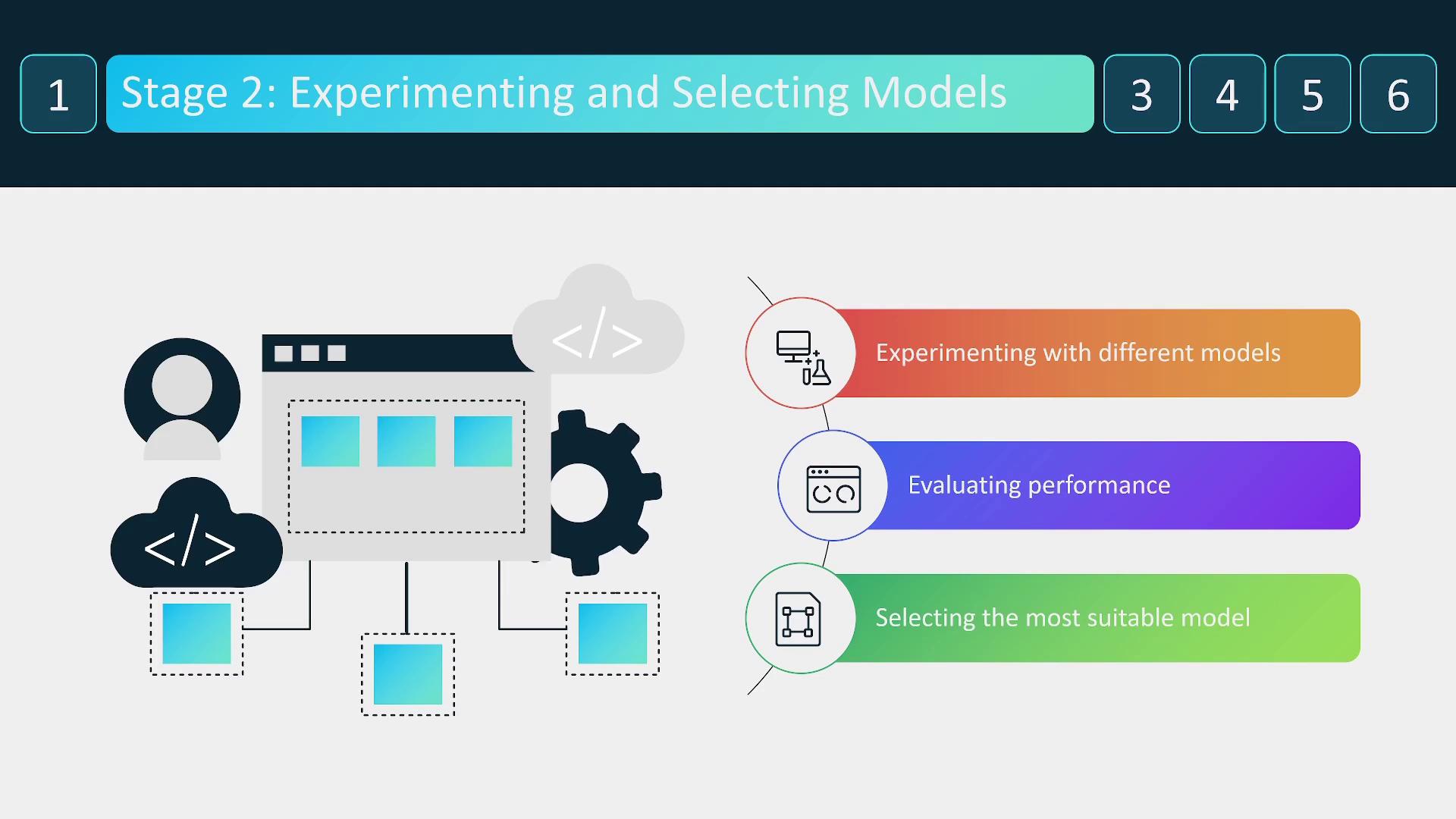

Experimentation and Model Selection

The next step in the process involves experimenting with various models. This phase evaluates different options using established metrics and benchmarks, allowing you to select the most suitable candidate. Enhancements during this stage involve additional training, prompt engineering, and fine-tuning to meet key business outcomes.
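One way to make this comparison concrete is to score each candidate against the same metrics and constraints. The following is a minimal sketch, not a prescribed method: the `Candidate` fields, the model names, the numbers, and the weights are all hypothetical and would come from your own benchmarks and business requirements.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    accuracy: float     # fraction of benchmark items answered correctly (0 to 1)
    latency_ms: float   # average response time per request
    cost_per_1k: float  # hypothetical cost per 1,000 requests


def score(c: Candidate, max_latency_ms: float, max_cost: float) -> float:
    """Combine metrics into one number; higher is better. Weights are illustrative."""
    # Hard constraints: a candidate that is too slow or too expensive is ruled out.
    if c.latency_ms > max_latency_ms or c.cost_per_1k > max_cost:
        return float("-inf")
    return (
        0.7 * c.accuracy
        + 0.2 * (1 - c.latency_ms / max_latency_ms)
        + 0.1 * (1 - c.cost_per_1k / max_cost)
    )


def select(candidates, max_latency_ms=1000.0, max_cost=5.0):
    """Return the candidate with the highest combined score."""
    return max(candidates, key=lambda c: score(c, max_latency_ms, max_cost))


# Made-up benchmark results for three hypothetical models.
candidates = [
    Candidate("model-a", accuracy=0.91, latency_ms=1400, cost_per_1k=4.0),
    Candidate("model-b", accuracy=0.86, latency_ms=400, cost_per_1k=1.0),
    Candidate("model-c", accuracy=0.74, latency_ms=200, cost_per_1k=0.5),
]
best = select(candidates)
print(best.name)
```

Here the most accurate model is excluded because it exceeds the latency limit, which illustrates why selection usually weighs several metrics rather than accuracy alone.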

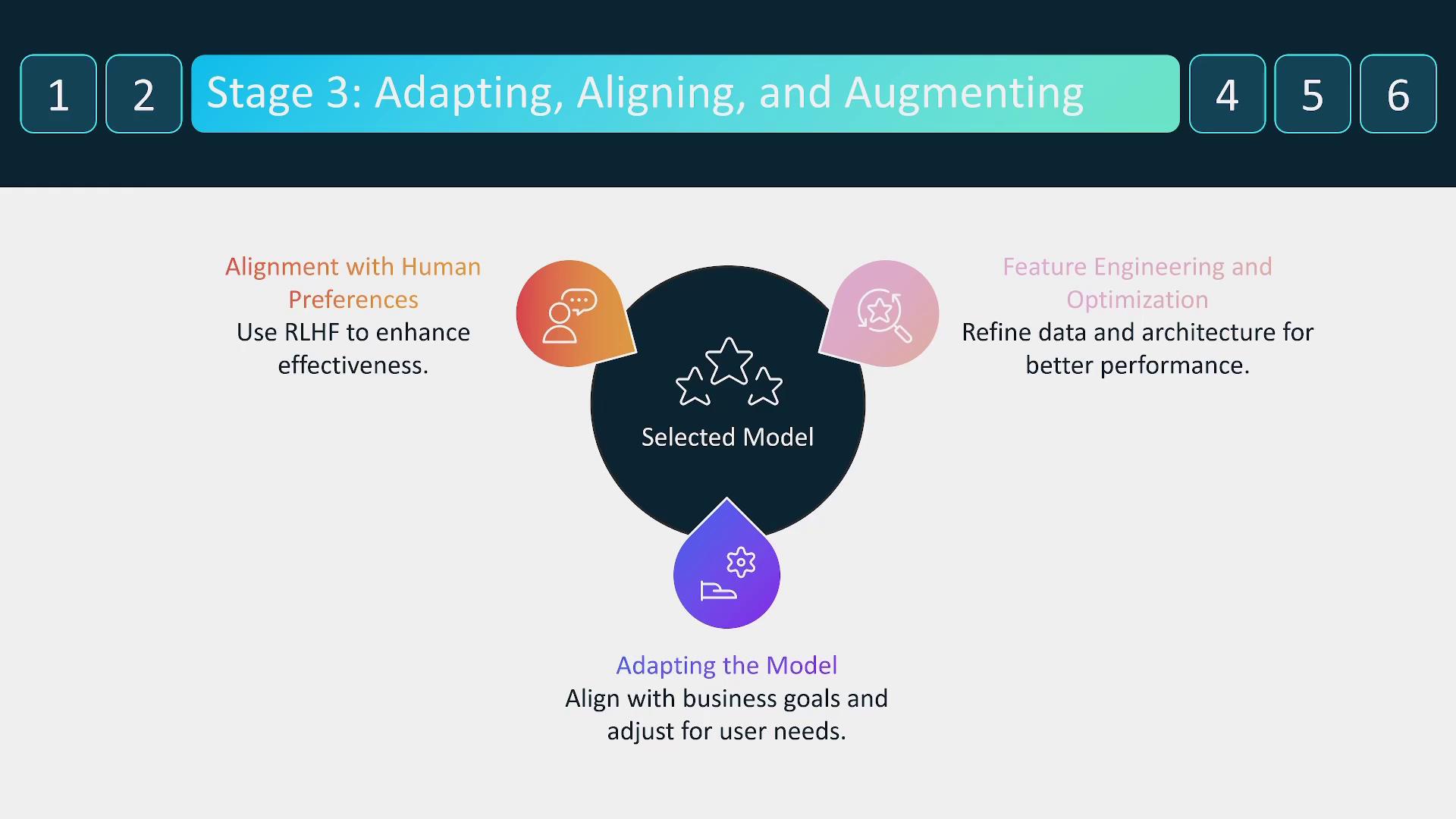

Adaptation and Alignment

After choosing a promising model, refine its performance further with prompt engineering and fine-tuning. Continue adapting the model as user feedback and new data arrive. Techniques such as reinforcement learning from human feedback (RLHF) may also be applied, but take care that the feedback data does not introduce bias or poison the model.

Note

When refining models, always validate adjustments with controlled experiments to maintain model integrity.
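A controlled experiment can be as simple as scoring the baseline and the adjusted model on the same held-out examples and accepting the change only if it clears a minimum gain. The sketch below assumes per-example quality scores between 0 and 1 are already available; the function name, the `min_gain` threshold, and the sample scores are hypothetical.

```python
def compare_on_holdout(baseline_scores, candidate_scores, min_gain=0.02):
    """Compare two models scored on the same held-out examples."""
    if len(baseline_scores) != len(candidate_scores):
        raise ValueError("Both models must be scored on the same examples")
    n = len(baseline_scores)
    baseline_mean = sum(baseline_scores) / n
    candidate_mean = sum(candidate_scores) / n
    # Count examples where the adjusted model did worse than the baseline.
    regressions = sum(
        1 for b, c in zip(baseline_scores, candidate_scores) if c < b
    )
    return {
        "baseline_mean": baseline_mean,
        "candidate_mean": candidate_mean,
        "regressions": regressions,
        "accept": candidate_mean - baseline_mean >= min_gain,
    }


# Made-up per-example scores for illustration only.
result = compare_on_holdout([0.5, 0.5, 0.5, 0.5], [0.6, 0.6, 0.4, 0.6])
print(result)
```

Tracking regressions alongside the mean helps catch an adjustment that improves the average while breaking specific cases.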

Rigorous Evaluation

Once the model is fine-tuned, it is vital to evaluate it under realistic conditions. This evaluation employs multiple metrics and benchmarks to verify that the model consistently delivers the expected performance and remains robust in various scenarios.
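As an illustration of using multiple metrics across scenarios, the sketch below computes exact match and token-level F1 per scenario. These two metrics are chosen here as common examples, not because the lesson prescribes them, and the scenario names and sample data are made up.

```python
from collections import Counter


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the texts match after trimming and lowercasing, else 0.0."""
    return float(prediction.strip().lower() == reference.strip().lower())


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (whitespace tokens)."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def evaluate(examples):
    """examples: iterable of (scenario, prediction, reference) tuples."""
    grouped = {}
    for scenario, prediction, reference in examples:
        grouped.setdefault(scenario, []).append(
            (exact_match(prediction, reference), token_f1(prediction, reference))
        )
    return {
        scenario: {
            "exact_match": sum(em for em, _ in rows) / len(rows),
            "f1": sum(f1 for _, f1 in rows) / len(rows),
        }
        for scenario, rows in grouped.items()
    }


# Hypothetical predictions and references grouped by scenario.
report = evaluate([
    ("short_questions", "Paris", "paris"),
    ("short_questions", "the cat", "the cat sat"),
    ("noisy_input", "a b", "c d"),
])
print(report)
```

Reporting results per scenario, rather than one overall average, makes it easier to see where the model is not robust.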

Deployment and Monitoring

Upon successful evaluation, the model is ready for deployment. Integration with the existing infrastructure must ensure responsiveness and business continuity. Post-deployment, continuous monitoring is established to track performance, gather user feedback, and adjust for evolving requirements.
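Continuous monitoring can be sketched as a rolling average over recent quality scores that raises a flag when performance drops. This is a simplified, in-memory illustration: the `QualityMonitor` class, the window size, and the threshold are assumptions, and a production setup would typically rely on a dedicated monitoring service.

```python
from collections import deque


class QualityMonitor:
    """Tracks a rolling average of recent quality scores (0 to 1)."""

    def __init__(self, window: int = 100, threshold: float = 0.8):
        self.scores = deque(maxlen=window)  # oldest scores drop off automatically
        self.threshold = threshold

    def record(self, score: float) -> None:
        self.scores.append(score)

    def average(self):
        """Return the rolling average, or None if nothing was recorded yet."""
        if not self.scores:
            return None
        return sum(self.scores) / len(self.scores)

    def needs_attention(self) -> bool:
        """True when the rolling average has fallen below the threshold."""
        avg = self.average()
        return avg is not None and avg < self.threshold


monitor = QualityMonitor(window=3, threshold=0.8)
for s in (1.0, 1.0, 1.0):
    monitor.record(s)
healthy = monitor.needs_attention()

for s in (0.0, 0.0):  # e.g. two poorly rated responses in a row
    monitor.record(s)
degraded = monitor.needs_attention()
print(healthy, degraded)
```

The scores could come from user ratings or automated checks; either way, a flag like this is a prompt to investigate, not a diagnosis.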

Overview of the Lifecycle

This lifecycle echoes traditional MLOps practices by emphasizing a cycle of data selection, model selection, pre-training, fine-tuning, evaluation, deployment, and continuous improvement driven by feedback.

Broad vs. Narrow Model Approaches

Often, there is a critical decision between a broad and a narrow model approach. For example, while chatbots may benefit from models with broad capabilities, specialized applications—such as legal document analysis or named entity recognition—are best served by models with a narrow focus.
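When several narrow models sit alongside a broad one, something has to decide which model handles each request. The sketch below uses simple keyword matching purely for illustration; the model names and keyword lists are hypothetical, and real systems often use a classifier or other routing logic instead.

```python
# Hypothetical mapping from specialized model names to trigger keywords.
ROUTES = {
    "legal-analysis-model": ("contract", "clause", "liability"),
    "ner-model": ("extract names", "entities"),
}


def route(request_text: str, routes=ROUTES, default: str = "general-model") -> str:
    """Pick a specialized model if a keyword matches, else the broad default."""
    text = request_text.lower()
    for model_name, keywords in routes.items():
        if any(keyword in text for keyword in keywords):
            return model_name
    return default


print(route("Review this contract clause for risks"))
print(route("Tell me a joke"))
```

The first request matches a legal keyword and goes to the narrow model, while the second falls through to the broad default.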

When selecting a model, you typically choose between utilizing pre-trained models—which are generally more resource-efficient—or training a model from scratch, which, although more expensive, can be customized for highly specialized domains.

Warning

Ensure that clear project objectives and appropriate resource planning are established early on to avoid unnecessary costs and inefficiencies.

Importance of Clear Objectives

Establishing clear objectives is critical for optimizing computational resources and ensuring smooth progress throughout the project lifecycle. By setting precise goals, you can avoid inefficiencies and manage resource usage effectively.

The Role of Prompt Engineering

Prompt engineering plays a vital role in maximizing model accuracy. Two types of input prompts are used:

- System Prompts: Set by the application developer, these define the model's backend behavior and security guidelines.

- User Prompts: Supplied by the end user, these initiate model interactions and can be refined over successive attempts.

Providing in-context examples within prompts can significantly boost performance without additional training. However, choose those examples with care: biased or malicious examples can skew the model's output even though they do not change its weights.
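The two prompt types and the in-context examples can be combined into a single request. The sketch below assumes a generic list of role-tagged messages, a shape many chat-style APIs resemble; it does not target any specific service, and the function name and sample text are hypothetical.

```python
def build_messages(system_prompt, examples, user_input):
    """Assemble a system prompt, few-shot examples, and the user's request."""
    # The system prompt comes first and sets behavior and guardrails.
    messages = [{"role": "system", "content": system_prompt}]
    # Each in-context example is a question paired with the desired answer.
    for question, answer in examples:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    # The real user prompt goes last so the model answers it.
    messages.append({"role": "user", "content": user_input})
    return messages


messages = build_messages(
    system_prompt="You classify support tickets as 'billing' or 'technical'.",
    examples=[
        ("I was charged twice this month.", "billing"),
        ("The app crashes when I log in.", "technical"),
    ],
    user_input="My invoice shows the wrong amount.",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Because the examples live in the prompt, they can be swapped or reviewed without retraining the model.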

Conclusion

The lifecycle for foundation models in generative AI involves several key phases: clearly defining the use case, selecting the right model, prompt engineering, rigorous evaluation, deployment, and continuous monitoring. Each stage is integral to maintaining alignment with human expectations and achieving business objectives.

See you in the next lesson!
