Inspecting the Current Cluster
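
A minimal sketch of this inspection, assuming `kubectl` is already configured against the cluster. The function name `inspect_cluster` is hypothetical and used only for illustration.

```shell
# Hypothetical helper: report versions and node health before upgrading.
inspect_cluster() {
  # Client and API server versions.
  kubectl version || return 1
  # One row per node; the VERSION column shows each node's kubelet version.
  kubectl get nodes
}
```

Calling `inspect_cluster` before the upgrade gives a baseline to compare against once each node has been upgraded.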

Before upgrading, verify the existing cluster configuration: check the current version of the Kubernetes cluster and confirm the status of each node.

Upgrade Strategy

Since application uptime is critical and no additional virtual machines are available, the recommended strategy is to upgrade one node at a time. This sequential approach lets workloads migrate safely between nodes and avoids downtime. Upgrading all nodes simultaneously would disrupt active workloads, so a staged upgrade (first the control plane, then the worker node) is the better choice.

!!! note "Important"
    Follow this strategy throughout the upgrade to prevent service interruptions.

Checking the Latest Stable Version with kubeadm
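
As a sketch, the versions available for upgrade can be listed with `kubeadm upgrade plan`, run on the control plane node. The wrapper name `show_upgrade_plan` and the use of `sudo` are assumptions for illustration.

```shell
# Hypothetical wrapper around the kubeadm planning step.
show_upgrade_plan() {
  # Reports the current cluster version and the versions kubeadm can
  # upgrade to; this step only reports and upgrades nothing.
  sudo kubeadm upgrade plan
}
```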

Before initiating the upgrade, use kubeadm's upgrade plan to determine the latest stable Kubernetes version available.

Upgrading the Control Plane Node

Draining the Control Plane
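
A minimal sketch of the drain step. The helper name `drain_node` is hypothetical, and the node name is passed in because the control plane node's name is not given here.

```shell
# Hypothetical helper; pass the node name shown by `kubectl get nodes`.
drain_node() {
  node="$1"
  # Cordon the node and evict its pods. DaemonSet-managed pods are
  # skipped instead of blocking the drain.
  kubectl drain "$node" --ignore-daemonsets
}
```

For example, `drain_node controlplane`, where `controlplane` is an assumed node name.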

Start by draining the control plane node so that its workloads are safely migrated. Because DaemonSet-managed pods are running on the node, the drain command must include the `--ignore-daemonsets` flag.

Upgrading kubeadm and the Control Plane Components

The upgrade process involves updating the kubeadm tool, then the control plane components, and finally the kubelet. The goal is to upgrade from v1.19.0 to v1.20.0. Follow these steps:

- Update the Package Cache and Check Available Versions

- Upgrade kubeadm

  Depending on your environment, apply one of the available installation methods, then verify the upgrade by checking the version that kubeadm reports.

- Check the Upgrade Plan Again

- Apply the Upgrade to the Control Plane

  Upgrade the control plane components to v1.20.0. If successful, kubeadm prints confirmation messages stating that the cluster was upgraded to the target version.
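
The control plane steps can be sketched as the shell functions below. This assumes a Debian/Ubuntu node managed with apt, a package version string of `1.20.0-00`, and `sudo` access. The function names are hypothetical, and the two install variants are the hold-based methods from the official kubeadm upgrade procedure, which may differ from the methods originally intended here.

```shell
# Assumed values; adjust to what `apt-cache madison kubeadm` lists.
TARGET_VERSION="1.20.0"
PKG_VERSION="${TARGET_VERSION}-00"   # "-00" is an assumed package revision

# Step 1: refresh the package cache and list installable kubeadm versions.
list_kubeadm_versions() {
  sudo apt-get update || return 1
  apt-cache madison kubeadm
}

# Step 2, variant A: lift the package hold, install, then hold again.
upgrade_kubeadm_unhold() {
  sudo apt-mark unhold kubeadm || return 1
  sudo apt-get install -y "kubeadm=${PKG_VERSION}" || return 1
  sudo apt-mark hold kubeadm
}

# Step 2, variant B: install in one step, overriding the hold.
upgrade_kubeadm_held() {
  sudo apt-get install -y --allow-change-held-packages "kubeadm=${PKG_VERSION}"
}

# Verify the tool version, review the plan again, then apply the upgrade.
apply_control_plane_upgrade() {
  kubeadm version || return 1
  sudo kubeadm upgrade plan || return 1
  # kubeadm asks for confirmation before it proceeds.
  sudo kubeadm upgrade apply "v${TARGET_VERSION}"
}
```

A typical sequence would be `list_kubeadm_versions`, one of the two `upgrade_kubeadm_*` variants, then `apply_control_plane_upgrade`.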

Upgrading kubelet and kubectl
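
A sketch of this step under the same assumptions as before (apt-based node, package version `1.20.0-00`, `sudo` access). The function names are hypothetical. The uncordon step is not described here explicitly; it is included because a drained node stays unschedulable until it is uncordoned.

```shell
PKG_VERSION="1.20.0-00"   # assumed package version string

upgrade_kubelet_kubectl() {
  # Install matching kubelet and kubectl packages, overriding any apt hold.
  sudo apt-get install -y --allow-change-held-packages \
    "kubelet=${PKG_VERSION}" "kubectl=${PKG_VERSION}" || return 1
  # Reload unit files and restart the kubelet so the new binary is used.
  sudo systemctl daemon-reload || return 1
  sudo systemctl restart kubelet
}

# Make the node schedulable again after the upgrade.
uncordon_node() {
  kubectl uncordon "$1"
}
```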

To keep the node components in sync with the control plane, upgrade kubelet and kubectl to the same version.

Upgrading the Worker Node

Draining the Worker Node
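
A sketch of draining node01, run from a machine with `kubectl` access rather than from node01 itself. The `--ignore-daemonsets` flag is carried over from the control plane drain on the assumption that DaemonSet pods also run on node01. The function names are hypothetical.

```shell
WORKER_NODE="node01"

drain_worker() {
  # Cordon node01 and evict its pods so they are rescheduled elsewhere.
  kubectl drain "$WORKER_NODE" --ignore-daemonsets
}

check_worker_drained() {
  # After a drain, the STATUS column should include SchedulingDisabled.
  kubectl get node "$WORKER_NODE"
}
```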

Before upgrading the worker node (node01), drain it to migrate its workloads.

Upgrading kubeadm on the Worker Node
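
A sketch of the worker-side kubeadm upgrade, meant to be run on node01 after connecting (for example with `ssh node01`, assuming that hostname resolves). The package version string, `sudo`, and the function name are assumptions. The `kubeadm upgrade node` call is not mentioned here; it follows the official worker node procedure.

```shell
PKG_VERSION="1.20.0-00"   # assumed package version string

# Run on node01.
upgrade_worker_kubeadm() {
  sudo apt-get update || return 1
  sudo apt-get install -y --allow-change-held-packages "kubeadm=${PKG_VERSION}" || return 1
  # On a worker node this refreshes the local kubelet configuration.
  sudo kubeadm upgrade node
}
```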

SSH into node01 using its IP address or your configured SSH settings, then upgrade the kubeadm tool.

Upgrading kubelet and kubectl on the Worker Node
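
A sketch of the final worker steps, under the same assumptions (apt-based node, package version `1.20.0-00`, `sudo` access, hypothetical function names). The first function runs on node01. The second runs back on a machine with `kubectl` access and is an added step that returns node01 to service.

```shell
PKG_VERSION="1.20.0-00"   # assumed package version string

# Run on node01.
upgrade_worker_kubelet() {
  sudo apt-get install -y --allow-change-held-packages \
    "kubelet=${PKG_VERSION}" "kubectl=${PKG_VERSION}" || return 1
  sudo systemctl daemon-reload || return 1
  sudo systemctl restart kubelet
}

# Run where kubectl is configured, after leaving the SSH session.
finish_worker_upgrade() {
  # Allow pods to be scheduled on node01 again.
  kubectl uncordon node01 || return 1
  # Both nodes should now report the new version in the VERSION column.
  kubectl get nodes
}
```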

Upgrade kubelet and kubectl on node01 to match the control plane version.

Reference to Kubernetes Documentation

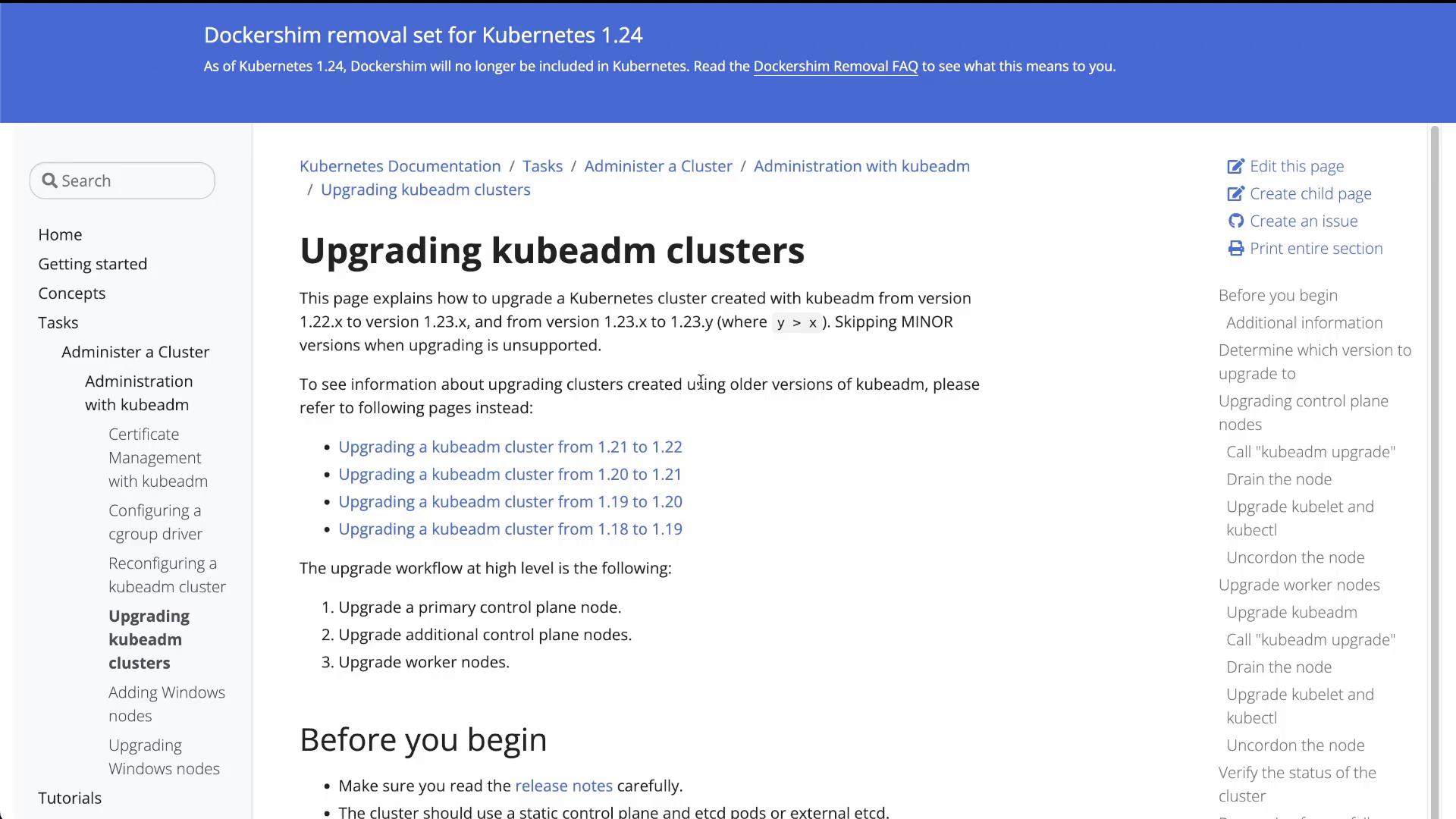

For more detailed instructions on upgrading kubeadm clusters, refer to the official Kubernetes documentation. An example screenshot from the documentation is shown below: