EFK Stack: Enterprise-Grade Logging and Monitoring

Elasticsearch and Kibana Deployment on Kubernetes

Scaling Elasticsearch Nodes

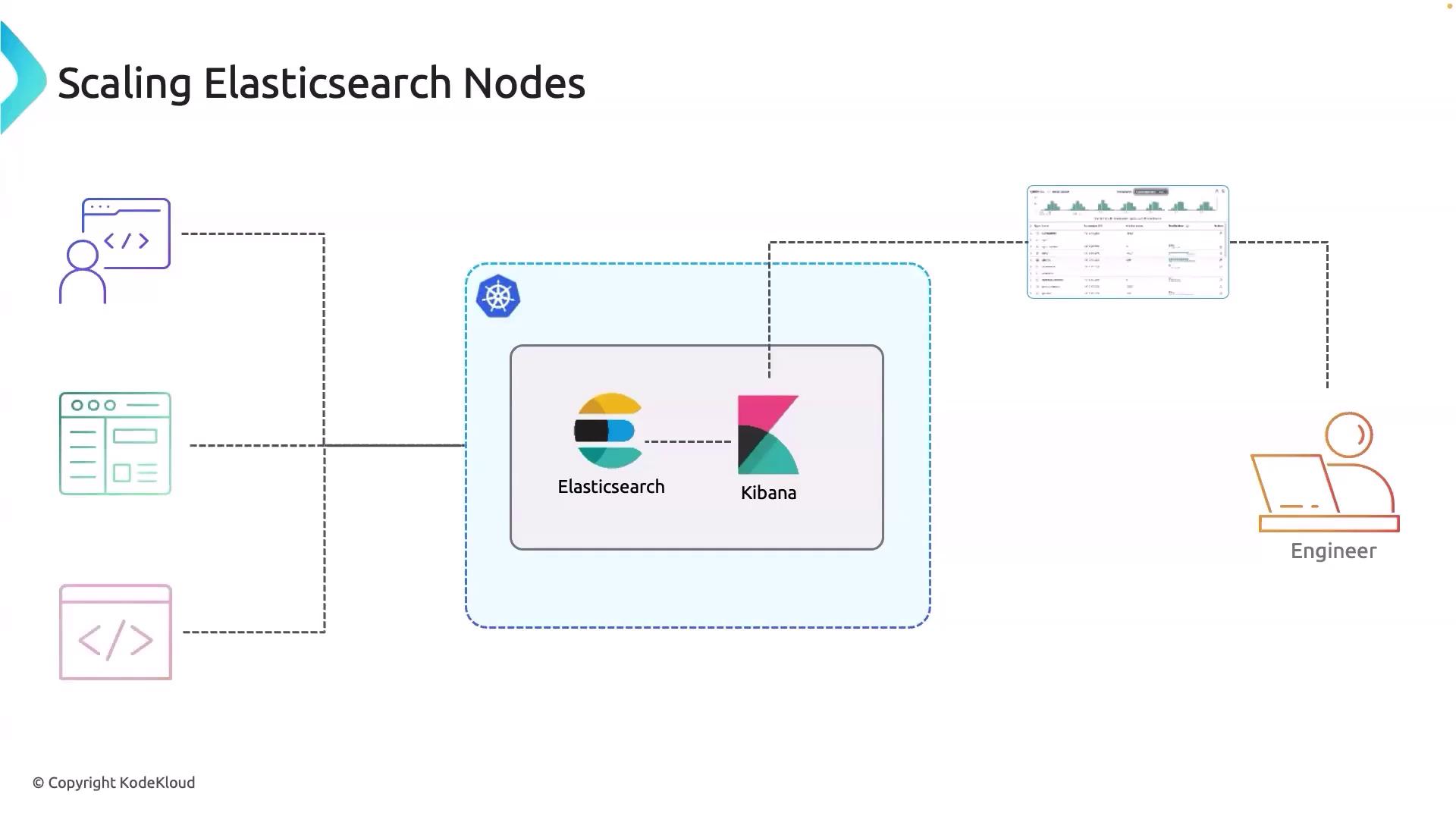

Hello and welcome back! In this article, we discuss the key challenges and strategies for scaling Elasticsearch nodes within a Kubernetes cluster. In our current setup, both Elasticsearch and Kibana are deployed on Kubernetes. As an SRE or DevOps engineer, you rely on Kibana to monitor the health of the Kubernetes cluster and its applications, while external developers use Elasticsearch and Kibana to build dashboards and run CLI commands.

Assume this reflects your current organizational setup. As the volume of logs entering Elasticsearch grows, the cluster risks becoming both a bottleneck and a single point of failure. The key question is: when should you consider scaling Elasticsearch nodes? The answer lies in understanding the demands and constraints your cluster faces.

When to Scale

Scaling Elasticsearch nodes is essential when:

- The data volume overwhelms current nodes, resulting in performance degradation.

- High query load slows searches because nodes can no longer keep up with requests.

- Resource bottlenecks (CPU, memory, or disk I/O) show that nodes are nearing their operational limits.

- Ingestion rates exceed the capacity of existing nodes.

- Redundancy and high availability are needed to prevent data loss and downtime.

- Geographical distribution demands optimized performance across regions.
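
The checklist above can be sketched as a small decision helper. This is only an illustration: the `ClusterMetrics` fields, the `scaling_reasons` function, and the threshold values are hypothetical and not taken from Elasticsearch itself, so tune them to what your own monitoring reports.

```python
from dataclasses import dataclass


@dataclass
class ClusterMetrics:
    """Hypothetical snapshot of cluster health, gathered by your monitoring."""
    disk_used_pct: float          # highest data-node disk usage, 0-100
    heap_used_pct: float          # highest JVM heap usage, 0-100
    search_queue_rejections: int  # rejected search tasks in the sample window
    write_queue_rejections: int   # rejected write tasks in the sample window
    data_node_count: int          # number of nodes that hold data


def scaling_reasons(m, disk_limit=80.0, heap_limit=75.0):
    """Return the reasons (if any) that suggest the cluster should scale.

    The limits are illustrative assumptions, not official recommendations.
    """
    reasons = []
    if m.disk_used_pct >= disk_limit:
        reasons.append("data volume")
    if m.heap_used_pct >= heap_limit:
        reasons.append("memory pressure")
    if m.search_queue_rejections > 0:
        reasons.append("query load")
    if m.write_queue_rejections > 0:
        reasons.append("ingestion rate")
    if m.data_node_count < 2:
        reasons.append("no redundancy")
    return reasons


if __name__ == "__main__":
    busy = ClusterMetrics(85.0, 60.0, 0, 12, 3)
    print(scaling_reasons(busy))
```

An empty list means none of the assumed thresholds were crossed; any other result names the condition from the list above that is worth investigating first.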

Scaling Strategies

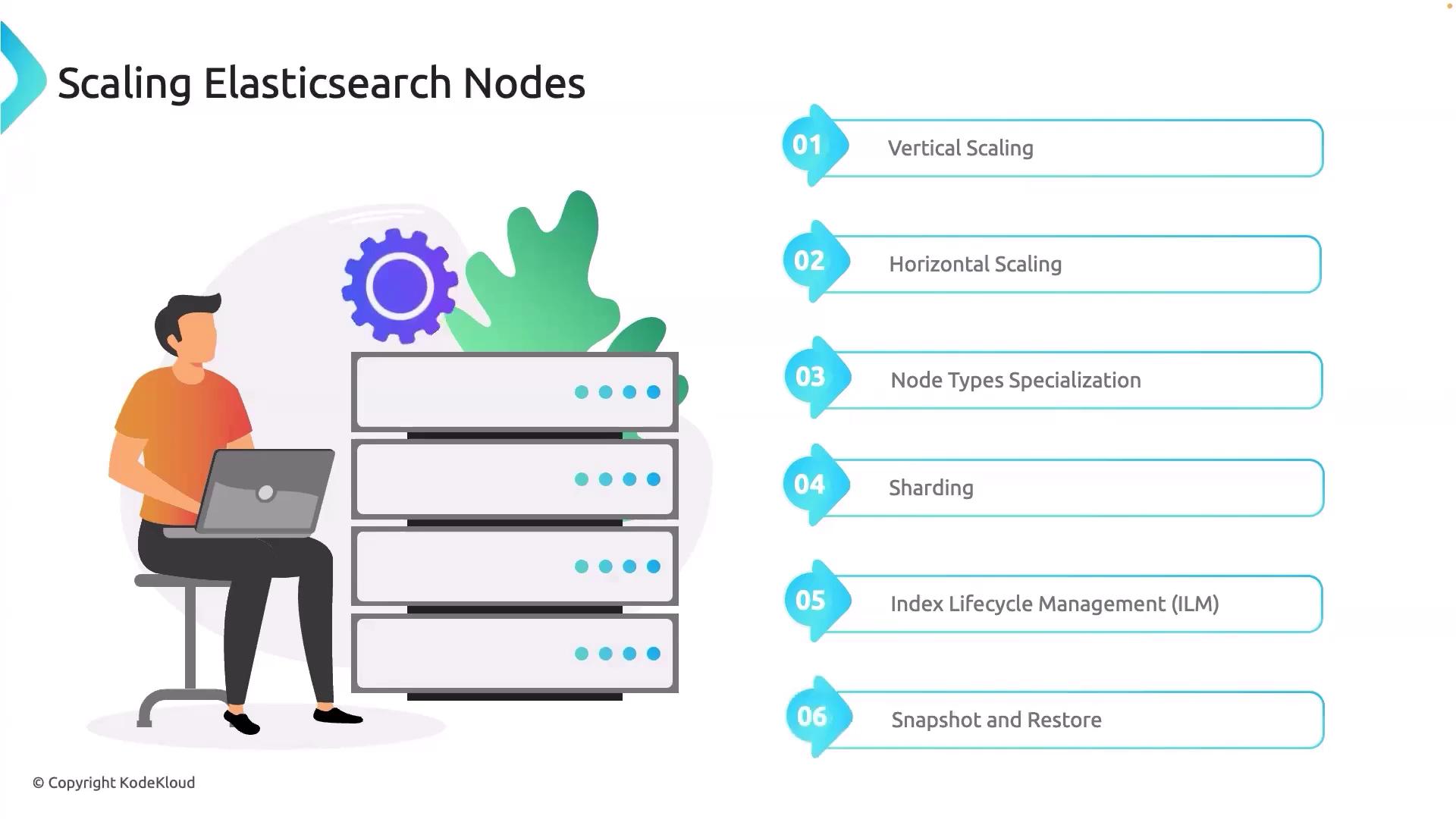

There are two principal approaches when it comes to scaling Elasticsearch nodes:

- Vertical Scaling: Increase the resources (CPUs, memory) of existing nodes.

- Horizontal Scaling: Add more nodes to distribute the load more evenly across the cluster.
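
To make the difference concrete, here is a minimal sketch of the two kinds of change expressed as Kubernetes patch bodies. It assumes Elasticsearch runs as a StatefulSet whose container is named `elasticsearch`; the function names and the example CPU and memory values are hypothetical.

```python
import json


def horizontal_patch(replicas: int) -> dict:
    """Horizontal scaling: change how many Elasticsearch pods run."""
    return {"spec": {"replicas": replicas}}


def vertical_patch(cpu: str, memory: str, container: str = "elasticsearch") -> dict:
    """Vertical scaling: give each existing pod more CPU and memory.

    The container name is an assumption; it must match your manifest.
    """
    resources = {
        "requests": {"cpu": cpu, "memory": memory},
        "limits": {"cpu": cpu, "memory": memory},
    }
    return {
        "spec": {
            "template": {
                "spec": {
                    "containers": [{"name": container, "resources": resources}]
                }
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(horizontal_patch(5)))
    print(json.dumps(vertical_patch("4", "16Gi"), indent=2))
```

The printed JSON could be supplied to `kubectl patch statefulset` for your own StatefulSet. Note that the horizontal patch only touches the replica count, while the vertical patch changes the pod template and therefore restarts the pods.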

A highly effective and often overlooked strategy is node type specialization. This approach dedicates specific nodes to specific tasks, such as data ingestion or query processing, and can relieve performance bottlenecks quickly without relying solely on scaling up or out.
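
In recent Elasticsearch versions, specialization is configured through the `node.roles` setting in each node's `elasticsearch.yml`. The sketch below generates that line for a few node groups; the group names and the `node_roles_setting` helper are hypothetical, and the split shown is just one possible layout.

```python
# Hypothetical node groups mapped to the Elasticsearch roles they carry.
NODE_GROUPS = {
    "master": ["master"],   # cluster coordination only
    "data": ["data"],       # stores shards, runs searches and indexing
    "ingest": ["ingest"],   # runs ingest pipelines before indexing
    "coordinating": [],     # empty role list = coordinating-only node
}


def node_roles_setting(group: str) -> str:
    """Return the elasticsearch.yml line for one node group."""
    roles = NODE_GROUPS[group]
    return "node.roles: [" + ", ".join(roles) + "]"


if __name__ == "__main__":
    for group in NODE_GROUPS:
        print(f"{group}: {node_roles_setting(group)}")
```

On Kubernetes, each group would typically be its own set of pods with its own resource sizing, so that heavy ingestion does not compete with query traffic for the same nodes.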

Additional performance optimization techniques include:

- Sharding: Splits each index into shards that are distributed across multiple nodes, improving search and indexing performance.

- Index Lifecycle Management (ILM): Automates how indices are rolled over, aged, and eventually deleted.

- Snapshot and Restore: Provides reliable backup and recovery solutions to maintain data integrity during scaling operations.
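
As a rough illustration of the first two techniques, the sketch below estimates a primary shard count and builds an ILM policy body of the kind sent to the `_ilm/policy` API. The function names, the 30 GB target shard size, and the rollover and retention values are assumptions chosen for the example, not recommendations from this lesson.

```python
import json
import math


def primary_shard_count(index_size_gb: float, target_shard_gb: float = 30.0) -> int:
    """Estimate primary shards so each stays near the target size (assumed)."""
    return max(1, math.ceil(index_size_gb / target_shard_gb))


def ilm_policy(rollover_size: str = "50gb",
               rollover_age: str = "7d",
               delete_after: str = "30d") -> dict:
    """Build an ILM policy: roll over in the hot phase, delete old indices."""
    return {
        "policy": {
            "phases": {
                "hot": {
                    "actions": {
                        "rollover": {
                            "max_primary_shard_size": rollover_size,
                            "max_age": rollover_age,
                        }
                    }
                },
                "delete": {
                    "min_age": delete_after,
                    "actions": {"delete": {}},
                },
            }
        }
    }


if __name__ == "__main__":
    print(primary_shard_count(200))
    print(json.dumps(ilm_policy(), indent=2))
```

With rollover in place, log indices stay at a predictable size, which keeps shard counts manageable as ingestion grows.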

Each of these strategies improves the scalability and performance of your Elasticsearch cluster in a different way. Vertical and horizontal scaling change the infrastructure itself, whereas sharding, ILM, and snapshot/restore refine how the cluster is configured and how its data is managed.

Best Practices

Start by experimenting with node type specialization. If further improvements are required, move on to sharding and lifecycle management, and then put snapshot and restore processes in place. Managed services like Elastic Cloud typically rely mainly on vertical and horizontal scaling to expand clusters. More detailed guidance on Elastic Cloud will be covered in future lessons.

That concludes our discussion on scaling Elasticsearch nodes. Thank you for reading, and see you in the next lesson.
