Event Streaming with Kafka

Building Blocks of Kafka

Kafka Brokers: The Foundation of Message Storage

Welcome back! In this lesson, we’ll dive into how Kafka brokers serve as the backbone for storing and managing messages in a Kafka cluster. By the end, you’ll understand their role, core features, and proven real-world scale.

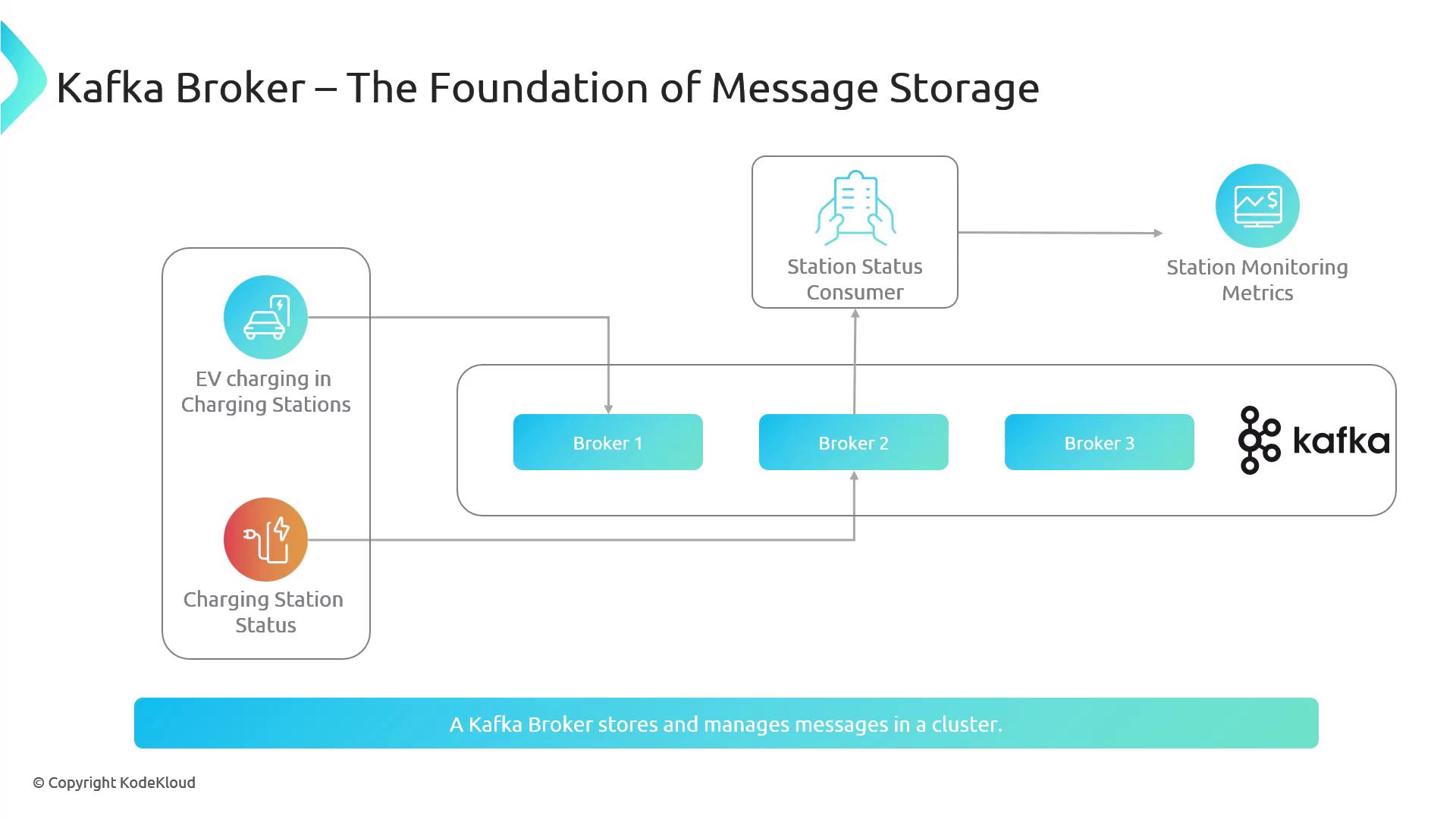

EV Charging Use Case

In our EV charging example, charging stations and IoT sensors act as producers, publishing events (e.g., charge start, energy usage) to Kafka topics. Downstream consumers—such as monitoring services or dashboards—subscribe to these topics, processing events to deliver real-time station availability and usage analytics to end users.
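To make the producer side concrete, here is a minimal sketch of what one charging event might look like before it is published. The field names (`station_id`, `event_type`, `energy_kwh`, `timestamp_ms`) and the JSON encoding are assumptions chosen for illustration, not a schema defined in this lesson. The sketch only builds the key and value bytes; it does not talk to a real cluster.

```python
import json
from dataclasses import dataclass, asdict
from typing import Tuple


@dataclass
class ChargeEvent:
    # All field names here are hypothetical, chosen for illustration only.
    station_id: str    # which charging station emitted the event
    event_type: str    # e.g. "charge_start" or "energy_usage"
    energy_kwh: float  # energy delivered so far in this session
    timestamp_ms: int  # event time in epoch milliseconds


def to_record(event: ChargeEvent) -> Tuple[bytes, bytes]:
    """Return a (key, value) pair of bytes, the general shape of a Kafka record."""
    key = event.station_id.encode("utf-8")
    value = json.dumps(asdict(event), sort_keys=True).encode("utf-8")
    return key, value


key, value = to_record(
    ChargeEvent("station-42", "charge_start", 0.0, 1_700_000_000_000)
)
```

Using the station ID as the record key is a common design choice because events with the same key are routed to the same partition, which keeps each station's events in order.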

What Is a Kafka Broker?

A Kafka broker is a server node in a Kafka cluster that:

- Receives and stores messages from producers

- Persists messages on disk (HDD or SSD) for durability

- Serves messages to consumers on demand

Note

Each broker maintains partitions for topics. Partitions are the unit of parallelism and replication in Kafka.
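As a rough illustration of how a keyed message ends up in one partition, the sketch below hashes the key and takes the result modulo the partition count. This is a simplification: Kafka's default partitioner uses a murmur2 hash, while this example substitutes `zlib.crc32` from the Python standard library. The function name `choose_partition` is hypothetical.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition index (simplified stand-in for Kafka's partitioner)."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    # crc32 is used here only as a deterministic hash; Kafka itself uses murmur2.
    return zlib.crc32(key) % num_partitions


# The same key always lands in the same partition, so per-key order is preserved.
p_first = choose_partition(b"station-42", 6)
p_again = choose_partition(b"station-42", 6)
```

Because the mapping depends on the partition count, changing the number of partitions later changes where keys land. This is one reason partition counts are usually planned up front.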

Summary of Broker Responsibilities

- Ingest messages from producers

- Persist events to local disk for replay and fault tolerance

- Dispatch messages to subscribed consumers
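The three responsibilities above can be sketched with a toy, in-memory broker. This is an illustrative model, not how Kafka is implemented: a real broker writes log segments to disk and speaks a network protocol, while the hypothetical `ToyBroker` class below keeps everything in Python lists. It does show the central idea that each partition is an append-only log addressed by offset, which is what makes replay possible.

```python
from typing import Dict, List, Tuple


class ToyBroker:
    """In-memory stand-in for a broker: one append-only log per (topic, partition)."""

    def __init__(self) -> None:
        self._logs: Dict[Tuple[str, int], List[bytes]] = {}

    def append(self, topic: str, partition: int, message: bytes) -> int:
        """Ingest a message and return the offset it was stored at."""
        log = self._logs.setdefault((topic, partition), [])
        log.append(message)
        return len(log) - 1

    def fetch(self, topic: str, partition: int, offset: int,
              max_messages: int = 10) -> List[bytes]:
        """Serve up to max_messages starting at offset; reading does not delete anything."""
        log = self._logs.get((topic, partition), [])
        return log[offset:offset + max_messages]


broker = ToyBroker()
first_offset = broker.append("charging-events", 0, b"charge_start")
second_offset = broker.append("charging-events", 0, b"energy_usage")

# Two reads from offset 0 return the same data: consumers can replay the log.
first_read = broker.fetch("charging-events", 0, offset=0)
replayed = broker.fetch("charging-events", 0, offset=0)
```

The consumer, not the broker, decides which offset to read from. That is why a dashboard can re-read old events without affecting a monitoring service that is reading the newest ones.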

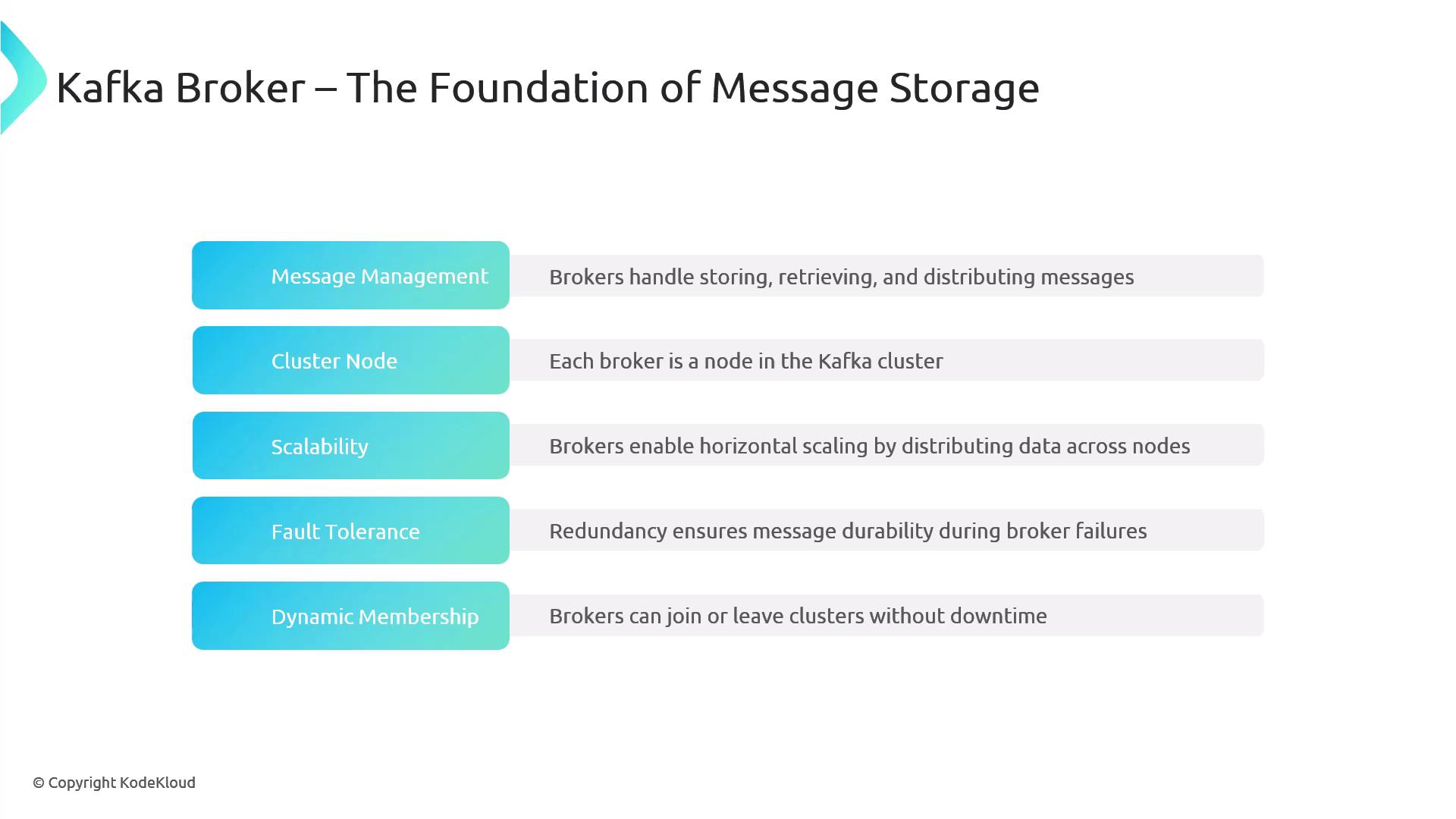

Core Characteristics of Kafka Brokers

Kafka brokers are built for massive throughput, reliability, and seamless scaling. Key attributes include:

| Feature | Description | Benefit |
|---|---|---|
| Message Management | Stores, indexes, and retrieves event streams | Provides reliable delivery, ordered within each partition |
| Cluster Node | Forms a distributed cluster with other brokers | Spreads load and reduces hotspots |
| Scalability | Horizontal growth by adding more brokers | Helps maintain low latency under load |
| Fault Tolerance | Replicates partitions across multiple brokers | Protects against data loss when a broker fails |
| Dynamic Membership | Brokers can join or leave without downtime | Simplifies upgrades and maintenance |
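To see why replication across brokers gives fault tolerance, consider the simplified placement sketch below. It spreads each partition's replicas over consecutive brokers in round-robin fashion and then checks what remains after one broker fails. Kafka's actual assignment logic is more involved (for example, it can take racks into account), so treat the hypothetical `assign_replicas` and `replicas_after_failure` functions as a teaching model only.

```python
from typing import Dict, List


def assign_replicas(num_partitions: int, broker_ids: List[int],
                    replication_factor: int) -> Dict[int, List[int]]:
    """Place each partition's replicas on distinct brokers, round-robin."""
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor cannot exceed the number of brokers")
    n = len(broker_ids)
    return {
        p: [broker_ids[(p + r) % n] for r in range(replication_factor)]
        for p in range(num_partitions)
    }


def replicas_after_failure(assignment: Dict[int, List[int]],
                           failed_broker: int) -> Dict[int, List[int]]:
    """Return the replicas that are still available once one broker is down."""
    return {
        p: [b for b in replicas if b != failed_broker]
        for p, replicas in assignment.items()
    }


assignment = assign_replicas(num_partitions=6, broker_ids=[1, 2, 3],
                             replication_factor=2)
survivors = replicas_after_failure(assignment, failed_broker=2)
```

With a replication factor of 2 on three brokers, every partition still has one live replica after a single broker failure. With a replication factor of 1, the partitions hosted on the failed broker would be unavailable.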

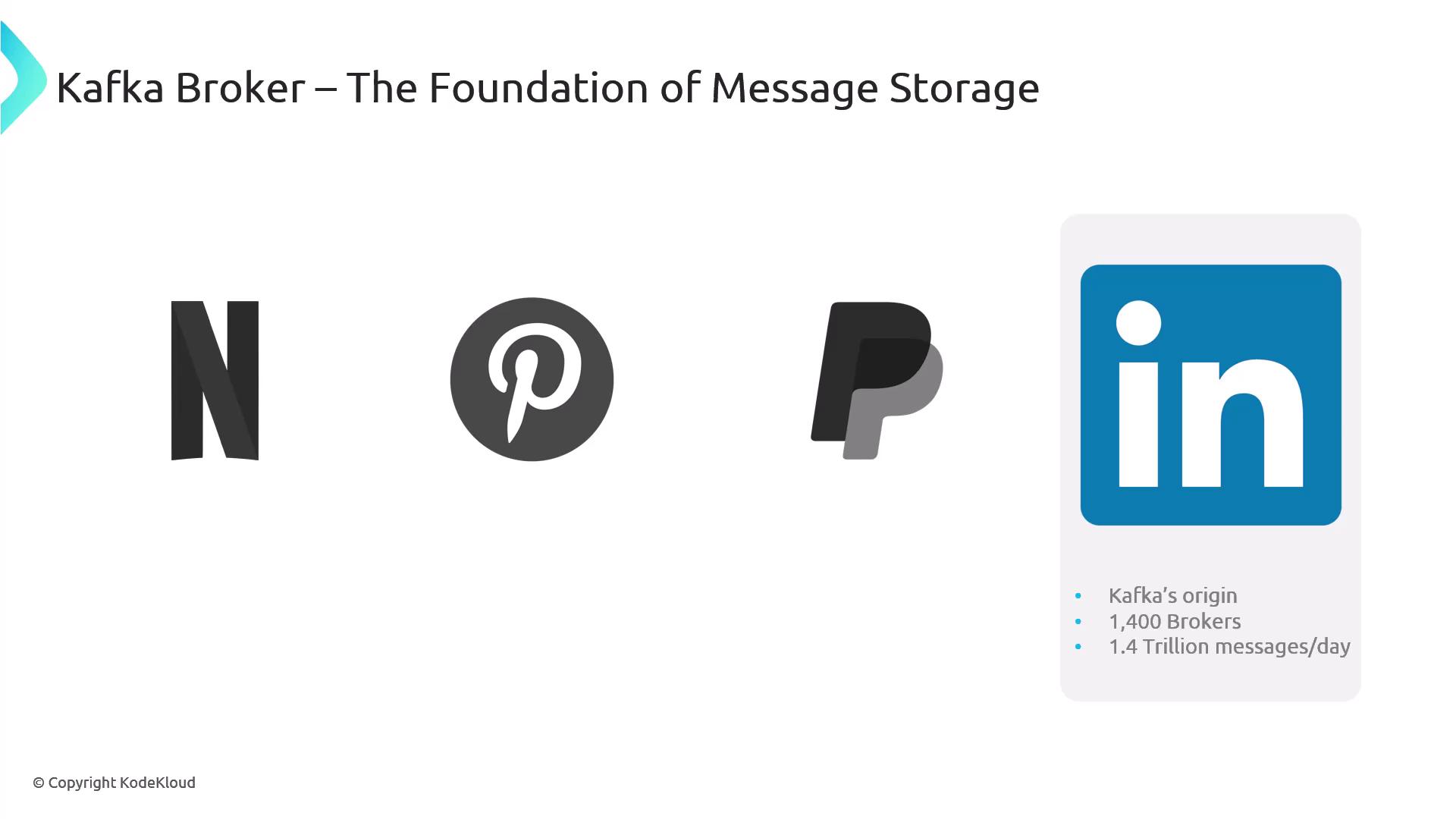

Real-World Deployments

Kafka is proven at massive scale. Here are a few examples:

| Company | Brokers | Messages/Day | Highlights |
|---|---|---|---|
| Netflix | ~4,000 | >1 trillion | Powers streaming telemetry across 50 clusters |
| – | – | ~4 trillion @ 50 GB/s | Stores exabytes of events in Amazon S3 |
| PayPal | ~1,500 | – | Maintains 99.99% uptime for global transactions |
| LinkedIn | ~1,400 | ~1.4 trillion | Kafka’s originator; drives core data pipelines |

Warning

Under-provisioning brokers can lead to throttling or data loss. Always plan capacity based on peak throughput and replication factor.
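A back-of-the-envelope estimate can make this warning concrete. The sketch below assumes a very simple model: every byte produced is written once per replica, each broker can sustain a fixed write rate, and some headroom is kept free. The function name `brokers_needed`, the 70% headroom default, and the example numbers are all assumptions for illustration. Real sizing also has to account for consumer reads, disk capacity, retention, and network limits.

```python
import math


def brokers_needed(peak_ingress_mb_s: float, replication_factor: int,
                   per_broker_write_mb_s: float, headroom: float = 0.7) -> int:
    """Estimate broker count from peak write throughput (simplified model)."""
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    # Each produced byte is written once per replica across the cluster.
    total_write_mb_s = peak_ingress_mb_s * replication_factor
    # Only plan to use a fraction of each broker's sustained write rate.
    usable_per_broker = per_broker_write_mb_s * headroom
    by_throughput = math.ceil(total_write_mb_s / usable_per_broker)
    # A cluster also needs at least as many brokers as the replication factor.
    return max(replication_factor, by_throughput)


# Hypothetical numbers: 300 MB/s peak ingress, replication factor 3,
# 100 MB/s sustained writes per broker, 70% target utilization.
estimate = brokers_needed(300, 3, 100)
```

In this example the cluster must absorb 900 MB/s of writes at 70 MB/s usable per broker, which rounds up to 13 brokers. Sizing against average rather than peak throughput would give a smaller number and leave no margin during spikes.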

These deployments showcase Kafka’s ability to scale horizontally, providing a robust, fault-tolerant messaging backbone for modern event-driven architectures.

In this lesson, you’ve learned how Kafka brokers form the bedrock of message storage, ensuring durable, scalable, and reliable event streaming. In the next chapter, we’ll explore Kafka topics and partitions in depth.
