Event Streaming with Kafka

Building Blocks of Kafka

Understanding Kafka Topics: Organizing Your Data Streams

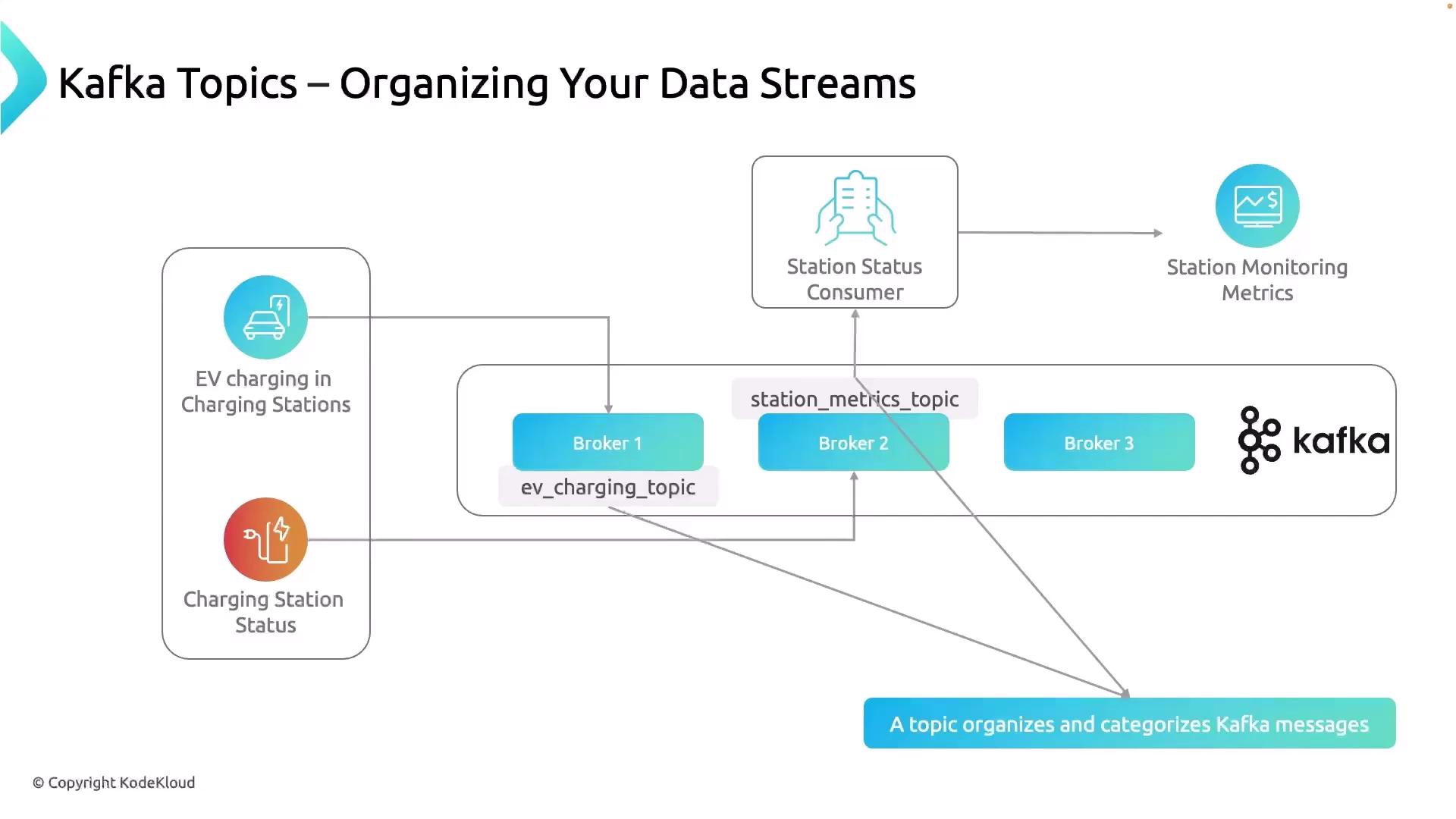

Kafka brokers handle the storage and distribution of events in your cluster, but to keep related messages together and make access easier, you need topics. Topics provide logical categories for producers to publish messages and for consumers to subscribe to specific streams.

In our EV charging station example:

- The charging hardware publishes events to `EV_charging_topic`.
- The station status system streams metrics to `station_metrics_topic`.

A topic is simply a named channel that groups related messages.
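To make the "named channel" idea concrete, here is a minimal in-memory sketch. It is not a Kafka client and uses no Kafka API; `ToyBroker`, `publish`, and `read_all` are hypothetical names invented for this illustration, and the event payloads are made up. Only the two topic names come from the example above.

```python
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a broker (illustration only, not a Kafka API)."""

    def __init__(self):
        # topic name -> list of messages, kept in arrival order
        self.topics = defaultdict(list)

    def publish(self, topic, message):
        # Producers address a topic by name, never a specific consumer
        self.topics[topic].append(message)

    def read_all(self, topic):
        # Return a copy so callers cannot modify the stored list
        return list(self.topics[topic])


broker = ToyBroker()
broker.publish("EV_charging_topic", {"station": "S1", "event": "charge_started"})
broker.publish("station_metrics_topic", {"station": "S1", "temperature_c": 41})
broker.publish("EV_charging_topic", {"station": "S1", "event": "charge_stopped"})

charging_events = broker.read_all("EV_charging_topic")
metrics = broker.read_all("station_metrics_topic")
print(len(charging_events), len(metrics))  # 2 1
```

Messages published to one topic never appear in the other, which is the whole point of grouping by name.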

Topics themselves are not physical servers—they’re configurations within your brokers. When you bring up a Kafka cluster, you launch a set of brokers and then define topics on top of them. Together, brokers and topics form the foundation for producing and consuming events.

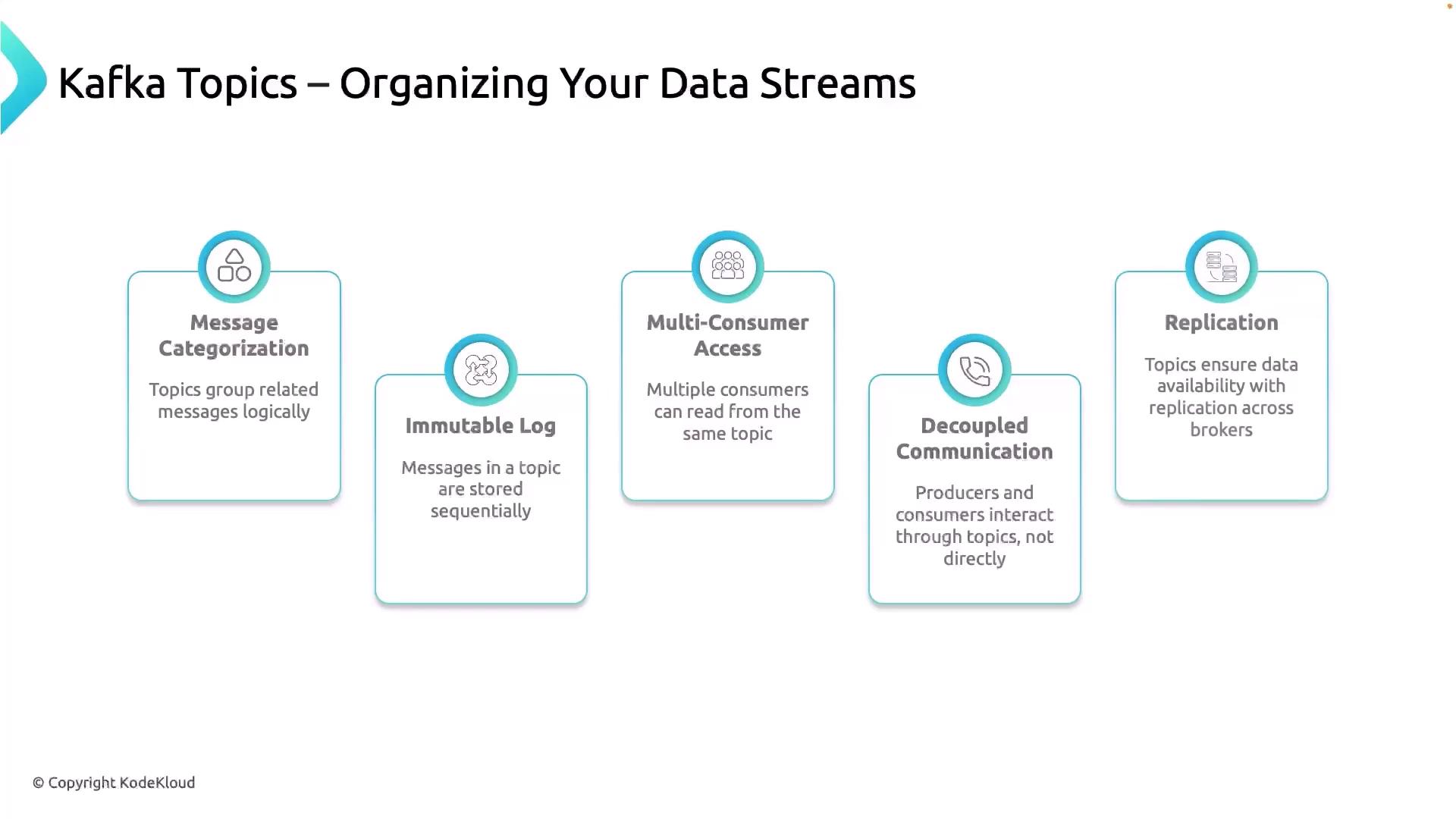

Key Features of Kafka Topics

| Feature | Description | Benefit |
|---|---|---|
| Message Categorization | Logical grouping of related messages | Keeps streams organized by data type |
| Immutable Log | Append-only, time-ordered storage | Guarantees ordered, auditable event replay |
| Multi-Consumer Access | Independent consumer groups with individual offsets | Enables parallel processing without conflicts |
| Decoupled Communication | Producers and consumers operate independently | Simplifies scaling and fault isolation |
| Replication | Partitions duplicated across multiple brokers | Provides high availability and durability |

1. Message Categorization

Each topic acts as a container for messages of a similar kind—whether they’re logs, IoT data, or transaction records. You can create dozens or even hundreds of topics to keep your data streams organized.

Warning

Managing tens of thousands of topics can increase metadata overhead on the brokers. Monitor performance and adjust num.network.threads or num.io.threads as needed.

2. Immutable Log

Internally, each partition of a topic is stored as an append-only log. Once a message is written, it cannot be altered; new messages can only be appended. Consumers therefore read events in the order they were written. This ordering guarantee holds within a partition, not across all partitions of a topic.
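The append-only behavior can be sketched with a small class that exposes only append and read operations. This is a simplified model of a single log, not Kafka's storage format; `AppendOnlyLog` and its methods are hypothetical names chosen for the example.

```python
class AppendOnlyLog:
    """Toy append-only log: records get increasing offsets and are never changed."""

    def __init__(self):
        self._records = []

    def append(self, value):
        # The offset of a record is its position in the log
        offset = len(self._records)
        self._records.append(value)
        return offset

    def read_from(self, start_offset):
        # Yield (offset, value) pairs in the order they were appended
        return [
            (offset, value)
            for offset, value in enumerate(self._records[start_offset:], start=start_offset)
        ]


log = AppendOnlyLog()
offsets = [log.append(v) for v in ["plugged_in", "charging", "unplugged"]]
replay = log.read_from(1)
print(offsets)  # [0, 1, 2]
print(replay)   # [(1, 'charging'), (2, 'unplugged')]
```

The class deliberately has no update or delete method. A reader that starts at any offset sees the same records in the same order every time, which is what makes replay possible.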

Note

Use retention settings (retention.ms, retention.bytes) to control how long messages remain available in the log. Align retention with your consumers’ processing speed to prevent data loss.
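As a rough sketch of how time-based and size-based retention interact, the function below prunes a list of records. It is a simplification: Kafka applies `retention.bytes` per partition and deletes whole log segments rather than individual records. The function name `apply_retention` and the record layout are assumptions made for this example.

```python
def apply_retention(records, now_ms, retention_ms=None, retention_bytes=None):
    """Return the records that survive retention.

    records: list of (timestamp_ms, payload_bytes) tuples, oldest first.
    """
    kept = list(records)

    if retention_ms is not None:
        # Time limit: drop records older than retention_ms
        kept = [r for r in kept if now_ms - r[0] <= retention_ms]

    if retention_bytes is not None:
        # Size limit: drop the oldest records until the total fits
        total = sum(len(payload) for _, payload in kept)
        while kept and total > retention_bytes:
            _, payload = kept.pop(0)
            total -= len(payload)

    return kept


records = [(1000, b"aaaa"), (2000, b"bbbb"), (3000, b"cccc")]
by_time = apply_retention(records, now_ms=3500, retention_ms=2000)
by_size = apply_retention(records, now_ms=3500, retention_bytes=4)
print(by_time)  # [(2000, b'bbbb'), (3000, b'cccc')]
print(by_size)  # [(3000, b'cccc')]
```

A consumer that falls further behind than the retention window finds the oldest records already gone, which is why retention should be sized against consumer lag.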

3. Multi-Consumer Access

Kafka tracks each consumer group’s offset—the position in the log it has read. Multiple groups can independently consume the same topic, enabling use cases such as real-time analytics, monitoring dashboards, and batch processing on the same data.
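The following sketch models per-group offsets on a single-partition topic. It is an in-memory illustration, not the Kafka consumer API: `ToyTopic`, `poll`, and the group names are hypothetical, and committing the offset automatically on every poll is a simplification.

```python
class ToyTopic:
    """Single-partition toy topic with one committed offset per consumer group."""

    def __init__(self):
        self.log = []
        self.committed = {}  # group id -> next offset to read

    def publish(self, message):
        self.log.append(message)

    def poll(self, group_id, max_records=10):
        # Each group resumes from its own position; other groups are unaffected
        start = self.committed.get(group_id, 0)
        batch = self.log[start:start + max_records]
        self.committed[group_id] = start + len(batch)
        return batch


topic = ToyTopic()
for event in ["e0", "e1", "e2"]:
    topic.publish(event)

first = topic.poll("analytics", max_records=2)
dashboard = topic.poll("dashboard")
second = topic.poll("analytics")
print(first, dashboard, second)  # ['e0', 'e1'] ['e0', 'e1', 'e2'] ['e2']
```

Reading does not remove anything from the log, so the slower `analytics` group and the faster `dashboard` group both see every event.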

4. Decoupled Communication

Producers push messages to a topic without knowing who will consume them. Consumers pull messages at their own pace. This decoupling allows producers and consumers to scale, update, or fail independently without impacting each other.

5. Replication

To ensure fault tolerance, Kafka replicates each partition of a topic across multiple brokers. If the broker hosting a partition's leader fails, another replica can take over, and messages that had already been copied to an in-sync replica remain available. We’ll cover replication in more detail in a future lesson.
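A toy model of leader failover is sketched below. It assumes every write is copied synchronously to all live replicas, which is stricter than Kafka's default behavior, and it skips the real leader election protocol. `ToyPartition`, `fail_broker`, and the broker ids are hypothetical names for this illustration.

```python
class ToyPartition:
    """Toy replicated partition: one log copy per broker, one leader."""

    def __init__(self, broker_ids):
        self.replicas = {broker_id: [] for broker_id in broker_ids}
        self.leader = broker_ids[0]

    def append(self, message):
        # Simplification: write to every live replica before returning
        for replica_log in self.replicas.values():
            replica_log.append(message)

    def fail_broker(self, broker_id):
        # Remove the failed broker's copy and pick a new leader if needed
        del self.replicas[broker_id]
        if broker_id == self.leader:
            if not self.replicas:
                raise RuntimeError("no replicas left for this partition")
            self.leader = next(iter(self.replicas))

    def read_all(self):
        # Reads are served from the current leader's copy
        return list(self.replicas[self.leader])


partition = ToyPartition(broker_ids=[1, 2, 3])
partition.append("charge_started")
partition.append("charge_stopped")

partition.fail_broker(1)
survived = partition.read_all()
print(partition.leader, survived)  # 2 ['charge_started', 'charge_stopped']
```

Because broker 2 already held a full copy, it can serve the same messages after broker 1 disappears.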

These five features—categorization, immutability, multi-consumer access, decoupling, and replication—make Kafka topics the backbone of scalable, real-time event streaming.

Next Steps: Diving into Partitions

Topics alone set up your logical streams. In the next lesson, we’ll explore partitions—how they distribute data for parallelism and high throughput.
