Kafka Replication: Ensuring Data Reliability and Fault Tolerance

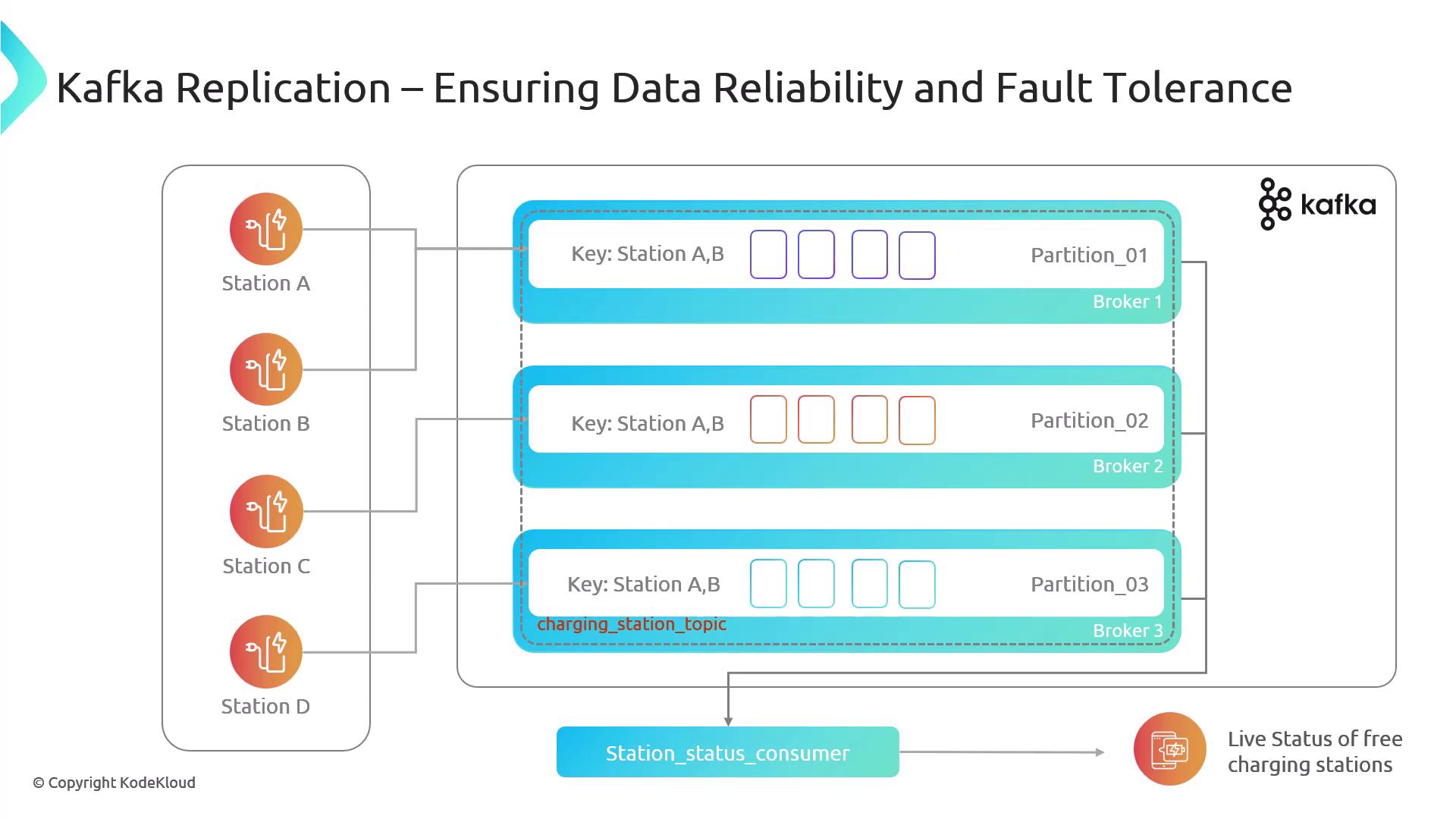

Welcome back! In the previous lesson, we explored how partitions let Apache Kafka spread a topic across brokers for scalability and parallelism. Now, we’ll dive into replication, Kafka’s key mechanism for data durability and fault tolerance.

Example: EV Charging Network

Consider an EV charging network where each charging station publishes events (status updates, metrics, and more) to two topics:

- charging-station-topic
- station-metrics-topic

Your monitoring dashboards consume from station-metrics-topic to display live station status.

Note

In this example, we assume three brokers (broker1, broker2, broker3) and three partitions per topic.

Topic Creation Example

```bash
bin/kafka-topics.sh --create \
  --topic charging-station-topic \
  --partitions 3 \
  --replication-factor 1 \
  --bootstrap-server broker1:9092
```

With a replication factor of 1, each partition exists on exactly one broker.

Imagine broker2 fails. The partition it hosts becomes unavailable: producers can no longer write to it, consumers can no longer read from it, and the dashboards that depend on it go dark.

Warning

Without replication, any single broker failure leads to data unavailability for the partitions it hosts.
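A small bash sketch makes the failure scenario concrete. It is a simplified model, not Kafka code: the hypothetical `offline_partitions` function takes a failed broker plus one `partition=broker,broker,...` placement per partition, and prints the partitions left with no copy on a surviving broker. It ignores in-sync replica details, and the placements shown are assumed for illustration.

```bash
#!/usr/bin/env bash
# Simplified model (hypothetical helper, not part of Kafka): which partitions lose
# every copy when one broker fails? In-sync replica (ISR) details are ignored.
# offline_partitions <failed-broker> <partition>=<broker>[,<broker>...] ...
offline_partitions() {
  local failed=$1 entry p brokers b survivors
  shift
  for entry in "$@"; do
    p=${entry%%=*}                 # text before "=": partition number
    brokers=${entry#*=}            # text after "=": comma-separated brokers
    survivors=0
    for b in ${brokers//,/ }; do   # unquoted on purpose: split into broker names
      if [[ $b != "$failed" ]]; then
        survivors=$((survivors + 1))
      fi
    done
    if ((survivors == 0)); then
      echo "$p"
    fi
  done
}

# Replication factor 1 (assumed placement): broker2 failing takes partition 1 offline.
offline_partitions broker2 0=broker1 1=broker2 2=broker3    # prints 1
# Replication factor 3: every partition still has two surviving copies.
offline_partitions broker2 0=broker1,broker2,broker3 1=broker2,broker3,broker1 2=broker3,broker1,broker2   # prints nothing
```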

Why Replication Matters

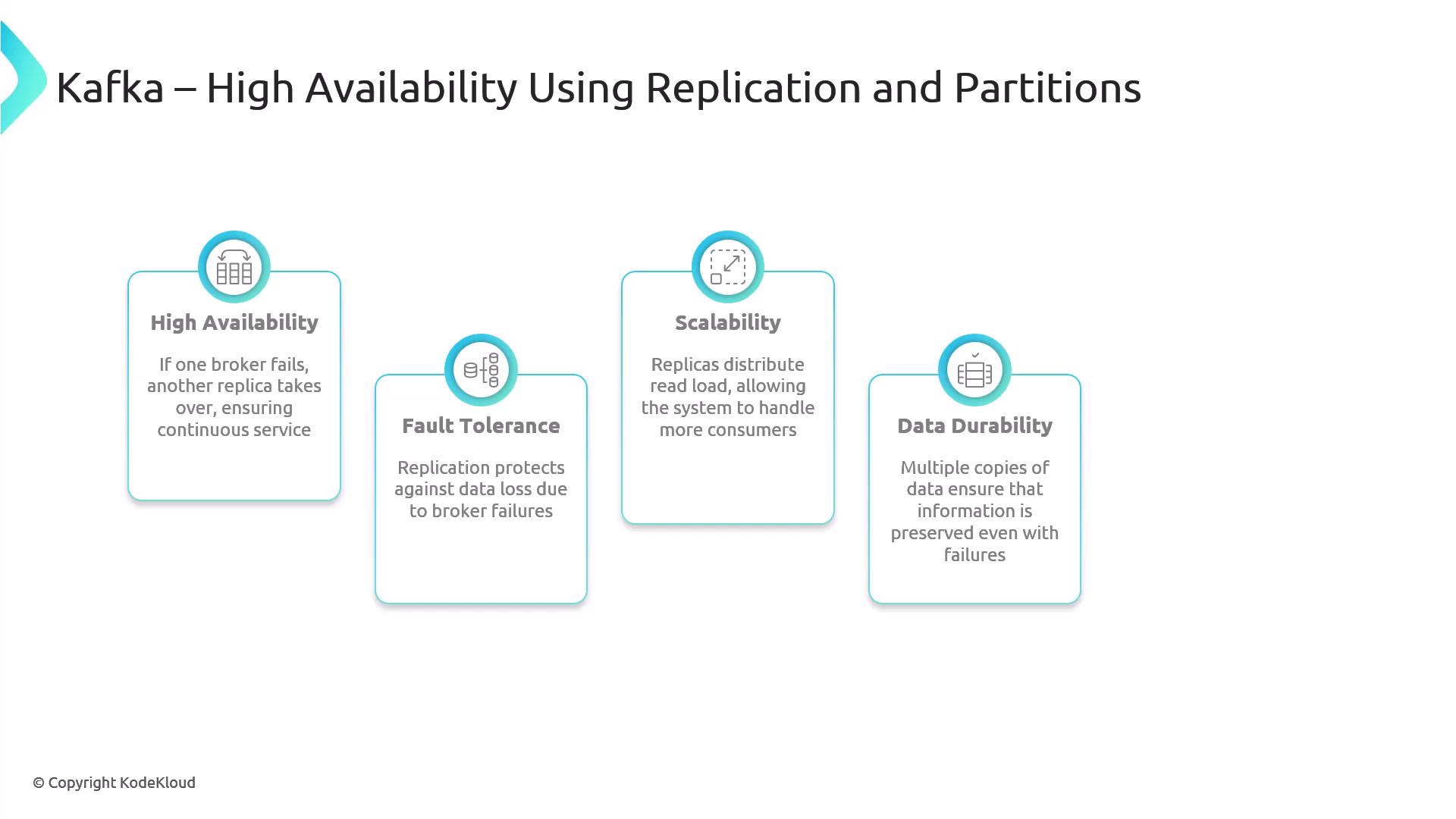

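Replication copies each partition across multiple brokers. This provides:

| Benefit | Description |
|---|---|
| High Availability | When a broker fails, an in-sync follower is automatically promoted to leader for each partition that broker was leading. |
| Fault Tolerance | Multiple copies prevent data loss if a broker crashes or its hardware fails. |
| Read Scalability | By default, consumers read from the partition leader; since Kafka 2.4, consumers can be configured for rack-aware follower fetching, reading from a nearby replica to spread read load. |
| Data Durability | With the producer setting `acks=all`, a message is acknowledged only after every replica in the in-sync replica set (ISR) has it; the `min.insync.replicas` setting controls how small the ISR may shrink before such writes are rejected. |
| Throughput | Leaders for different partitions live on different brokers, so a topic's reads and writes are spread across the cluster; replication itself adds some network and disk overhead rather than increasing write throughput. |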
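The availability and durability rows interact through two settings: the producer's `acks` and the topic's `min.insync.replicas`. The bash sketch below computes a rough failure budget for one partition. It assumes every replica is in the ISR before failures start, unclean leader election is disabled, and `min.insync.replicas` does not exceed the replication factor; the `failure_budget` function is a hypothetical helper, not a Kafka tool.

```bash
#!/usr/bin/env bash
# Back-of-the-envelope failure budget for one partition (hypothetical helper).
# Assumes all replicas start in sync, unclean leader election is disabled,
# and min.insync.replicas <= replication factor.
# failure_budget <replication-factor> <min.insync.replicas>
failure_budget() {
  local rf=$1 min_isr=$2
  # A leader can still be elected while at least one in-sync replica survives.
  echo "max_failures_with_leader=$((rf - 1))"
  # acks=all writes are rejected once the ISR shrinks below min.insync.replicas.
  echo "max_failures_for_acks_all=$((rf - min_isr))"
}

failure_budget 3 2
# prints:
# max_failures_with_leader=2
# max_failures_for_acks_all=1
```

With a replication factor of 3 and `min.insync.replicas=2`, the partition keeps accepting `acks=all` writes through one broker failure and keeps a readable leader through two.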

Replication in Action

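Let’s raise the topic’s replication factor to 3. `kafka-topics.sh --alter` cannot change an existing topic's replication factor; instead, you submit a reassignment plan, a JSON file listing the replica brokers for each partition (here, a file named `increase-rf.json`), to `kafka-reassign-partitions.sh`:

```bash
bin/kafka-reassign-partitions.sh \
  --bootstrap-server broker1:9092 \
  --reassignment-json-file increase-rf.json \
  --execute
```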
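`kafka-reassign-partitions.sh`, the tool that changes an existing topic's replica assignment, reads a JSON plan listing the replica brokers of every partition. Rather than writing that plan by hand, it can be generated. The bash sketch below is a hypothetical helper, not part of Kafka; it assumes broker1, broker2, and broker3 were started with `broker.id` 1, 2, and 3, and places replicas round-robin so that preferred leaders rotate across brokers.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Kafka): builds a reassignment plan that places
# <rf> replicas of each partition on the given broker IDs, round-robin.
# Assumes broker1..broker3 were started with broker.id 1..3.
set -euo pipefail

# make_plan <topic> <partition-count> <replication-factor> <broker-id>...
make_plan() {
  local topic=$1 partitions=$2 rf=$3
  shift 3
  local -a brokers=("$@") entries=() replicas=()
  local n=${#brokers[@]} p r
  ((rf <= n)) || { echo "replication factor exceeds broker count" >&2; return 1; }
  local IFS=,                      # makes "${array[*]}" join elements with commas
  for ((p = 0; p < partitions; p++)); do
    replicas=()
    for ((r = 0; r < rf; r++)); do
      # Partition p starts at broker index p, so the first (preferred) replica rotates.
      replicas+=("${brokers[(p + r) % n]}")
    done
    entries+=("{\"topic\":\"$topic\",\"partition\":$p,\"replicas\":[${replicas[*]}]}")
  done
  printf '{"version":1,"partitions":[%s]}\n' "${entries[*]}"
}

make_plan charging-station-topic 3 3 1 2 3 > increase-rf.json
```

For three partitions this assigns replicas `[1,2,3]`, `[2,3,1]`, and `[3,1,2]`. After submitting the plan with `kafka-reassign-partitions.sh --execute`, running the tool again with `--verify` reports whether the new replicas have finished copying.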

For partition 1, the resulting assignment might be:

- Leader on broker1
- Followers on broker2 and broker3

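Broker Failure (Non-Leader):

If broker3 goes offline, the leader on broker1 keeps serving reads and writes, broker2 keeps replicating from it, and broker3 is dropped from the in-sync replica set (ISR). Kafka does not automatically copy the partition to another broker: the partition stays under-replicated until broker3 comes back, catches up from the leader, and rejoins the ISR, or until an administrator reassigns its replicas.

Leader Failure:

If broker1 (the leader) fails, the controller elects one of the in-sync followers (e.g., broker2) as the new leader. Producers and consumers refresh their metadata and reconnect to the new leader automatically, keeping downtime short.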
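The election rule can be modeled in a few lines of bash. This is a simplified sketch, assuming unclean leader election is disabled (the default): the new leader is the first replica, in assignment order, that is both alive and in the ISR. The `elect_leader` function and its space-separated arguments are hypothetical, chosen for illustration.

```bash
#!/usr/bin/env bash
# Simplified model of Kafka's leader election with unclean leader election
# disabled: choose the first assigned replica that is alive and in the ISR.
# elect_leader "<assigned replicas>" "<ISR>" "<live brokers>"  (space-separated names)
elect_leader() {
  local assigned=$1 isr=$2 live=$3 b
  for b in $assigned; do           # unquoted on purpose: iterate over the names
    if [[ " $isr " == *" $b "* && " $live " == *" $b "* ]]; then
      echo "$b"
      return 0
    fi
  done
  echo "none"                      # no eligible replica: the partition stays offline
}

# broker1 (leader) fails while all three replicas are in sync:
elect_leader "broker1 broker2 broker3" "broker1 broker2 broker3" "broker2 broker3"   # prints broker2
# broker1 fails after broker2 had already fallen out of the ISR:
elect_leader "broker1 broker2 broker3" "broker1 broker3" "broker2 broker3"           # prints broker3
```

The second case shows why the ISR matters: a follower that has fallen behind is skipped, so the new leader is guaranteed to hold every acknowledged message.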

Conclusion

By combining partitioning with replication, Apache Kafka delivers a fault-tolerant streaming platform: partitions spread the load across brokers, and replicas keep data durable and available when a broker fails. Stay tuned for more Kafka deep dives!
