Offset Management in Kafka

Efficient offset management is critical for building fault-tolerant, exactly-once or at-least-once message-processing applications with Apache Kafka. This guide covers the core concepts, architecture, and best practices to help you track and commit offsets reliably.

Kafka Offsets and the Book Analogy

Imagine reading a book and placing a bookmark on the last page you read. In Kafka, each message within a partition is assigned a unique, sequential offset. Consumers use these offsets to know which messages they've processed and where to resume if they restart.

- Messages in a topic partition → Pages in a book

- Offset → Page number

- Consumer group → Multiple readers sharing a book
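To make the analogy concrete, here is a minimal in-memory sketch in Java. PartitionLog and Bookmark are hypothetical classes, not part of the Kafka client API. Appending a message returns its offset (0, 1, 2, ...), and the bookmark records the offset of the next message to read, which is also how Kafka expresses a committed position.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory model of one topic partition (not the Kafka API).
class PartitionLog {
    private final List<String> messages = new ArrayList<>();

    // Appends a message and returns its offset: 0 for the first message, then 1, 2, ...
    long append(String message) {
        messages.add(message);
        return messages.size() - 1;
    }

    // Reads the message stored at the given offset ("turns to a page number").
    String read(long offset) {
        return messages.get((int) offset);
    }

    // Offset the next appended message will receive (the log-end offset).
    long endOffset() {
        return messages.size();
    }
}

// Hypothetical reader bookmark: holds the offset of the NEXT message to read.
class Bookmark {
    private long nextOffset = 0;

    // Reads every message after the bookmark and moves the bookmark forward.
    List<String> readNew(PartitionLog log) {
        List<String> out = new ArrayList<>();
        while (nextOffset < log.endOffset()) {
            out.add(log.read(nextOffset));
            nextOffset++;
        }
        return out;
    }

    long nextOffset() {
        return nextOffset;
    }
}
```

After three appends, the first readNew call returns all three messages and moves the bookmark to offset 3; a second call returns nothing until another message is appended.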

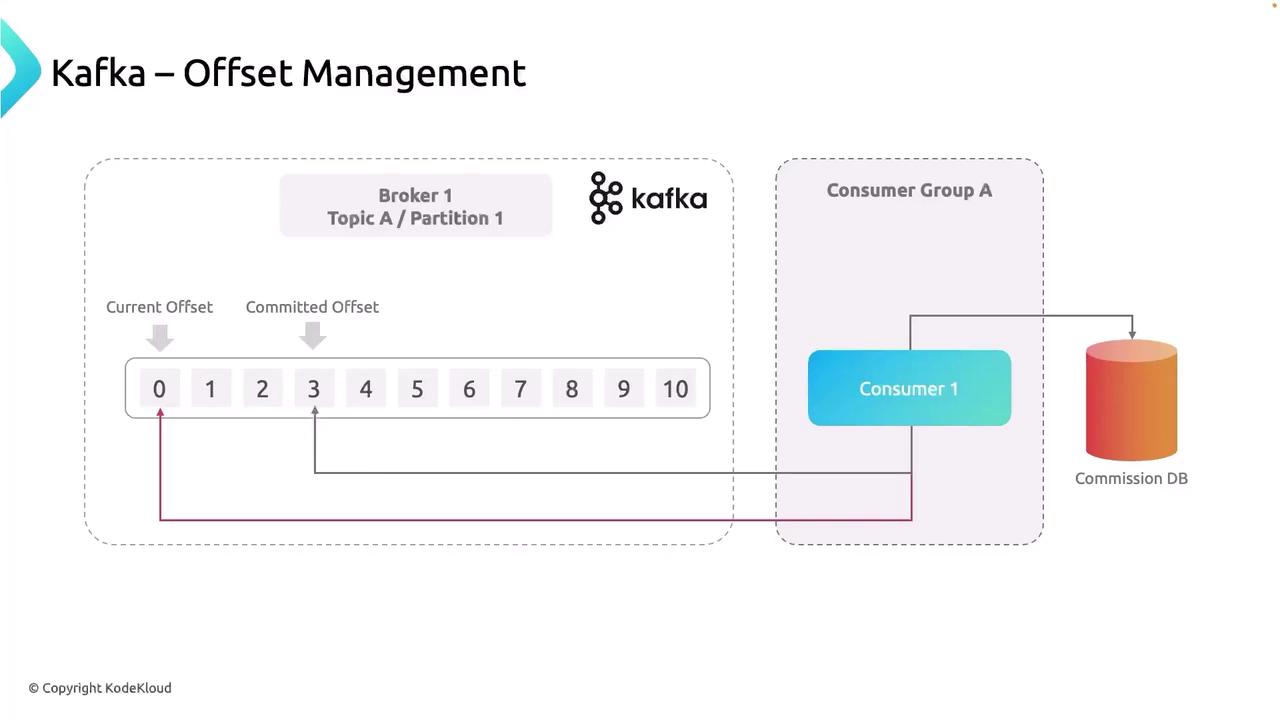

High-Level Architecture

Below is a simple Kafka setup illustrating offset management for a single-partition topic:

- Kafka Broker: Hosts the topic partition and stores incoming messages.

- Topic A: Holds the messages, each stored at a sequential offset starting from 0.

- Consumer Group: One or more consumers that share the load.

- Consumer: Reads messages, processes them (e.g., writes to a commission database), and commits offsets.
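The consumer in this setup could be configured roughly as follows. This sketch only builds a java.util.Properties object using standard Kafka consumer configuration keys; the ConsumerSettings helper, the broker address and the group name are illustrative assumptions. Automatic commits are disabled so the consumer can commit only after its database writes succeed.

```java
import java.util.Properties;

// Hypothetical helper that builds consumer settings for the setup above.
// The keys are standard Kafka consumer configs; the values are illustrative.
class ConsumerSettings {
    static Properties forCommissionWriter(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Consumers that share a group.id form one consumer group.
        props.setProperty("group.id", groupId);
        props.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        // Commit manually, after each batch has been written to the database.
        props.setProperty("enable.auto.commit", "false");
        // With no committed offset yet, start at the earliest offset still retained.
        props.setProperty("auto.offset.reset", "earliest");
        return props;
    }
}
```

The resulting Properties can be passed to the KafkaConsumer constructor from the kafka-clients library. Any consumer started with the same group.id joins the same consumer group.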

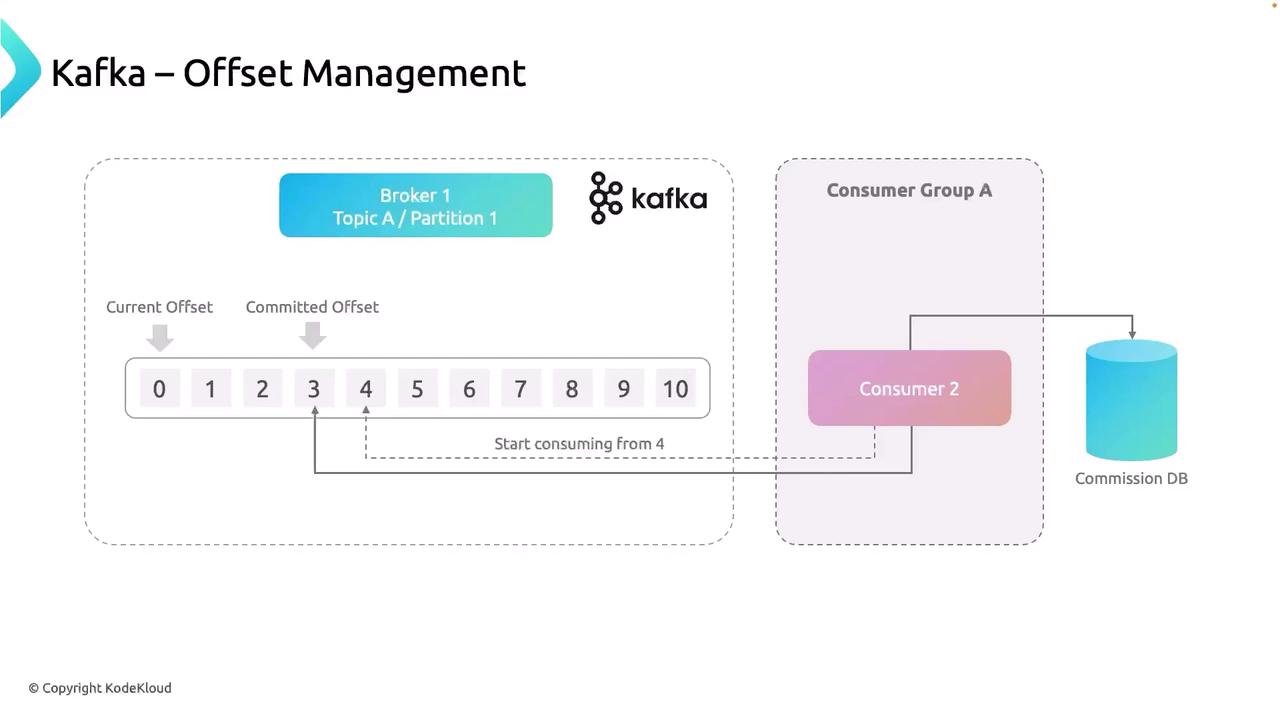

Handling Consumer Failures

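If consumer-1 crashes after processing and committing offset 3, a new consumer (consumer-2) must resume at offset 4 to avoid duplicate entries or data loss. On startup, consumer-2 fetches the group's committed offset for the partition and continues from there. Kafka's convention is to commit the offset of the next record to read, so after offset 3 has been processed the stored committed offset is 4. If the crash happens before the commit, consumer-2 resumes at the older committed offset and reprocesses the uncommitted records.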
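The failover scenario can be sketched as a small single-partition simulation. FailoverSimulation is hypothetical, and its map stands in for the committed offsets Kafka keeps in __consumer_offsets. The key detail it models is that the committed value is the offset of the next record to read.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simplified, hypothetical simulation of a consumer crash and takeover.
// Not the Kafka client API.
class FailoverSimulation {
    // Stands in for the group's committed offsets (what __consumer_offsets stores).
    private final Map<Integer, Long> committed = new HashMap<>();
    // Stands in for the commission database the consumer writes to.
    final List<String> database = new ArrayList<>();

    // Processes up to maxRecords records, committing after each one; stopping early
    // models a crash. Returns the last processed offset, or -1 if none was processed.
    long runConsumer(List<String> partition, int partitionId, int maxRecords) {
        // Resume from the committed offset; start at 0 if nothing was committed yet.
        long position = committed.getOrDefault(partitionId, 0L);
        long lastProcessed = -1;
        for (int i = 0; i < maxRecords && position < partition.size(); i++) {
            database.add(partition.get((int) position)); // process the record
            lastProcessed = position;
            position++;
            // Kafka convention: commit the offset of the NEXT record to read.
            committed.put(partitionId, position);
        }
        return lastProcessed;
    }

    long committedOffset(int partitionId) {
        return committed.getOrDefault(partitionId, 0L);
    }
}
```

With six records, running the first consumer for four records leaves a committed offset of 4; the second run resumes there and processes offsets 4 and 5, so the database ends up with each record exactly once.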

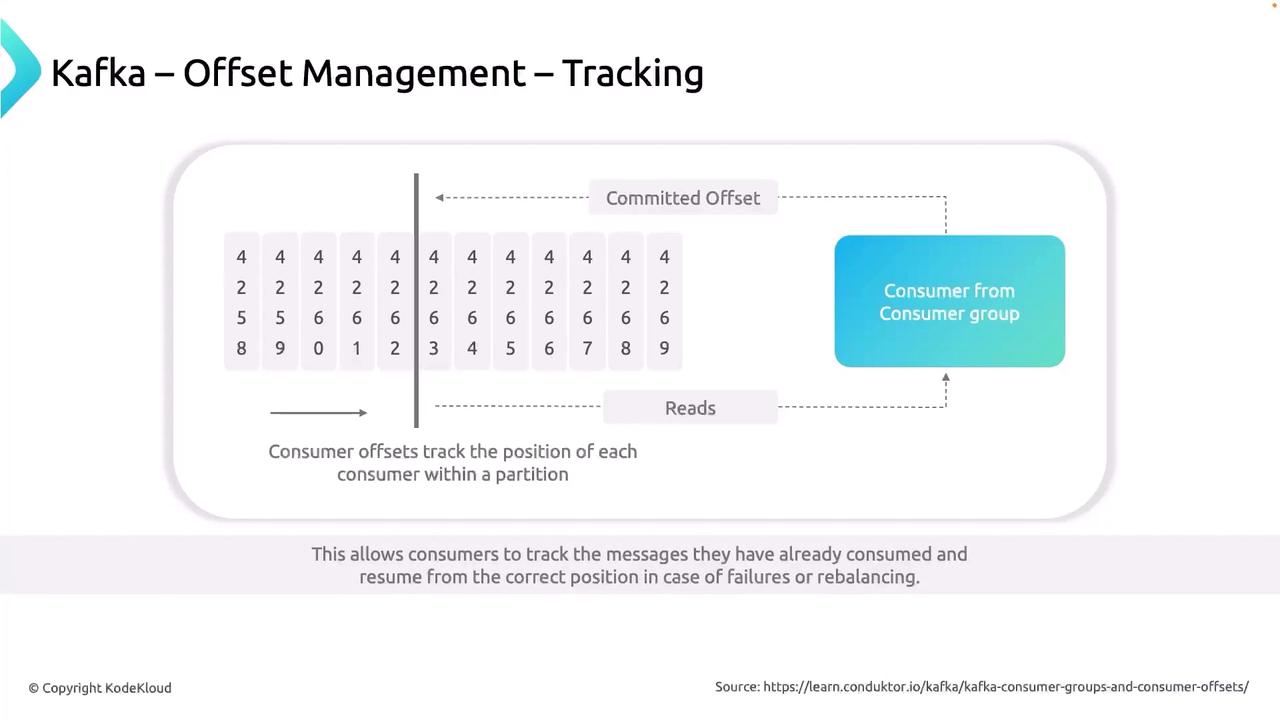

Detailed Offset Tracking

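Kafka brokers don't know which records a consumer has actually processed; the consumer tracks that itself. As records are processed, the consumer updates its highest processed offset. At configurable intervals or at explicit trigger points, it commits the next offset to read (the highest processed offset plus one) to the group coordinator, which stores it in a special internal topic (__consumer_offsets).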
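The bookkeeping described above fits in a few lines. OffsetTracker is a hypothetical helper keyed by partition number: it keeps the highest processed offset per partition and turns it into the value to commit, which is that offset plus one.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper mirroring what a consumer does before committing:
// remember the highest processed offset per partition, then commit "next offset to read".
class OffsetTracker {
    private final Map<Integer, Long> highestProcessed = new HashMap<>();

    // Record that the record at this offset in this partition is fully processed.
    void markProcessed(int partition, long offset) {
        highestProcessed.merge(partition, offset, Long::max);
    }

    // Offsets to commit: highest processed offset + 1 for each partition.
    Map<Integer, Long> offsetsToCommit() {
        Map<Integer, Long> toCommit = new HashMap<>();
        highestProcessed.forEach((partition, offset) -> toCommit.put(partition, offset + 1));
        return toCommit;
    }
}
```

In the Java client, the same offsets would be committed by passing a Map<TopicPartition, OffsetAndMetadata> to consumer.commitSync(...), building each value as new OffsetAndMetadata(record.offset() + 1).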

Note

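Committed offsets are stored in the __consumer_offsets topic by default. When automatic commits are enabled (enable.auto.commit=true, the Java client's default), the auto.commit.interval.ms consumer setting controls how often they happen; it defaults to 5000 ms.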
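A sketch of the automatic-commit settings, assuming the Properties-based configuration style used by the Java client. AutoCommitSettings is a hypothetical helper; the interval value is up to the caller.

```java
import java.util.Properties;

// Hypothetical helper: returns a copy of the settings with automatic commits enabled.
class AutoCommitSettings {
    static Properties enableAutoCommit(Properties base, long intervalMs) {
        Properties props = new Properties();
        props.putAll(base); // copy, so the caller's settings are not modified
        props.setProperty("enable.auto.commit", "true");
        // Commit roughly every intervalMs milliseconds; the client checks this during poll().
        props.setProperty("auto.commit.interval.ms", Long.toString(intervalMs));
        return props;
    }
}
```

For example, enableAutoCommit(base, 1000) commits roughly once per second instead of every five seconds.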

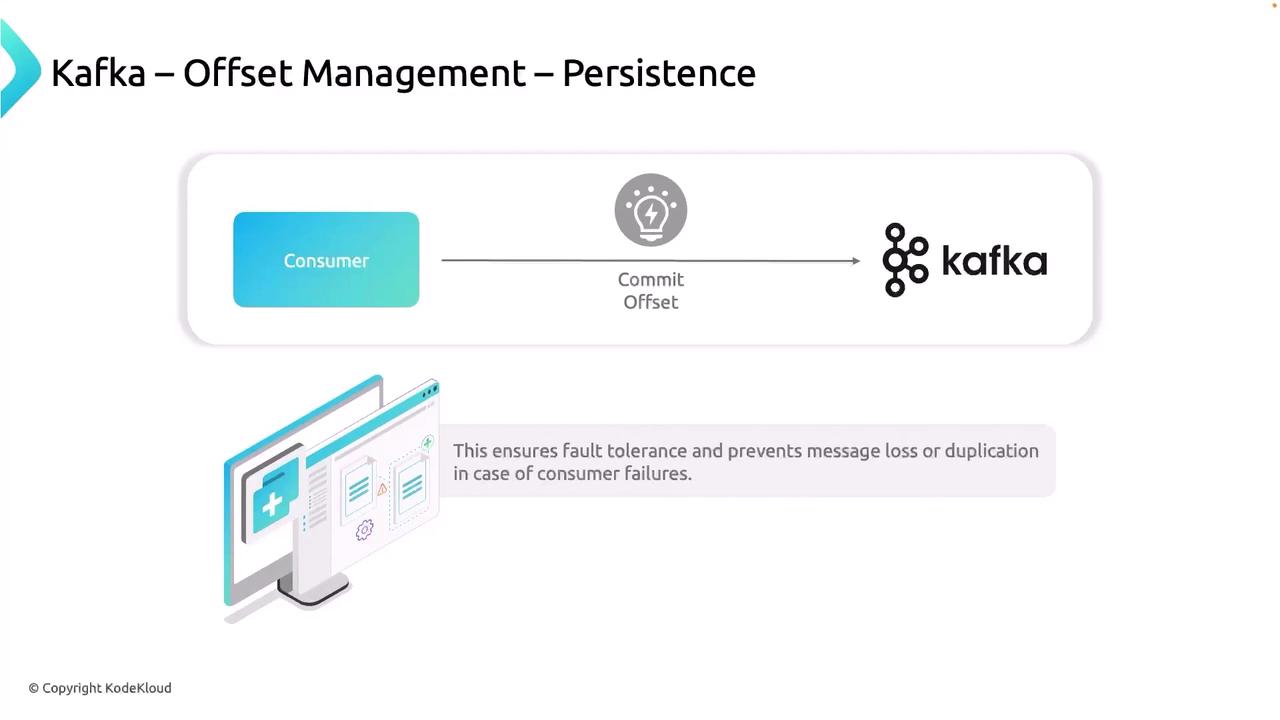

Offset Persistence Strategies

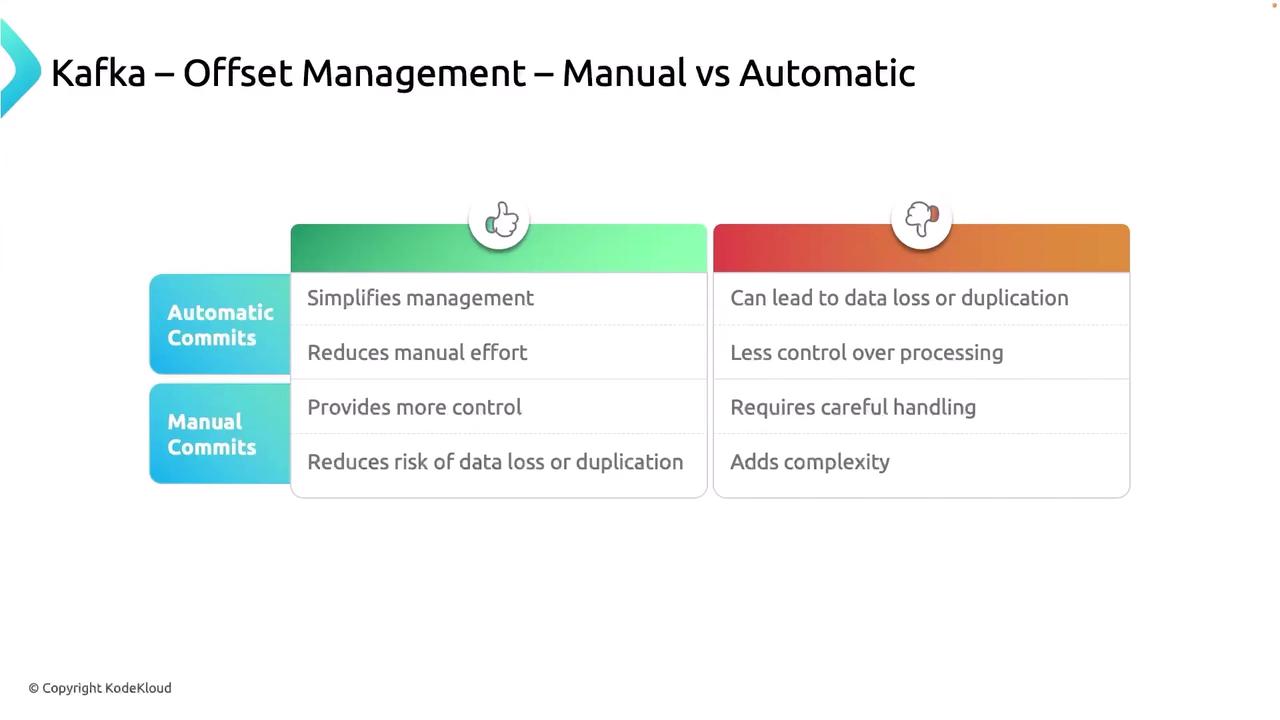

You can choose between automatic and manual offset commits depending on your fault-tolerance and performance needs.

| Commit Type | Pros | Cons |
|---|---|---|
| Automatic Commits | Less boilerplate code | Potential data loss or duplication on failure |
| Manual Commits | Precise control | Requires additional error handling logic |

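Code Example: Manual Offset Commit

```java
// Assumes "consumer" is a KafkaConsumer<String, String> created with enable.auto.commit=false
while (true) {
    ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
    // Process records...
    consumer.commitSync(); // Commits the offsets from the last poll(); blocks until acknowledged
}
```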

Warning

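With automatic commits, a failure between fetching records and committing offsets can cause messages to be reprocessed (if the crash comes before the commit) or skipped (if the commit covers records whose processing had not finished). Use manual commits when you need exact control over when offsets are committed.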
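The two failure modes in the warning come from the order of committing and processing. The hypothetical CommitOrderSimulation below processes one batch starting at offset 0, crashes partway through, and restarts from the committed offset. It is a simplified model, not the Kafka client's actual commit logic.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simulation of where a crash lands relative to the commit.
// Not the Kafka API: one batch of records at offsets 0 .. batchSize-1.
class CommitOrderSimulation {
    // Returns every offset processed across the crashed run and the restarted run.
    // commitBeforeProcessing: commit the whole batch first, then process it.
    // crashAfter: records processed before the crash (assumed <= batchSize).
    static List<Long> run(int batchSize, boolean commitBeforeProcessing, int crashAfter) {
        List<Long> processed = new ArrayList<>();
        long committed = 0; // next offset to read, as stored for the group

        // First run.
        if (commitBeforeProcessing) {
            committed = batchSize; // offsets committed before any work is done
        }
        for (long offset = 0; offset < crashAfter; offset++) {
            processed.add(offset);
        }
        // The crash happens here, so a "commit after processing" step never runs.

        // Restart: a new consumer resumes at the committed offset and finishes the batch.
        for (long offset = committed; offset < batchSize; offset++) {
            processed.add(offset);
        }
        return processed;
    }
}
```

With a batch of five records and a crash after two, committing first loses offsets 2 through 4 (at-most-once), while processing first replays offsets 0 and 1 (at-least-once).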

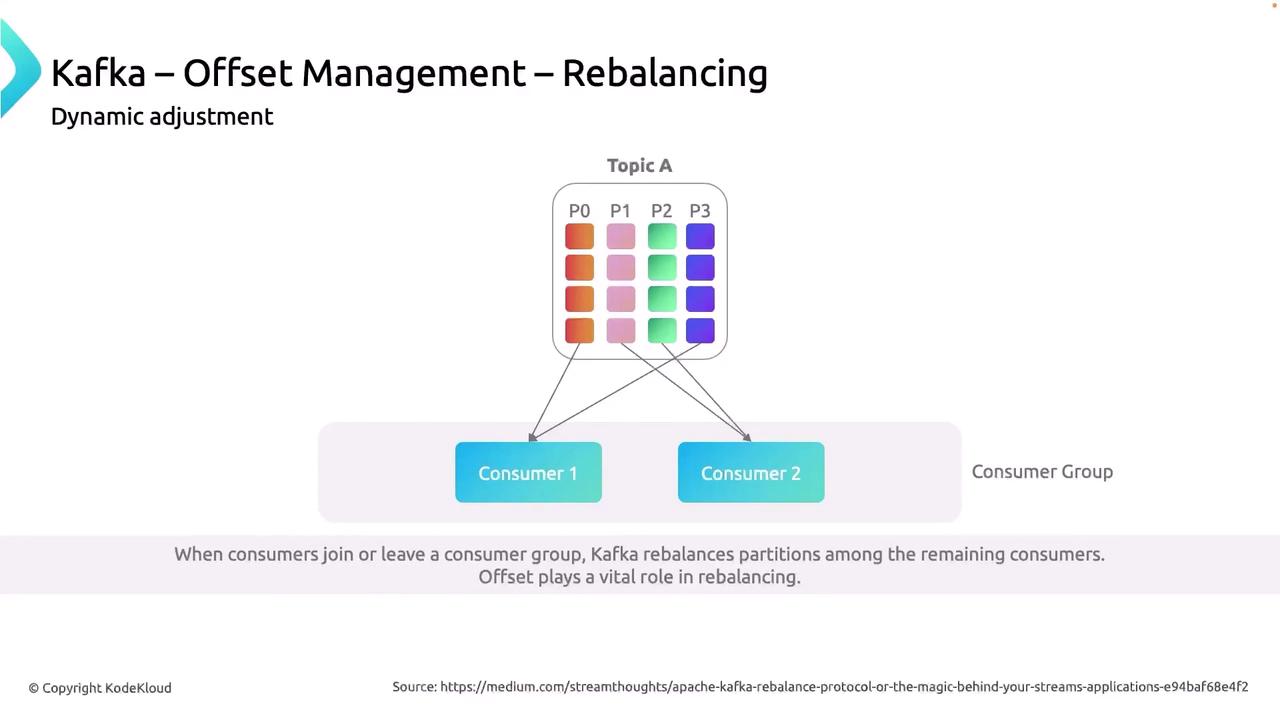

Consumer Group Rebalancing

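When consumers join or leave a group, including when a consumer crashes and stops sending heartbeats, Kafka reassigns the topic's partitions among the active members. After rebalancing, each consumer resumes its newly assigned partitions from their last committed offsets, so no committed progress is lost; records that were processed but not yet committed before the rebalance are delivered again.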

Consider a topic with four partitions consumed by two consumers, where Consumer 2 fails:

| State | Consumer 1 | Consumer 2 |
|---|---|---|
| Before failure | Partitions 0 & 3 | Partitions 1 & 2 |
| After failure | Partitions 0 & 3, plus 1 & 2 (reassigned) | None (failed) |
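The reassignment in the table can be reproduced with a simplified model. RebalanceSimulation is hypothetical: surviving consumers keep their partitions and the failed consumer's partitions are handed out round-robin. Kafka's real assignment strategies (range, round-robin, sticky, cooperative-sticky) follow their own rules, and each new owner would start from the partition's last committed offset.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified, hypothetical model of moving a failed consumer's partitions to survivors.
// Not one of Kafka's assignors.
class RebalanceSimulation {
    // assignment maps consumer -> partitions; returns the new assignment without `failed`.
    static Map<String, List<Integer>> removeConsumer(Map<String, List<Integer>> assignment, String failed) {
        Map<String, List<Integer>> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : assignment.entrySet()) {
            if (!e.getKey().equals(failed)) {
                // Survivors keep the partitions they already own.
                result.put(e.getKey(), new ArrayList<>(e.getValue()));
            }
        }
        if (result.isEmpty()) {
            return result; // no consumer left to take over
        }
        List<String> survivors = new ArrayList<>(result.keySet());
        int next = 0;
        // Hand the orphaned partitions to survivors in round-robin order.
        for (int partition : assignment.getOrDefault(failed, List.of())) {
            result.get(survivors.get(next % survivors.size())).add(partition);
            next++;
        }
        // Keep each consumer's partition list sorted for readability.
        result.values().forEach(list -> list.sort(null));
        return result;
    }
}
```

In the Java client, a ConsumerRebalanceListener's onPartitionsRevoked callback is a common place to commit processed offsets before partitions are reassigned.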

Conclusion

Robust offset management is the backbone of reliable Kafka applications. By tracking and committing offsets, either automatically or manually, you ensure that your consumers can recover gracefully and process each message at least once or, when offset commits are made atomic with the processing output (for example, with Kafka transactions), exactly once, depending on your requirements.
