Manufacturing Line Analogy

Think of a factory conveyor belt with a QR code scanner. Each package moves forward once its code is read. If one package has an unreadable or malformed code, the scanner stops the entire line. That single bad package halts production.

Poison Pill in Apache Kafka

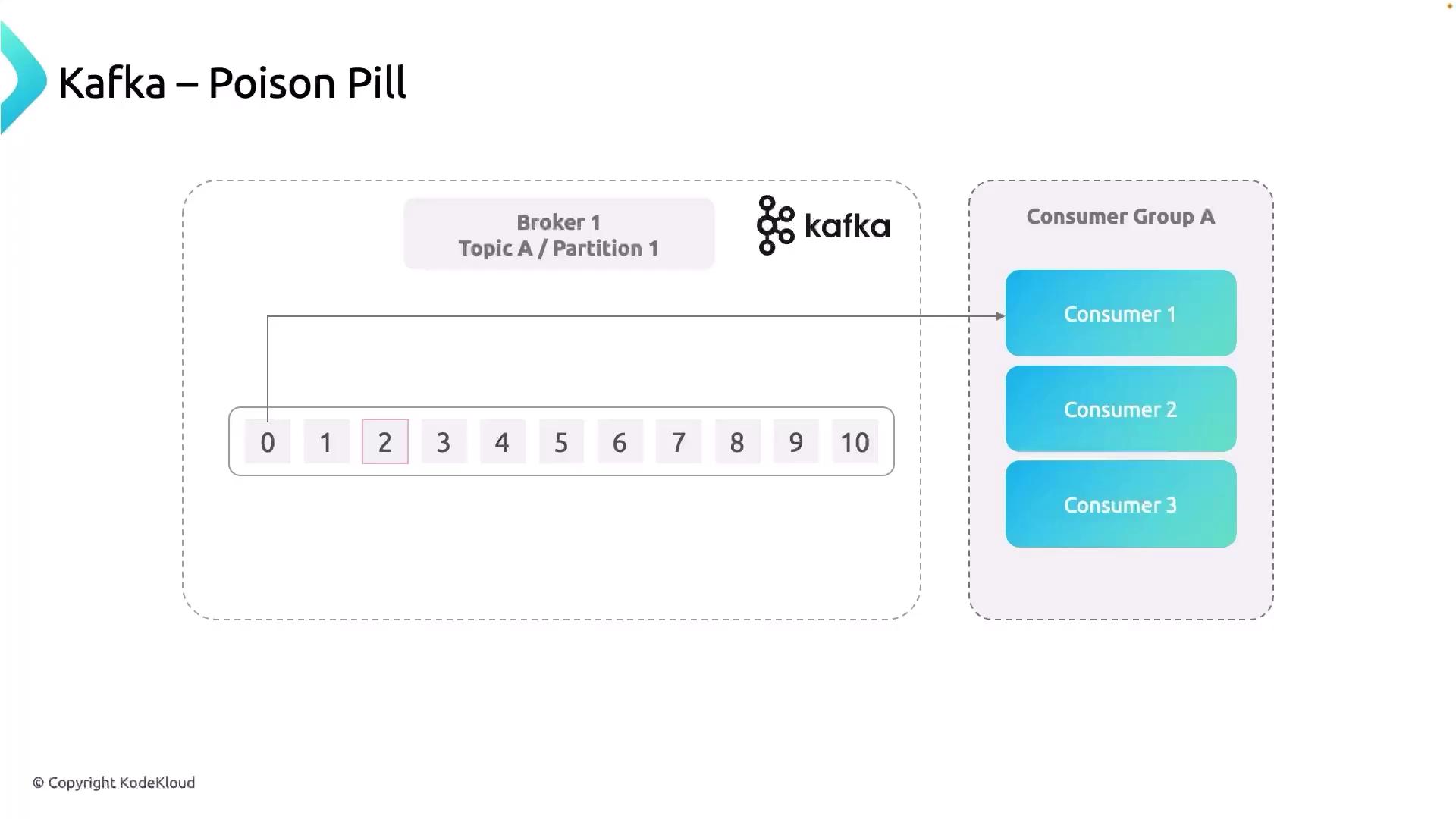

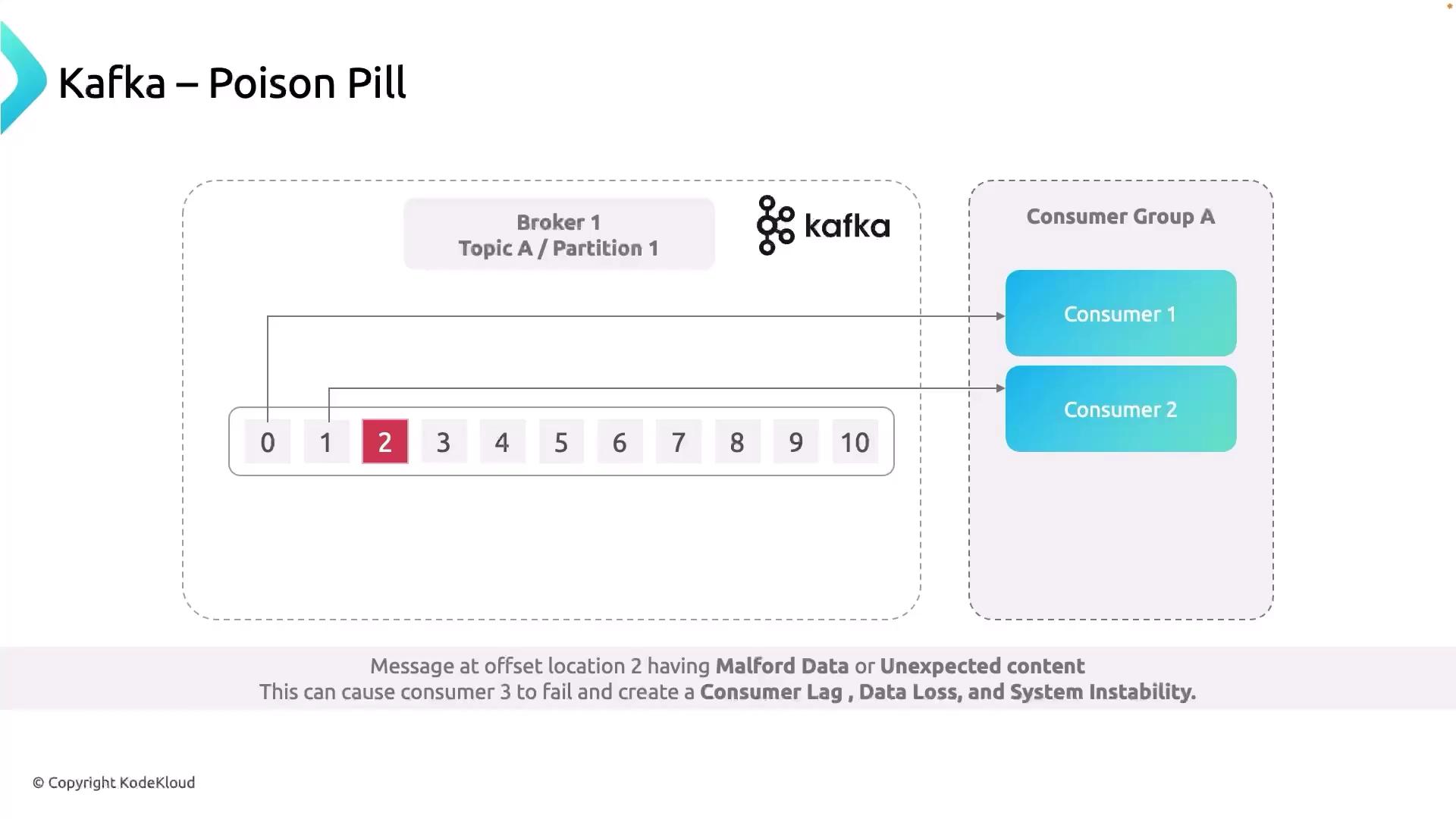

Kafka works similarly: topics consist of ordered partitions, and each partition is assigned to exactly one consumer within a consumer group. If that consumer encounters a malformed or unexpected message, it may fail repeatedly on the same record, which prevents offset commits and builds up a backlog behind it.

Consider a topic with three partitions and a consumer group of three consumers (one per partition). Consumers 1 and 2 process smoothly, but Consumer 3 hits a malformed message at offset 2 and cannot advance past it.

A single bad message can stall an entire partition. Without detection and handling, you’ll see unbounded retry loops, increased resource consumption, and potential downtime.
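To make the stall concrete, here is a minimal pure-Python simulation of Consumer 3's partition. It does not use a Kafka client: the `PARTITION` list, the `consume` function, and the `max_polls` limit are hypothetical stand-ins chosen for illustration, and the "commit" is just an integer that advances after a successful parse.

```python
import json

# Hypothetical in-memory stand-in for one partition; the offset is the list index.
PARTITION = [
    '{"order_id": 1}',
    '{"order_id": 2}',
    'not-json',            # poison pill at offset 2
    '{"order_id": 4}',
]

def consume(partition, max_polls):
    """Naive consumer: commit only after success, re-read the same offset on failure."""
    committed = 0   # next offset to read
    failures = 0
    for _ in range(max_polls):
        if committed >= len(partition):
            break
        try:
            json.loads(partition[committed])
        except json.JSONDecodeError:
            failures += 1   # nothing is committed, so the next poll hits the same record
            continue
        committed += 1
    return committed, failures

committed, failures = consume(PARTITION, max_polls=10)
print(committed, failures)  # prints: 2 8
```

The committed position never moves past offset 2, and every remaining poll is spent on the same failing record. The valid message at offset 3 is never reached.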

Common Causes of a Kafka Poison Pill

A poison pill often stems from:

| Issue | Description | Impact |
|---|---|---|
| Malformed Data | Schema doesn’t match consumer expectations (e.g., missing fields). | Deserialization errors |
| Unexpected Content | Payload format differs (e.g., text instead of JSON). | Runtime exceptions |
| Resource Exhaustion | High CPU/memory usage due to continuous retries. | Reduced throughput, OOM crashes |
| System Instability | Consumers crash or hang, leading to lag and potential data loss. | Incomplete processing, service gaps |
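The first two rows can be told apart in code. The sketch below assumes JSON-encoded message values and a made-up schema with two required fields. `REQUIRED_FIELDS` and `classify` are hypothetical names, not part of any Kafka API.

```python
import json

# Hypothetical schema: the fields this consumer expects in every message.
REQUIRED_FIELDS = ("order_id", "amount")

def classify(raw: bytes) -> str:
    """Return 'ok', 'malformed_data', or 'unexpected_content' for a raw message value."""
    try:
        payload = json.loads(raw.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        # Not valid UTF-8 or not JSON at all, e.g. plain text.
        return "unexpected_content"
    if not isinstance(payload, dict):
        # Valid JSON, but not the object shape the consumer expects.
        return "unexpected_content"
    if any(field not in payload for field in REQUIRED_FIELDS):
        # JSON object with missing fields.
        return "malformed_data"
    return "ok"

print(classify(b'{"order_id": 1, "amount": 9.5}'))
print(classify(b'{"order_id": 1}'))
print(classify(b'hello world'))
```

Classifying the failure up front makes it easier to decide whether a record is worth retrying at all: a payload that is not JSON will not become JSON on the next attempt.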

Strategies to Prevent and Mitigate Poison Pills

- Schema Validation: Validate messages against an Avro/JSON schema before producing.
- Dead-Letter Topics: Route malformed or problematic messages to a dedicated DLQ topic for later inspection.
- Backoff-and-Skip Logic: Implement retry with exponential backoff, then skip or move the message after a threshold.
- Monitoring and Alerts: Use tools like Confluent Control Center or Prometheus to detect increased consumer errors.
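The backoff-and-skip and dead-letter strategies can be combined roughly as follows. This is a sketch under stated assumptions, not a production consumer: it runs against an in-memory list instead of a Kafka client, a plain Python list stands in for the DLQ topic, and `process`, `consume_with_dlq`, `max_attempts`, and `base_delay` are hypothetical names and defaults.

```python
import json
import time

def process(raw: str) -> dict:
    """Hypothetical handler: parse JSON and require an 'order_id' field."""
    payload = json.loads(raw)
    if not isinstance(payload, dict) or "order_id" not in payload:
        raise ValueError("missing order_id")
    return payload

def consume_with_dlq(messages, max_attempts=3, base_delay=0.01, sleep=time.sleep):
    """Retry each message with exponential backoff, then dead-letter it and move on."""
    processed, dead_letters = [], []
    for offset, raw in enumerate(messages):
        for attempt in range(1, max_attempts + 1):
            try:
                processed.append(process(raw))
                break
            except ValueError as exc:  # json.JSONDecodeError is a ValueError subclass
                if attempt == max_attempts:
                    # Threshold reached: record the failure (stand-in for a DLQ topic).
                    dead_letters.append(
                        {"offset": offset, "value": raw, "error": str(exc)}
                    )
                else:
                    # Delay doubles on each retry: base, 2 * base, 4 * base, ...
                    sleep(base_delay * 2 ** (attempt - 1))
        # Either way the loop advances, so this offset could now be committed.
    return processed, dead_letters

messages = ['{"order_id": 1}', 'not-json', '{"amount": 3}', '{"order_id": 4}']
processed, dead_letters = consume_with_dlq(messages)
print(len(processed), [d["offset"] for d in dead_letters])  # prints: 2 [1, 2]
```

The key design choice is that a failed record is recorded with its offset and error before the consumer advances, so the partition keeps moving while the bad message stays available for inspection.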

Creating automated alerts on consumer error rates can help you catch poison pills before they impact production.
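As a rough illustration of what such an alert evaluates, the sketch below tracks the error rate over a sliding window of recent processing outcomes. `ErrorRateMonitor`, the window size, and the threshold are all hypothetical. In practice this logic would more likely live in an alerting rule of your monitoring system than in consumer code.

```python
from collections import deque

class ErrorRateMonitor:
    """Track success/failure of the most recent messages and flag a high error rate."""

    def __init__(self, window=100, threshold=0.05):
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically
        self.threshold = threshold

    def record(self, ok: bool) -> None:
        self.outcomes.append(ok)

    def error_rate(self) -> float:
        if not self.outcomes:
            return 0.0
        return self.outcomes.count(False) / len(self.outcomes)

    def should_alert(self) -> bool:
        return self.error_rate() > self.threshold

monitor = ErrorRateMonitor(window=10, threshold=0.2)
for ok in [True] * 8 + [False] * 3:
    monitor.record(ok)
print(monitor.should_alert())  # prints: True
```

A consumer stuck on a poison pill pushes this rate toward 100% within one window, which is the signal the alert should fire on.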