Event Streaming with Kafka

Kafka Connect: Effortless Data Pipelines

Demo: Setting Up Kafka Using KRaft for Kafka Connect

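In this walkthrough, you will provision an EC2 instance on AWS, install Apache Kafka in KRaft (Kafka Raft) mode, and prepare your environment for Kafka Connect’s S3 connector. By the end, you’ll have a single Kafka node running as both KRaft broker and controller, ready to sync topics to Amazon S3. KRaft was still a preview feature in Kafka 3.0, so treat this setup as a learning environment rather than a production deployment.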

1. Create an IAM Role for EC2

- Open the AWS Console and navigate to IAM → Roles → Create role.

- Select AWS service as the trusted entity and pick EC2.

- Attach the AmazonSSMManagedInstanceCore policy (it lets Session Manager manage the instance) and click Next.

- Name the role Kafka-S3-demo and finish creation.

Note

For production, restrict permissions to only the resources your application requires.

The resulting trust policy will resemble:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "ec2.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}

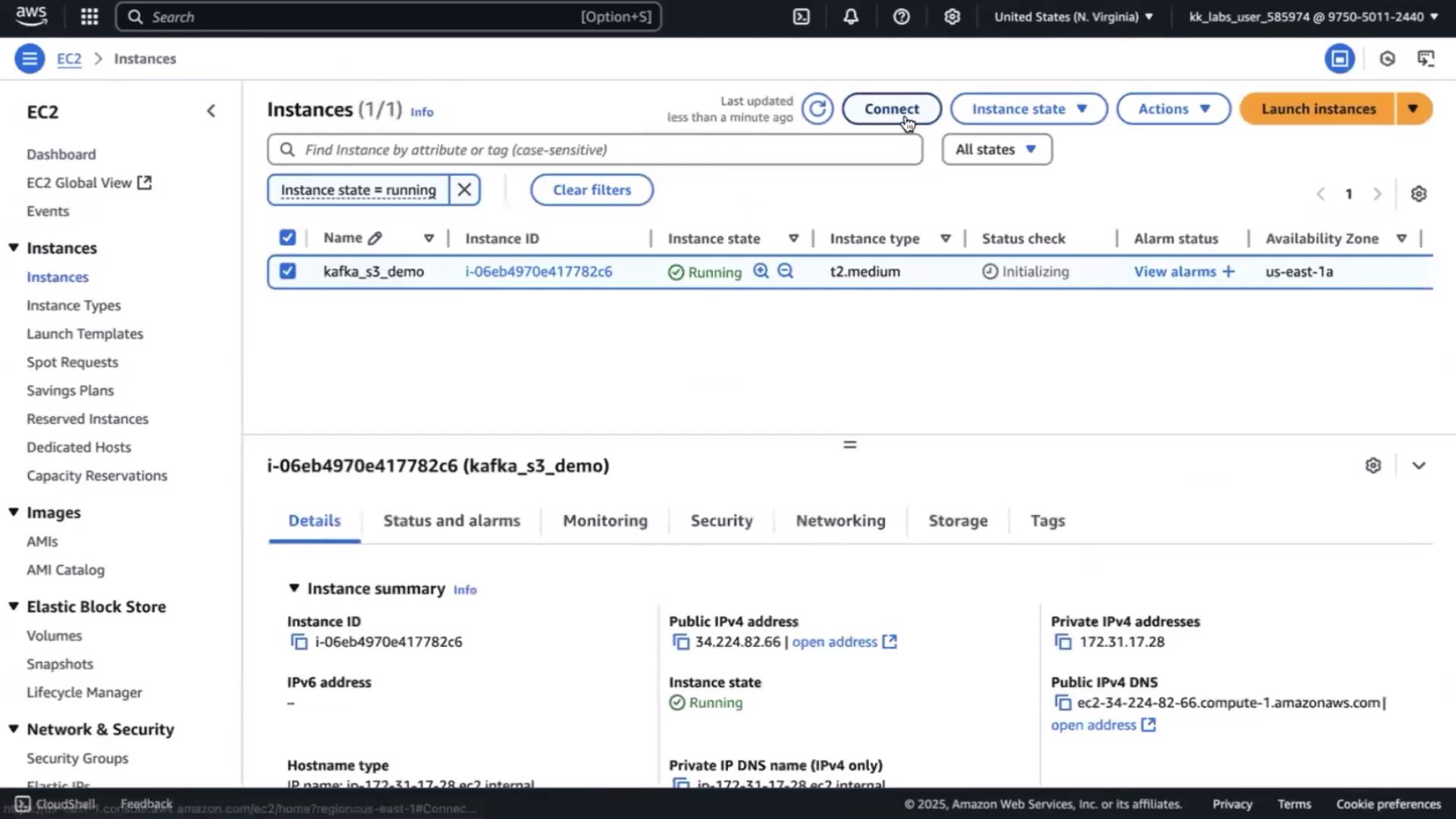
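If you prefer the AWS CLI to the console, the sketch below should create an equivalent role. It assumes AWS CLI v2 is installed and configured with credentials that can manage IAM. The file name trust-policy.json and the helper create_demo_role are illustrative, not part of the original demo. Unlike the console, the CLI does not create an instance profile automatically, so the helper adds one with the same name so that EC2 can use the role.

```bash
#!/usr/bin/env bash
# Sketch: CLI equivalent of Step 1 (assumes AWS CLI v2 with IAM permissions).
ROLE_NAME="Kafka-S3-demo"

# Write the EC2 trust policy from Step 1 to a local file (illustrative name).
cat > trust-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF

# Hypothetical helper: create the role, attach the SSM policy, and wrap the
# role in an instance profile of the same name so EC2 can assume it.
create_demo_role() {
  aws iam create-role --role-name "$ROLE_NAME" \
    --assume-role-policy-document file://trust-policy.json || return 1
  aws iam attach-role-policy --role-name "$ROLE_NAME" \
    --policy-arn arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore || return 1
  # The console does this step implicitly; the CLI needs it spelled out.
  aws iam create-instance-profile --instance-profile-name "$ROLE_NAME" || return 1
  aws iam add-role-to-instance-profile \
    --instance-profile-name "$ROLE_NAME" --role-name "$ROLE_NAME"
}
```

Source the script and run create_demo_role once. Running it a second time fails because the role already exists.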

2. Launch an EC2 Instance

- Go to EC2 → Instances → Launch instance.

- Name it Kafka-S3-demo and choose instance type t2.medium.

- Under Key pair, select Proceed without a key pair (for demo only).

- Use the default security group.

- Increase the root volume to 16 GiB.

- Under Advanced details, set IAM instance profile to Kafka-S3-demo.

- Click Launch instance.
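The same launch can be scripted. This sketch assumes Amazon Linux 2023 on x86_64, with root device /dev/xvda, because the demo's Java output shows an Amazon Corretto build. It also assumes the instance profile from Step 1 exists. The AMI is resolved from AWS's public SSM parameter rather than hard-coded, and launch_demo_instance is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: CLI equivalent of Step 2. Assumes Amazon Linux 2023 (x86_64), whose
# root device is /dev/xvda, and the Kafka-S3-demo instance profile from Step 1.
NAME="Kafka-S3-demo"
INSTANCE_TYPE="t2.medium"
ROOT_GIB=16
AMI_PARAM="/aws/service/ami-amazon-linux-latest/al2023-ami-kernel-default-x86_64"

# Root volume override: 16 GiB instead of the AMI default.
BLOCK_DEVICES="[{\"DeviceName\":\"/dev/xvda\",\"Ebs\":{\"VolumeSize\":${ROOT_GIB}}}]"

# Hypothetical helper: print the new instance ID on success.
launch_demo_instance() {
  local ami_id
  # Resolve the latest AMI ID from the public SSM parameter.
  ami_id=$(aws ssm get-parameters --names "$AMI_PARAM" \
    --query 'Parameters[0].Value' --output text) || return 1
  # No --key-name (no key pair) and no --security-group-ids (default group).
  aws ec2 run-instances \
    --image-id "$ami_id" \
    --instance-type "$INSTANCE_TYPE" \
    --iam-instance-profile Name="$NAME" \
    --block-device-mappings "$BLOCK_DEVICES" \
    --tag-specifications "ResourceType=instance,Tags=[{Key=Name,Value=${NAME}}]" \
    --query 'Instances[0].InstanceId' --output text
}
```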

3. Connect via Session Manager

Once the instance state shows Running, select it, click Connect, open the Session Manager tab, and click Connect.

In the shell session, become root and navigate home:

sudo su

cd ~
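You can also open the session from your own machine instead of the browser. This assumes the AWS CLI and the Session Manager plugin are installed locally. connect_demo_instance is a hypothetical helper that takes the instance ID, for example the one printed by run-instances.

```bash
#!/usr/bin/env bash
# Sketch: open a Session Manager shell from a workstation.
# Assumes AWS CLI v2 plus the Session Manager plugin are installed locally.

# Hypothetical helper: wait for the instance, then start an interactive session.
connect_demo_instance() {
  local instance_id="${1:-}"
  if [ -z "$instance_id" ]; then
    echo "usage: connect_demo_instance <instance-id>" >&2
    return 2
  fi
  # Blocks until the instance is running (or the waiter gives up).
  aws ec2 wait instance-running --instance-ids "$instance_id" || return 1
  aws ssm start-session --target "$instance_id"
}
```

Even after the instance reports Running, the SSM agent may need a minute to register, so an early start-session attempt can fail and simply needs a retry.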

4. Download and Extract Kafka Binaries

# Fetch Kafka 3.0.0 (Scala 2.13)

wget https://archive.apache.org/dist/kafka/3.0.0/kafka_2.13-3.0.0.tgz

tar -xzf kafka_2.13-3.0.0.tgz

# Inspect directory structure

cd kafka_2.13-3.0.0

ls -l

You should see bin, config, and libs among other folders.
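Apache publishes a .sha512 file next to each release tarball. The sketch below downloads both from the Apache archive and checks the digest before extracting. It assumes the checksum file uses the `name: HEX HEX ...` layout, with uppercase groups that may wrap onto extra lines. verify_sha512 and download_kafka are illustrative helpers.

```bash
#!/usr/bin/env bash
# Sketch: fetch Kafka 3.0.0 from the Apache archive and verify its SHA-512.
KAFKA_VERSION="3.0.0"
SCALA_VERSION="2.13"
TARBALL="kafka_${SCALA_VERSION}-${KAFKA_VERSION}.tgz"
BASE_URL="https://archive.apache.org/dist/kafka/${KAFKA_VERSION}"

# Succeed if FILE's SHA-512 equals the digest in CHECKSUM_FILE. Assumes the
# "name: HEX HEX ..." layout (uppercase groups, possibly wrapped onto extra lines).
verify_sha512() {
  local file="$1" checksum_file="$2" expected actual
  # Drop the "name:" prefix, strip all whitespace, lowercase the hex digits.
  expected=$(sed 's/^[^:]*://' "$checksum_file" | tr -d ' \t\r\n' | tr 'A-F' 'a-f')
  actual=$(sha512sum "$file" | cut -d' ' -f1)
  [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Hypothetical helper: download, verify, then extract into the current directory.
download_kafka() {
  wget -q "${BASE_URL}/${TARBALL}" "${BASE_URL}/${TARBALL}.sha512" || return 1
  if ! verify_sha512 "$TARBALL" "${TARBALL}.sha512"; then
    echo "SHA-512 mismatch for ${TARBALL}" >&2
    return 1
  fi
  tar -xzf "$TARBALL"
}
```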

5. Install Java Runtime

Verify Java is present:

java -version

If it is missing, install OpenJDK 8 (Kafka 3.0 still runs on Java 8, although that release deprecates Java 8 support):

yum install -y java-1.8.0-openjdk

java -version

Expected output (version and build numbers will vary):

openjdk version "1.8.0_442"

OpenJDK Runtime Environment Corretto-8.442.06.1 (build 1.8.0_442-b06)

OpenJDK 64-Bit Server VM Corretto-8.442.06.1 (build 25.442-b06, mixed mode)
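To script this check, you can parse the major version out of `java -version`, which prints to stderr. The sketch handles both the legacy `1.8.0_442` numbering and the newer `17.0.2` style. java_major and ensure_java are hypothetical helpers, and the yum call assumes an Amazon Linux host as in this step.

```bash
#!/usr/bin/env bash
# Sketch: read the Java major version from `java -version` output on stdin.
# Handles both the legacy "1.8.0_442" scheme and the newer "17.0.2" scheme.
java_major() {
  local v
  # The first line looks like: openjdk version "1.8.0_442"
  v=$(head -n 1 | sed -E 's/.*version "([^"]+)".*/\1/')
  case "$v" in
    1.*) echo "$v" | cut -d. -f2 ;;  # 1.8.0_442 -> 8
    *)   echo "${v%%[.+_-]*}" ;;     # 17.0.2 -> 17
  esac
}

# Hypothetical helper: install OpenJDK 8 only when java is absent (yum, so an
# Amazon Linux host is assumed), then print the detected major version.
ensure_java() {
  if ! command -v java >/dev/null 2>&1; then
    yum install -y java-1.8.0-openjdk || return 1
  fi
  # java -version writes to stderr, so redirect it into the pipe.
  java -version 2>&1 | java_major
}
```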

6. Format Storage for KRaft

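Generate a cluster ID with kafka-storage.sh itself, which produces an ID in the format the tool expects. Then format the combined log directory:

KAFKA_CLUSTER_ID=$(bin/kafka-storage.sh random-uuid)

bin/kafka-storage.sh format -t "$KAFKA_CLUSTER_ID" -c config/kraft/server.properties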

You should see:

Formatting /tmp/kraft-combined-logs
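Formatting writes a meta.properties file into the log directory that records the cluster.id and node.id. The stored node.id should match node.id in server.properties when the server starts. The sketch reads that file back as a sanity check. It assumes the default log.dirs=/tmp/kraft-combined-logs and node.id=1. get_prop and check_formatted are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: confirm that `kafka-storage.sh format` wrote meta.properties.
# Assumes the default log.dirs=/tmp/kraft-combined-logs and node.id=1.

# Print the value for KEY in a Java-style properties file (exact key match).
get_prop() {
  awk -F= -v k="$2" '$1 == k { sub(/^[^=]*=/, ""); print; exit }' "$1"
}

# Hypothetical helper: fail unless DIR was formatted for NODE_ID.
check_formatted() {
  local dir="${1:-/tmp/kraft-combined-logs}" node_id="${2:-1}" meta
  meta="$dir/meta.properties"
  if [ ! -f "$meta" ]; then
    echo "not formatted: $meta is missing" >&2
    return 1
  fi
  if [ "$(get_prop "$meta" node.id)" != "$node_id" ]; then
    echo "node.id in $meta does not match $node_id" >&2
    return 1
  fi
  echo "cluster.id=$(get_prop "$meta" cluster.id)"
}
```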

7. Configure Kafka for KRaft Mode

Open the KRaft properties:

vim config/kraft/server.properties

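Update the following settings, replacing <EC2_PUBLIC_IP> with your instance’s public IPv4 address. Leave the remaining defaults, such as controller.listener.names=CONTROLLER and log.dirs=/tmp/kraft-combined-logs, unchanged: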

process.roles=broker,controller

node.id=1

controller.quorum.voters=1@localhost:9093

######################## Socket Server Settings ############################

listeners=PLAINTEXT://0.0.0.0:9092,CONTROLLER://0.0.0.0:9093

inter.broker.listener.name=PLAINTEXT

advertised.listeners=PLAINTEXT://<EC2_PUBLIC_IP>:9092

Save and exit.

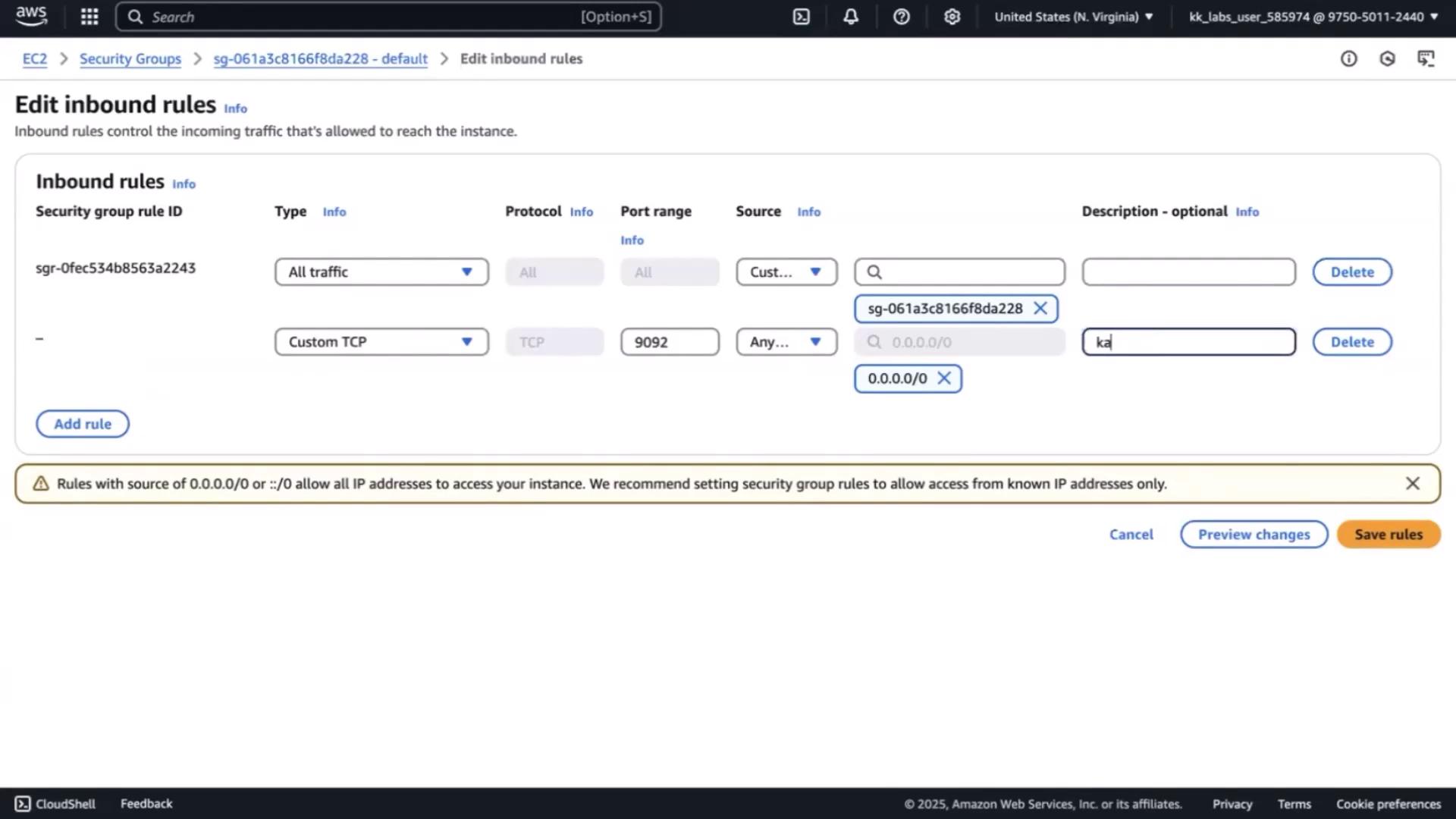
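If you would rather not edit the file in vim, the Step 7 values can be applied non-interactively. In this sketch, set_prop rewrites or appends one key, and public_ip reads the address from the EC2 instance metadata service (IMDSv2), so it only works on the instance itself. set_prop, public_ip, and configure_kraft are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: apply the Step 7 edits without an editor (hypothetical helpers).

# Set KEY=VALUE: rewrite the first line starting with "KEY=", drop any later
# duplicates, or append the pair if the key is absent. Comments are untouched.
set_prop() {
  local file="$1" key="$2" value="$3" tmp rc
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    index($0, k "=") == 1 { if (!done) print k "=" v; done = 1; next }
    { print }
    END { if (!done) print k "=" v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Public IPv4 of this instance via IMDSv2 (session token first, then metadata).
public_ip() {
  local token
  token=$(curl -sf -X PUT "http://169.254.169.254/latest/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 300") || return 1
  curl -sf -H "X-aws-ec2-metadata-token: $token" \
    "http://169.254.169.254/latest/meta-data/public-ipv4"
}

# Apply the Step 7 values; node.id stays 1, the value used when formatting.
configure_kraft() {
  local cfg="$1" ip="$2"
  set_prop "$cfg" process.roles broker,controller
  set_prop "$cfg" node.id 1
  set_prop "$cfg" controller.quorum.voters 1@localhost:9093
  set_prop "$cfg" listeners PLAINTEXT://0.0.0.0:9092,CONTROLLER://0.0.0.0:9093
  set_prop "$cfg" inter.broker.listener.name PLAINTEXT
  set_prop "$cfg" advertised.listeners "PLAINTEXT://${ip}:9092"
}
```

On the instance, from the Kafka directory: `configure_kraft config/kraft/server.properties "$(public_ip)"`. The prefix match in set_prop is anchored at the start of the line, so updating listeners leaves advertised.listeners alone.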

8. Open Port 9092 in Your Security Group

- In the EC2 Console, open your instance’s Security tab and click its security group.

- Click Edit inbound rules.

- Add a Custom TCP rule for port 9092 from 0.0.0.0/0 (demo only).

- Description: Kafka Brokers.

- Save changes.

Warning

Allowing 0.0.0.0/0 exposes your broker to the internet. Restrict this in production.

Below is a quick reference for Kafka ports:

| Port | Listener | Purpose |
|---|---|---|
| 9092 | PLAINTEXT | Client–broker communication (opened in the security group) |
| 9093 | CONTROLLER | KRaft controller quorum traffic (reached via localhost in this setup) |
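Following the warning above, a narrower rule can be added from the CLI. This sketch opens TCP 9092 to a single CIDR instead of 0.0.0.0/0. It assumes AWS CLI v2 and that the instance's first security group is the one to change. kafka_ingress_json and open_kafka_port are hypothetical helpers, and checkip.amazonaws.com is used to look up your current public IPv4 when no CIDR is given.

```bash
#!/usr/bin/env bash
# Sketch: open TCP 9092 to one CIDR instead of 0.0.0.0/0 (assumes AWS CLI v2).

# Build the --ip-permissions JSON for TCP 9092 from one CIDR.
kafka_ingress_json() {
  printf '[{"IpProtocol":"tcp","FromPort":9092,"ToPort":9092,"IpRanges":[{"CidrIp":"%s","Description":"Kafka Brokers"}]}]' "$1"
}

# Hypothetical helper: find the instance's first security group and add the rule.
# With no CIDR argument, it uses this machine's public IPv4 as a /32.
open_kafka_port() {
  local instance_id="$1" cidr="${2:-}" sg_id
  if [ -z "$cidr" ]; then
    cidr="$(curl -sf https://checkip.amazonaws.com)/32" || return 1
  fi
  sg_id=$(aws ec2 describe-instances --instance-ids "$instance_id" \
    --query 'Reservations[0].Instances[0].SecurityGroups[0].GroupId' \
    --output text) || return 1
  aws ec2 authorize-security-group-ingress --group-id "$sg_id" \
    --ip-permissions "$(kafka_ingress_json "$cidr")"
}
```

Clients on the instance itself, such as the console tools or a Kafka Connect worker, are redirected to the advertised public IP. That address likely needs its own rule as well, so you can call open_kafka_port again with the instance's public IP as a /32.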

9. Start Kafka in KRaft Mode

Launch the broker and controller together:

bin/kafka-server-start.sh config/kraft/server.properties

The server runs in the foreground. Look for log lines such as these, which show that it has started:

[2025-05-10 10:57:05,750] INFO Kafka version: 3.0.0

[2025-05-10 10:57:05,750] INFO Kafka Server started (kafka.server.KafkaRaftServer)
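kafka-server-start.sh also accepts a -daemon flag that runs the server in the background, which frees the Session Manager shell. Its logs then go to files under logs/, including logs/server.log. The sketch starts the node that way, waits for port 9092, and creates and lists a test topic. wait_for_port relies on bash's /dev/tcp redirection. The topic name demo-topic and both helpers are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: run the KRaft node in the background and smoke-test it, from the
# Kafka directory. demo-topic is a hypothetical topic name.

# Succeed once HOST:PORT accepts a TCP connection; give up after TRIES attempts,
# one second apart. Uses bash's /dev/tcp redirection (bash-only).
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i=0
  while [ "$i" -lt "$tries" ]; do
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Hypothetical helper: start in daemon mode, wait for the listener, then
# create and list a single-partition topic through the broker.
start_and_check_kafka() {
  bin/kafka-server-start.sh -daemon config/kraft/server.properties || return 1
  if ! wait_for_port localhost 9092 60; then
    echo "broker is not listening on 9092; check logs/server.log" >&2
    return 1
  fi
  bin/kafka-topics.sh --bootstrap-server localhost:9092 --create \
    --topic demo-topic --partitions 1 --replication-factor 1 || return 1
  bin/kafka-topics.sh --bootstrap-server localhost:9092 --list
}
```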

Next Steps

With your KRaft broker running, proceed to:

- Download the Kafka S3 Connector plugin.

- Configure connect-standalone.properties and s3-sink.properties.

- Launch the connector to start syncing topic data to Amazon S3.
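As a preview of those two files, the sketch writes minimal versions for Confluent's S3 sink connector. The bucket, region, topic, and plugin directory values are hypothetical placeholders. The property names follow Apache Kafka's standalone worker config and Confluent's S3 sink documentation, so check them against the connector version you install.

```bash
#!/usr/bin/env bash
# Sketch: minimal worker and S3 sink configs for a standalone Connect worker.
# Hypothetical placeholders: bucket, region, topic, and plugin directory.
S3_BUCKET="my-kafka-s3-demo-bucket"
S3_REGION="us-east-1"
TOPIC="demo-topic"
PLUGIN_DIR="/opt/connectors"

# Worker settings: broker address, JSON converters without schemas,
# file-based offsets, and where to look for connector plugins.
cat > connect-standalone.properties <<EOF
bootstrap.servers=localhost:9092
key.converter=org.apache.kafka.connect.json.JsonConverter
value.converter=org.apache.kafka.connect.json.JsonConverter
key.converter.schemas.enable=false
value.converter.schemas.enable=false
offset.storage.file.filename=/tmp/connect.offsets
plugin.path=${PLUGIN_DIR}
EOF

# Connector settings: write JSON objects to S3, one object per 3 records.
cat > s3-sink.properties <<EOF
name=s3-sink
connector.class=io.confluent.connect.s3.S3SinkConnector
tasks.max=1
topics=${TOPIC}
s3.region=${S3_REGION}
s3.bucket.name=${S3_BUCKET}
storage.class=io.confluent.connect.s3.storage.S3Storage
format.class=io.confluent.connect.s3.format.json.JsonFormat
partitioner.class=io.confluent.connect.storage.partitioner.DefaultPartitioner
flush.size=3
EOF
```

The worker would then be started with `bin/connect-standalone.sh connect-standalone.properties s3-sink.properties`. By default the connector should pick up credentials from the instance role, which then also needs permission to write to the bucket.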

