Recap: Producing Order Events

When a customer places an order in the toy shop frontend (app.py), we serialize the cart as JSON and send it to the cartevent Kafka topic.
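As a reminder of what that producing step can look like, here is a minimal sketch. It assumes the confluent-kafka Python client, which the source does not name; the helper names (`serialize_cart`, `send_order`, `make_producer`) are hypothetical, and the real cart fields come from the toy shop frontend.

```python
import json


def serialize_cart(cart: dict) -> bytes:
    """Encode the cart as UTF-8 JSON, the payload sent to Kafka."""
    return json.dumps(cart).encode("utf-8")


def send_order(producer, cart: dict, topic: str = "cartevent") -> None:
    """Queue one order event, then wait until the producer's queue is drained."""
    producer.produce(topic, value=serialize_cart(cart))
    producer.flush()


def make_producer(bootstrap_servers: str):
    """Create a producer. Assumption: the confluent-kafka package is installed."""
    from confluent_kafka import Producer  # imported lazily, only needed here

    return Producer({"bootstrap.servers": bootstrap_servers})


# Example usage (requires a reachable broker; the cart layout is hypothetical):
#   send_order(make_producer("3.68.92.91:9092"), {"customer": "Rose"})
```

Passing the producer in as an argument keeps `send_order` easy to exercise with a stand-in object when no broker is available.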

Building the Warehouse UI Consumer

- Close the toy shop folder and open final-projects/warehouse-ui in VS Code.

- Edit app.py, importing the Kafka client and configuring logging at the top.

- Add a function to poll Kafka for order events, using the consumer settings in the table below.
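The last two steps might be sketched as follows. This is an illustration under assumptions, not the project's actual code: it assumes the confluent-kafka client (whose dotted configuration keys match the ones used in this section), and the names `consumer_config`, `make_consumer`, `fetch_orders`, and the `max_wait` parameter are hypothetical.

```python
import json
import logging
import time
import uuid

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("warehouse-ui")

TOPIC = "cartevent"


def consumer_config(bootstrap_servers: str) -> dict:
    """Consumer settings; a fresh group.id has no stored offsets, so reads start at 'earliest'."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "group.id": f"warehouse_reader_{uuid.uuid4()}",
        "auto.offset.reset": "earliest",
        "enable.auto.commit": False,
    }


def make_consumer(bootstrap_servers: str):
    """Create and subscribe a consumer. Assumption: confluent-kafka is installed."""
    from confluent_kafka import Consumer  # imported lazily, only needed here

    consumer = Consumer(consumer_config(bootstrap_servers))
    consumer.subscribe([TOPIC])
    return consumer


def fetch_orders(consumer, max_messages: int = 100, max_wait: float = 5.0) -> list:
    """Poll for up to max_wait seconds and return the decoded order events."""
    deadline = time.monotonic() + max_wait
    orders = []
    while len(orders) < max_messages:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break
        msg = consumer.poll(min(1.0, remaining))
        if msg is None:  # nothing arrived within this poll; keep trying
            continue
        if msg.error():
            logger.error("Kafka error: %s", msg.error())
            continue
        try:
            orders.append(json.loads(msg.value()))
        except (ValueError, TypeError):
            logger.warning("Skipping a message that is not valid JSON")
    return orders
```

A newly created consumer group can take a few seconds to be assigned partitions, so the first polls may return nothing; that is why this sketch keeps polling until `max_wait` elapses instead of stopping at the first empty poll. The cost is that a page load can take up to `max_wait` seconds. Call `consumer.close()` once the orders have been fetched.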

| Configuration Key | Description | Example |
|---|---|---|
| bootstrap.servers | Address of your Kafka broker | 3.68.92.91:9092 |
| group.id | Unique consumer group identifier | warehouse_reader_<UUID> |
| auto.offset.reset | Where to start reading if no offset exists | earliest |
| enable.auto.commit | Disable automatic offset commits for safety | False |

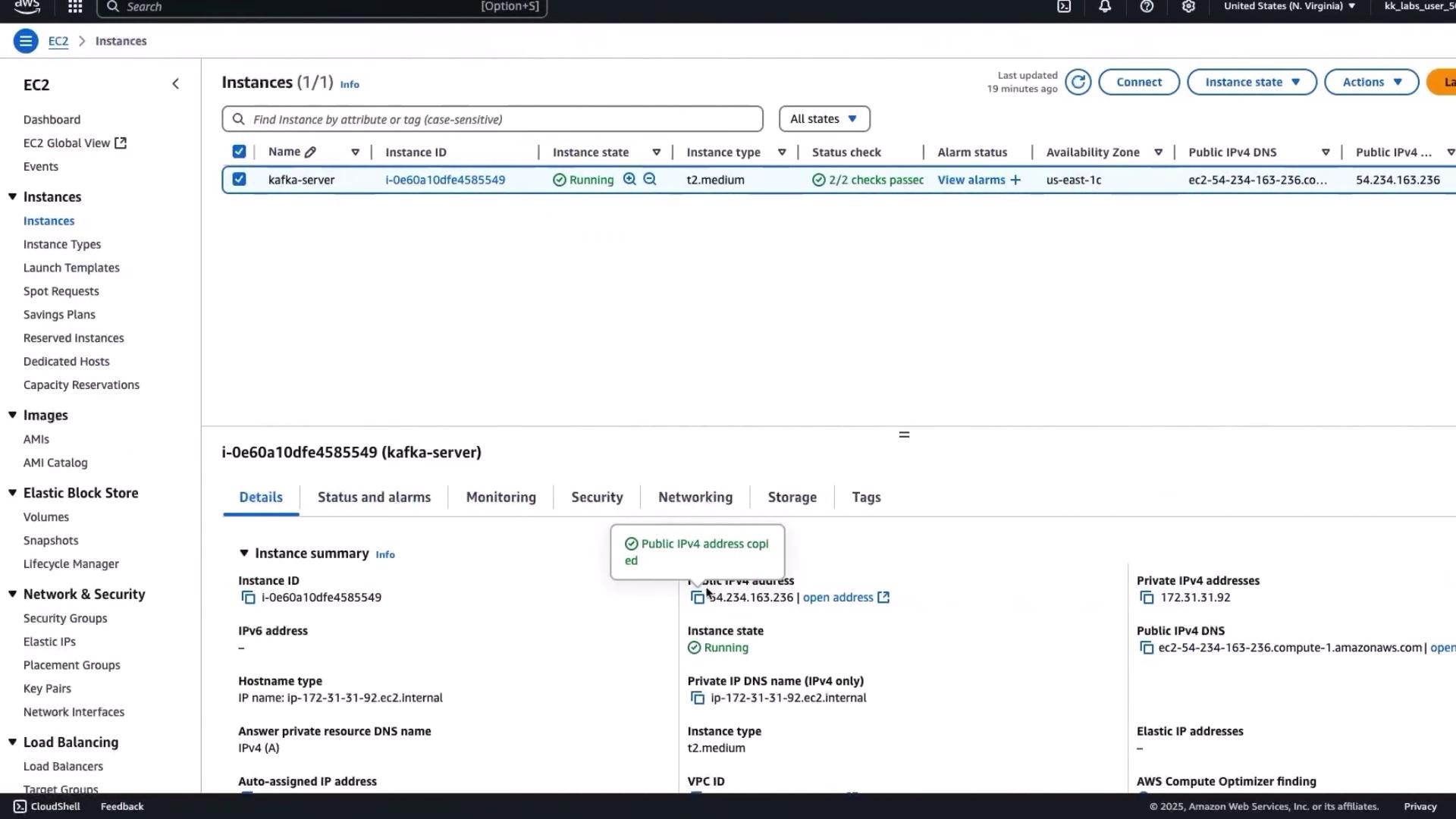

Ensure bootstrap.servers matches your Kafka broker’s public IP. You can verify it in the AWS EC2 dashboard.
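If the dashboard stays empty, a quick TCP check can help tell a wrong address apart from an application bug. The sketch below uses only the standard library; `broker_reachable` is a hypothetical helper, it handles a single `host:port` entry (not a comma-separated list), and a successful connection only shows that the port is open, not that Kafka is healthy.

```python
import socket


def broker_reachable(bootstrap_server: str, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to 'host:port' can be opened."""
    host, _, port = bootstrap_server.rpartition(":")
    if not host:  # no 'host:port' separator found
        return False
    try:
        with socket.create_connection((host, int(port)), timeout=timeout):
            return True
    except (OSError, ValueError):
        return False


# Example usage with the address from the table above:
#   broker_reachable("3.68.92.91:9092")
```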

Rendering the Dashboard

Expose a Flask route that fetches orders from Kafka and renders them in dashboard.html.

The template iterates over orders to display each customer’s details, products, and total amount.
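A minimal sketch of such a route is shown here. The `fetch_orders` function in this block is only a stub standing in for the Kafka polling function, and the route path `/` is inferred from the URL used later in this section; the actual app.py may be organized differently.

```python
from flask import Flask, render_template

app = Flask(__name__)


def fetch_orders() -> list:
    """Stub for the Kafka polling function; returns a list of decoded order dicts."""
    return []


@app.route("/")
def dashboard():
    # Poll Kafka on each page load and pass the orders to the template,
    # where they are available under the name "orders".
    orders = fetch_orders()
    return render_template("dashboard.html", orders=orders)
```

Flask looks up dashboard.html in the application's templates folder by default, so the file is expected at templates/dashboard.html.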

Running and Testing the Warehouse UI

Start the warehouse UI app, then open http://localhost:5000/ in your browser. The dashboard polls Kafka for new cartevent messages.

End-to-End Test

- In the toy shop frontend, add Toy 6 to the cart.

- Enter Rose as the customer name and Delhi as the address.

- Click Place Order to send the event to Kafka.

- Return to the warehouse dashboard and refresh. You should see Rose’s order listed.

Next Steps

- Auto-refresh the dashboard when new events arrive

- Persist orders to a database and map products to rack locations

- Add packing instructions or real-time inventory lookups