Optimizing Compiled WASM Code

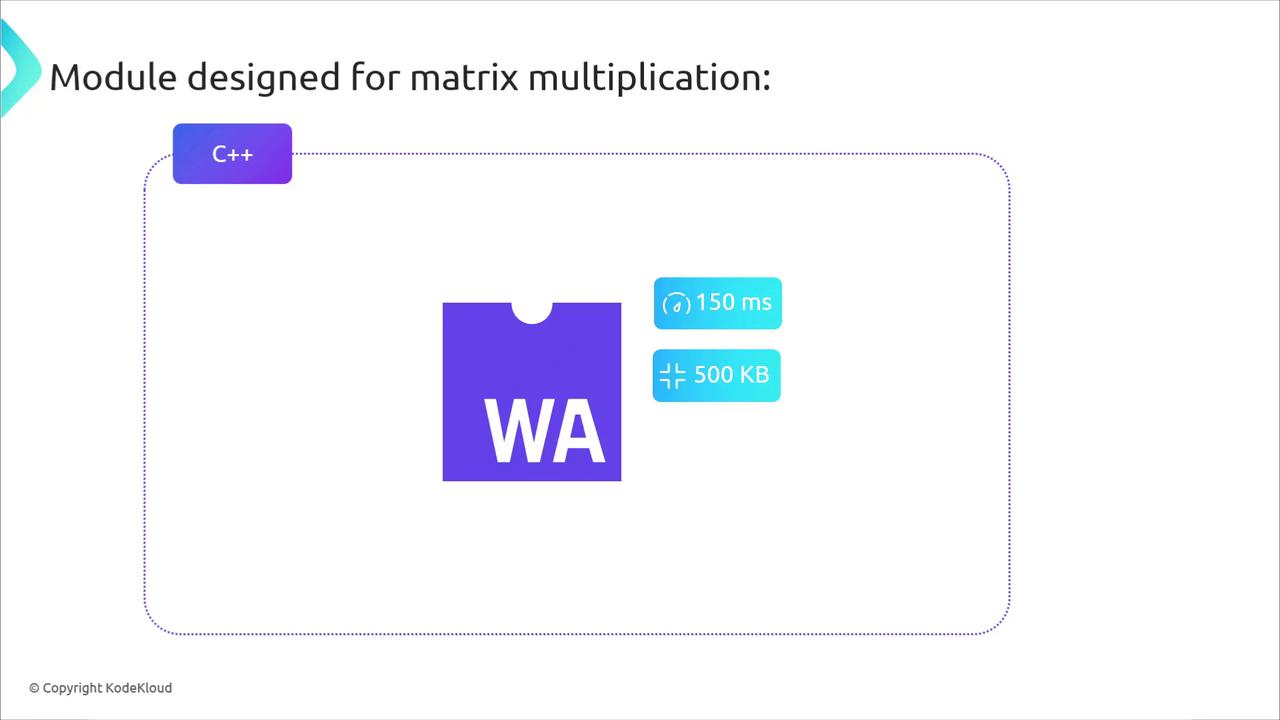

In this guide, we walk through seven practical steps to shrink and speed up a WebAssembly (WASM) module for matrix multiplication. Starting from a 500 KB C++ build running in 150 ms, you’ll learn how to apply dead code elimination, post-processing, compiler flags, memory management, runtime choices, pre-initialization, and a language switch to dramatically improve both size and performance.
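For reference, here is a minimal sketch of the kind of kernel such a module might contain. The function name `matmul` and the row-major `std::vector<double>` layout are assumptions for illustration. This is not the original `matrix.cpp`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical baseline kernel: C = A * B for n x n matrices stored
// row-major in flat vectors. The naive i-j-k loop order is a typical
// starting point before any optimization is applied.
std::vector<double> matmul(const std::vector<double>& a,
                           const std::vector<double>& b,
                           std::size_t n) {
    assert(a.size() == n * n && b.size() == n * n);
    std::vector<double> c(n * n, 0.0);
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            double sum = 0.0;
            for (std::size_t k = 0; k < n; ++k) {
                // b is read down a column (stride n), which has poor locality.
                sum += a[i * n + k] * b[k * n + j];
            }
            c[i * n + j] = sum;
        }
    }
    return c;
}
```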

1. Dead Code Elimination

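Removing unused functions and unreachable branches is one of the quickest ways to get immediate savings. You can enable your toolchain's dead code elimination, where the linker discards functions that no export can reach. Alternatively, use wasm-snip to replace the bodies of functions you know are never called with an `unreachable` instruction, so that later passes can drop whatever those functions depended on. In this example, that was enough to: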

- Decrease binary size by ~100 KB (500 KB → 400 KB)

- Reduce execution time by ~10 ms (150 ms → 140 ms)
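Dead code elimination works outward from the module's exports: code that no exported function can reach is a candidate for removal. The fewer entry points you export, the more the toolchain can discard.

The sketch below assumes a hypothetical `matrix_multiply` entry point and a hypothetical, never-called `debug_dump` helper. Under Emscripten, `EMSCRIPTEN_KEEPALIVE` marks the one function that must survive. The macro guard keeps the file compilable natively.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>

#ifdef __EMSCRIPTEN__
#include <emscripten/emscripten.h>
#define WASM_EXPORT EMSCRIPTEN_KEEPALIVE
#else
#define WASM_EXPORT  // native builds: no export marker needed
#endif

namespace {

// Hypothetical debug helper. Nothing reachable from an export calls it,
// so the optimizer can drop it along with the printf code it pulls in.
[[maybe_unused]] void debug_dump(const double* m, std::size_t n) {
    for (std::size_t i = 0; i < n * n; ++i) std::printf("%g ", m[i]);
    std::printf("\n");
}

}  // namespace

// The single exported entry point: C = A * B, all n x n, row-major.
extern "C" WASM_EXPORT void matrix_multiply(const double* a, const double* b,
                                            double* c, std::size_t n) {
    assert(a && b && c);
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            double sum = 0.0;
            for (std::size_t k = 0; k < n; ++k) sum += a[i * n + k] * b[k * n + j];
            c[i * n + j] = sum;
        }
    }
}
```

A build along the lines of `emcc matrix.cpp -O2 --no-entry -o matrix.wasm` produces a module without `main`. Listing exports with `-sEXPORTED_FUNCTIONS=_matrix_multiply` is an alternative to the `EMSCRIPTEN_KEEPALIVE` attribute.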

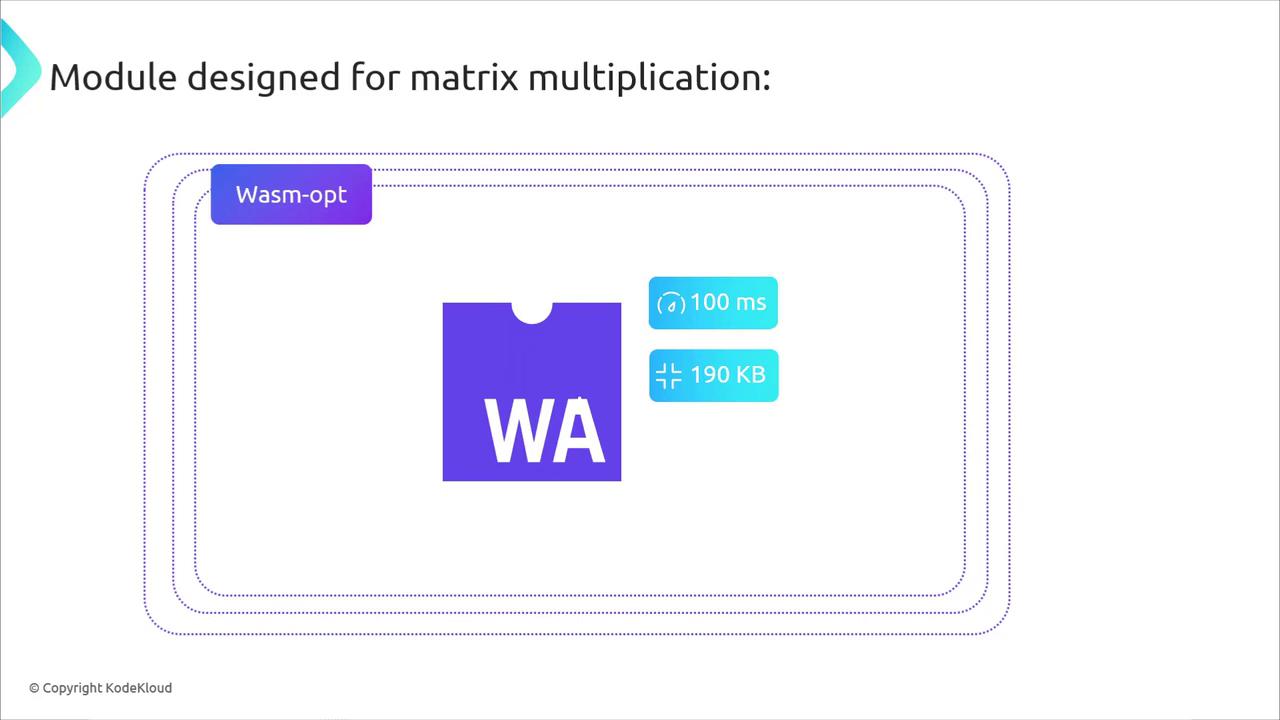

2. Post-Processing with wasm-opt

The Binaryen toolkit's wasm-opt rewrites the compiled binary with its own aggressive optimization passes. The `-Oz` level tells it to prioritize size:

```bash
wasm-opt -Oz -o matrix.opt.wasm matrix.dead.wasm
```

Result:

- Size: 400 KB → ~190 KB

- Runtime: 140 ms → 100 ms

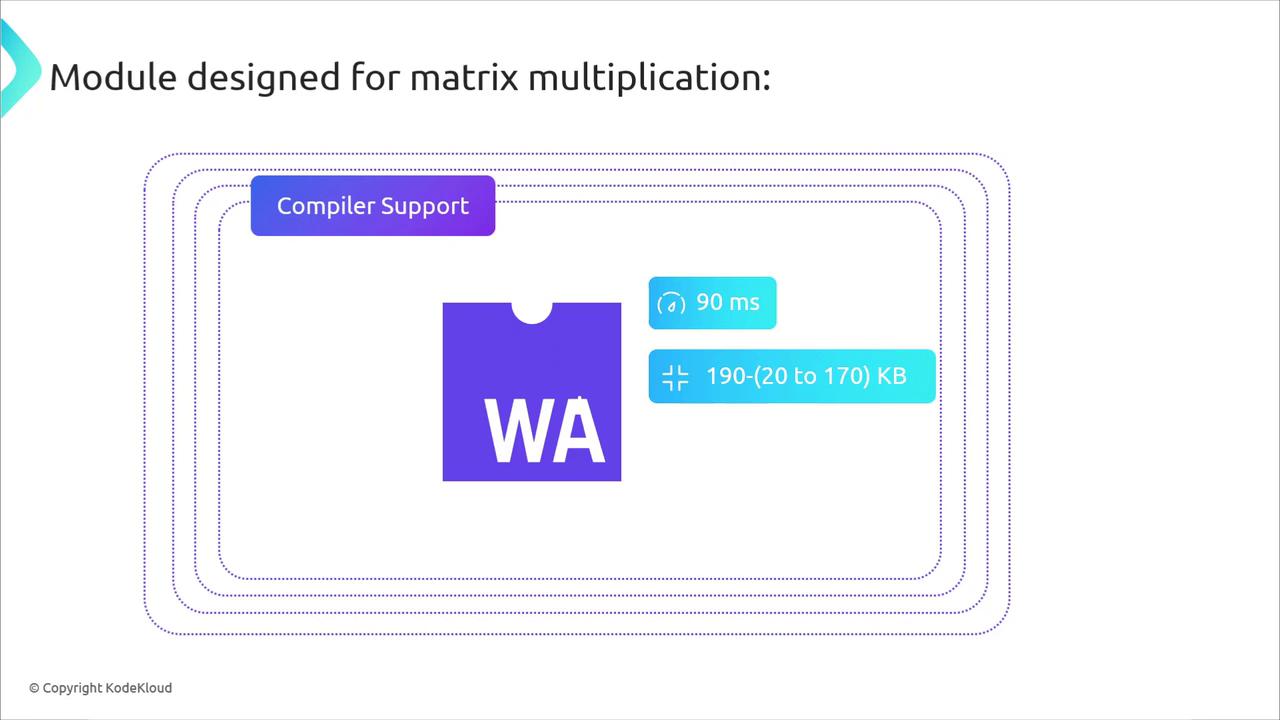

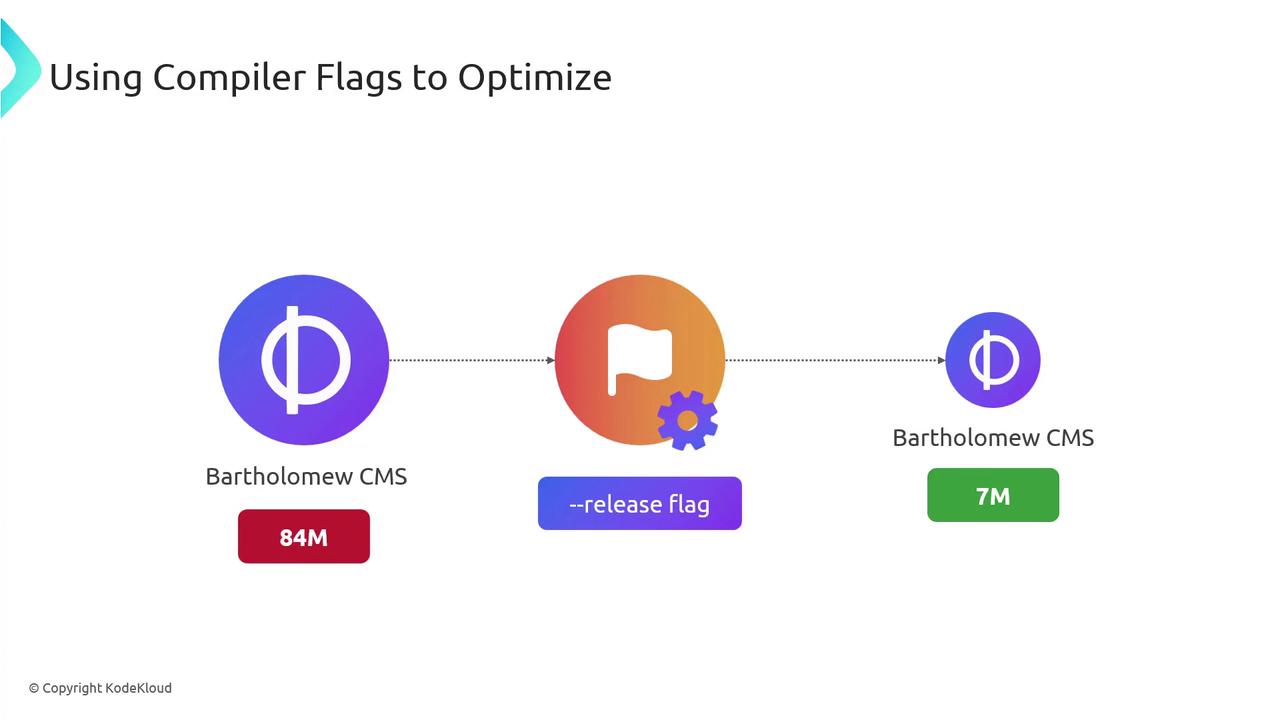

3. Compiler Flags for Maximum Speed

Recompiling the source with Emscripten at `-O3` and with link-time optimization (`-flto`) pushes performance further:

```bash
emcc matrix.cpp -O3 -flto -o matrix.emcc.wasm
```

- Size: 190 KB → 170 KB

- Runtime: 100 ms → 90 ms
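Flags work best when the code gives the optimizer something to work with. In this hedged sketch, the loops are reordered to i-k-j so that the innermost loop walks both `b` and `c` contiguously. That improves cache locality and is a shape clang's loop vectorizer handles well at `-O3`. To emit WASM SIMD instructions, clang and emcc also need `-msimd128`. The function name `matmul_ikj` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical cache-friendly variant: C = A * B, all n x n, row-major.
// The innermost j loop touches b and c at consecutive addresses.
// __restrict (a clang/GCC extension) promises the arrays do not overlap,
// which removes aliasing checks that can block vectorization.
void matmul_ikj(const double* __restrict a, const double* __restrict b,
                double* __restrict c, std::size_t n) {
    assert(a && b && c);
    for (std::size_t i = 0; i < n * n; ++i) c[i] = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t k = 0; k < n; ++k) {
            const double aik = a[i * n + k];  // reused across the whole j loop
            for (std::size_t j = 0; j < n; ++j) {
                c[i * n + j] += aik * b[k * n + j];
            }
        }
    }
}
```

A build line such as `emcc matrix.cpp -O3 -flto -msimd128 --no-entry -o matrix.emcc.wasm` combines the step 3 flags with SIMD. Whether it helps on a given runtime should be measured rather than assumed.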

4. Efficient Memory Management

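A WASM module's linear memory can grow but never shrink, so allocations that are never released stay reserved for the lifetime of the instance. Build a debug variant with AddressSanitizer (Emscripten supports `-fsanitize=address`) to catch leaks and out-of-bounds accesses.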

Warning

Always validate memory allocation and deallocation. Leaks in long-running modules can negate performance gains.

With the leaks fixed, runtime drops by about 5 ms:

- Size: unchanged at 170 KB

- Runtime: 90 ms → 85 ms
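This sketch shows one leak-resistant pattern, assuming the host (JavaScript or a runtime embedding) passes matrices in and out of linear memory. The module exports a matched allocate/free pair for host-owned buffers, and internal scratch space uses RAII containers so it is released automatically. The names `matrix_alloc`, `matrix_free`, and `matrix_square` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <vector>

#ifdef __EMSCRIPTEN__
#include <emscripten/emscripten.h>
#define WASM_EXPORT EMSCRIPTEN_KEEPALIVE
#else
#define WASM_EXPORT  // native builds: no export marker needed
#endif

extern "C" {

// Host-facing buffer of n x n doubles, zero-initialized. Every call must be
// paired with matrix_free, or the bytes stay reserved in linear memory.
WASM_EXPORT double* matrix_alloc(std::size_t n) {
    return static_cast<double*>(std::calloc(n * n, sizeof(double)));
}

WASM_EXPORT void matrix_free(double* m) { std::free(m); }

// out = a * a. The temporary is a std::vector, so it is freed when the
// function returns. Writing through tmp also lets out alias a safely.
WASM_EXPORT void matrix_square(const double* a, double* out, std::size_t n) {
    assert(a && out);
    std::vector<double> tmp(n * n, 0.0);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t k = 0; k < n; ++k)
            for (std::size_t j = 0; j < n; ++j)
                tmp[i * n + j] += a[i * n + k] * a[k * n + j];
    for (std::size_t i = 0; i < n * n; ++i) out[i] = tmp[i];
}

}  // extern "C"
```

Keeping ownership explicit at the export boundary, and automatic everywhere else, leaves the host with only one rule to follow: free what it allocates.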

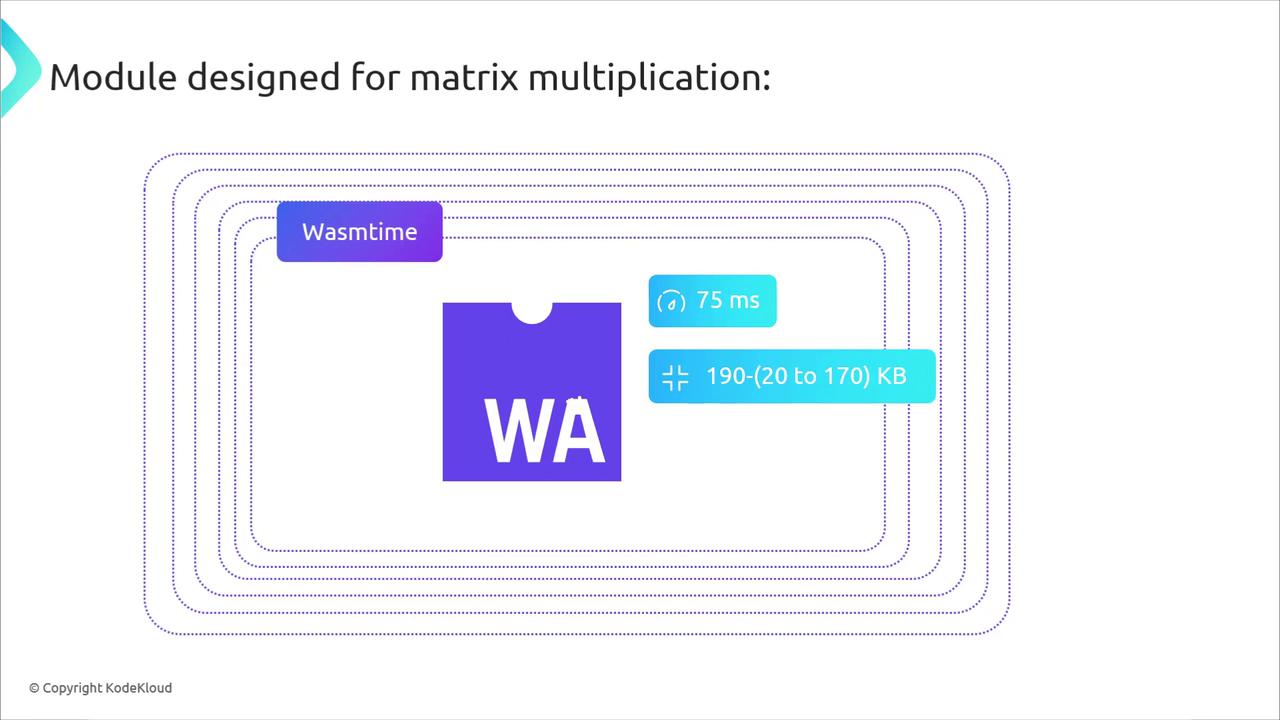

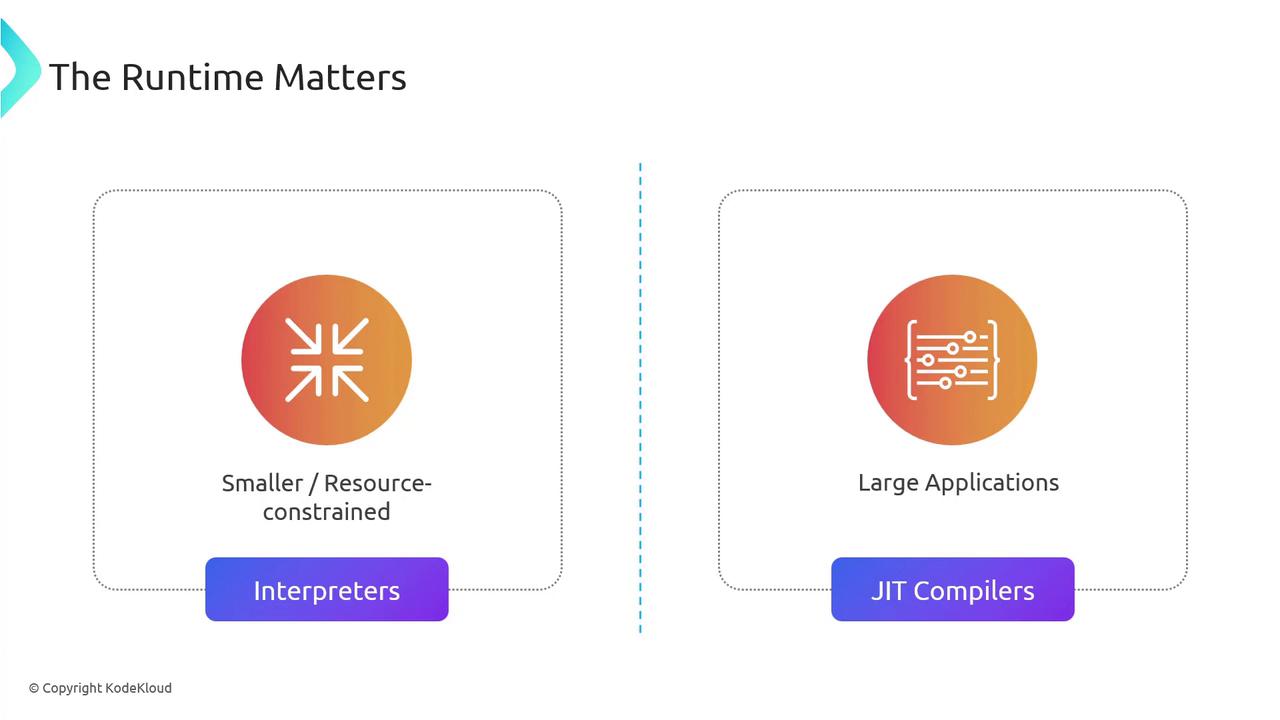

5. Runtime Selection: JIT vs. AOT

Choosing the right execution engine can yield major wins:

| Runtime Type | Examples | Runtime (this example) |
|---|---|---|
| JIT | Wasmer, Wasmtime | 85 ms → 75 ms |
| AOT | Wasmer AOT, Wasmtime AOT | 75 ms → 70 ms |

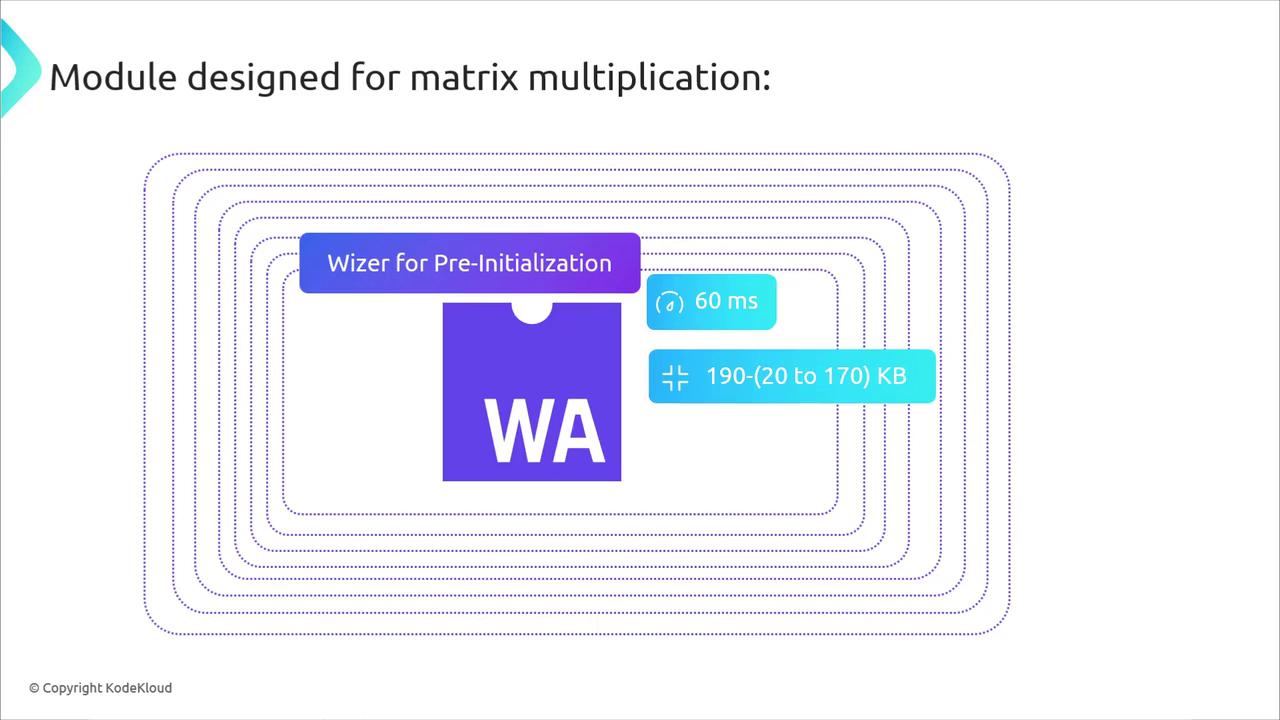

6. Pre-Initialization with Wizer

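Wizer runs a module's initialization code once at build time and snapshots the resulting state (linear memory and globals) into a new WASM binary. Each instance then starts already initialized instead of repeating that work. With pre-initialization:

- Cold-start time: 70 ms → 60 ms

- Size: unchanged at 170 KB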
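By default, Wizer calls an exported initialization function named `wizer.initialize` and snapshots the memory it leaves behind. In this minimal sketch, the expensive setup is assumed to be filling the input matrices. The names `init_inputs` and `result_entry`, the size `kN`, and the constant fill values are hypothetical placeholders. The `export_name` attribute is a clang feature for WebAssembly targets, so it is guarded to keep the file compilable natively.

```cpp
#include <cassert>
#include <cstddef>

#if defined(__wasm__)
#define EXPORT_AS(name) __attribute__((export_name(name)))
#else
#define EXPORT_AS(name)  // native builds: no export needed
#endif

constexpr std::size_t kN = 64;  // hypothetical fixed problem size

// Plain static arrays need no constructors, so values written during
// pre-initialization are not overwritten when an instance starts.
static double g_a[kN * kN];
static double g_b[kN * kN];
static bool g_ready = false;

// Setup that Wizer can run once at build time. The constant fill stands in
// for whatever expensive initialization a real module performs.
extern "C" EXPORT_AS("wizer.initialize") void init_inputs() {
    for (std::size_t i = 0; i < kN * kN; ++i) {
        g_a[i] = 1.0;
        g_b[i] = 2.0;
    }
    g_ready = true;
}

// Entry (i, j) of A * B. In a pre-initialized binary g_ready is already
// true, so setup is skipped; otherwise it falls back to initializing now.
extern "C" EXPORT_AS("result_entry") double result_entry(std::size_t i, std::size_t j) {
    assert(i < kN && j < kN);
    if (!g_ready) init_inputs();
    double sum = 0.0;
    for (std::size_t k = 0; k < kN; ++k) sum += g_a[i * kN + k] * g_b[k * kN + j];
    return sum;
}
```

After compiling to WASM, a command along the lines of `wizer matrix.wasm -o matrix.wizer.wasm` produces the snapshotted module. Check your Wizer version's help output for related options, such as allowing WASI calls during initialization.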

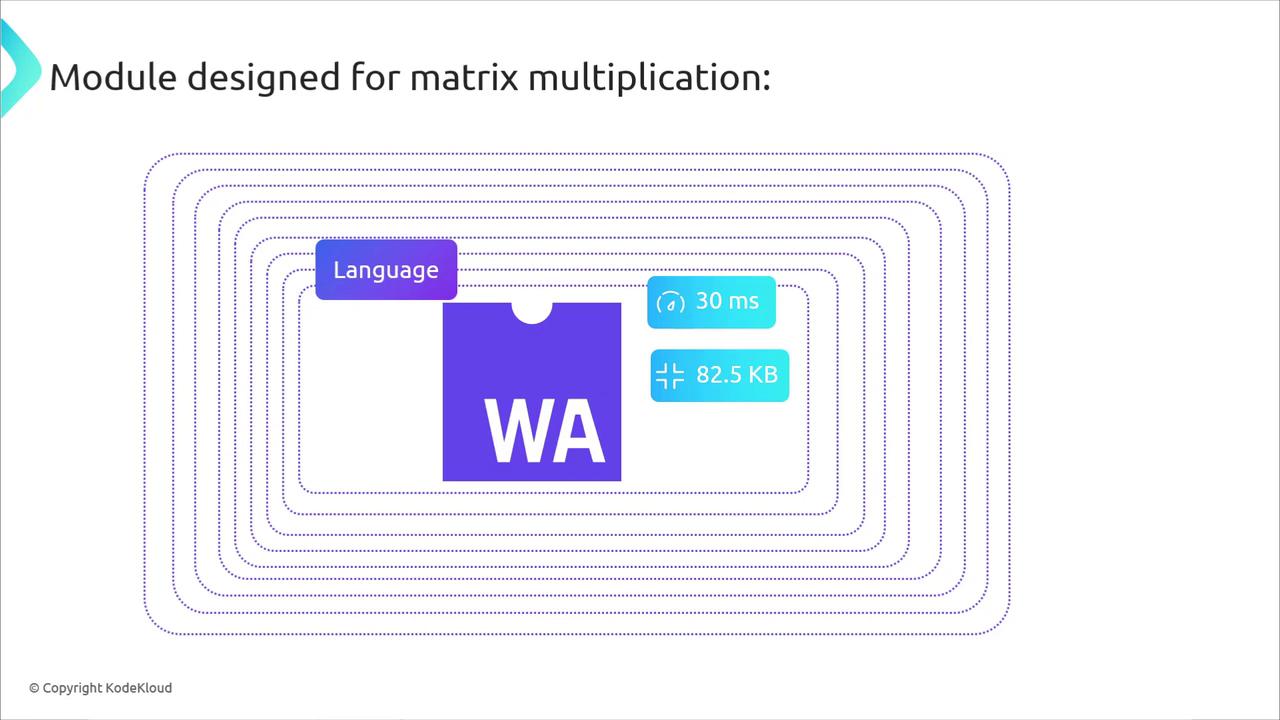

7. Language Choice: C++ vs. Rust

Switching to Rust can produce smaller, faster binaries. In a Fermyon study:

- Size: 170 KB → ~82.5 KB

- Runtime: 60 ms → 30 ms

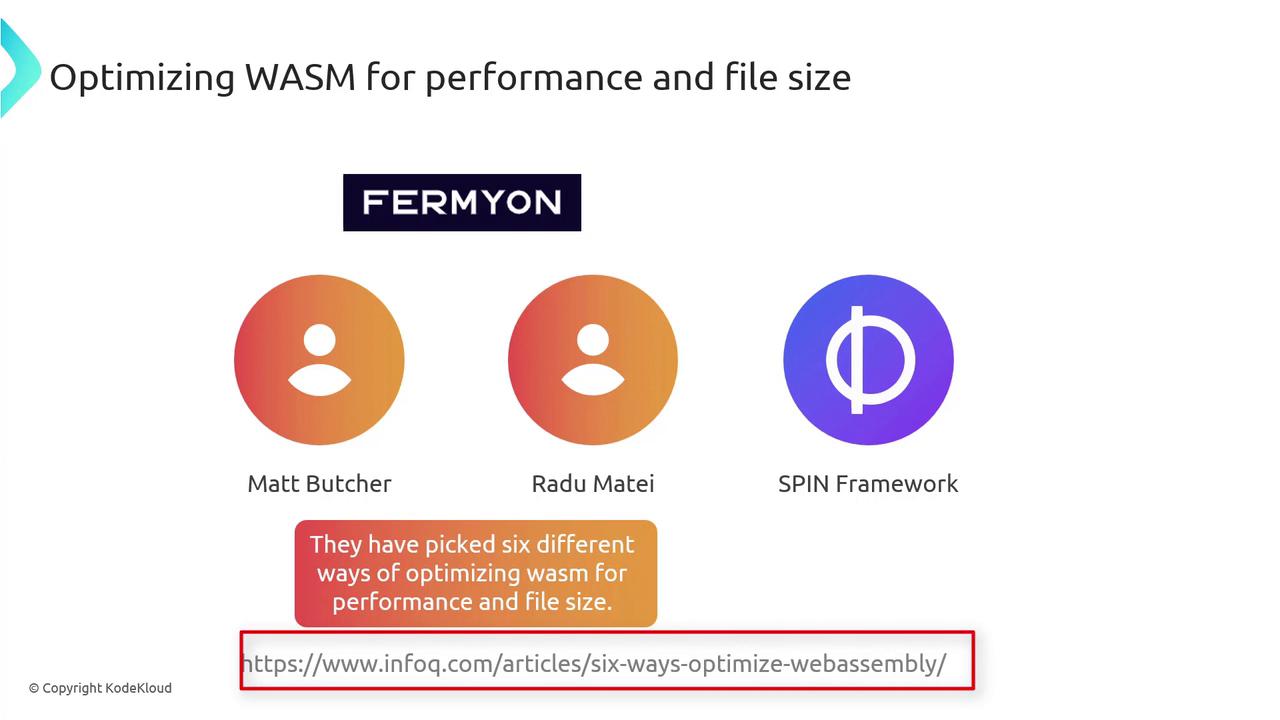
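Chaining the per-step figures above gives the overall effect of the seven steps on this example:

$$
\text{size reduction} = \frac{500\,\text{KB} - 82.5\,\text{KB}}{500\,\text{KB}} = 83.5\%,
\qquad
\text{speedup} = \frac{150\,\text{ms}}{30\,\text{ms}} = 5\times
$$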

Fermyon’s research (Matt Butcher, Radu Matei) evaluated six optimization dimensions for file size and speed. Their key insights:

| Factor | Benefit | Example |
|---|---|---|
| Programming Language | Smaller, faster binaries | Rust "Hello, world!" vs. Swift |
| Compiler Flags | Drastic size reduction (-O3, --release) | Bartholomew CMS: 84 MB → 7 MB |
| Post-Processing Tools | Additional compression | Rust binary: 9 MB → 4 MB |
| Runtime Choice | JIT for flexibility, AOT for speed | Interpreters vs. JIT compilers |
| AOT Compilation & Pre-Initialization | Lower startup overhead | WASI + Wizer pre-init |

For the full technical deep dive, see Fermyon’s blog post.

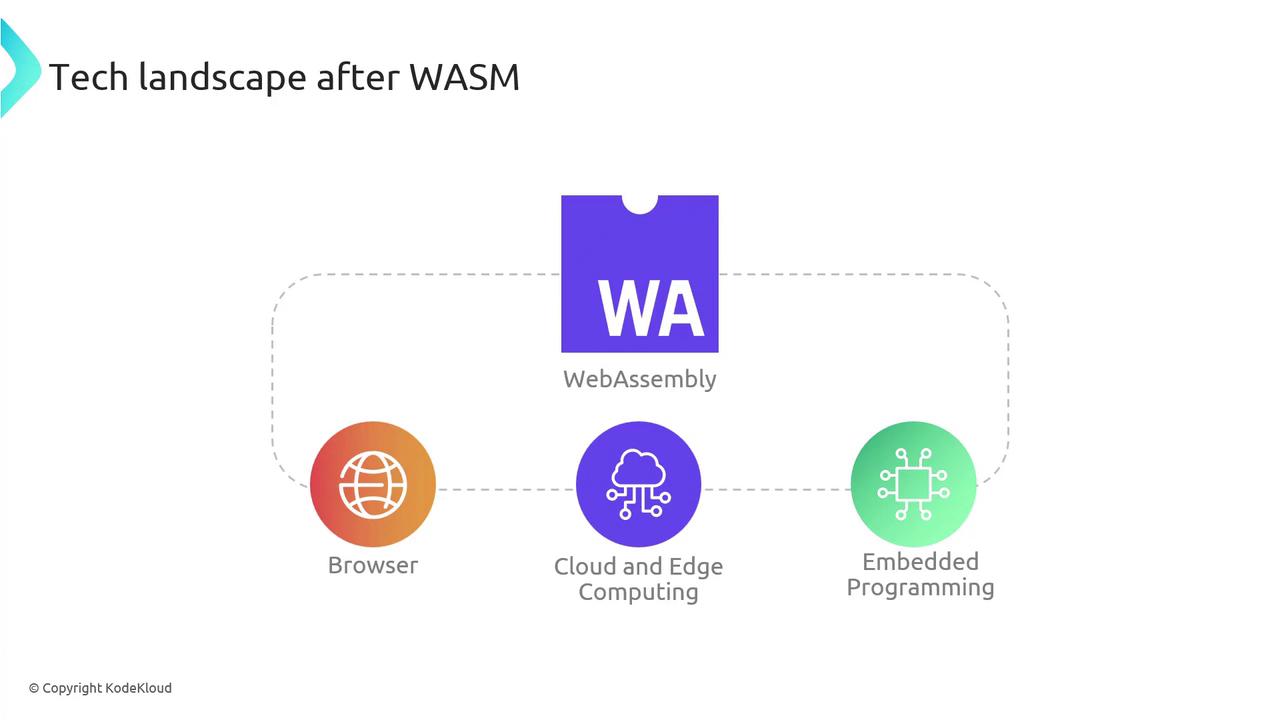

Optimizing WASM modules delivers fast load times, efficient resource usage, and better user experiences across browsers, cloud, edge, and embedded systems.

- Fast initialization is vital for web and serverless apps.

- Lower memory and CPU footprints empower mobile and IoT devices.

- Responsive performance boosts user satisfaction.

WebAssembly isn't just for browsers: it also powers plugins, edge compute, and embedded scenarios.

Effective WASM optimization ensures your modules are lean, fast, and ready for any environment.
