World of CPUs and GPUs

Welcome back! In this article, we examine the contrasting architectures of CPUs and GPUs, explore their strengths, and explain how they complement each other in machine learning workloads. Data scientists frequently ask for more CPUs or more GPUs, but what does each actually provide? Let’s break it down.

Challenges in Modern Computing

Modern computing environments face four significant hurdles:

- Model Computation: Training requires high-performance processors to handle heavily iterative calculations.

- Large Datasets: The ever-growing volume of data that must be processed quickly.

- Long Training Times: Machine learning models can require days or even weeks to train.

- Complex Deep Learning Models: Increasingly sophisticated models demand both speed and efficiency.

These challenges expose the limitations of traditional CPU-based systems. CPUs are versatile, but their design, optimized for sequential processing, makes them less suited to the highly parallel workloads of modern machine learning. This is where GPUs shine.
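A small Python sketch can make the sequential-versus-parallel distinction concrete. The function names and the toy loss f(w) = (w - 3)^2 are hypothetical, chosen only for illustration: `descent_steps` runs gradient descent, where every step waits on the one before it, while `relu_all` applies the same operation to each element independently, which is the kind of work a GPU can spread across many cores.

```python
from typing import List

def descent_steps(w: float, lr: float, steps: int) -> List[float]:
    """Gradient descent on the toy loss f(w) = (w - 3)^2, gradient 2 * (w - 3).
    Each update needs the weight produced by the previous update, so the
    steps form a chain that has to run in order (sequential work)."""
    history = []
    for _ in range(steps):
        w = w - lr * 2.0 * (w - 3.0)  # uses the w from the previous step
        history.append(w)
    return history

def relu_all(values: List[float]) -> List[float]:
    """ReLU applied element by element. Each output depends only on its
    own input, so the elements could be processed in any order, or all
    at the same time (parallel work)."""
    return [max(0.0, v) for v in values]

print(descent_steps(0.0, 0.25, 3))       # [1.5, 2.25, 2.625]
print(relu_all([-1.0, 2.0, -3.0, 4.0]))  # [0.0, 2.0, 0.0, 4.0]
```

Real training combines both patterns: optimizer steps run in sequence, but the arithmetic inside each step, spread over many parameters and samples, is largely independent, and that inner work is what GPUs accelerate.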

Key Differences Between CPUs and GPUs

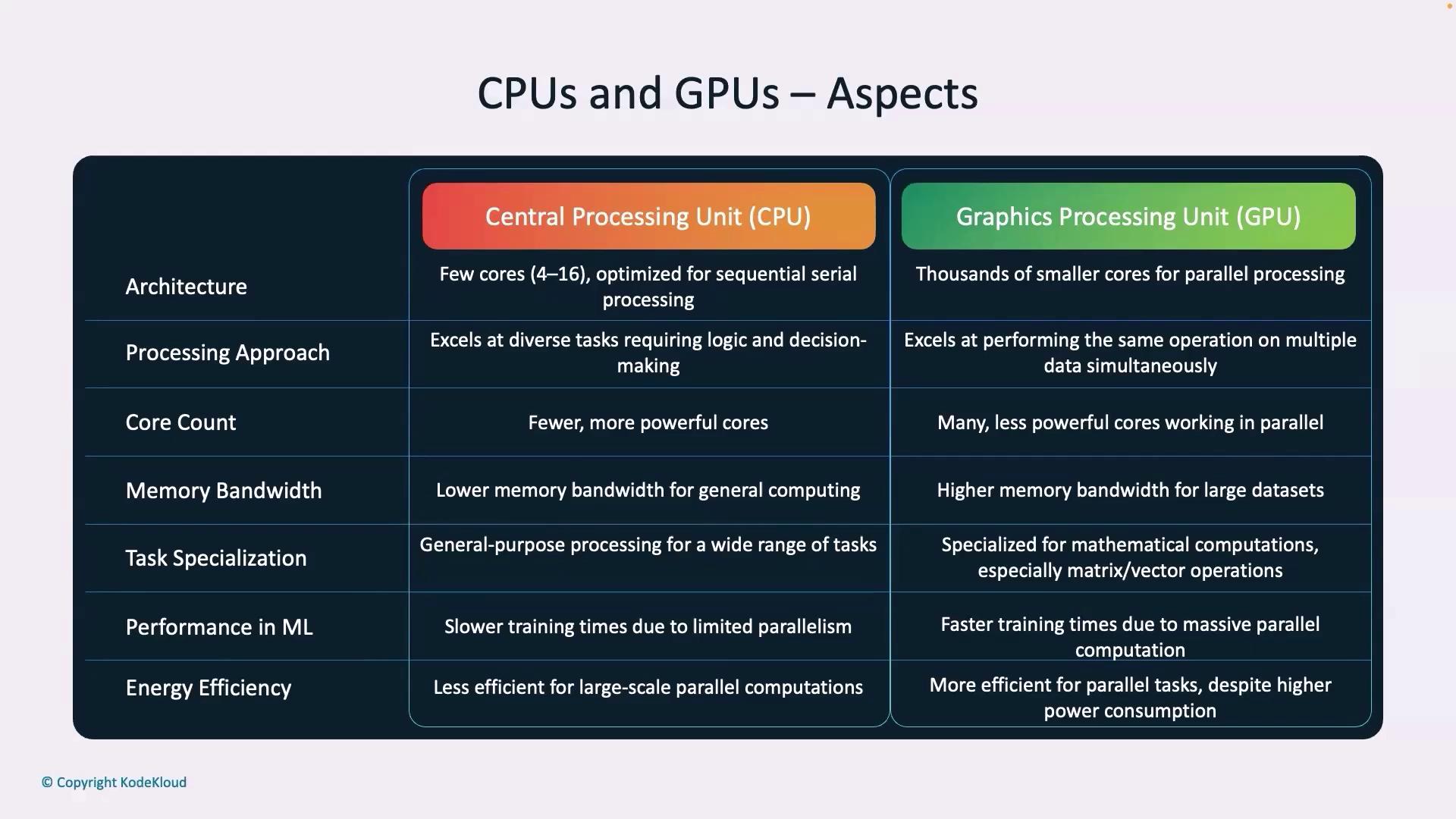

Understanding the differences is essential. Below are six critical aspects that set CPUs and GPUs apart:

1. Architecture

- CPUs: Packed with a few powerful cores optimized for sequential processing.

- GPUs: Contain thousands of smaller cores designed for parallel processing.

2. Processing Approach

- CPUs: Excel at tasks that involve complex logic and branching decisions, which makes them ideal for general-purpose processing.

- GPUs: Specialize in performing the same operation across multiple data points simultaneously, a feature vital for machine learning and image processing.
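GPU programming models such as CUDA typically express "the same operation across many data points" as a kernel: one small function that every thread runs on its own index. The Python sketch below only mimics that pattern on a CPU with a thread pool; `saxpy_kernel` and `launch` are hypothetical names, and in CPython this will not run faster, since it illustrates the structure rather than the speed.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

def saxpy_kernel(i: int, a: float, x: List[float], y: List[float], out: List[float]) -> None:
    """The same operation applied to one data point: out[i] = a * x[i] + y[i].
    Every index runs identical instructions; only the data differs."""
    out[i] = a * x[i] + y[i]

def launch(kernel: Callable[..., None], n: int, *args) -> None:
    """Hypothetical launcher: run the kernel once per index, concurrently.
    A GPU would map each index to its own hardware thread."""
    with ThreadPoolExecutor(max_workers=8) as pool:
        # list() waits for every task and re-raises any exception.
        list(pool.map(lambda i: kernel(i, *args), range(n)))

x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)
launch(saxpy_kernel, len(x), 2.0, x, y, out)
print(out)  # [12.0, 24.0, 36.0, 48.0]
```

Because each call writes only its own `out[i]`, the calls never interfere with one another, which is exactly the property that lets thousands of GPU cores work at once.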

3. Core Count

- CPUs: Feature fewer but more powerful cores.

- GPUs: Utilize numerous less powerful cores to handle large datasets through massive parallelism.

4. Memory Bandwidth

- CPUs: Offer lower memory bandwidth, which is sufficient for everyday computing tasks.

- GPUs: Provide higher memory bandwidth, making them well suited to the data-heavy computations of machine learning.
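Bandwidth sets a floor on how quickly data can be streamed from memory: time is at least bytes moved divided by bandwidth. The sketch below applies that formula; the workload size and both bandwidth figures are illustrative assumptions, not specifications of any particular CPU or GPU, so substitute the datasheet numbers for your own hardware.

```python
def transfer_time_s(num_bytes: float, bandwidth_gb_per_s: float) -> float:
    """Lower bound on the time to stream num_bytes through memory once,
    given bandwidth in gigabytes per second (1 GB = 1e9 bytes)."""
    return num_bytes / (bandwidth_gb_per_s * 1e9)

# Hypothetical workload: 1 billion float32 parameters (4 bytes each), read once.
weights_bytes = 1_000_000_000 * 4

# Illustrative bandwidth figures only (assumed, not measured).
cpu_bw = 50.0    # GB/s
gpu_bw = 1000.0  # GB/s

print(f"CPU: {transfer_time_s(weights_bytes, cpu_bw):.3f} s")  # CPU: 0.080 s
print(f"GPU: {transfer_time_s(weights_bytes, gpu_bw):.3f} s")  # GPU: 0.004 s
```

This ignores caches and compute time, so it is only a lower bound for memory-bound work; a training step reads weights and activations repeatedly, so the per-pass difference adds up.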

5. Task Specialization

- CPUs: Capable of managing a wide range of logic-intensive tasks.

- GPUs: Optimized for mathematical operations, vector computations, and other functions that underpin many machine learning algorithms.
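Much of the arithmetic in neural network training reduces to matrix multiplication, for example a dense layer multiplying a batch of inputs by a weight matrix. The plain-Python sketch below (the `matmul` name is ours, not a library API) shows why this suits a GPU: every output cell is an independent dot product, so all of them can be computed at the same time.

```python
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """C = A @ B using the textbook triple loop.
    Each C[i][j] is the dot product of row i of A and column j of B and
    does not depend on any other cell of C, so every cell could be
    assigned to its own GPU thread."""
    n, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    m = len(b[0])
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
        for i in range(n)
    ]

# A tiny dense layer: 2 samples x 3 features, times a 3 x 2 weight matrix.
x = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
w = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
print(matmul(x, w))  # [[4.0, 5.0], [10.0, 11.0]]
```

GPU libraries implement the same operation with tiling and hardware-specific tricks, but the independence of the output cells is what they all exploit.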

6. Efficiency in Training

- CPUs: Their limited parallel processing can result in longer training times for machine learning models.

- GPUs: Their architecture enables significantly reduced training times, although they do come with a higher cost and challenges in provisioning due to limited availability.

Note

When data scientists request additional GPUs, it’s crucial to weigh the performance benefits against the higher cost and provisioning challenges.
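One way to weigh that trade-off is a back-of-the-envelope cost per training run: hours needed multiplied by the hourly price. In the sketch below, `HardwareOption` is a hypothetical helper and every number is a placeholder (neither the speedup nor the prices describe real hardware or a real price list); plug in your own measurements.

```python
from dataclasses import dataclass

@dataclass
class HardwareOption:
    name: str
    hours_per_run: float   # measured or estimated wall-clock training time
    price_per_hour: float  # machine cost per hour, in any currency

    def cost_per_run(self) -> float:
        return self.hours_per_run * self.price_per_hour

# Hypothetical inputs: a job that takes 40 h on a CPU machine and 4 h on a GPU one.
cpu = HardwareOption("cpu", hours_per_run=40.0, price_per_hour=1.0)
gpu = HardwareOption("gpu", hours_per_run=4.0, price_per_hour=6.0)

for opt in (cpu, gpu):
    print(f"{opt.name}: {opt.hours_per_run:.0f} h, cost {opt.cost_per_run():.2f}")
# cpu: 40 h, cost 40.00
# gpu: 4 h, cost 24.00
```

In this made-up case the GPU is both faster and cheaper per run; with a smaller speedup or a higher price the comparison could flip, and the estimate ignores any time spent waiting for GPU capacity to become available.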

Detailed Comparison Table

| Feature | CPU | GPU |
|---|---|---|
| Architecture | Few powerful cores for sequential tasks | Thousands of smaller cores for parallel tasks |
| Processing Approach | Excels at logic-intensive processing | Excels at applying one operation to many data points at once |
| Core Count | Fewer, more powerful cores | Many (often thousands of) less powerful cores |
| Memory Bandwidth | Lower | Higher, suited to large-scale computation |
| Task Specialization | General-purpose computing | Mathematical and vector operations |
| Efficiency in Training | Slower training times | Significantly reduced training times |

Conclusion

CPUs and GPUs are designed for different jobs. CPUs handle a wide range of logic-intensive processes, while GPUs excel at the highly parallel processing that machine learning model training demands. Choosing the right processor at each stage of the MLOps lifecycle is critical to achieving good performance.

Thank you for reading! We look forward to continuing our discussion on cutting-edge computational technologies.
