GCP Cloud Digital Leader Certification

Google Cloud's solutions for machine learning and AI

Use Case for Big Data

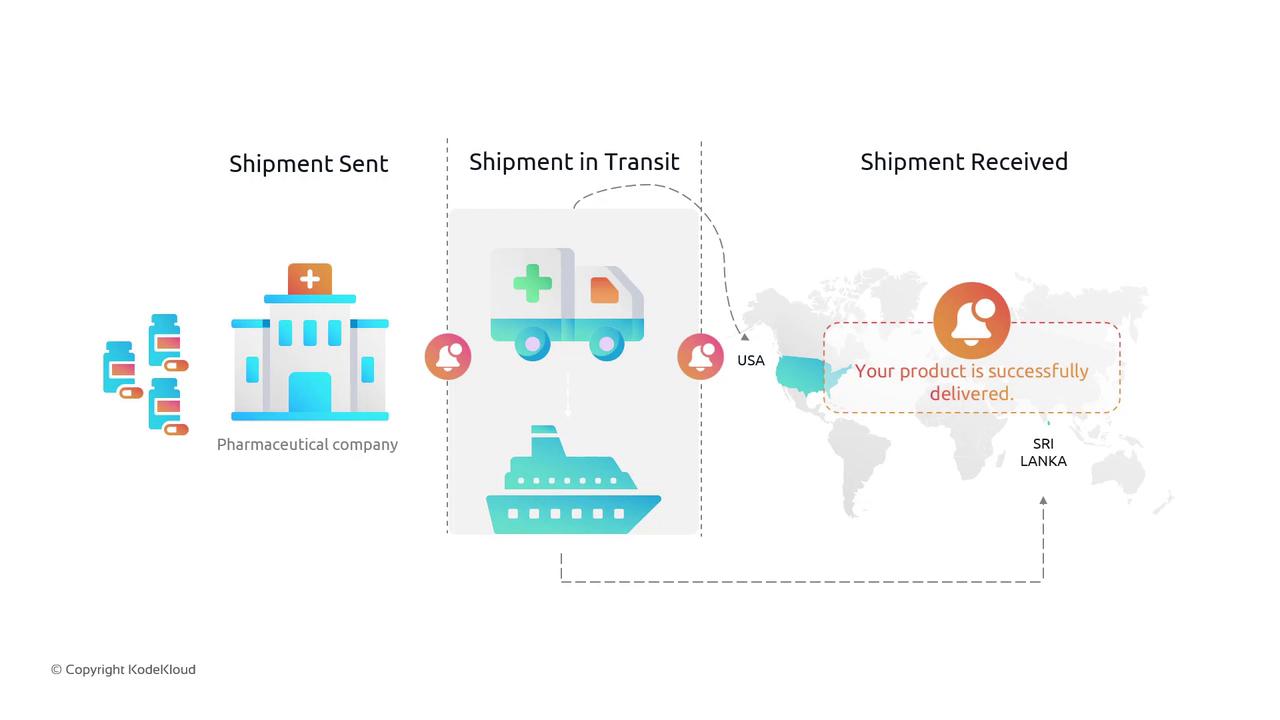

Hello and welcome back! In this lesson, we explore how a pharmaceutical company leverages big data using Google Cloud Platform (GCP) to optimize its shipment and delivery processes. This real-time data processing workflow enhances logistics efficiency and improves customer experience.

Business Process Overview

In our scenario, the pharmaceutical company distributes various drugs, prescription tablets, and health-related products. The shipment process from the warehouse to pharmacies includes several critical steps:

- A notification is sent to the pharmacy when a shipment is initiated.

- Pharmacies can track the order’s location during transit.

- A confirmation notification is sent upon successful delivery.

This end-to-end process demonstrates a real-time application where continuous monitoring and prompt notifications are essential for operational excellence.
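The shipment flow described above can be sketched as a small state machine: a shipment moves from shipped to in transit to delivered, and only the first and last transitions notify the pharmacy. This is a minimal illustration under assumed names; the status values (`SHIPPED`, `IN_TRANSIT`, `DELIVERED`) and the `notify_for` helper are hypothetical, not the company's actual system.

```python
from enum import Enum
from typing import Dict, Optional, Set


class Status(Enum):
    # Hypothetical lifecycle states for a single shipment.
    SHIPPED = "SHIPPED"
    IN_TRANSIT = "IN_TRANSIT"
    DELIVERED = "DELIVERED"


# Allowed transitions. None means "no event seen yet". Location updates may
# repeat while in transit; nothing may follow delivery.
ALLOWED: Dict[Optional[Status], Set[Status]] = {
    None: {Status.SHIPPED},
    Status.SHIPPED: {Status.IN_TRANSIT, Status.DELIVERED},
    Status.IN_TRANSIT: {Status.IN_TRANSIT, Status.DELIVERED},
    Status.DELIVERED: set(),
}


def notify_for(previous: Optional[Status], current: Status, order_id: str) -> Optional[str]:
    """Return the pharmacy notification for a transition, or None if none is sent."""
    if current not in ALLOWED[previous]:
        raise ValueError(f"invalid transition {previous} -> {current} for {order_id}")
    if current is Status.SHIPPED:
        return f"Order {order_id} has left the warehouse."
    if current is Status.DELIVERED:
        return f"Order {order_id} was delivered."
    # IN_TRANSIT events feed the tracking view but send no notification.
    return None
```

Rejecting invalid transitions (for example, a "shipped" event arriving after delivery) keeps a late or duplicated event from sending a misleading notification.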

By capturing data across the entire shipment journey, the company collects a vast volume of diverse information. This data is then transformed into actionable insights that help refine shipment strategies and boost overall efficiency.

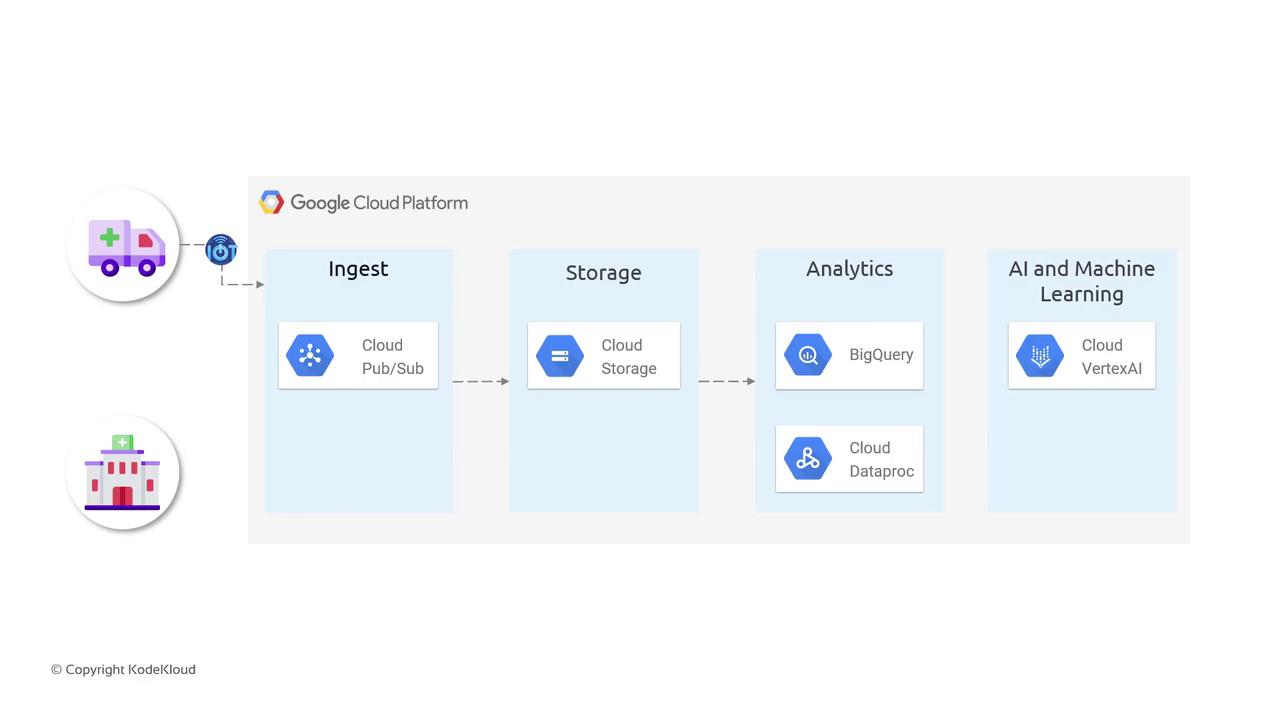

Integrating Google Cloud Services

GCP’s suite of services plays a central role in addressing the big data challenges in this scenario. Below is an overview of each service and its role in the data pipeline:

Data Ingestion with Pub/Sub

Immediately after a product leaves the warehouse, data is ingested via Internet of Things (IoT) devices into Cloud Pub/Sub. This service streams real-time events by publishing shipment data to a dedicated topic, ensuring that every event is captured as it occurs.
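To make the publish step concrete, here is a minimal in-memory stand-in for a Pub/Sub topic; it does not call Google Cloud. It mirrors two properties of the real service: a message payload is raw bytes with optional string attributes, and every subscription attached to a topic receives its own copy of each message published after the subscription exists. The class, the `publish_scan` helper, the subscription names, and the JSON payload fields are all hypothetical. With the real `google-cloud-pubsub` client, the corresponding call is `pubsub_v1.PublisherClient().publish(topic_path, data, **attributes)`, which returns a future.

```python
import json
from collections import deque
from typing import Deque, Dict, List, Tuple

# A message is (payload bytes, string attributes), as in Pub/Sub.
Message = Tuple[bytes, Dict[str, str]]


class InMemoryTopic:
    """Toy stand-in for a Pub/Sub topic with fan-out to every subscription."""

    def __init__(self) -> None:
        self.subscriptions: Dict[str, Deque[Message]] = {}

    def subscribe(self, name: str) -> None:
        # Only messages published after this call reach the new subscription.
        self.subscriptions[name] = deque()

    def publish(self, data: bytes, **attributes: str) -> None:
        if not isinstance(data, bytes):
            raise TypeError("message data must be bytes")
        if not all(isinstance(v, str) for v in attributes.values()):
            raise TypeError("attribute values must be strings")
        for queue in self.subscriptions.values():
            queue.append((data, dict(attributes)))

    def pull(self, name: str) -> List[Message]:
        """Return and remove every pending message for one subscription."""
        queue = self.subscriptions[name]
        messages = list(queue)
        queue.clear()
        return messages


def publish_scan(topic: InMemoryTopic, shipment_id: str, lat: float, lon: float, status: str) -> None:
    # Hypothetical IoT reading, JSON-encoded because payloads are raw bytes.
    payload = json.dumps({"shipment_id": shipment_id, "lat": lat, "lon": lon, "status": status})
    topic.publish(payload.encode("utf-8"), shipment_id=shipment_id, status=status)
```

Because each subscription keeps its own queue, a storage writer and a live tracking service can consume the same shipment events independently.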

Data Storage with Cloud Storage

Once ingested, the data is securely stored in Cloud Storage. This cost-effective object storage solution holds vast amounts of shipment data, serving as a reliable repository for subsequent analysis and processing.
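One common way to organize raw events in a bucket is to batch them into newline-delimited JSON objects under date-partitioned names, a layout BigQuery can later load or query as external data. The sketch below assumes that layout and a hypothetical `shipments/dt=YYYY-MM-DD/part-NNNNN.json` naming scheme, and uses a plain dictionary in place of a real bucket. With the `google-cloud-storage` client, each write would instead look something like `bucket.blob(name).upload_from_string(body)`.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List


def utc_day(ts: int) -> str:
    """Format a Unix timestamp (seconds) as a UTC calendar date."""
    return datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%Y-%m-%d")


def object_name(day: str, part: int, prefix: str = "shipments") -> str:
    """Hypothetical layout: <prefix>/dt=<YYYY-MM-DD>/part-<NNNNN>.json"""
    return f"{prefix}/dt={day}/part-{part:05d}.json"


def write_batches(bucket: Dict[str, bytes], events: List[dict], batch_size: int = 2) -> None:
    """Group events by UTC day and store each batch as one newline-delimited JSON object.

    `bucket` is a plain dict standing in for a Cloud Storage bucket (object name -> bytes).
    """
    by_day: Dict[str, List[dict]] = {}
    for event in events:
        by_day.setdefault(utc_day(event["ts"]), []).append(event)
    for day, day_events in by_day.items():
        for part, start in enumerate(range(0, len(day_events), batch_size)):
            batch = day_events[start:start + batch_size]
            body = "".join(json.dumps(e, sort_keys=True) + "\n" for e in batch)
            bucket[object_name(day, part)] = body.encode("utf-8")
```

Part numbers restart on every call, so repeated runs would overwrite earlier objects; a real job would likely add something unique, such as a run ID, to each object name.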

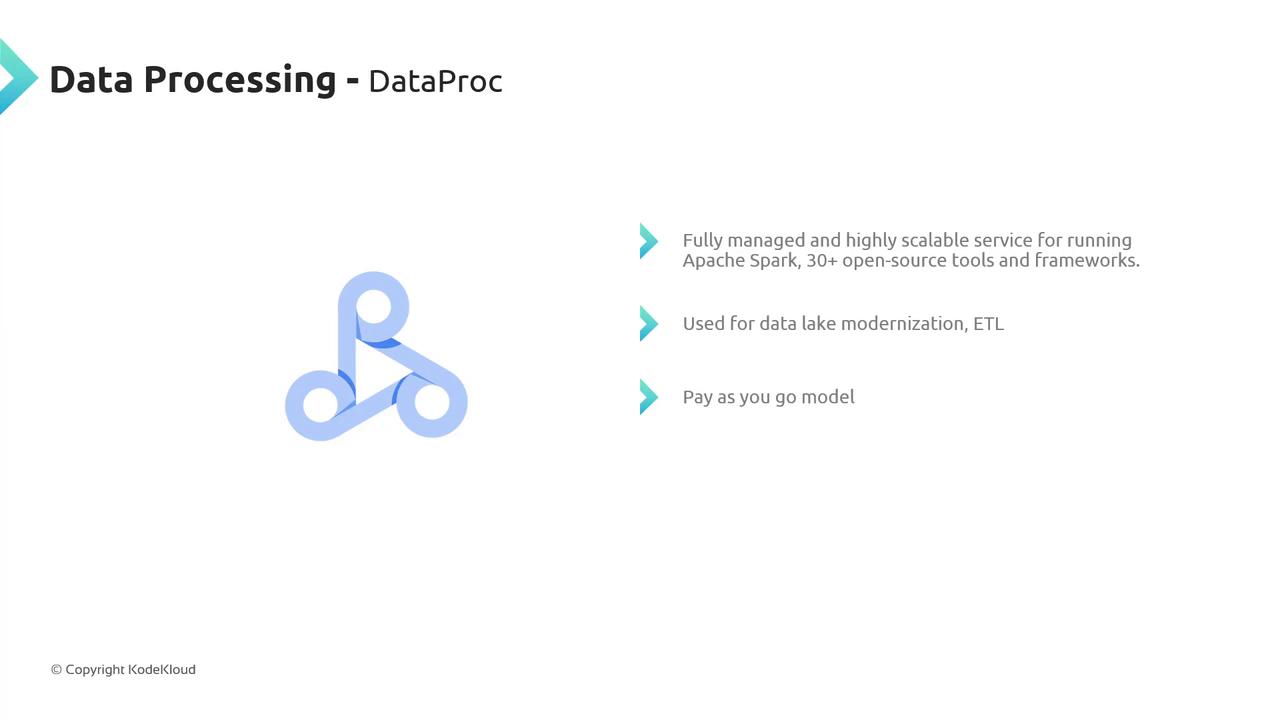

Data Processing with Dataproc

With data at this volume, the raw records must be processed before they can be analyzed. Cloud Dataproc, a fully managed service that supports more than 30 open-source tools and frameworks (including Apache Spark and Apache Flink), cleans and transforms the raw data. This ETL (extract, transform, load) step scales with the data volume and stays cost-efficient under Dataproc's pay-as-you-go pricing.
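On Dataproc this cleaning would typically run as a Spark job; the plain-Python sketch below shows only the kind of rules such a job might apply, not a Dataproc API. The field names (`event_id`, `shipment_id`, `status`, `ts`) and the set of valid statuses are assumptions. Deduplication by `event_id` is included because Pub/Sub delivers messages at least once, so the same event can arrive more than once.

```python
from typing import Dict, Iterable, List

# Hypothetical schema for a shipment event.
REQUIRED = ("event_id", "shipment_id", "status", "ts")
VALID_STATUSES = {"SHIPPED", "IN_TRANSIT", "DELIVERED"}


def clean_events(raw: Iterable[Dict]) -> List[Dict]:
    """Drop malformed or duplicate records, normalize fields, and sort per shipment."""
    seen = set()
    cleaned = []
    for record in raw:
        # Skip records with a missing or empty required field.
        if any(record.get(field) in (None, "") for field in REQUIRED):
            continue
        status = str(record["status"]).strip().upper()
        if status not in VALID_STATUSES:
            continue
        try:
            ts = int(record["ts"])  # timestamps may arrive as strings
        except (TypeError, ValueError):
            continue
        # At-least-once delivery can repeat an event; keep the first copy.
        if record["event_id"] in seen:
            continue
        seen.add(record["event_id"])
        cleaned.append({
            "event_id": record["event_id"],
            "shipment_id": record["shipment_id"],
            "status": status,
            "ts": ts,
        })
    # Order events chronologically within each shipment.
    return sorted(cleaned, key=lambda e: (e["shipment_id"], e["ts"]))
```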

Analytics with BigQuery

The processed data is then analyzed in BigQuery. Whether the data is loaded into BigQuery tables or queried directly from Cloud Storage as an external source, this data warehouse supports fast, efficient exploration of large datasets and data-driven decision-making.
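As an illustration of the kind of question BigQuery answers here, the sketch below computes average transit time per pharmacy. It swaps in Python's built-in `sqlite3` with an in-memory database so it runs locally; it is not BigQuery. The table name, columns, and Unix-second timestamps are assumptions. The SQL is plain enough that a similar statement would run in BigQuery, where the table would be referenced as `project.dataset.table` and timestamps would more likely be `TIMESTAMP` columns.

```python
import sqlite3
from typing import List, Optional, Tuple

# Average hours between shipping and delivery, per pharmacy (undelivered rows excluded).
QUERY = """
SELECT pharmacy_id,
       COUNT(*) AS deliveries,
       ROUND(AVG((delivered_ts - shipped_ts) / 3600.0), 2) AS avg_transit_hours
FROM shipments
WHERE delivered_ts IS NOT NULL
GROUP BY pharmacy_id
ORDER BY avg_transit_hours DESC
"""


def transit_report(rows: List[Tuple[str, str, int, Optional[int]]]) -> List[Tuple[str, int, float]]:
    """Load (shipment_id, pharmacy_id, shipped_ts, delivered_ts) rows and run QUERY."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE shipments ("
            "shipment_id TEXT, pharmacy_id TEXT, shipped_ts INTEGER, delivered_ts INTEGER)"
        )
        conn.executemany("INSERT INTO shipments VALUES (?, ?, ?, ?)", rows)
        return conn.execute(QUERY).fetchall()
    finally:
        conn.close()
```

Sorting by the slowest average first surfaces the pharmacies whose deliveries most need attention.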

AI and Machine Learning with Vertex AI

The final step applies artificial intelligence to derive predictive insights. Vertex AI is an end-to-end platform for developing, training, testing, and deploying machine learning models. It supports multiple frameworks, such as TensorFlow and scikit-learn, making it a versatile tool for data scientists and machine learning engineers.
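Vertex AI is a managed platform and cannot be reproduced in a few lines, so the sketch below is only a toy stand-in for the kind of predictive model that might be trained there: it fits hours in transit against route distance with ordinary least squares. The feature choice and the `fit_line` and `predict` names are hypothetical; a real project would more likely train a scikit-learn or TensorFlow model on the BigQuery data and deploy it through Vertex AI.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict(model: Tuple[float, float], x: float) -> float:
    """Predicted transit hours for a route of length x (hypothetical feature)."""
    slope, intercept = model
    return slope * x + intercept
```

For example, fitting distances of 10, 20, 30, and 40 km against 1.5, 2.5, 3.5, and 4.5 hours gives a slope of about 0.1 hours per km and an intercept of about 0.5 hours.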

Summary of the Data Pipeline

- Data Ingestion: Real-time events are streamed via Pub/Sub as soon as the shipment process starts.

- Data Storage: Cloud Storage holds the extensive shipment data securely.

- Data Processing: Dataproc processes and cleans the raw data for better analysis.

- Data Analysis: BigQuery allows for quick querying and deep insights.

- AI/ML Deployment: Vertex AI facilitates the development and deployment of machine learning models.

Key Takeaway

Google Cloud Platform services collectively establish a robust and scalable data pipeline that effectively addresses the big data challenges encountered during the pharmaceutical shipment process. This seamless integration not only supports real-time monitoring but also drives strategic decision-making through advanced analytics and AI.

In summary, GCP provides a comprehensive suite of services that enable real-time data ingestion, efficient storage, streamlined processing, and advanced analytics. This integrated solution optimizes shipment tracking and lays the groundwork for deploying AI and machine learning models to further enhance operational decision-making.

That concludes our lesson on leveraging GCP for big data applications in the pharmaceutical industry. Thank you for reading, and we look forward to exploring more topics in the next lesson.
