GCP Cloud Digital Leader Certification

Google Cloud's solutions for machine learning and AI

Demo: Pub/Sub

Welcome to this lesson on integrating and managing Pub/Sub within Google Cloud Platform (GCP). You will learn how to create a Pub/Sub topic, publish JSON messages to it, and retrieve them through a subscription. We will also look at how Pub/Sub connects to services like BigQuery and Cloud Storage for real-time analytics and batch data processing.

Accessing the Pub/Sub Interface

After logging into your GCP console and confirming you are in the desired project, follow these steps to access the Pub/Sub interface:

- Enter "Pub/Sub" in the search bar and select it.

- If prompted to enable the API, proceed with the activation (this demo assumes the API is already enabled).

On the left-hand side of the Pub/Sub console, you will see several options:

- Topics: Named channels to which publishers send messages. A topic holds incoming data only temporarily, until subscriptions deliver it.

- Subscriptions: Connect to a topic to read the data and allow for further processing by other applications.

- Snapshots: Capture the acknowledgment state of a subscription at a point in time so that messages can be replayed later.

- Schemas: (Not covered in this lesson) Define the structure for your messages.

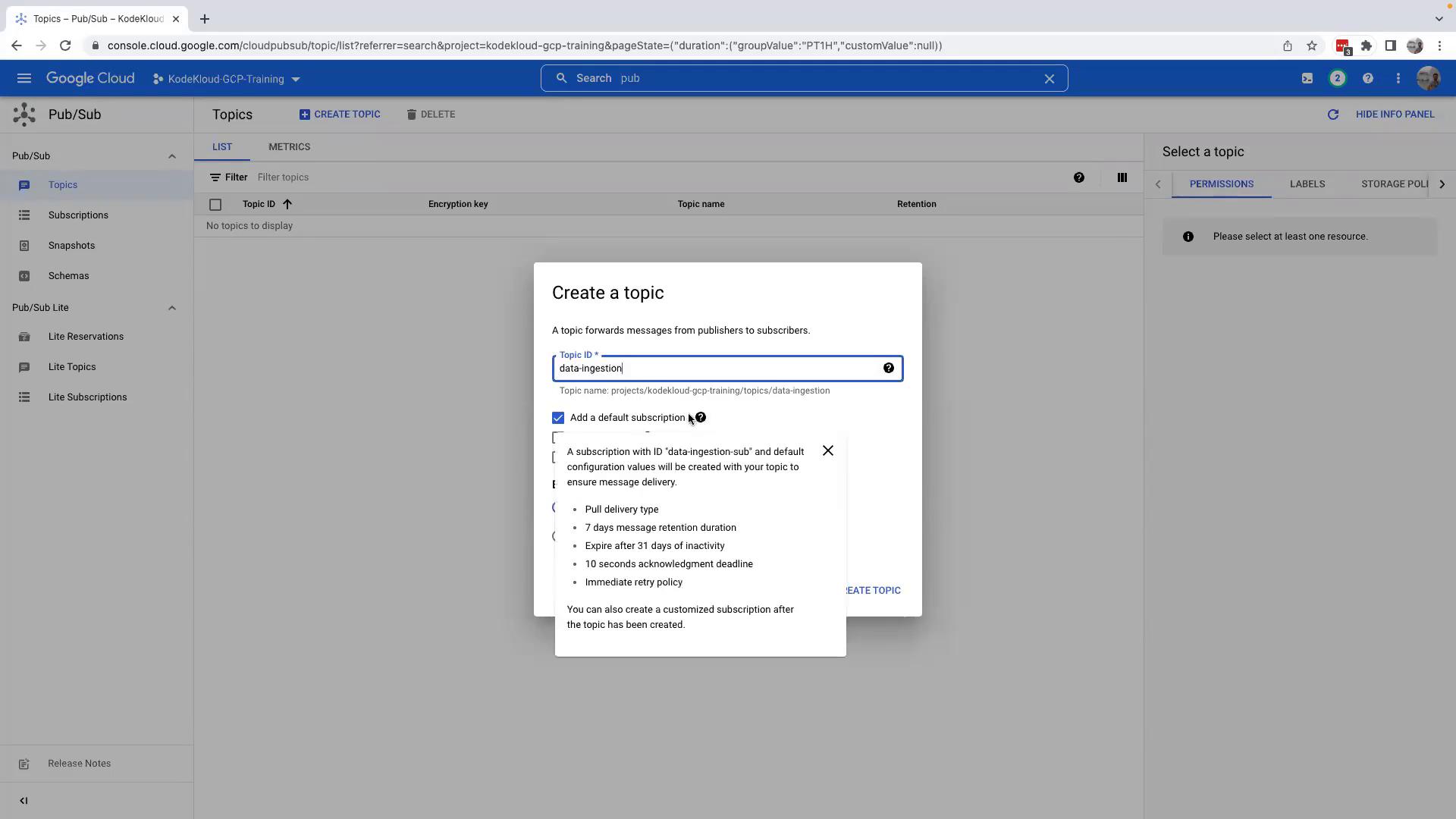

Creating a Pub/Sub Topic

Follow these steps to create a Pub/Sub topic that will serve as your data pipeline:

- Click on Create Topic.

- Provide a unique topic name (e.g., "data-ingestion").

- Ensure the option "Add a default subscription" is selected.

Once you click Create Topic, the topic along with its default subscription is established. This unique topic name becomes the ingestion endpoint for your applications that will push data.
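The console steps above can also be sketched in Python. This is a minimal sketch, assuming the `google-cloud-pubsub` client library is installed and credentials are configured. The project ID is a placeholder, and the "<topic>-sub" naming for the default subscription is an assumption about the console's behavior rather than something this lesson confirms.

```python
from typing import Tuple


def resource_names(project_id: str, topic_id: str) -> Tuple[str, str]:
    """Return the full topic path and the path of its default subscription."""
    topic_path = f"projects/{project_id}/topics/{topic_id}"
    # Assumption: the default subscription is named "<topic>-sub".
    subscription_path = f"projects/{project_id}/subscriptions/{topic_id}-sub"
    return topic_path, subscription_path


def create_topic_with_subscription(project_id: str, topic_id: str) -> None:
    """Create a topic and a pull subscription attached to it."""
    # Imported lazily so resource_names() works without the client library.
    from google.cloud import pubsub_v1

    topic_path, subscription_path = resource_names(project_id, topic_id)
    publisher = pubsub_v1.PublisherClient()
    subscriber = pubsub_v1.SubscriberClient()

    publisher.create_topic(request={"name": topic_path})
    # A subscription created without a push endpoint uses pull delivery.
    subscriber.create_subscription(
        request={"name": subscription_path, "topic": topic_path}
    )


# Hypothetical usage; "my-project" is a placeholder project ID:
# create_topic_with_subscription("my-project", "data-ingestion")
```

The full topic path (`projects/<project>/topics/<topic>`) is what publishing applications use as the ingestion endpoint.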

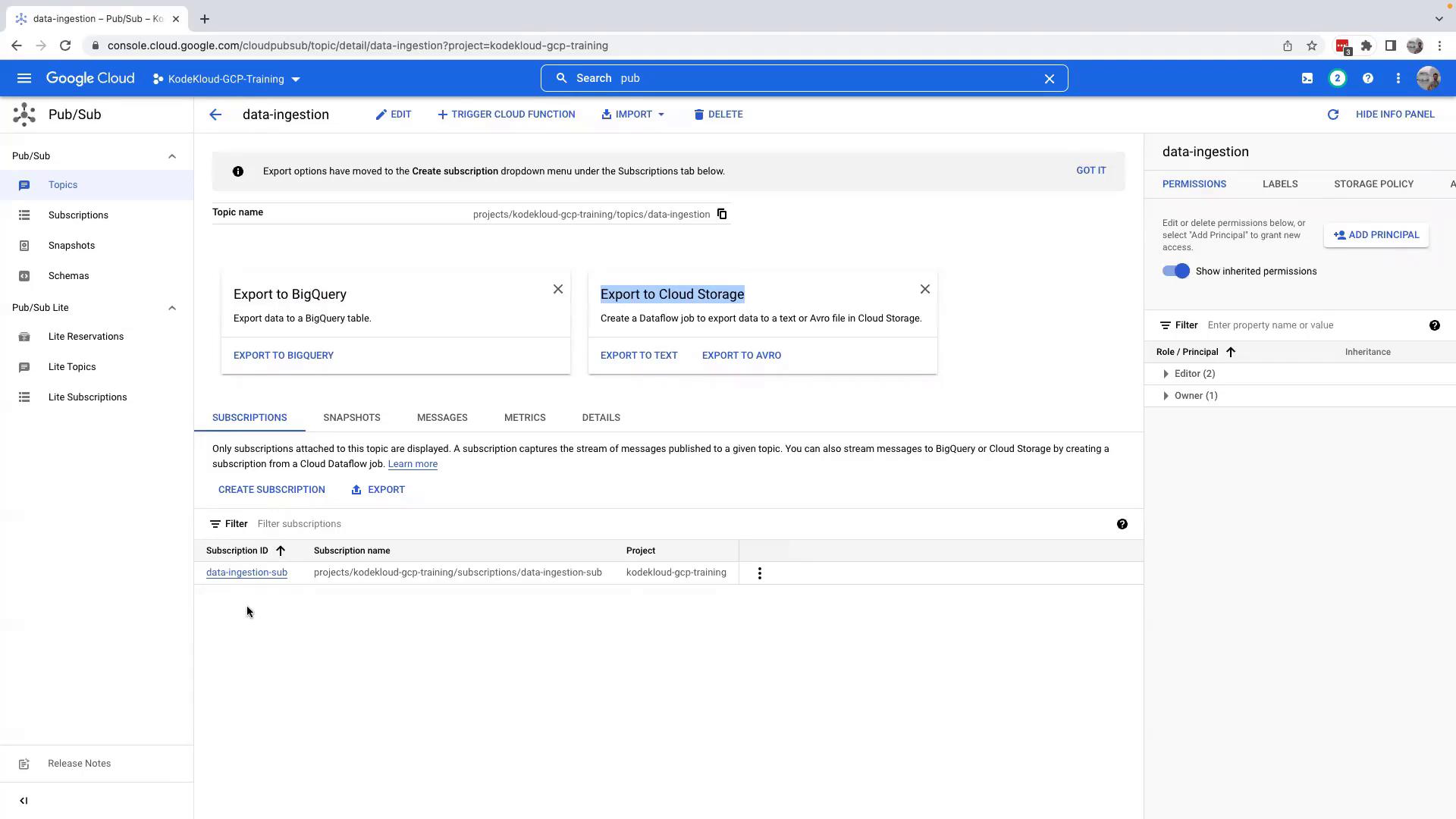

Exploring Topic Details and Subscriptions

After creating the topic, click on it to view detailed settings and options:

- The topic details page shows options for exporting messages to BigQuery or Cloud Storage.

- The default subscription is clearly visible and is configured to pull data from the topic.

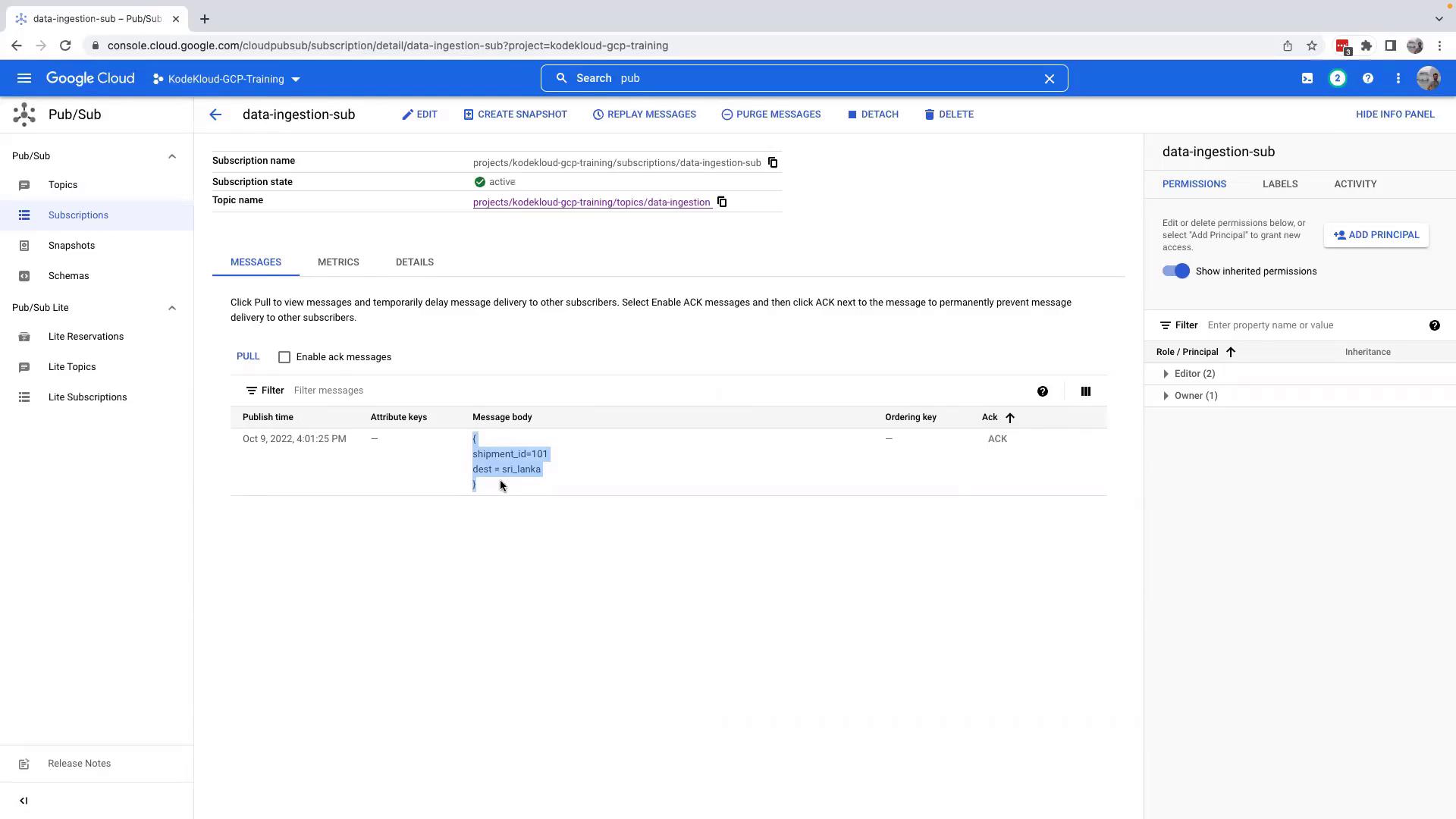

Next, switch to the Subscriptions section. Here, you can find options like "Messages" accompanied by a "pull" button to retrieve the messages from your topic.

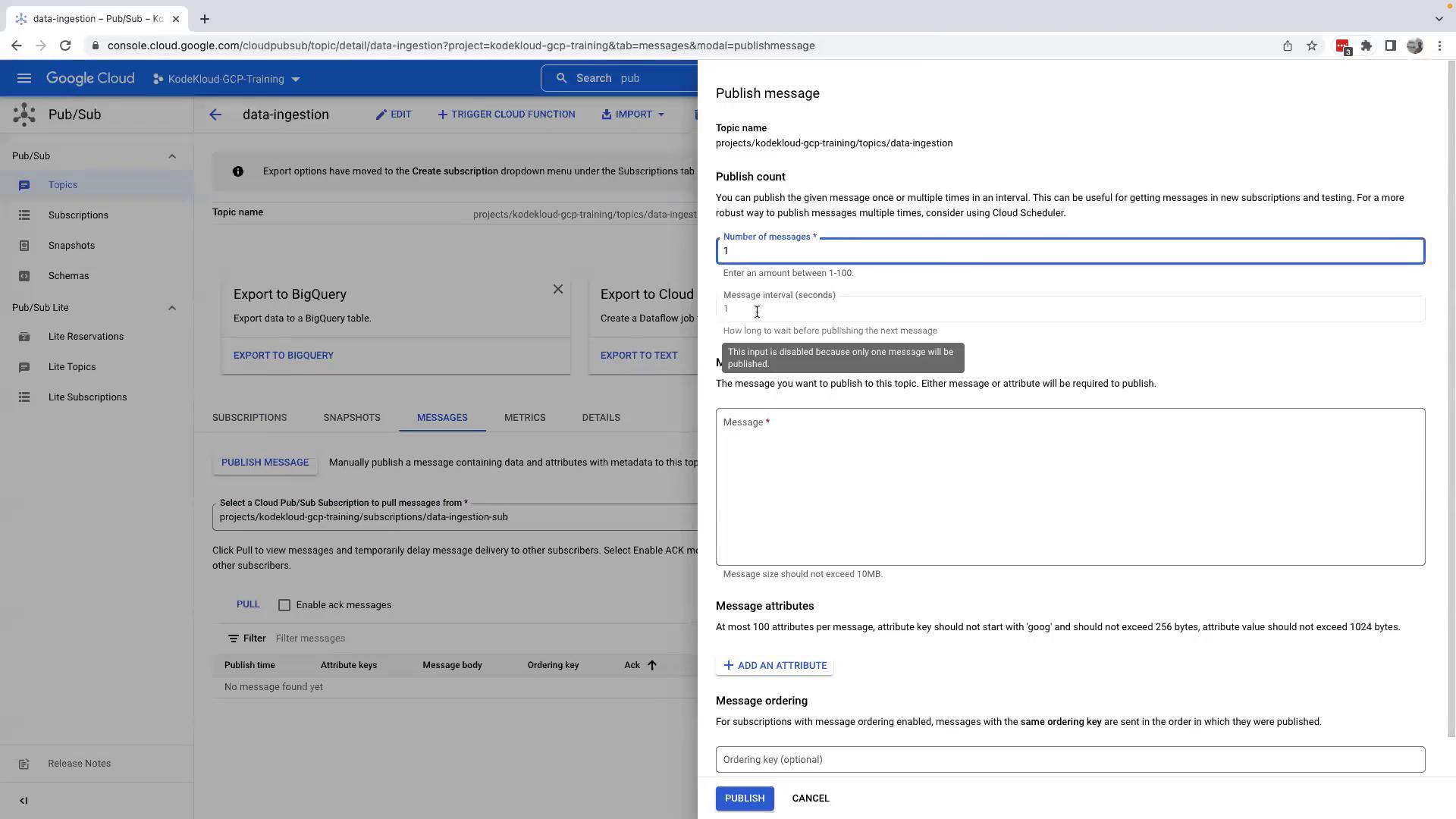

Publishing Messages to Your Topic

To demonstrate publishing, follow these steps to push messages and validate the subscription retrieval:

- Return to your topic and click on Messages.

- Click Publish Message to start publishing.

- Enter your message using JSON format.

For example, your initial message can be:

    {
      "id": 101
    }

Enhance the message with additional details:

    {
      "shipment_id": 101,
      "dest": "sri_lanka"
    }

After entering the desired message, click Publish to push it into the topic.
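The same publish step can be sketched in Python. This is an illustrative sketch, assuming the `google-cloud-pubsub` client library and a topic that already exists. The project ID in the usage comment is a placeholder, and the helper names are hypothetical.

```python
import json


def encode_message(payload: dict) -> bytes:
    """Serialize a dict to UTF-8 JSON; Pub/Sub message data must be bytes."""
    return json.dumps(payload).encode("utf-8")


def publish_json(project_id: str, topic_id: str, payload: dict) -> str:
    """Publish one JSON message and return the server-assigned message ID."""
    # Imported lazily so encode_message() works without the client library.
    from google.cloud import pubsub_v1

    publisher = pubsub_v1.PublisherClient()
    topic_path = publisher.topic_path(project_id, topic_id)
    future = publisher.publish(topic_path, encode_message(payload))
    # result() blocks until Pub/Sub confirms the publish.
    return future.result()


# Hypothetical usage; "my-project" is a placeholder project ID:
# publish_json("my-project", "data-ingestion",
#              {"shipment_id": 101, "dest": "sri_lanka"})
```

Pub/Sub treats the message body as opaque bytes, so the JSON structure only matters to whatever consumes the message.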

Then, navigate back to the Subscriptions section, select your subscription, open Messages, and click Pull to retrieve the message from the topic. The message details are displayed, confirming that the subscription received the published content.
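Pulling from the subscription can be sketched the same way. This is a minimal sketch, assuming the `google-cloud-pubsub` client library and an existing pull subscription. The subscription name "data-ingestion-sub" and the project ID in the usage comment are assumptions. Unlike a quick look in the console, this sketch acknowledges the messages so they are not redelivered.

```python
import json
from typing import List


def decode_message(data: bytes) -> dict:
    """Turn the raw bytes of a Pub/Sub message back into a dict."""
    return json.loads(data.decode("utf-8"))


def pull_and_ack(project_id: str, subscription_id: str,
                 max_messages: int = 10) -> List[dict]:
    """Pull up to max_messages, acknowledge them, and return the payloads."""
    # Imported lazily so decode_message() works without the client library.
    from google.cloud import pubsub_v1

    subscriber = pubsub_v1.SubscriberClient()
    subscription_path = subscriber.subscription_path(project_id, subscription_id)
    response = subscriber.pull(
        request={"subscription": subscription_path, "max_messages": max_messages}
    )
    payloads = [decode_message(r.message.data) for r in response.received_messages]
    ack_ids = [r.ack_id for r in response.received_messages]
    if ack_ids:
        # Acknowledged messages are removed from the subscription's backlog.
        subscriber.acknowledge(
            request={"subscription": subscription_path, "ack_ids": ack_ids}
        )
    return payloads


# Hypothetical usage; both names are placeholders:
# print(pull_and_ack("my-project", "data-ingestion-sub"))
```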

Understanding Data Ingestion and Retention Options

Pub/Sub is designed for real-time data streaming and offers flexible data ingestion capabilities:

- Data can be exported directly to BigQuery for near real-time analytics.

- If the data requires further processing (e.g., cleansing or computation), it can first be transferred to Cloud Storage, then loaded into BigQuery.

By default, a subscription retains unacknowledged messages for seven days. Message retention can also be configured on the topic itself, up to a maximum of 31 days, by adjusting the topic settings.

Important

Remember: A Pub/Sub topic is optimized for streaming real-time data rather than serving as a permanent data repository. Ensure that data is either consumed by a subscription or exported to long-term storage.
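As a small worked example of the retention figures quoted above, the sketch below converts a retention period to seconds and rejects values above the 31-day maximum. The constants come from this lesson and the function name is hypothetical. Treat it as an illustration of the limits, not as part of any Pub/Sub API.

```python
from datetime import timedelta

# Figures quoted in this lesson; check the current Pub/Sub documentation
# before relying on them.
DEFAULT_RETENTION = timedelta(days=7)
MAX_RETENTION = timedelta(days=31)


def retention_seconds(requested: timedelta) -> int:
    """Return the retention period in whole seconds, or raise if out of range."""
    if requested <= timedelta(0):
        raise ValueError("retention must be positive")
    if requested > MAX_RETENTION:
        raise ValueError("retention cannot exceed 31 days")
    return int(requested.total_seconds())


print(retention_seconds(DEFAULT_RETENTION))  # 604800
print(retention_seconds(MAX_RETENTION))      # 2678400
```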

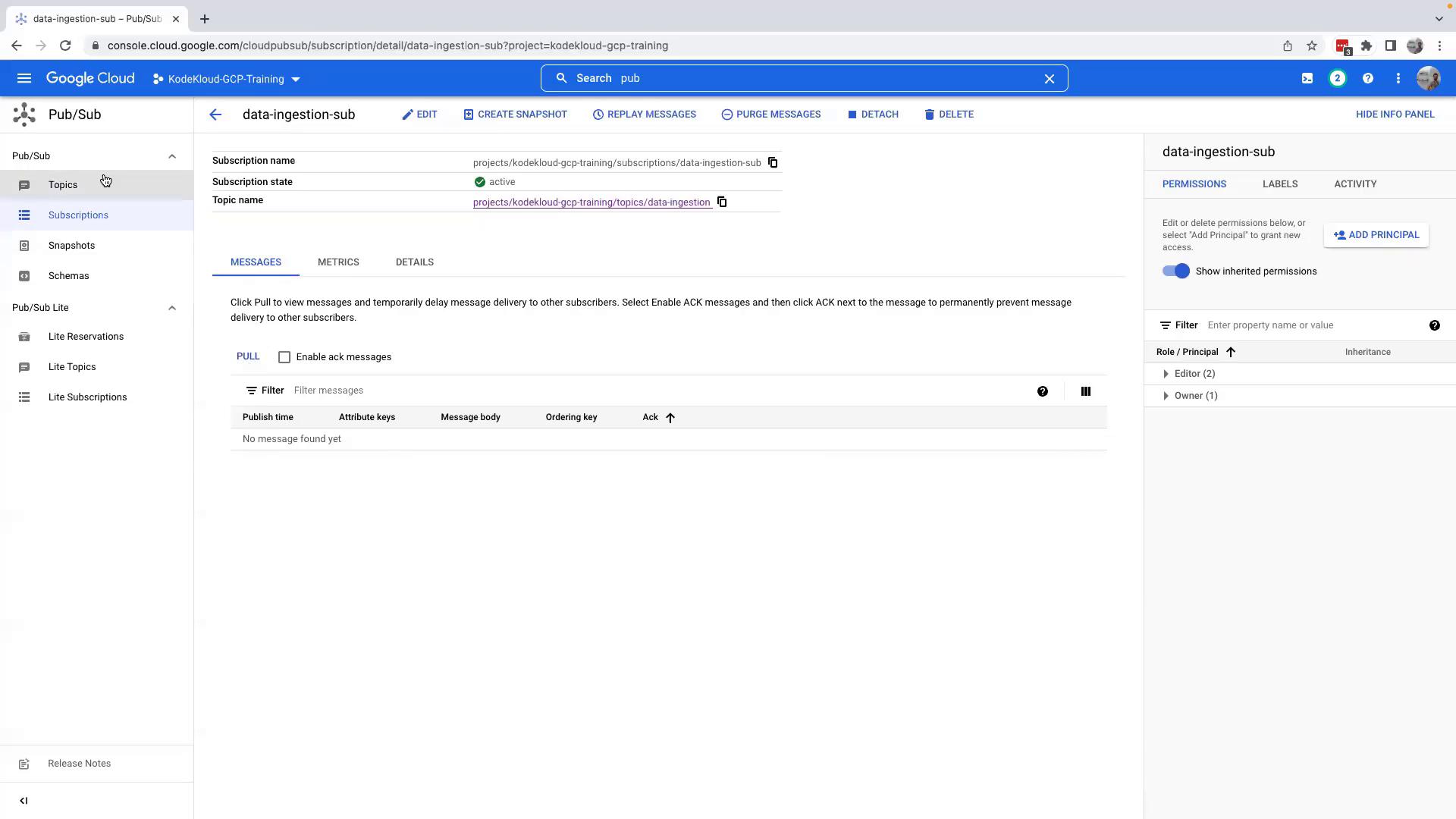

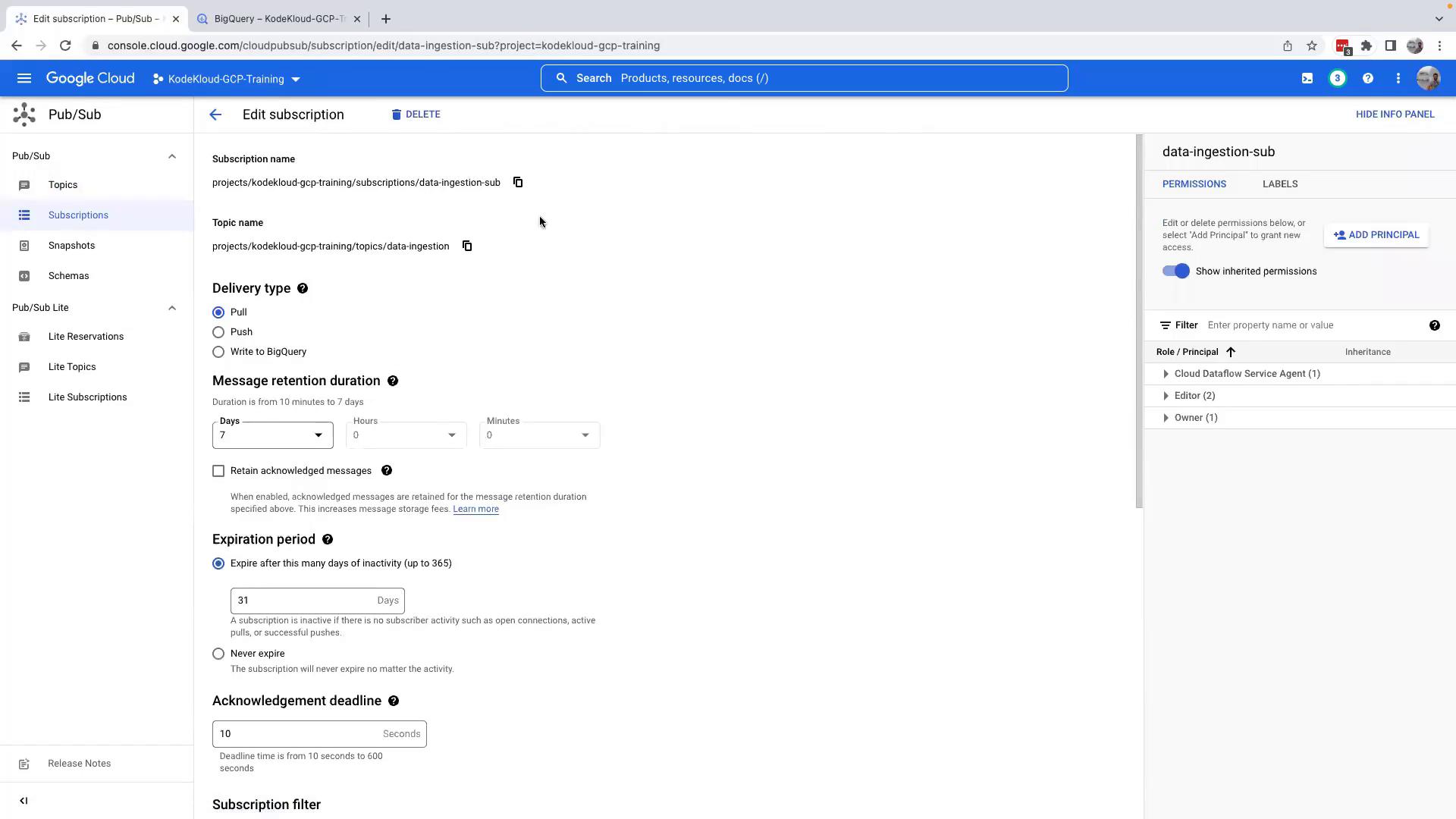

Configuring and Editing Subscriptions

To optimize how your data is processed, you can modify the configuration settings for your subscriptions. To do this:

- Navigate to the Subscriptions section.

- Click on Edit for the subscription you want to modify.

Within the edit view, you can configure various options such as:

- Exporting messages directly to BigQuery (ensure that your BigQuery datasets and tables are set up in advance).

- Adjusting message retention settings.

- Changing the delivery type (for example, pull, push, or export to BigQuery) to suit your analytics pipeline.

These configurations facilitate near real-time data analytics by enabling a seamless data flow into BigQuery or further processing via Cloud Storage.
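A BigQuery export subscription can also be created in code. This is a hedged sketch, assuming the `google-cloud-pubsub` client library, an existing topic, and a BigQuery table that already exists with a schema compatible with the messages. All project, dataset, table, and subscription names in the usage comment are placeholders.

```python
def bigquery_table_id(project_id: str, dataset_id: str, table_id: str) -> str:
    """Build the "project.dataset.table" identifier for the export target."""
    return f"{project_id}.{dataset_id}.{table_id}"


def create_bigquery_subscription(project_id: str, topic_id: str,
                                 subscription_id: str, table: str) -> None:
    """Create a subscription that writes messages to a BigQuery table."""
    # Imported lazily so bigquery_table_id() works without the client library.
    from google.cloud import pubsub_v1

    publisher = pubsub_v1.PublisherClient()
    subscriber = pubsub_v1.SubscriberClient()
    topic_path = publisher.topic_path(project_id, topic_id)
    subscription_path = subscriber.subscription_path(project_id, subscription_id)

    # Assumption: the table exists and can accept the message data.
    bigquery_config = pubsub_v1.types.BigQueryConfig(table=table)
    subscriber.create_subscription(
        request={
            "name": subscription_path,
            "topic": topic_path,
            "bigquery_config": bigquery_config,
        }
    )


# Hypothetical usage; every name here is a placeholder:
# create_bigquery_subscription(
#     "my-project", "data-ingestion", "data-ingestion-bq",
#     bigquery_table_id("my-project", "shipments", "events"),
# )
```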

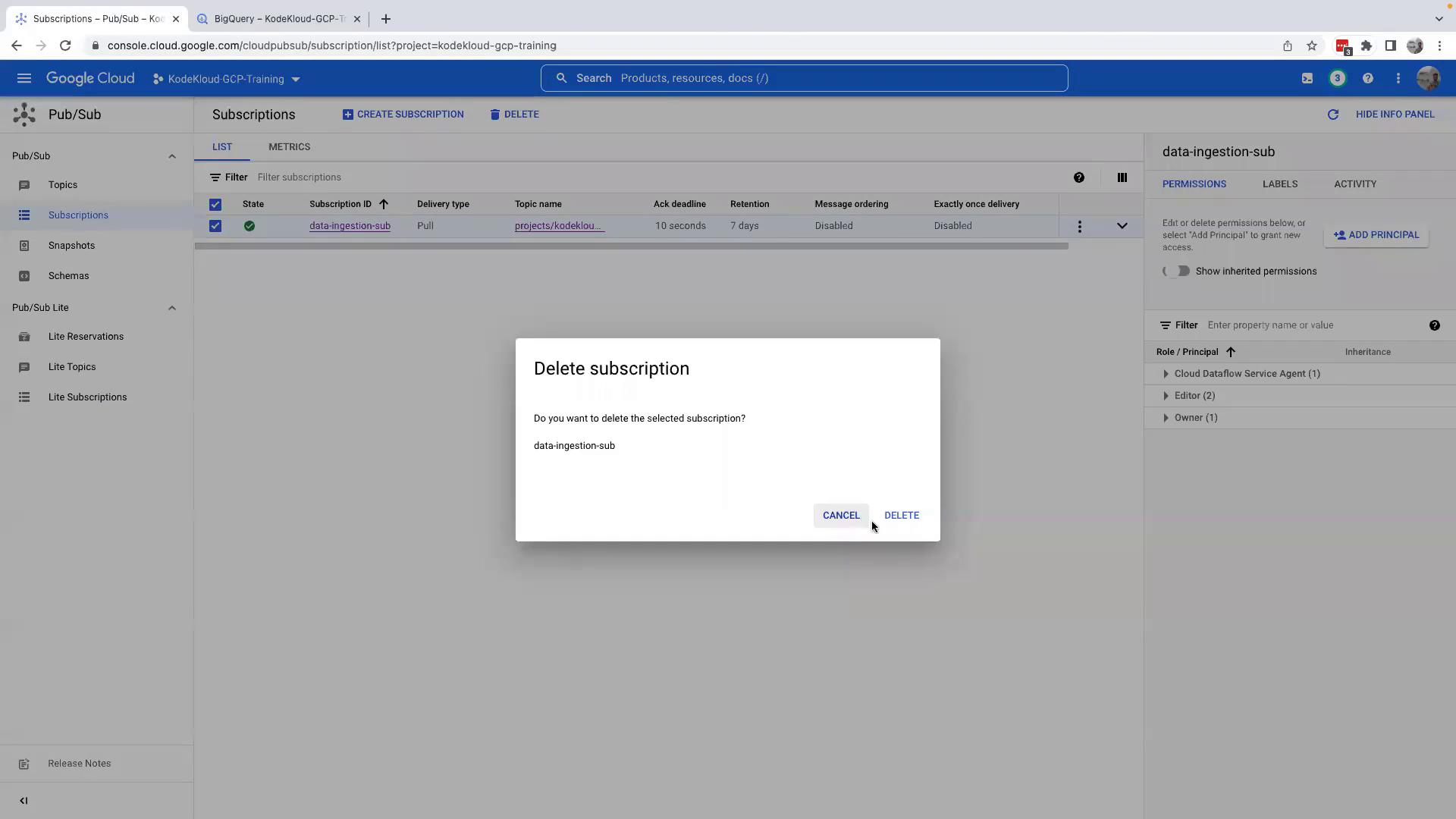

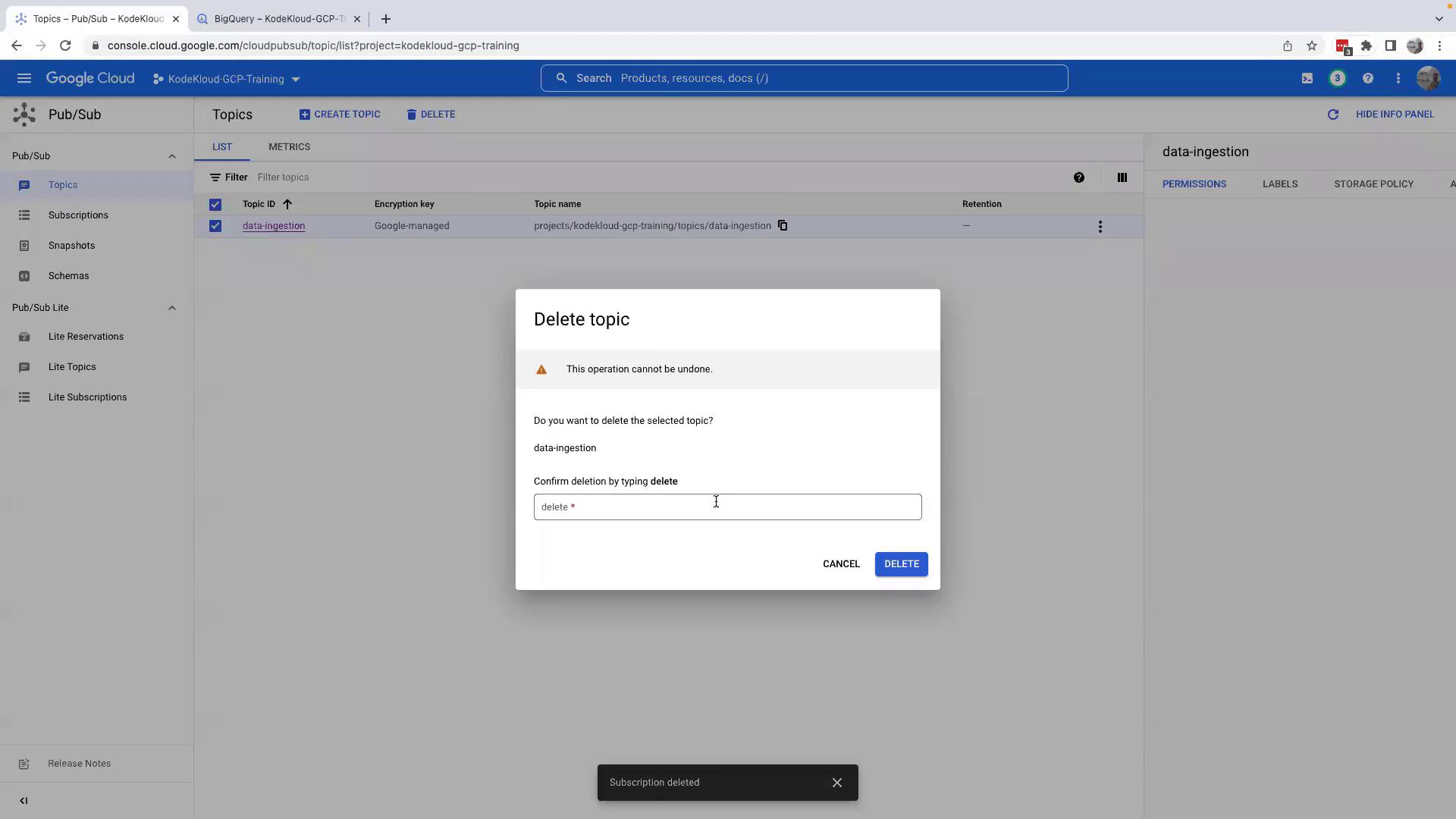

Cleaning Up Resources

Before proceeding to later lessons, which explore integration with Cloud Storage and BigQuery, clean up the resources created during this demo. To do so:

- Navigate back to the topic.

- Delete the subscription by clicking on the Delete button.

- Finally, delete the topic.

Note that once a topic is deleted, its data is irretrievable. If the data is critical, export it to long-term storage such as BigQuery or Cloud Storage before deletion.

Warning

Deleting topics and subscriptions is irreversible. Make sure to export or back up necessary data before performing deletion.
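The cleanup order described above (subscription first, then topic) can be sketched as a small helper. This is a minimal sketch, assuming the `google-cloud-pubsub` client library. The clients are passed in as parameters, and the resource paths in the usage comment are placeholders.

```python
def delete_resources(publisher, subscriber,
                     topic_path: str, subscription_path: str) -> None:
    """Delete the subscription, then the topic. Both deletions are permanent."""
    subscriber.delete_subscription(request={"subscription": subscription_path})
    publisher.delete_topic(request={"topic": topic_path})


# Hypothetical usage with real clients; the paths are placeholders:
# from google.cloud import pubsub_v1
# delete_resources(
#     pubsub_v1.PublisherClient(),
#     pubsub_v1.SubscriberClient(),
#     "projects/my-project/topics/data-ingestion",
#     "projects/my-project/subscriptions/data-ingestion-sub",
# )
```

Passing the clients in keeps the helper easy to exercise with stand-in objects before pointing it at a real project.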

Conclusion

In this lesson, you have learned how to effectively create and manage Pub/Sub topics and subscriptions in GCP, publish JSON-based messages, and configure data export options to BigQuery and Cloud Storage. As you continue your journey, the next lesson will delve deeper into leveraging BigQuery for advanced data analysis.

Thank you for following along and happy streaming!
