- A cluster is the house itself.

- A node pool is a group of rooms within that house.

- Control plane (master) upgrade

- Node pool (worker) upgrade

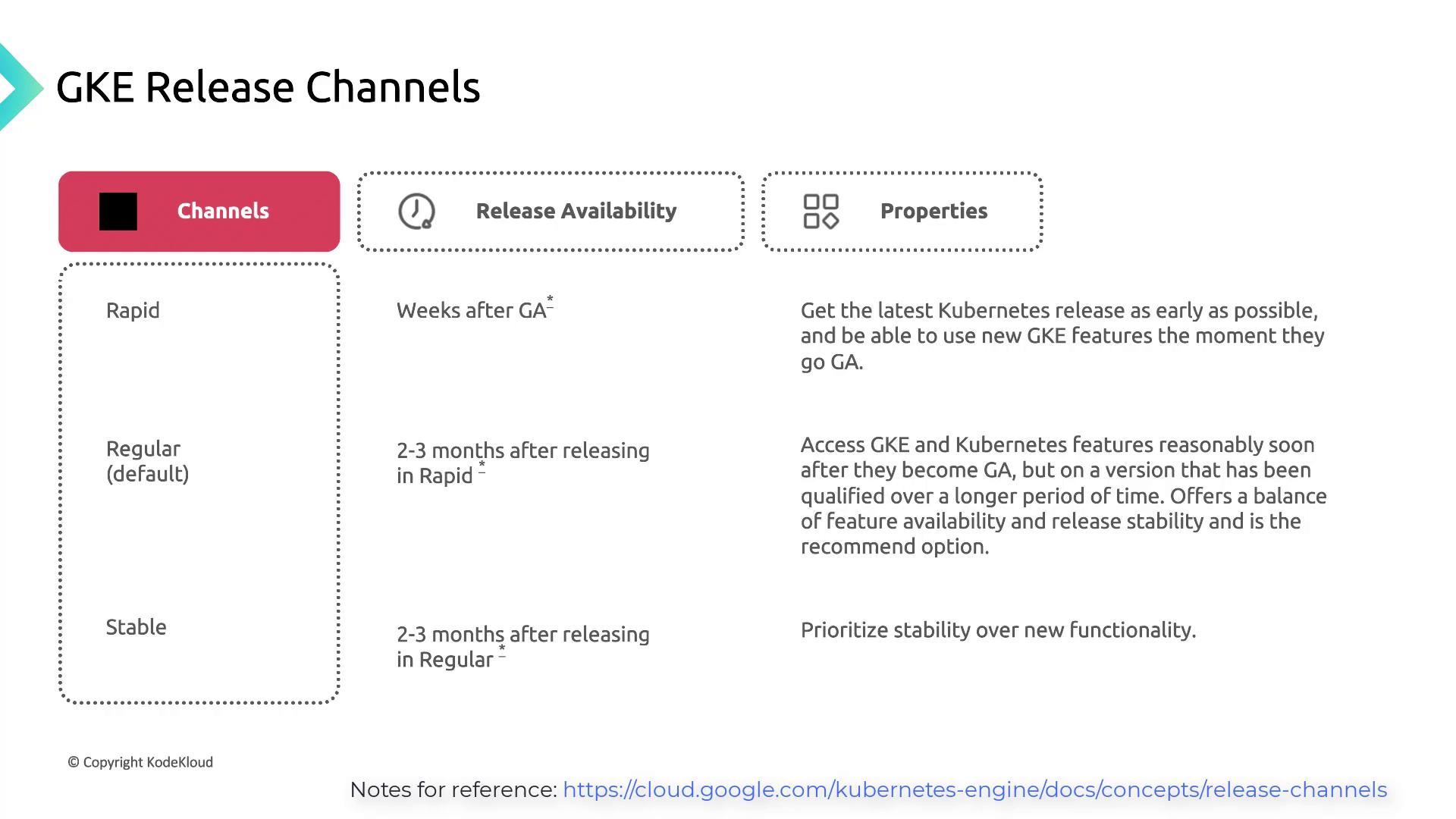

Understanding GKE Release Channels

Release channels let you balance stability and feature velocity by grouping GKE versions:

| Channel | Availability | Best For |
|---|---|---|
| Rapid | Days after Kubernetes GA | Early testing of new Kubernetes APIs |
| Regular | 2–3 months after Rapid | Balanced stability with new features |
| Stable | 2–3 months after Regular | Maximum stability, minimal changes |
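As a minimal sketch of how a channel choice turns into an action, the helper below builds the `gcloud container clusters update` command that enrolls a cluster in a channel. The function name `enroll_command` and the cluster name `demo-cluster` are placeholders for illustration. Location flags are left out on the assumption that a default region or zone is already configured.

```python
import shlex

# The three channels from the table above, in the lowercase form gcloud expects.
CHANNELS = ("rapid", "regular", "stable")


def enroll_command(cluster: str, channel: str) -> list[str]:
    """Build the gcloud argv that enrolls a cluster in a release channel."""
    channel = channel.lower()
    if channel not in CHANNELS:
        raise ValueError(f"unknown release channel: {channel!r}")
    return [
        "gcloud", "container", "clusters", "update", cluster,
        f"--release-channel={channel}",
    ]


if __name__ == "__main__":
    # "demo-cluster" is a placeholder cluster name.
    print(shlex.join(enroll_command("demo-cluster", "Regular")))
```

Returning an argument list rather than a string keeps the command safe to pass to `subprocess.run` without shell quoting issues.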

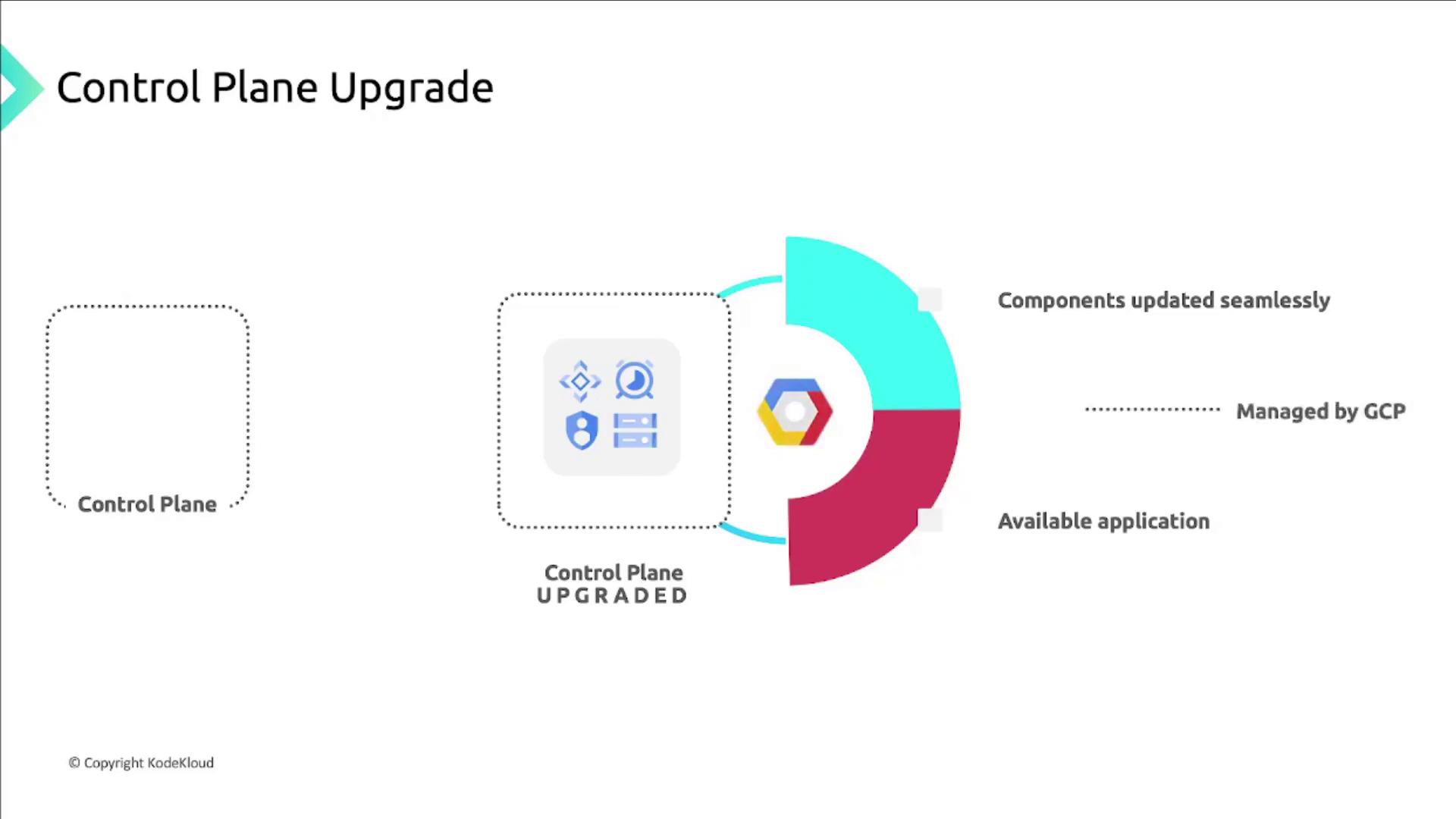

1. Control Plane Upgrade

When GKE releases a new Kubernetes version, it upgrades the control plane first—either automatically (auto-upgrade enabled) or manually (user-initiated). Google Cloud manages this process transparently to avoid workload interruption.

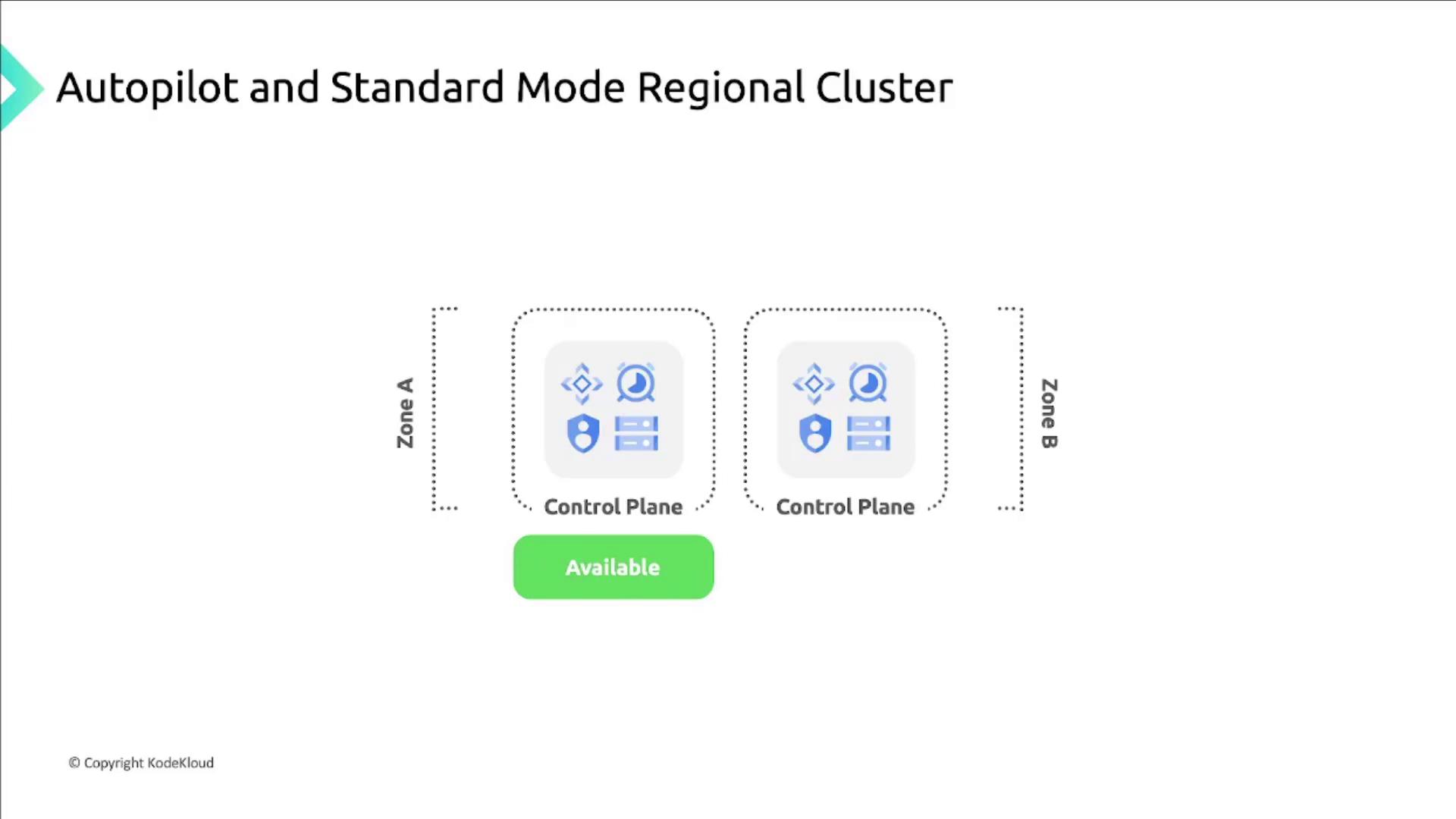

Regional vs. Zonal Clusters

- Regional Clusters (Autopilot & Standard)

Deploy multiple control plane replicas across zones. GKE upgrades one replica at a time in undefined order to maintain high availability.

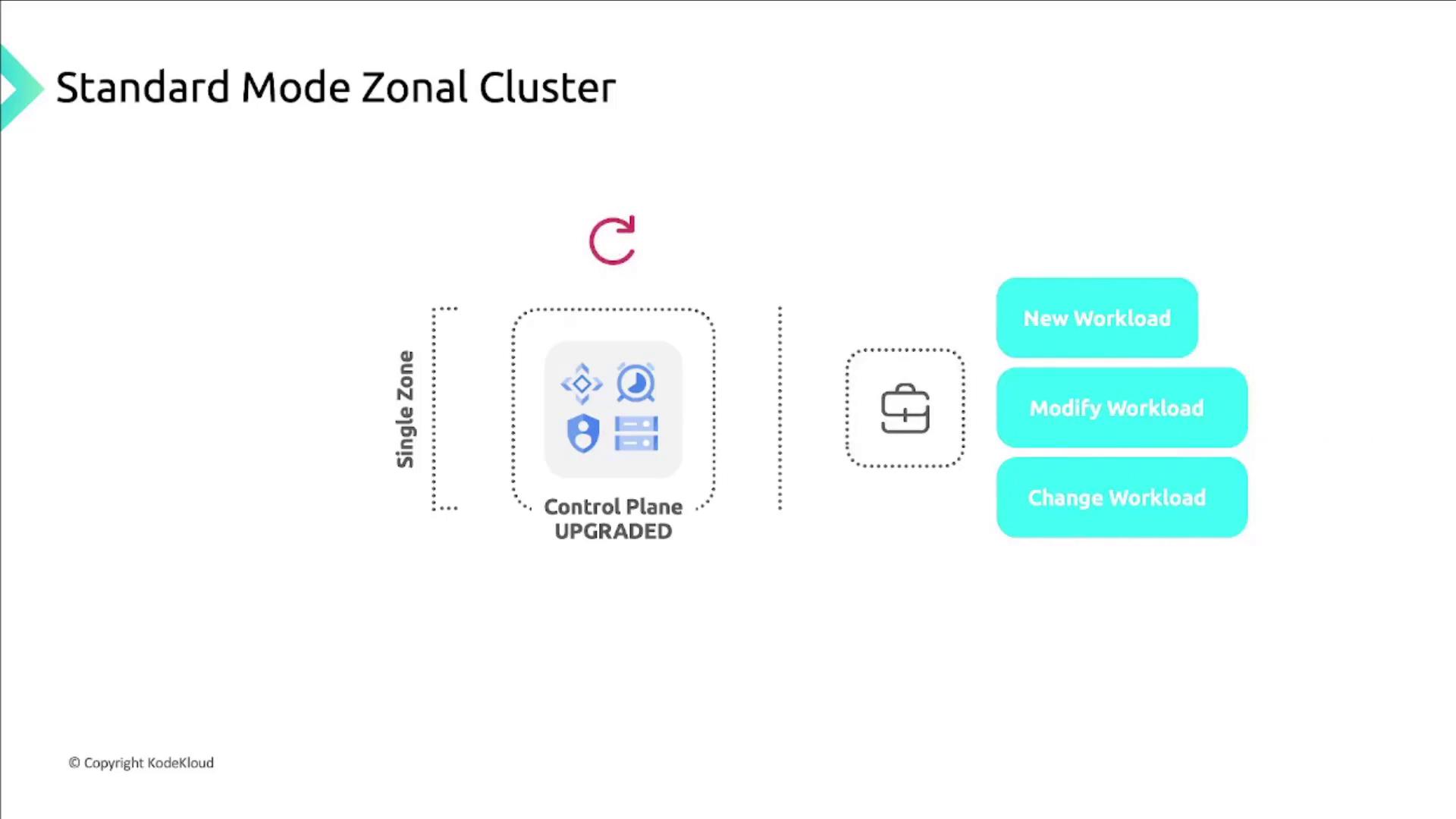

- Zonal Clusters (Standard Only)

A zonal cluster has a single control plane, running in one zone, which is upgraded in place. Your workloads stay online, but the control plane is unavailable during the upgrade, so you cannot deploy new workloads or change configurations until it completes.

Control plane upgrades are managed by GKE and cannot be disabled, but you can schedule maintenance windows or exclude specific dates.

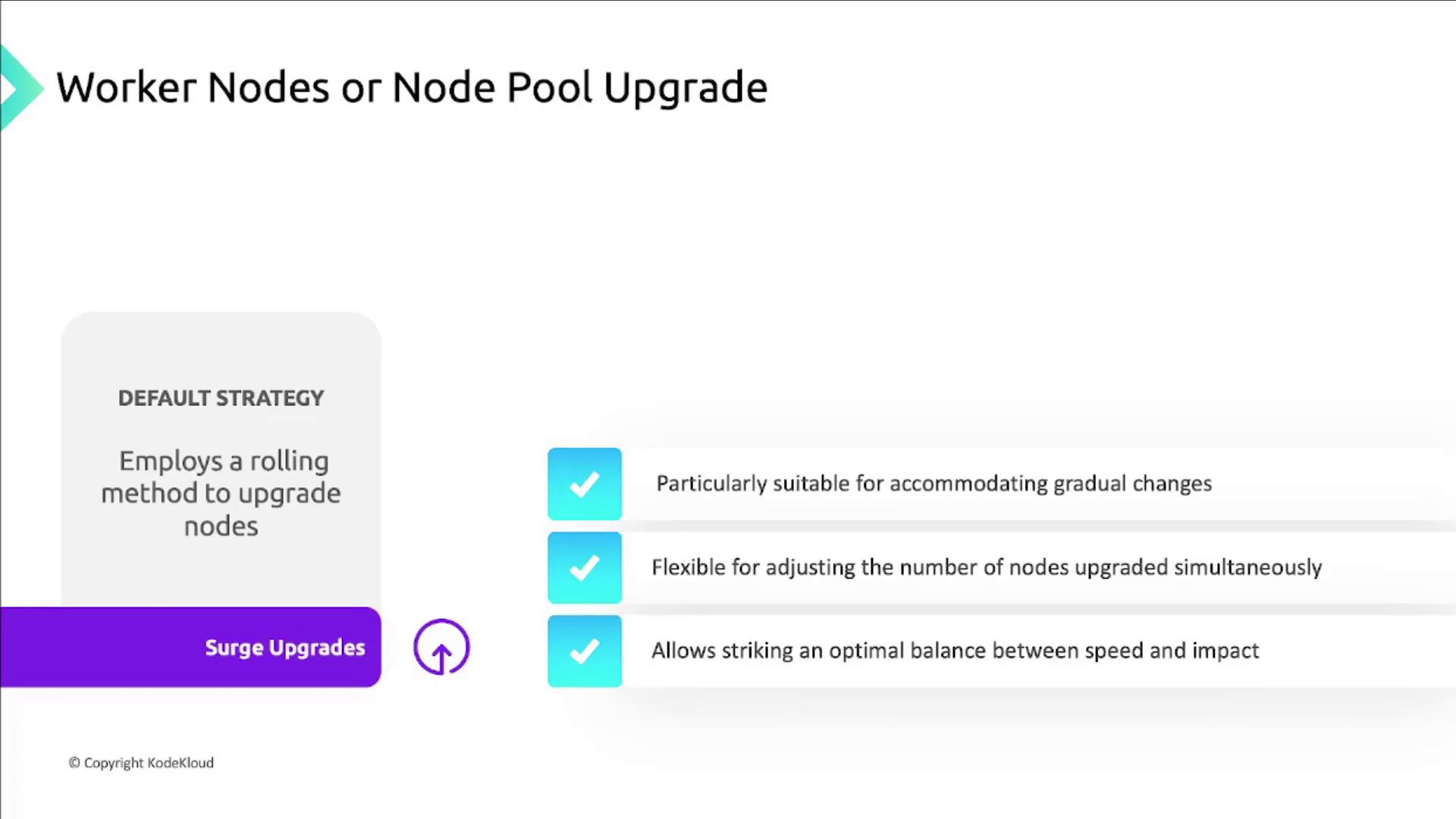

2. Node Pool Upgrade Strategies

GKE offers flexible upgrade strategies for node pools to ensure cluster availability:

Surge (Rolling) Upgrades

By default, GKE performs a surge upgrade, replacing nodes one by one while keeping your cluster operational.

You can adjust maxSurge and maxUnavailable settings in your upgrade policy to fine-tune parallelism and downtime.

Blue-Green Upgrades

Maintain two parallel environments:

- Blue: Current, stable node pool

- Green: New node pool with updated kubelet
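To make the surge settings mentioned earlier concrete, here is a rough sketch of how maxSurge and maxUnavailable trade off speed against capacity. It assumes the number of nodes upgraded at once is the sum of the two settings, and it ignores any platform-side limits on parallelism. The function name `surge_plan` and its return keys are illustrative, not part of any GKE API.

```python
import math


def surge_plan(node_count: int, max_surge: int = 1, max_unavailable: int = 0) -> dict:
    """Estimate parallelism and capacity for a surge upgrade (simplified model)."""
    if node_count < 0 or max_surge < 0 or max_unavailable < 0:
        raise ValueError("values must be non-negative")
    parallel = max_surge + max_unavailable
    if parallel == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    return {
        # Nodes being replaced at the same time.
        "nodes_upgraded_at_once": parallel,
        # Number of rounds needed to cover the whole pool.
        "batches": math.ceil(node_count / parallel),
        # Extra surge nodes temporarily raise the pool size (and cost).
        "peak_node_count": node_count + max_surge,
        # Nodes taken offline without a replacement lower capacity.
        "min_available_nodes": max(node_count - max_unavailable, 0),
    }


if __name__ == "__main__":
    print(surge_plan(10))                                   # default: one node at a time
    print(surge_plan(10, max_surge=2, max_unavailable=1))   # faster, less capacity
```

With the defaults, a 10-node pool never drops below 10 available nodes but needs 10 rounds. Raising both settings shortens the rollout at the cost of temporary extra nodes and reduced capacity.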

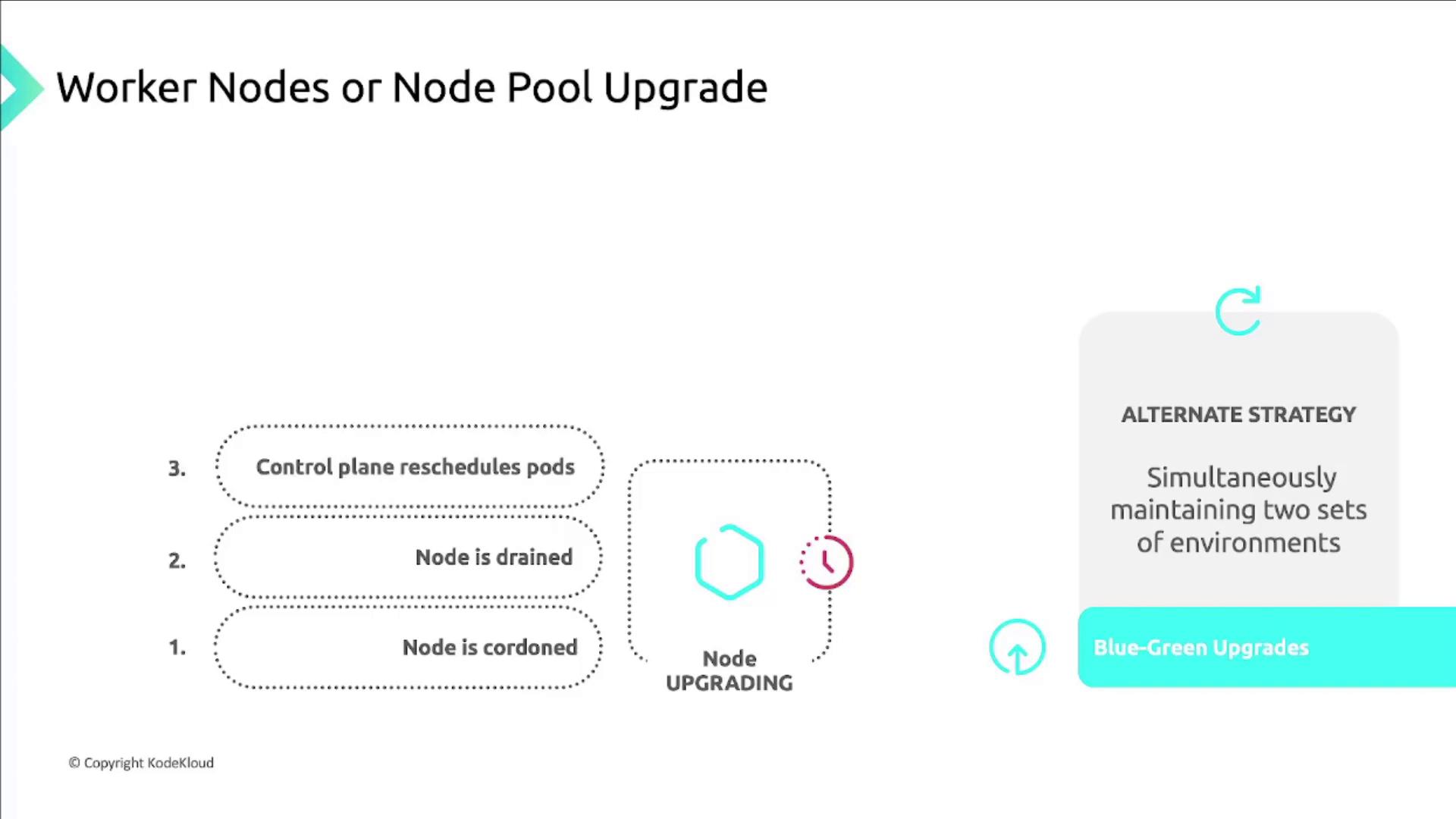

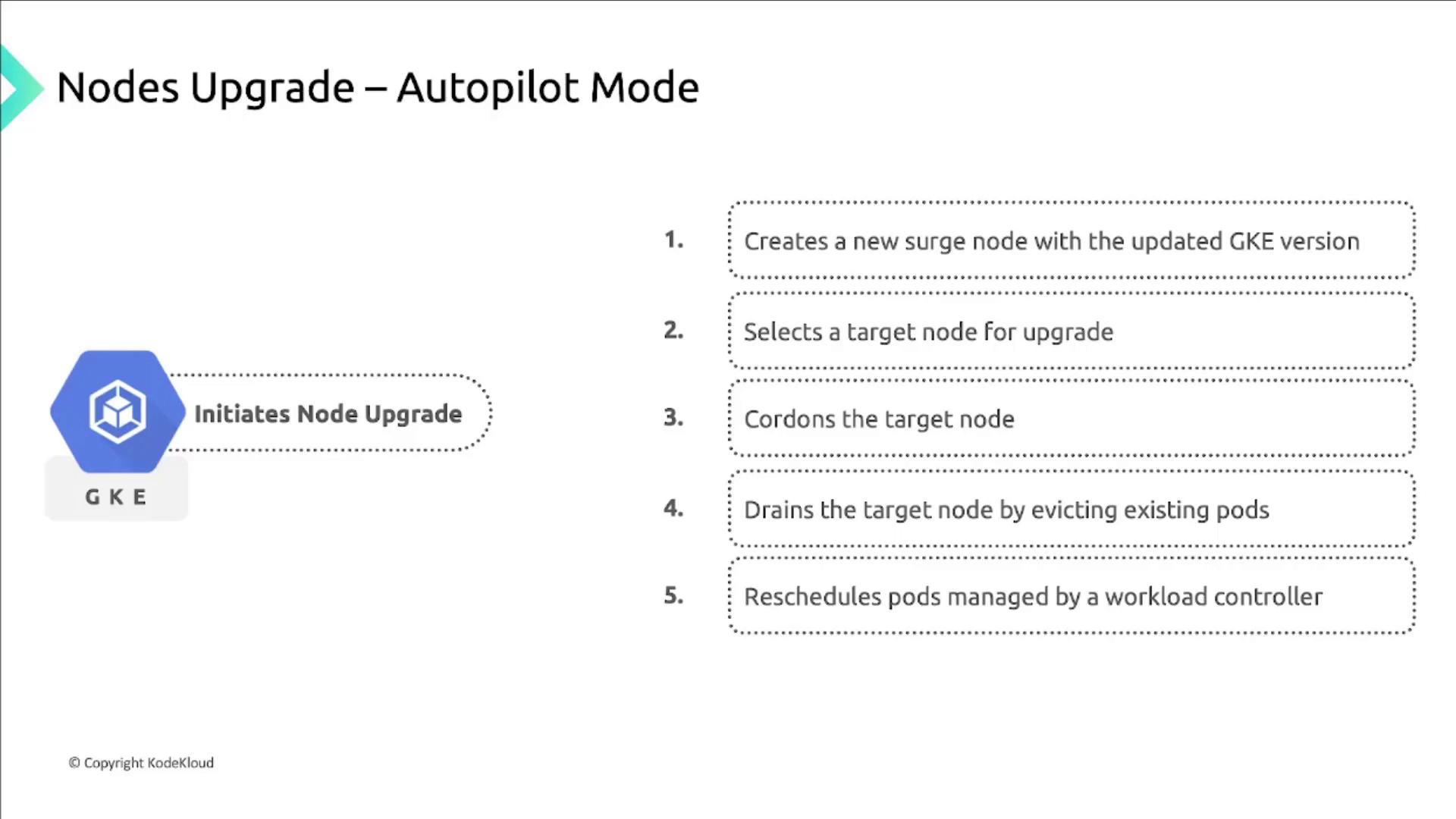

Node Upgrade Workflow

- Cordoning: Marks the node unschedulable.

- Draining: Evicts running pods.

- Rescheduling: Control plane reschedules controller-managed pods; unschedulable pods enter pending state.
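The three steps above can be sketched as a toy in-memory model. It does not call the Kubernetes API. The `Pod` and `Node` classes, the slot-based `capacity` field, and the rule that pods without a controller are simply lost are simplifying assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    managed: bool  # True if a controller (e.g. a Deployment) owns the pod


@dataclass
class Node:
    name: str
    capacity: int  # simplified: number of pod slots
    schedulable: bool = True
    pods: list = field(default_factory=list)


def upgrade_node(target: Node, others: list) -> dict:
    """Cordon, drain, and reschedule pods from `target` (toy model)."""
    target.schedulable = False              # 1. cordon: no new pods land here
    evicted, target.pods = target.pods, []  # 2. drain: evict running pods
    result = {"rescheduled": [], "pending": [], "lost": []}
    for pod in evicted:                     # 3. reschedule
        if not pod.managed:
            # Assumption: pods with no controller are not recreated.
            result["lost"].append(pod.name)
            continue
        home = next((n for n in others
                     if n.schedulable and len(n.pods) < n.capacity), None)
        if home is None:
            result["pending"].append(pod.name)  # no room anywhere
        else:
            home.pods.append(pod)
            result["rescheduled"].append(pod.name)
    return result


if __name__ == "__main__":
    old = Node("node-a", capacity=3,
               pods=[Pod("web-1", True), Pod("web-2", True), Pod("debug", False)])
    spare = Node("node-b", capacity=1)
    print(upgrade_node(old, [spare]))
```

In the usage example the spare node has room for only one pod, so one controller-managed pod is rescheduled and the other stays pending, which is the situation the rescheduling step describes.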

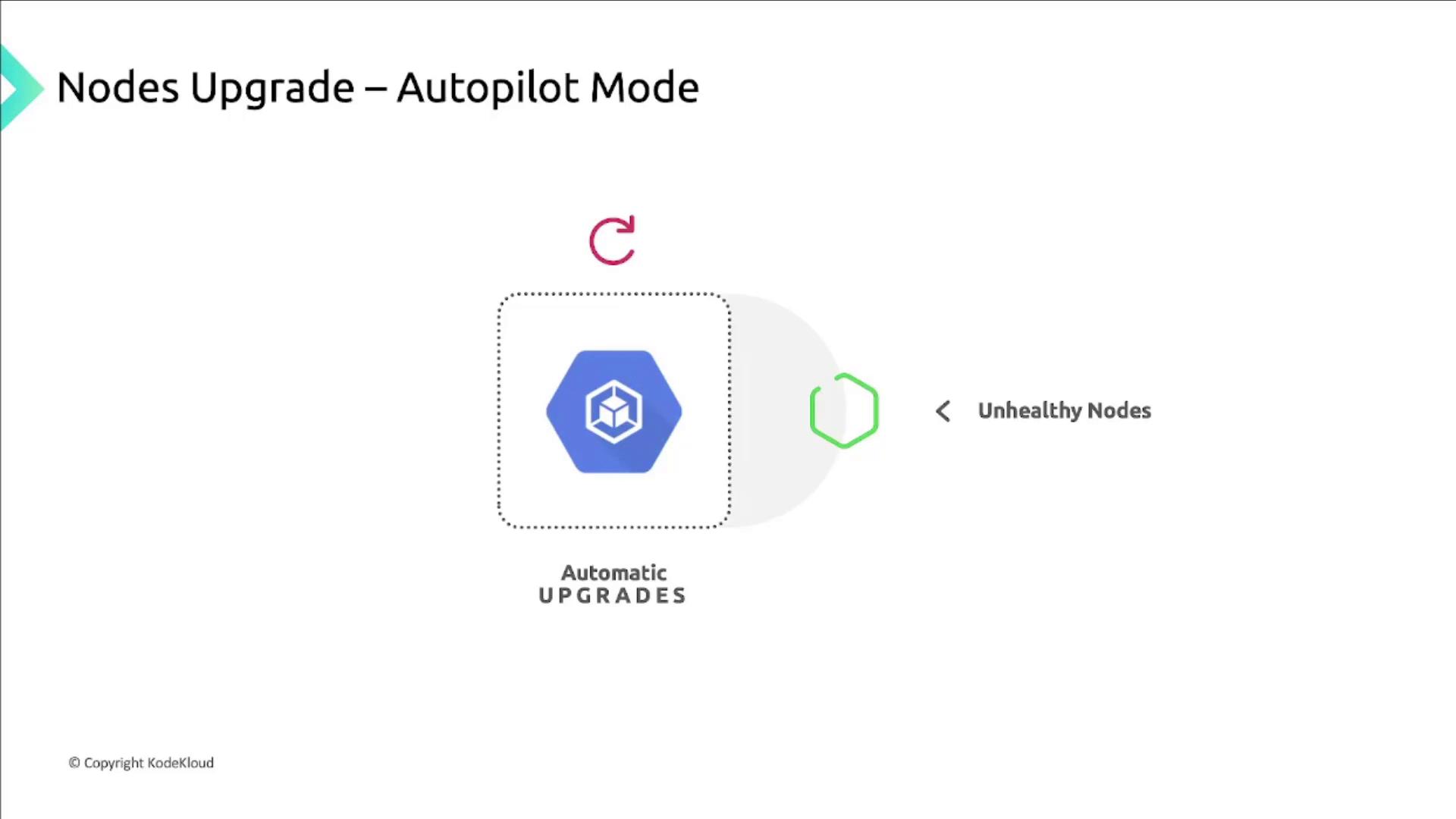

3. Automatic Node Upgrades in Autopilot Mode

Autopilot clusters automatically upgrade both control plane and node pools to the same GKE version. Nodes with similar specs are grouped, and GKE uses surge upgrades to update up to 20 nodes concurrently.

- Static pods on a node are deleted and not rescheduled.

- If a spike in unhealthy nodes occurs, GKE pauses the rollout for diagnostics.

Static pods will not be automatically recreated during Autopilot upgrades. Ensure you back up critical static workloads.

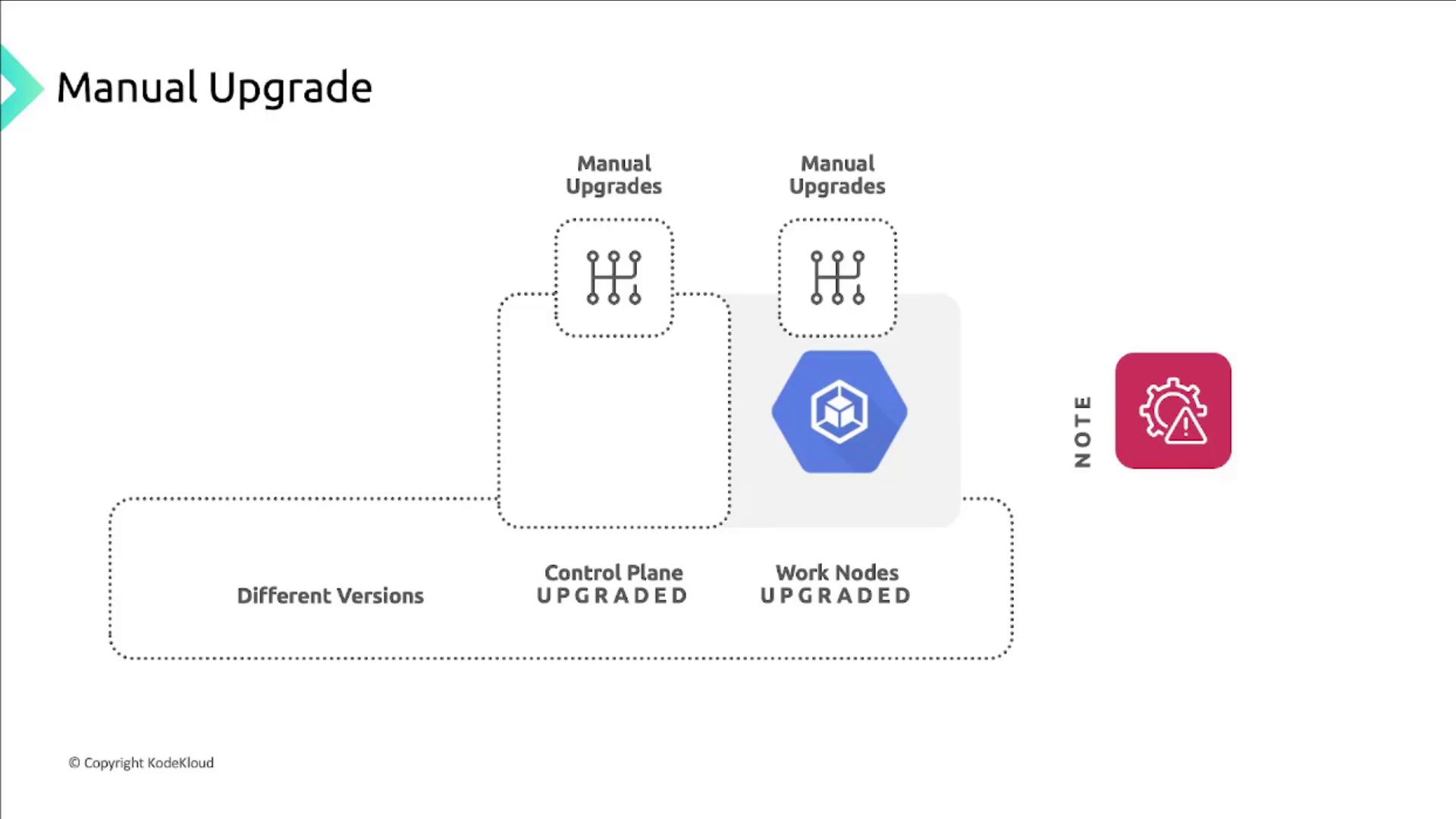

4. Manual Upgrades

You can override automatic upgrades and manually set versions for control plane and node pools:

- Autopilot: Only the control plane version is configurable. Nodes upgrade once your selected version becomes the channel default.

- Standard: Control plane and node pool versions are individually configurable. Node auto-upgrade is on by default but can be disabled (not recommended).
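As a hedged sketch of what a manual upgrade looks like in practice, the helper below builds `gcloud container clusters upgrade` commands for either the control plane or a single node pool. The function name `upgrade_command`, the cluster and pool names, and the `VERSION` string are placeholders. Location flags are omitted on the assumption that a default region or zone is configured.

```python
import shlex
from typing import Optional


def upgrade_command(cluster: str, version: str,
                    node_pool: Optional[str] = None) -> list[str]:
    """Build a `gcloud container clusters upgrade` argv.

    Without node_pool the control plane is targeted (--master);
    with node_pool only that pool is upgraded.
    """
    cmd = ["gcloud", "container", "clusters", "upgrade", cluster,
           f"--cluster-version={version}"]
    cmd.append("--master" if node_pool is None else f"--node-pool={node_pool}")
    return cmd


if __name__ == "__main__":
    # Placeholder names; replace VERSION with a version valid for your cluster.
    print(shlex.join(upgrade_command("demo-cluster", "VERSION")))
    print(shlex.join(upgrade_command("demo-cluster", "VERSION", "default-pool")))
```

Following the text above, only the control plane form applies to Autopilot clusters. On Standard clusters the control plane is upgraded first and node pools afterwards.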