Generative AI in Practice: Advanced Insights and Operations

Evolution of AI Models

Neural Networks

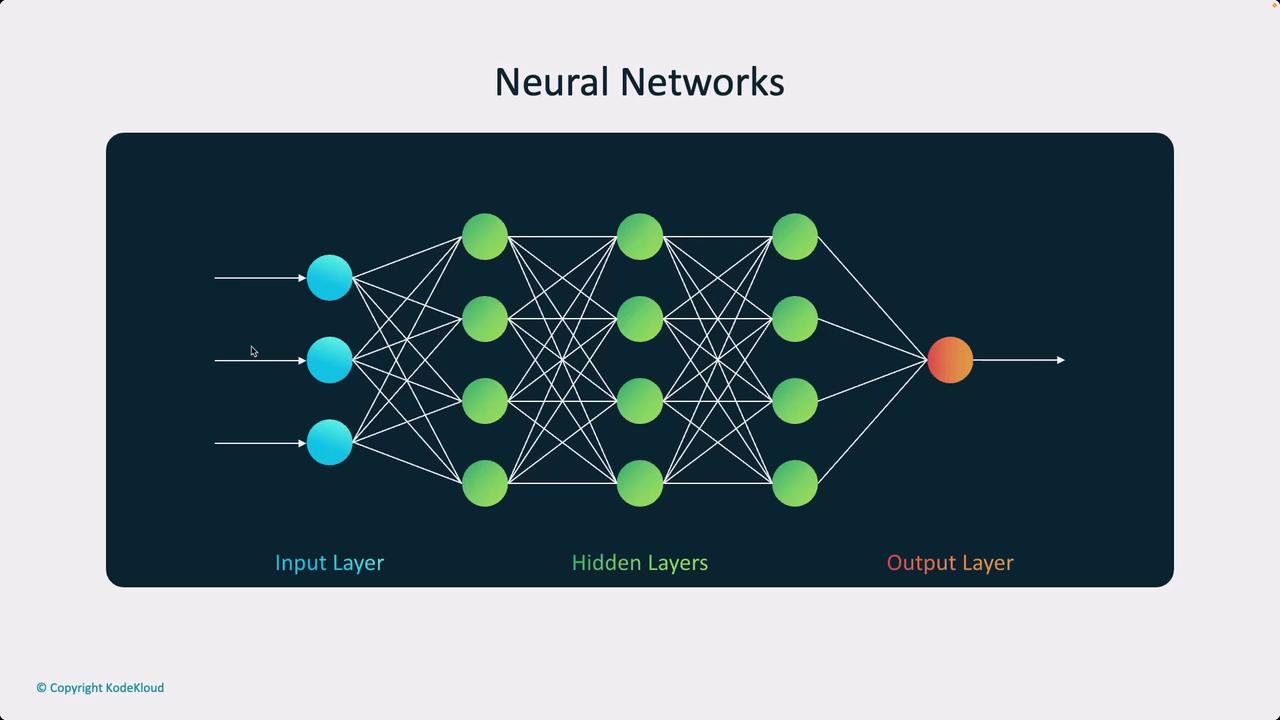

Neural networks are a cornerstone of modern artificial intelligence because they can learn to approximate complex functions from data. At their core, these systems rely on a straightforward mathematical principle: mapping inputs to outputs through layers of interconnected nodes, a concept that underpins much of today's AI innovation.

What Are Neural Networks?

Traditionally, a neural network is defined as an AI method that processes data in a manner inspired by the human brain. Though the inspiration is biological, the actual mechanism is a series of structured calculations. Neural networks rely on the principle of universal approximation: with enough nodes and suitable weights, a network can approximate a very wide class of input-output relationships. In practice, learning those weights also requires sufficient data and a well-chosen configuration.

The common schematic of a neural network features a network of tiny computational units (nodes) that perform simple mathematical calculations. These nodes transform input data through weighted connections into a final output. The network's depth, determined by its hidden layers, adds layers of complexity and abstraction to the representation.

Overview

Each node in a neural network performs basic operations that jointly lead to advanced learning capabilities. Even though small networks allow an easy interpretation of each unit's function, larger architectures require specialized techniques for deciphering the role of individual components.

The Building Blocks of Neural Networks

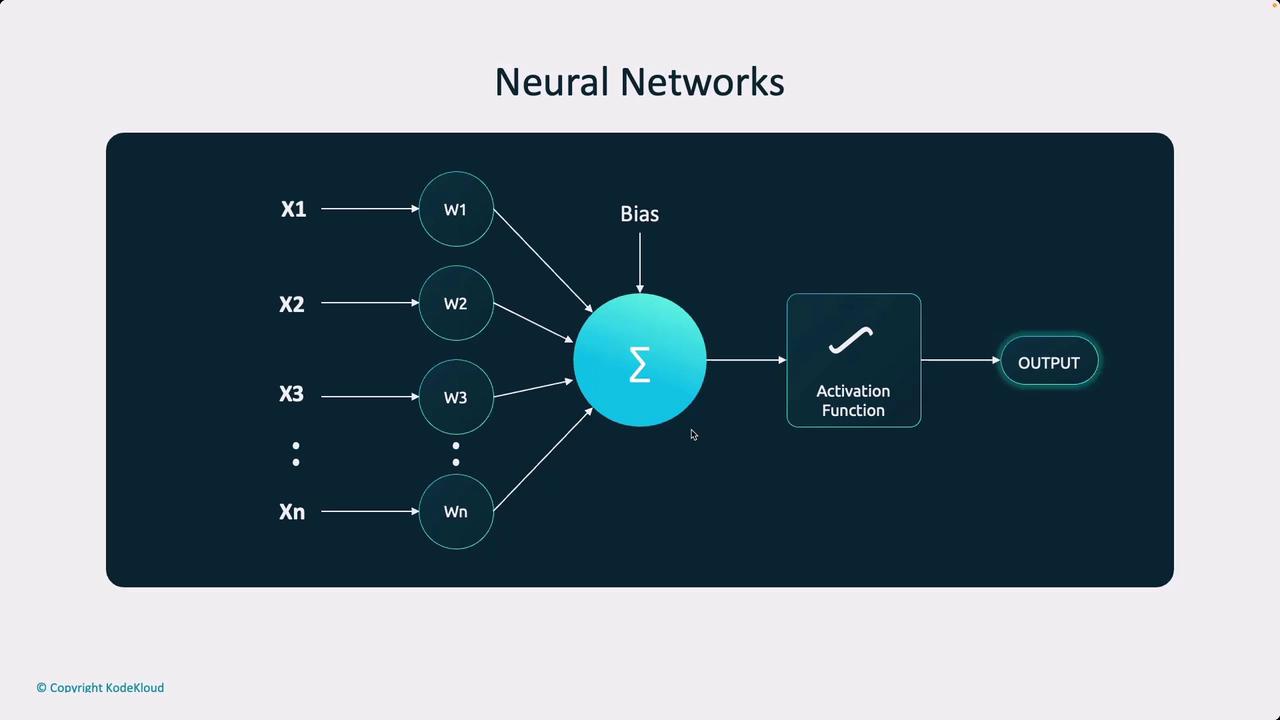

Every node in a neural network transforms its inputs using learned parameters, commonly known as weights and biases:

- Weights: Determine the significance of each input.

- Biases: Allow the network to adjust the activation threshold.

Consider a real-world decision such as whether to play football outdoors. Factors like weather (x₁), energy levels (x₂), friend availability (x₃), and pitch accessibility (x₄) contribute to this decision:

- Each factor is assigned a weight based on its importance.

- A bias may be added to incorporate personal preferences.

- The node sums the weighted inputs, adds the bias, and then passes the result through an activation function.

- If the result exceeds a specific threshold, the decision to play is made.

This basic operation forms the foundation of a perceptron—the simplest type of neural network unit.
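
The football decision above can be sketched as a single perceptron. This is a minimal illustration rather than a trained model: the weights, the bias, the 0/1 input encoding, and the function names are all made-up values chosen for this example.

```python
def perceptron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a step activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Step activation: fire (1) only when the sum is above zero.
    return 1 if z > 0 else 0


# Hypothetical importance of each factor:
# weather (x1), energy (x2), friends available (x3), pitch accessible (x4)
WEIGHTS = [0.5, 0.2, 0.2, 0.1]
# Hypothetical bias: a negative value means some evidence is needed before playing.
BIAS = -0.6


def play_football(factors):
    """Return 1 to play, 0 to stay in. Each factor is 1 (favorable) or 0 (not)."""
    return perceptron(factors, WEIGHTS, BIAS)


good_day = [1, 1, 1, 0]  # good weather, energetic, friends free, pitch closed
bad_day = [0, 1, 0, 1]   # bad weather, energetic, no friends, pitch open

print(play_football(good_day))  # 1
print(play_football(bad_day))   # 0
```

Because weather carries the largest weight in this sketch, a bad-weather day needs almost every other factor to be favorable before the sum clears the threshold. Making the bias less negative would make the decision easier to trigger.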

When multiple perceptrons are connected, they form a system that can represent functions no single unit can. While the basic structure of neural networks is straightforward, variations such as recurrent neural networks (RNNs), long short-term memory networks (LSTMs), and transformers add mechanisms for handling sequences and long-range context, and they continue to reshape the field.
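
As a small illustration of what connecting perceptrons buys you, the sketch below wires three of them into a two-layer network that computes XOR, a function a single perceptron cannot represent. The weights here are set by hand for the example (a real network would learn them), and the function names are hypothetical.

```python
def perceptron(inputs, weights, bias):
    """Weighted sum plus bias, followed by a step activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0


def xor_network(x1, x2):
    """Two hidden perceptrons feed one output perceptron (hand-set weights)."""
    # Hidden layer: one unit behaves like OR, the other like AND.
    h_or = perceptron([x1, x2], [1, 1], -0.5)
    h_and = perceptron([x1, x2], [1, 1], -1.5)
    # Output unit fires when OR is on but AND is off.
    return perceptron([h_or, h_and], [1, -1], -0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The hidden layer re-describes the inputs in terms the output unit can separate with a single threshold, which is the sense in which depth adds abstraction.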

Evolution of Neural Networks

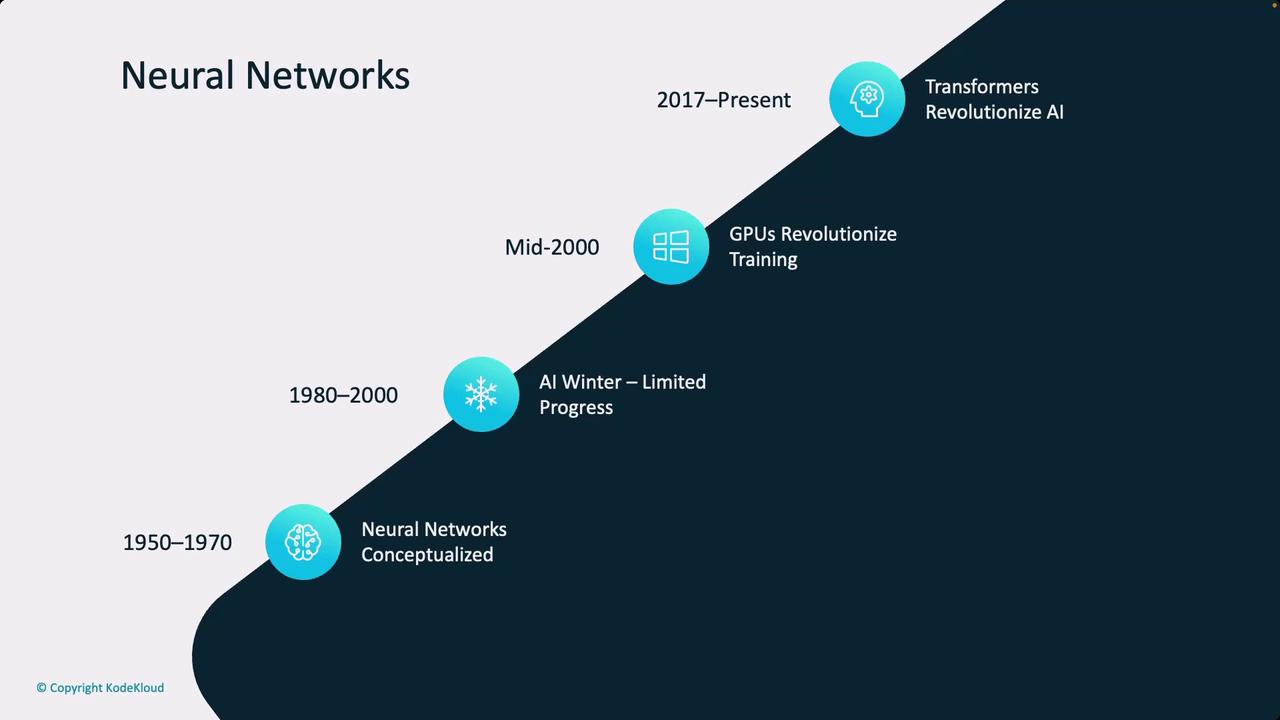

The journey of neural networks dates back to the mid-20th century. Early models laid the theoretical groundwork from the 1950s to the 1970s. However, it was later advances in computational power, especially GPUs and CUDA, that enabled modern neural networks to flourish. Training a network consists largely of matrix multiplications, which GPUs execute efficiently in parallel, so much larger models became practical to train. The availability of vast datasets has been equally crucial in empowering these models.

Key Milestones

Since the introduction of the transformer architecture in 2017, transformer-based models have driven much of the progress in deep learning. They are now at the forefront of research toward artificial general intelligence (AGI) and continue to shape the future of AI.

Conclusion

Neural networks are not just about stacking layers and nodes; they represent a sophisticated blend of mathematics and computation that mirrors some aspects of human cognition. The evolution from simple perceptrons to advanced transformer architectures marks a continuing journey in replicating and expanding the capabilities of intelligence.

