Generative AI in Practice: Advanced Insights and Operations

Evolution of AI Models

Transformers

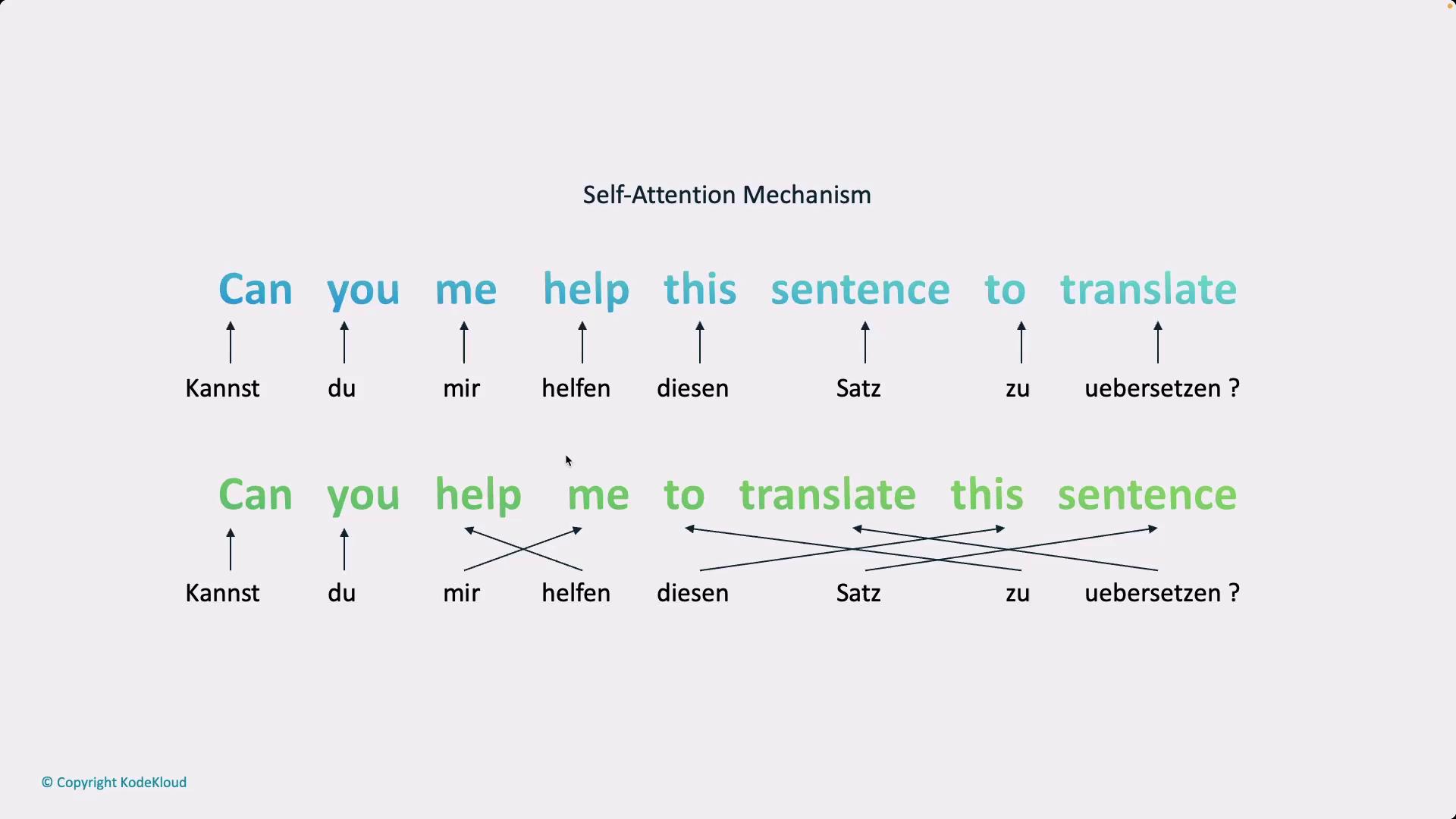

Transformers are often regarded as the brain tissue of modern AI. They efficiently approximate complex tasks by creating dynamic connections between data points—whether words in a sentence or other forms of information—much like the human brain. A key characteristic is their self-attention mechanism, which enables every element in the input to influence every other element simultaneously. This holistic analysis makes transformers especially effective for tasks such as natural language understanding.

In addition to their innovative attention mechanism, transformers leverage extensive matrix operations that are highly optimized for GPU acceleration. This reliance on efficient matrix multiplication enables these models to capture intricate relationships between data points and learn patterns well beyond basic instructions. It is one of the primary reasons transformer-based architectures dominate the field of generative AI today.

While exploring the internal workings of transformers can be fascinating, most practical applications focus on seamlessly integrating these models using high-level abstractions. This approach allows developers to train or fine-tune models like BERT and GPT without needing to delve into the low-level architectural details. New model offerings from companies like Cohere and Anthropic continue to expand the transformer ecosystem.

Before delving further into transformer mechanisms, it is important to introduce the concept of the scaling law. The scaling law posits that increasing a model's size—in terms of layers, parameters, and data—leads to better performance. Evidence suggests that, to a certain extent, there is no hard ceiling; however, the availability of clean data often imposes practical limits. The Chinchilla paper discusses an optimal token-to-parameter ratio of roughly 20 to 1, indicating that performance gains start to diminish beyond a certain model size.

Note

While the Chinchilla law provides valuable insights, practical experiments have shown that benefits plateau after a certain point. Researchers now explore combining multiple smaller models—using techniques like mixture of experts (MOE) or model reflection—to achieve performance on par with large monolithic models.

Despite their many strengths, transformers have notable challenges. They are data-inefficient, typically requiring enormous amounts of data to master even basic tasks. Their complex internal operations make them difficult to interpret, although advancements in mechanistic interpretability continue to improve our understanding. Additionally, transformers face context window limitations, which can hinder their ability to process extremely large inputs.

In practice, transformer models—such as those based on GPT-4—have demonstrated capabilities that outperform humans in specialized tasks like medical diagnosis when fine-tuned appropriately. Their massive memory and parallel processing capabilities provide significant advantages for complex applications.

Before moving forward, it is essential to understand the hardware that makes these capabilities possible. GPUs have revolutionized AI due to their immense parallel processing power, performing thousands of operations simultaneously and executing matrix computations rapidly. In addition to GPUs, specialized hardware like NVIDIA's tensor cores, TPUs, and Intel’s Gaudi further accelerate deep learning workloads.

One of the most intriguing aspects of transformer evolution is the emergence of advanced capabilities that go beyond simple input-output mapping. Without explicit training for advanced reasoning or deep contextual understanding, these models demonstrate complex problem-solving abilities. Their emerging qualities have transformed them from basic Q&A systems into tools exhibiting aspects of artificial general intelligence.

Modern AI research is also exploring complementary paradigms such as neurosymbolic AI. This approach combines the pattern recognition prowess of neural networks with the logical inference strengths of symbolic reasoning. By integrating transformer-based generative models with additional predictive systems (like those based on XGBoost or other statistical methods), developers can create compound AI systems that effectively tackle a wide range of real-world problems.

In the next section, we will delve deeper into the training processes of models similar to GPT-4, exploring topics such as backpropagation, weight tuning, and other foundational elements of deep learning. Thank you for reading.

Watch Video

Watch video content