Generative AI in Practice: Advanced Insights and Operations

Evolution of AI Models

Training and Fine-Tuning Models

This article walks through how models like GPT-4 are built from neural networks based on the Transformer architecture. Training such a model takes a great deal of time and human expertise, but it follows a series of well-defined steps: pre-training, fine-tuning, and, optionally, reinforcement learning from human feedback (RLHF).

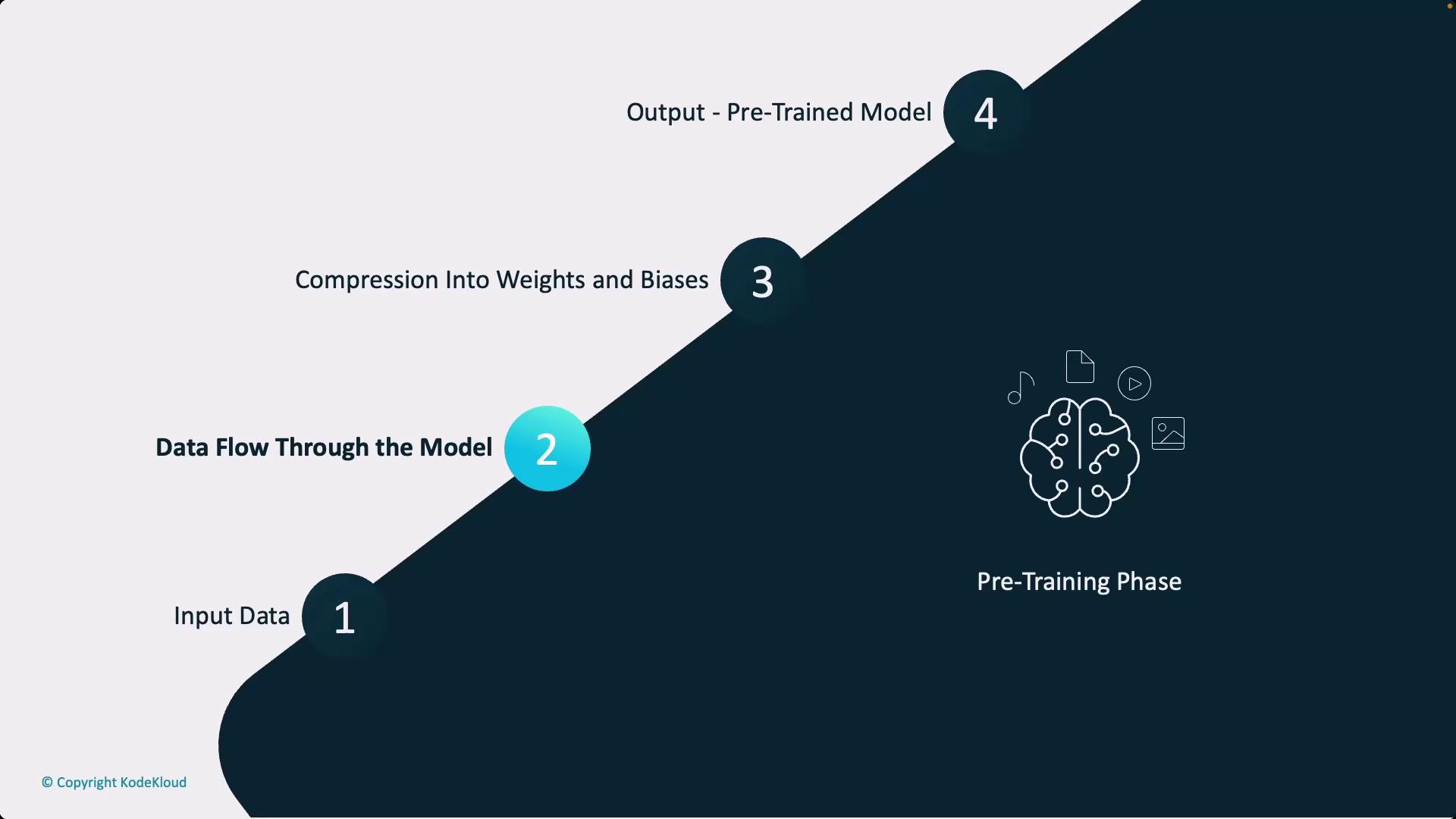

Pre-Training: Building the Base Model

The first phase combines the model's architecture with vast amounts of raw data. Pre-training passes terabytes of data through the model and compresses that information into the model's weights and biases. Think of it as distilling the content of the internet, or a large collection of books and documents, into a compact form that the model can draw on.
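The article does not name the pre-training objective. For GPT-style models it is typically next-token prediction: training minimizes the cross-entropy between the model's predicted distribution and the token that actually follows in the text. The sketch below is a minimal plain-Python version of that loss. The three-token vocabulary and the probabilities are hypothetical stand-ins for a real model's output, and `next_token_loss` is an illustrative name, not a library function.

```python
import math

def next_token_loss(predicted_probs, target_ids):
    """Average negative log-likelihood (cross-entropy) of the true next tokens.

    predicted_probs[i] is the model's probability distribution over the
    vocabulary at position i; target_ids[i] is the index of the token that
    actually came next in the training text.
    """
    if len(predicted_probs) != len(target_ids) or not target_ids:
        raise ValueError("need one distribution per target token")
    total = 0.0
    for dist, target in zip(predicted_probs, target_ids):
        total -= math.log(dist[target])  # low probability on the truth means high loss
    return total / len(target_ids)

# Hypothetical model outputs over a three-token vocabulary, for two positions.
probs = [
    [0.7, 0.2, 0.1],  # fairly confident that token 0 comes next
    [0.1, 0.1, 0.8],  # fairly confident that token 2 comes next
]
targets = [0, 2]
print(round(next_token_loss(probs, targets), 4))
```

Pre-training repeats this calculation over enormous amounts of text and uses gradient descent to nudge the weights and biases toward a lower loss. That is the sense in which the data is "compressed" into the model.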

The output of pre-training is a base model that has internalized a wide variety of patterns and information. This process is inherently lossy: a model of around a gigabyte cannot retain every detail from terabytes, or even exabytes, of raw data. Base models are good at continuing text in the style of their training sources (for example, producing Wikipedia-style text if trained on Wikipedia). However, they often fall short in interactive applications or in conversations that require rich context.
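The paragraph above makes two claims: a base model keeps only a compressed summary of its data, and it continues text rather than responding to it. A deliberately tiny stand-in makes both concrete: a word-bigram model in plain Python. This is not a Transformer. The names `train_bigram` and `continue_text` are hypothetical, and the corpus is made up.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """'Pre-train' a toy model: for each word, count which words follow it.

    Only these counts are kept, so the original text cannot be fully
    reconstructed from them; a tiny illustration of lossy compression.
    """
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def continue_text(model, prompt, max_words=5):
    """Greedily extend the prompt with the most frequent next word."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = model.get(words[-1])  # .get does not create new entries
        if not candidates:
            break  # the model never saw this word followed by anything
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(continue_text(model, "the", max_words=5))    # prints: the cat sat on the cat
print(continue_text(model, "please summarize"))    # prints: please summarize
```

The first call produces text that echoes the training corpus. It also loops, because the model only remembers word-pair counts. The second call shows that an instruction-style prompt outside the training distribution gets no useful continuation. A real base model is vastly more capable, but it shares this continuation-first behavior.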

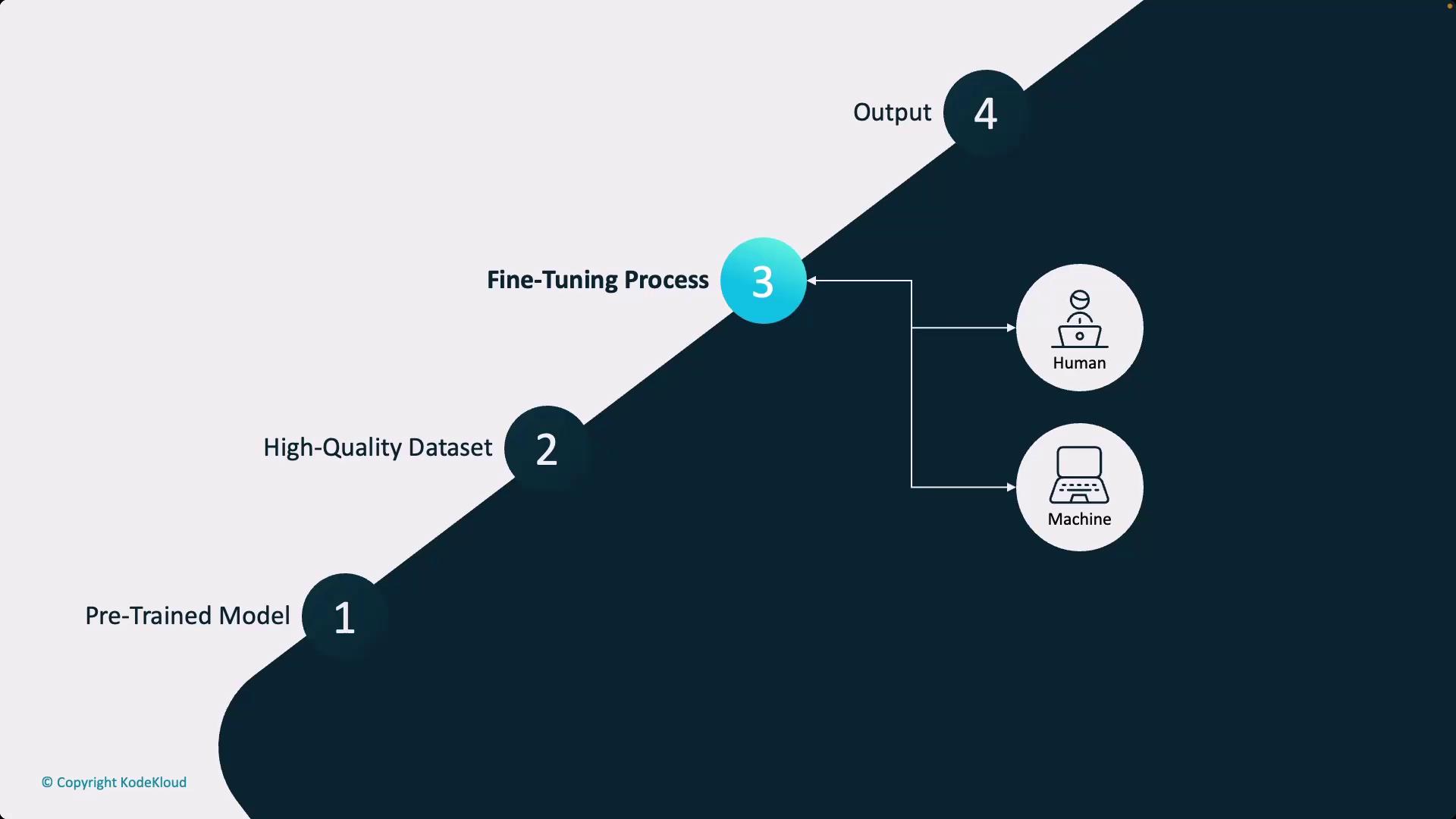

Fine-Tuning: Customizing the Base Model

Fine-tuning adapts the base model to more specific tasks or to a particular conversational style. In this phase, the model receives additional training on a high-quality, domain-specific dataset. Supervised fine-tuning is the most common approach: the model learns from example inputs paired with the outputs it should produce. Platforms such as Azure provide user-friendly interfaces for this procedure.
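Supervised fine-tuning data is usually a set of example prompts paired with the responses the model should learn to give. The sketch below assumes a particular layout: chat-style JSON Lines, with one object per line holding a `messages` list of `system`, `user`, and `assistant` turns. OpenAI-compatible fine-tuning services, including Azure OpenAI, accept this format, but check your provider's current documentation before relying on it. The example pairs, the system prompt, and the helper names are hypothetical.

```python
import json

# Hypothetical examples: each pairs a user prompt with the reply the
# fine-tuned model should imitate.
examples = [
    ("What is the refund window?", "Refunds are accepted within 30 days of purchase."),
    ("Do you ship overseas?", "Yes, international shipping is available."),
]

SYSTEM_PROMPT = "You are a concise customer-support assistant."  # assumed style

def to_chat_record(user_text, assistant_text, system_text=SYSTEM_PROMPT):
    """Build one chat-format training record (system, user, assistant)."""
    return {
        "messages": [
            {"role": "system", "content": system_text},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": assistant_text},
        ]
    }

def to_jsonl(pairs):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_record(u, a)) for u, a in pairs) + "\n"

print(to_jsonl(examples))
```

In practice, the quality and consistency of these assistant replies matter more than the file format. The model will imitate whatever style the examples show.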

Note

Fine-tuning is straightforward in theory, but obtaining high-quality data and defining effective evaluation metrics are the critical challenges. Both are needed to confirm that the model improves on the target task without degrading its performance elsewhere.
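One way to make "improves without compromising performance" measurable is to score both the base and the fine-tuned model on two held-out sets. One set covers the target task; the other covers general capability. The sketch below uses exact-match accuracy and a 2-point regression tolerance. The metric, the threshold, the function names, and the scores in the usage lines are all illustrative assumptions, not a standard.

```python
def exact_match_accuracy(predictions, references):
    """Fraction of predictions equal to the reference answer
    (after trimming whitespace and ignoring case)."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must align")
    if not references:
        raise ValueError("need at least one example")
    hits = sum(p.strip().lower() == r.strip().lower()
               for p, r in zip(predictions, references))
    return hits / len(references)

def accept_fine_tune(base_task, tuned_task, base_general, tuned_general,
                     max_general_drop=0.02):
    """Hypothetical release gate: the fine-tuned model must beat the base
    model on the target task without losing more than max_general_drop
    on a general-capability benchmark."""
    improved = tuned_task > base_task
    no_regression = (base_general - tuned_general) <= max_general_drop
    return improved and no_regression

# Hypothetical scores (accuracy between 0 and 1).
print(accept_fine_tune(0.60, 0.82, 0.75, 0.74))  # small general drop: accepted
print(accept_fine_tune(0.60, 0.82, 0.75, 0.70))  # general drop too large: rejected
```

Exact match suits short factual answers. Free-form outputs usually need graded rubrics or human review, which is part of why defining metrics is hard.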

RLHF: Refining with Human Feedback

An optional step, widely adopted by major model providers, is Reinforcement Learning from Human Feedback (RLHF). Humans, or in some setups another AI model, evaluate the fine-tuned model's outputs to check that they meet the desired standards. Reinforcement learning is then applied iteratively to align the model more closely with the preferred output characteristics. A related approach, constitutional AI, uses an additional AI model to check outputs against a written set of guidelines.
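The paragraph above does not detail how feedback becomes a training signal. The commonly published RLHF recipe works as follows:

- Labelers compare pairs of responses.
- A reward model is trained to score the preferred response higher.
- The language model is then optimized against that reward model, often with PPO.

The sketch below covers only the pairwise reward-model loss, assuming the reward model outputs a single scalar score per response. `preference_loss` is an illustrative name.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise reward-model loss: -log(sigmoid(r_chosen - r_rejected)).

    Near zero when the human-preferred response is scored much higher;
    large when the reward model prefers the rejected response.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)) for any sign of margin.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

print(preference_loss(2.0, -1.0))  # reward model agrees with the labeler: small loss
print(preference_loss(-1.0, 2.0))  # reward model disagrees: large loss
```

Minimizing this loss over many labeled pairs teaches the reward model to stand in for human judgment. The reinforcement-learning step can then query it at scale.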

Overview of the Training Process

The overall training process consists of:

- Pre-Training: Compressing large volumes of raw data into the weights and biases of a base model.

- Fine-Tuning: Adapting the base model with high-quality, domain-specific data for targeted applications.

- RLHF (Optional): Using reinforcement learning with human (or AI) feedback to further refine the model's outputs.
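The three stages in the list above compose in a fixed order, each consuming the previous stage's output. The skeleton below only records which stages ran. `Model`, `pretrain`, `fine_tune`, `rlhf`, and `build_model` are hypothetical placeholders, not a real training API. The sketch shows the data flow and that RLHF is an optional final step.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Model:
    """Placeholder for a model; it records which stages produced it."""
    name: str
    history: List[str] = field(default_factory=list)

def pretrain(raw_corpus: str) -> Model:
    # Stand-in for compressing raw data into weights and biases.
    return Model(name="base", history=[f"pretrain({raw_corpus})"])

def fine_tune(model: Model, dataset: str) -> Model:
    # Stand-in for supervised training on domain-specific examples.
    return Model(name="fine-tuned", history=model.history + [f"fine_tune({dataset})"])

def rlhf(model: Model, feedback: str) -> Model:
    # Stand-in for reinforcement learning against human (or AI) feedback.
    return Model(name="aligned", history=model.history + [f"rlhf({feedback})"])

def build_model(raw_corpus: str, sft_data: str, feedback: Optional[str] = None) -> Model:
    """Run the stages in order; RLHF runs only when feedback is supplied."""
    model = fine_tune(pretrain(raw_corpus), sft_data)
    if feedback is not None:
        model = rlhf(model, feedback)
    return model

print(build_model("web_text", "support_dialogs").history)
print(build_model("web_text", "support_dialogs", feedback="ratings").name)
```

In an enterprise setting, the first call often happens at an external provider. Only the later stages may run in-house.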

The result is a refined model ready for deployment. In many enterprise settings, external providers handle pre-training and fine-tuning. In regulated domains, however, you need to understand, and potentially manage, the fine-tuning and RLHF processes yourself.

Warning

When operating in regulated environments, make sure you understand the details of both the pre-training data and the fine-tuning process. This understanding is especially important if you perform these steps in-house.
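When you fine-tune in-house, one practical way to know exactly which data each stage used is to fingerprint the dataset and record it alongside the stage. The sketch below assumes the training records are available as a list of strings. The function names, the manifest fields, and the source label are hypothetical.

```python
import hashlib
import json

def dataset_fingerprint(records):
    """Return a SHA-256 digest and record count for a list of text records.

    Serializing the whole list as JSON keeps record boundaries unambiguous,
    so changing, adding, removing, or reordering records changes the digest.
    """
    payload = json.dumps(records, ensure_ascii=False).encode("utf-8")
    return {"sha256": hashlib.sha256(payload).hexdigest(), "count": len(records)}

def stage_manifest(stage, source, records):
    """Hypothetical audit entry tying a training stage to the exact data it used."""
    entry = {"stage": stage, "source": source}
    entry.update(dataset_fingerprint(records))
    return entry

manifest = [
    stage_manifest("fine-tuning", "internal/support_tickets", ["q1 -> a1", "q2 -> a2"]),
]
print(json.dumps(manifest, indent=2))
```

Storing such entries with each model version lets you later answer which data shaped a given model. This is the kind of understanding the warning above calls for.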
