Generative AI in Practice: Advanced Insights and Operations

Prompting Techniques in LLM

Small vs Large Language Models

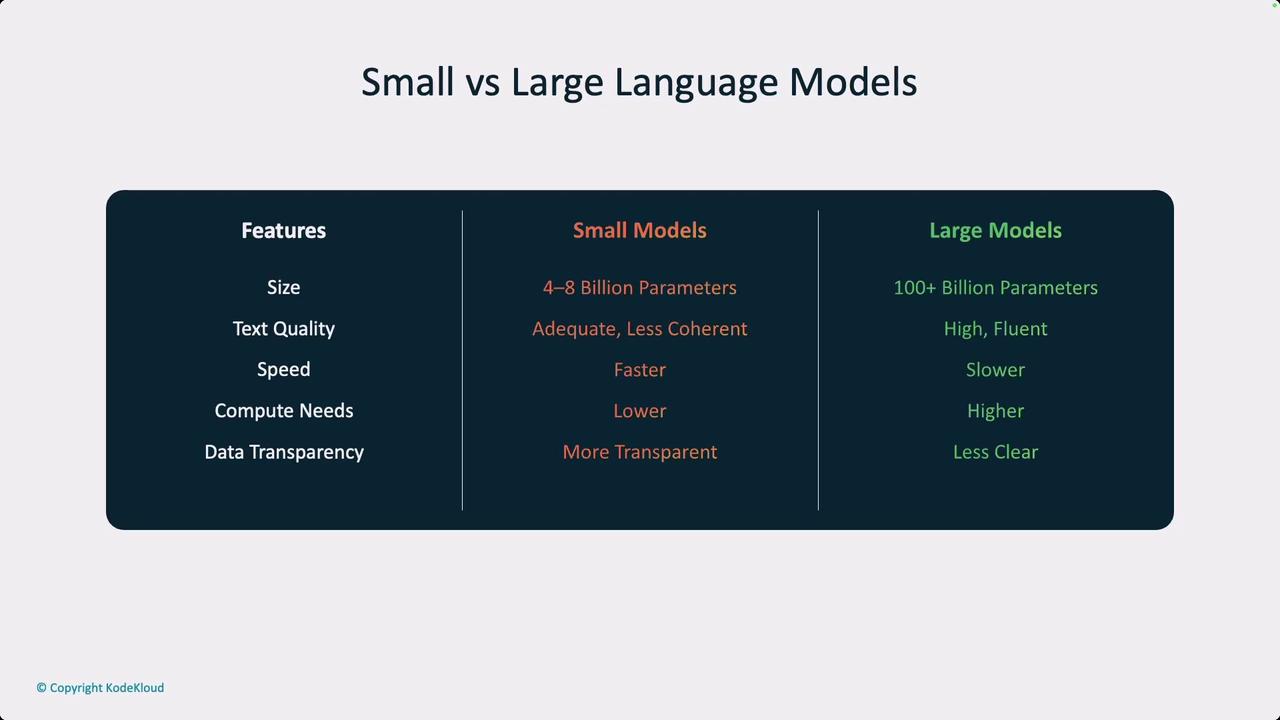

In this article, we dive deep into the impact of model size on language models by comparing two primary approaches: smaller models with 4 to 8 billion parameters versus larger ones with over 100 billion parameters. Although definitions may evolve over time, the current industry standard generally classifies models in the 4 to 8 billion parameter range as small and those exceeding 100 billion parameters as very large.

AI experts, lead architects, and CTOs often debate whether to invest in multiple small models or to rely on a single, more powerful large model. Smaller models offer the advantage of being more cost-effective and easier to fine-tune. They usually deliver more deterministic behavior due to a limited training dataset. However, their simplicity might limit capabilities such as high-quality reasoning and nuanced conversations. On the other hand, large models excel in complex reasoning and free-flow text generation, although they demand more compute power and specialized hardware.

Key Point

While small models offer efficiency and lower operating costs, large models bring superior fluency and advanced reasoning, making the choice highly dependent on the application.

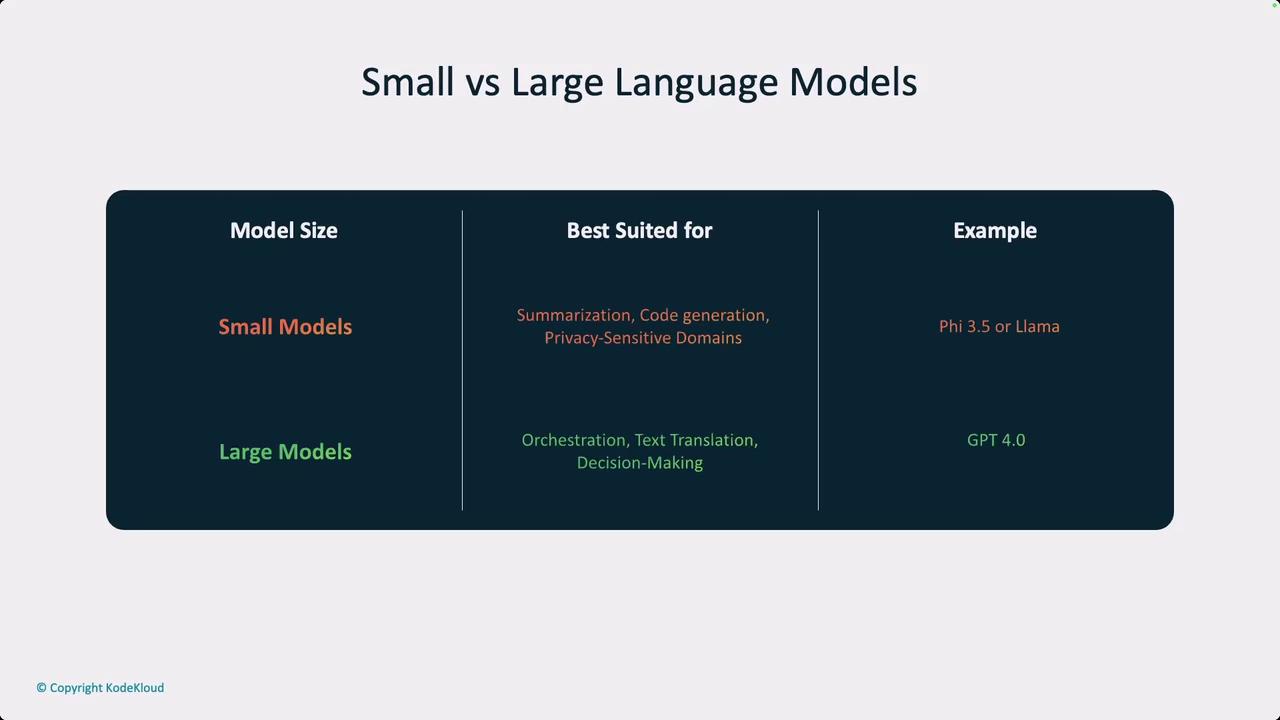

For instance, multi-agent architectures using multiple small models can sometimes outperform a single large model by leveraging techniques like chain-of-thought reasoning and reflective processes. Performance considerations remain crucial as well; running a small model on a CPU can be significantly faster than deploying a large model on a GPU cluster, especially in scenarios where low latency is critical. This is particularly important in use cases like summarizing structured documents, where even minimal latency increases due to network calls can be problematic under strict data privacy regulations.

Large models like those of the GPT-3/4 families are ideal for tasks that demand deep reasoning and orchestration. Conversely, smaller models from families such as Llama are well-suited for functions like summarization and code generation where extreme reasoning isn’t paramount. Ultimately, selecting between a small or large model depends on the specific application, data sensitivity, and available compute resources.

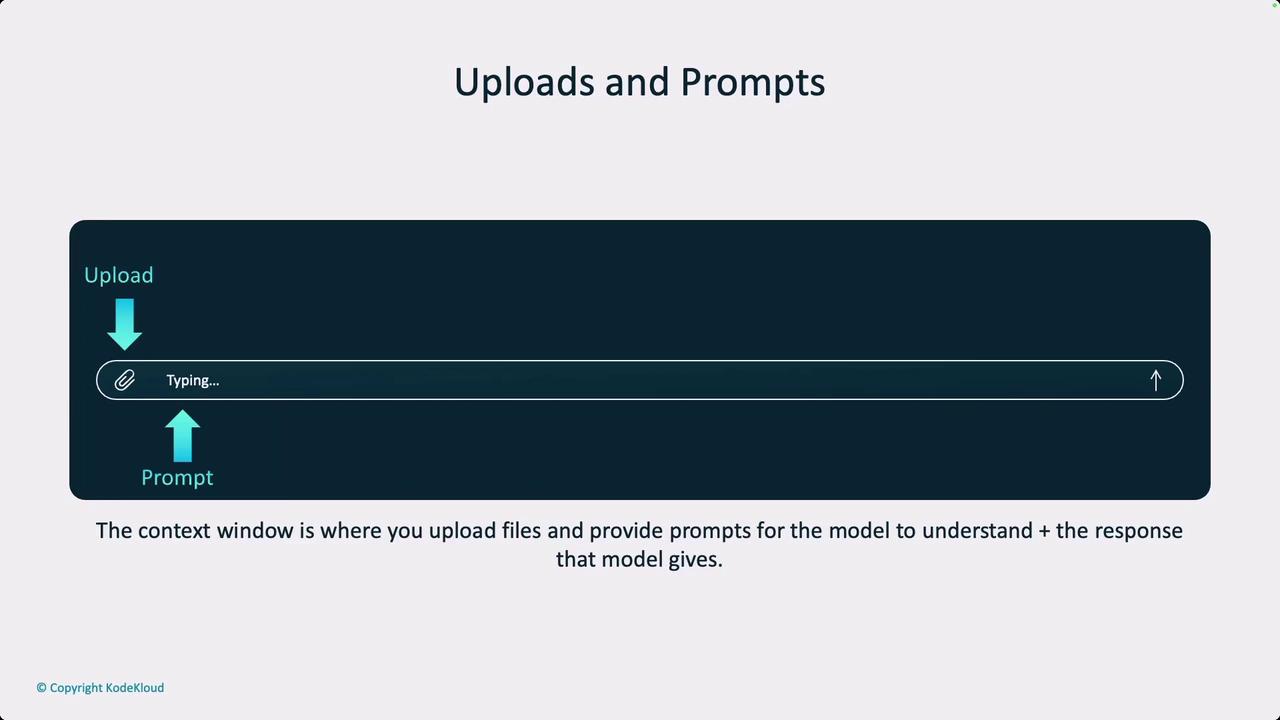

Another critical aspect of language model performance is the role of prompting and context. Prompts guide models through a process known as context learning. The model’s context window—which includes both the input tokens and generated output—plays a pivotal role. For example, if a model claims to support 128k tokens, that figure comprises the tokens provided as input plus the tokens it produces.

Consideration

When working with models that have extremely large context windows (such as Gemini’s support for up to 1 million tokens), be aware that there is a risk of losing track of key information. The model may disproportionately focus on either the beginning or the end of the sequence, which can affect performance.

The challenge of processing massive token contexts—often termed "needle in a haystack"—requires more than simply concatenating unrelated datasets. A coherent, well-curated dataset is essential for models to maintain context relevance over extended token inputs.

Cost efficiency is another pivotal factor, particularly in enterprise settings where token usage drives operational expenses. Even though token costs are gradually decreasing, optimizing the use of a model’s context window is critical, especially when handling large-scale deployments such as processing customer feedback, review videos, or detailed video transcripts in industries like automotive manufacturing.

In summary, selecting between a small and a large language model requires a careful assessment of multiple factors: specific use cases, the need for reasoning and orchestration versus determinism and speed, as well as data sensitivity and cost implications. By comprehensively understanding these trade-offs, practitioners can make informed decisions that align with both technical needs and business objectives amid the rapidly evolving landscape of generative AI.

Watch Video

Watch video content