Generative AI in Practice: Advanced Insights and Operations

Prompting Techniques in LLM

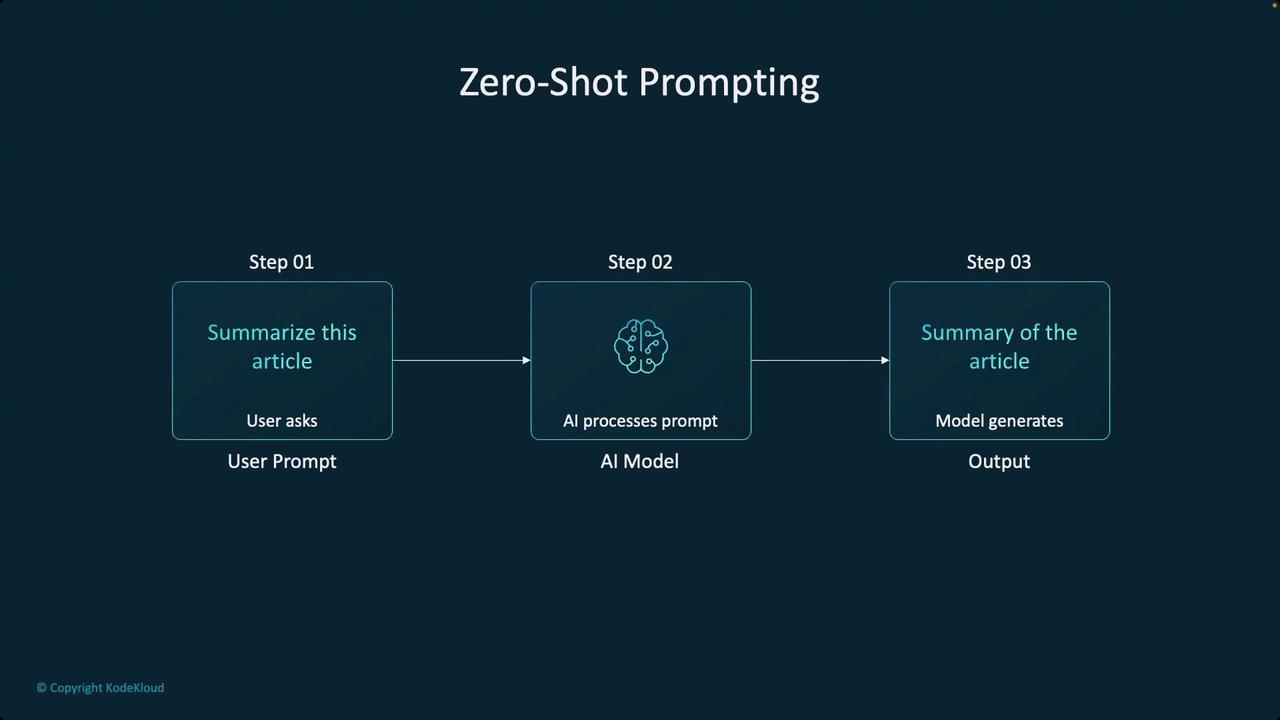

Zero Shot Prompting

This article explores zero-shot prompting, a technique for interacting with large language models (LLMs) and other foundation models without providing any examples in the prompt. As transformer-based foundation models continue to reshape artificial intelligence, it becomes crucial to adapt them to our specific use cases and datasets. There are two primary approaches to tailoring these models:

Fine-Tuning

Fine-tuning involves adjusting the neural network's weights to better capture the intricacies of our data. While this method can be highly effective, it is often expensive and less flexible, and it may not give the best results in every scenario.

In-Context Learning

This approach supplies the necessary context directly within the prompt during the interaction. Through careful prompt engineering, we can significantly enhance the model's output. Although this method has tremendous potential, its power is sometimes underestimated.

Many applications naturally call for zero-shot prompting, where a direct question is posed to the model without any guiding examples. For instance, consider the task of summarizing an article purely via zero-shot prompting. In such cases, the model interprets the prompt and generates a summary based solely on its understanding. In contrast, including example summaries can sometimes improve the outcome by offering clearer guidance.
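To make the contrast concrete, here is a minimal sketch of how the two kinds of summarization prompt might be assembled. The function names, the prompt wording, and the "Article:/Summary:" layout are illustrative assumptions, not a format required by any particular model or library; the resulting string would be passed to whichever LLM client you use.

```python
from typing import List, Tuple


def build_zero_shot_prompt(article: str) -> str:
    """Zero-shot: an instruction plus the input, with no worked examples."""
    return (
        "Summarize the following article in two sentences.\n\n"
        f"Article:\n{article}\n\n"
        "Summary:"
    )


def build_few_shot_prompt(article: str, examples: List[Tuple[str, str]]) -> str:
    """Example-based: the same task, preceded by (article, summary) pairs."""
    parts = ["Summarize each article in two sentences.\n"]
    for example_article, example_summary in examples:
        # Each example shows the model the expected input/output pattern.
        parts.append(f"Article:\n{example_article}\n\nSummary: {example_summary}\n")
    # The final block is left open so the model completes the summary.
    parts.append(f"Article:\n{article}\n\nSummary:")
    return "\n".join(parts)


if __name__ == "__main__":
    text = "Placeholder article text goes here."
    print(build_zero_shot_prompt(text))
    print(build_few_shot_prompt(text, [("An example article.", "An example summary.")]))
```

Both prompts end with an open "Summary:" line; the only difference is whether example pairs precede it, which is exactly the distinction between zero-shot and example-based prompting.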

The decision between using zero-shot prompting or example-based techniques largely depends on the specific application and the model's available context window. As these models become more sophisticated and context windows broaden, the balance between providing examples and relying solely on zero-shot techniques may evolve.
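The context-window trade-off can be sketched as a simple budget check. This is a rough illustration under stated assumptions: `estimate_tokens` uses the common rule of thumb of about four characters per token for English text, which is only an approximation (a real system would use the model's own tokenizer), and `select_examples` and its parameters are hypothetical names.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Crude token estimate (assumption: ~4 characters per token)."""
    return max(1, len(text) // 4)


def select_examples(
    examples: List[str],
    query: str,
    context_window: int,
    reserved_for_output: int,
) -> List[str]:
    """Keep examples, in order, while the prompt still fits the window.

    An empty result means there is no room for examples, so the prompt
    falls back to zero-shot.
    """
    budget = context_window - reserved_for_output - estimate_tokens(query)
    chosen: List[str] = []
    for example in examples:
        cost = estimate_tokens(example)
        if cost > budget:
            break  # The next example would overflow the window.
        chosen.append(example)
        budget -= cost
    return chosen
```

With a larger `context_window`, more examples survive the check, which mirrors the point above: as windows broaden, including examples becomes cheaper relative to relying on zero-shot alone.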

Note

For further insights into improving AI-driven outputs, consider exploring advanced prompt engineering techniques and comparing the benefits of zero-shot prompting versus example-based prompts.

In the subsequent sections of this article, we will dive deeper into prompt engineering methodologies, outline the nuances between different prompting techniques, and examine how the structure of a prompt can substantially influence the quality of the model's output.
