Kubernetes Autoscaling

Horizontal Pod Autoscaler (HPA)

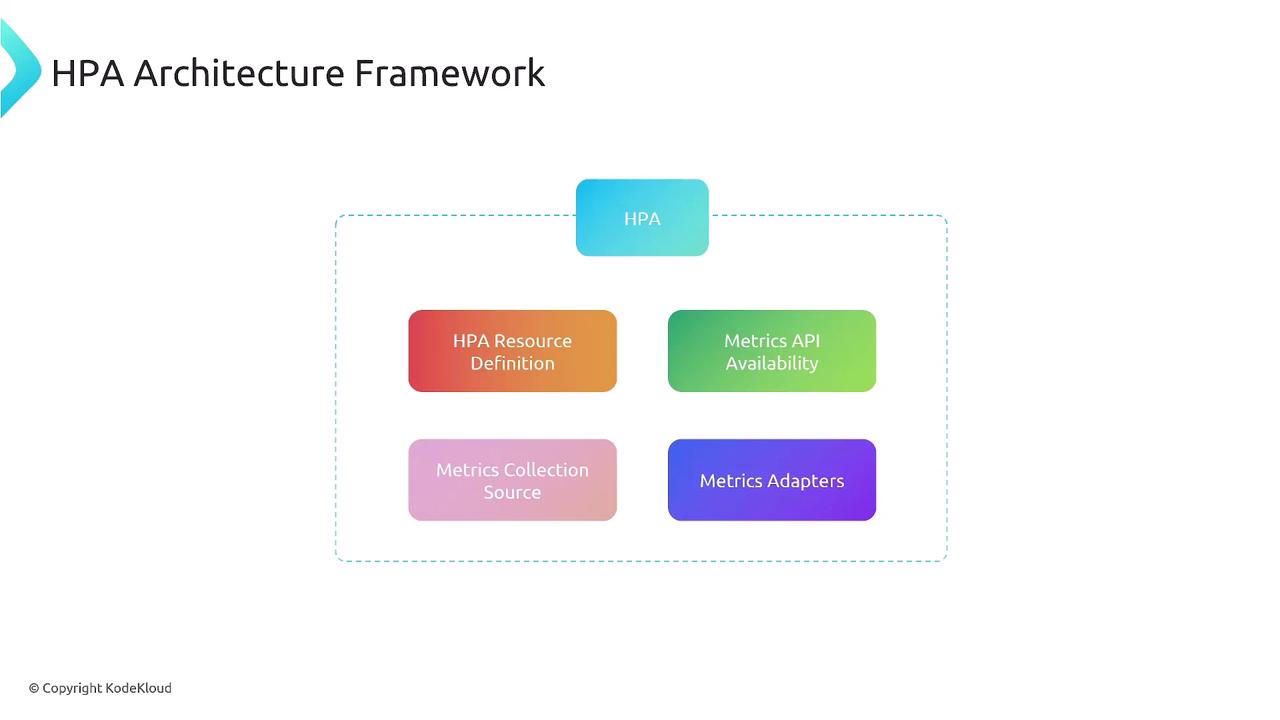

HPA Architecture

The Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pod replicas in a Deployment, StatefulSet, or ReplicaSet based on observed metrics. This guide walks through the HPA architecture, from its core components to its control loop, and shows how Kubernetes uses resource, custom, and external metrics to scale workloads.

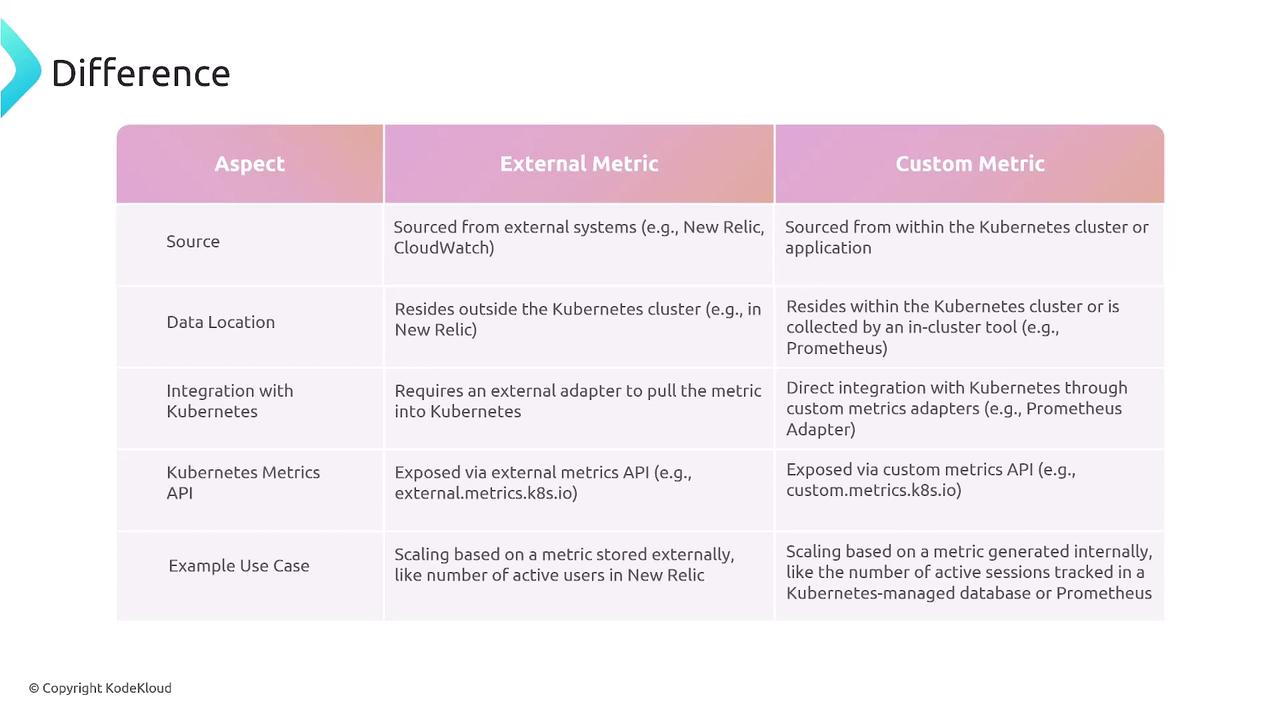

| Metric Category | Description | API Endpoint |
|---|---|---|
| Resource | CPU & memory usage | metrics.k8s.io |
| Custom | In-cluster application metrics | custom.metrics.k8s.io |
| External | Third-party or cloud metrics | external.metrics.k8s.io |

1. HPA Resource Definition

Define which workload to scale, the minimum/maximum replicas, and the metric targets.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-statefulset-hpa
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: StatefulSet
    name: my-statefulset
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50
```

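Here, the HPA keeps average CPU utilization around 50%, scaling the StatefulSet between 2 and 10 pods. Utilization is measured as a percentage of each pod's CPU request, so the StatefulSet's containers must declare CPU requests for this target to work.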
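The Kubernetes documentation gives the core HPA calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies it to the example above, assuming a single metric and ignoring the tolerance band, pod-readiness handling, and scaling behaviors that the real controller also applies; `desired_replicas` is a hypothetical helper, not a Kubernetes API.

```python
import math


def desired_replicas(current_replicas: int,
                     current_value: float,
                     target_value: float,
                     min_replicas: int,
                     max_replicas: int) -> int:
    """Hypothetical helper: core HPA ratio, clamped to [min, max]."""
    ratio = current_value / target_value
    proposal = math.ceil(current_replicas * ratio)
    # The HPA never goes below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, proposal))


# Values mirror the StatefulSet HPA above: 50% CPU target, 2..10 replicas.
print(desired_replicas(4, 80, 50, 2, 10))   # 4 * 1.6 = 6.4 -> 7
print(desired_replicas(4, 20, 50, 2, 10))   # 4 * 0.4 = 1.6 -> 2
print(desired_replicas(8, 100, 50, 2, 10))  # 8 * 2.0 = 16 -> capped at 10
```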

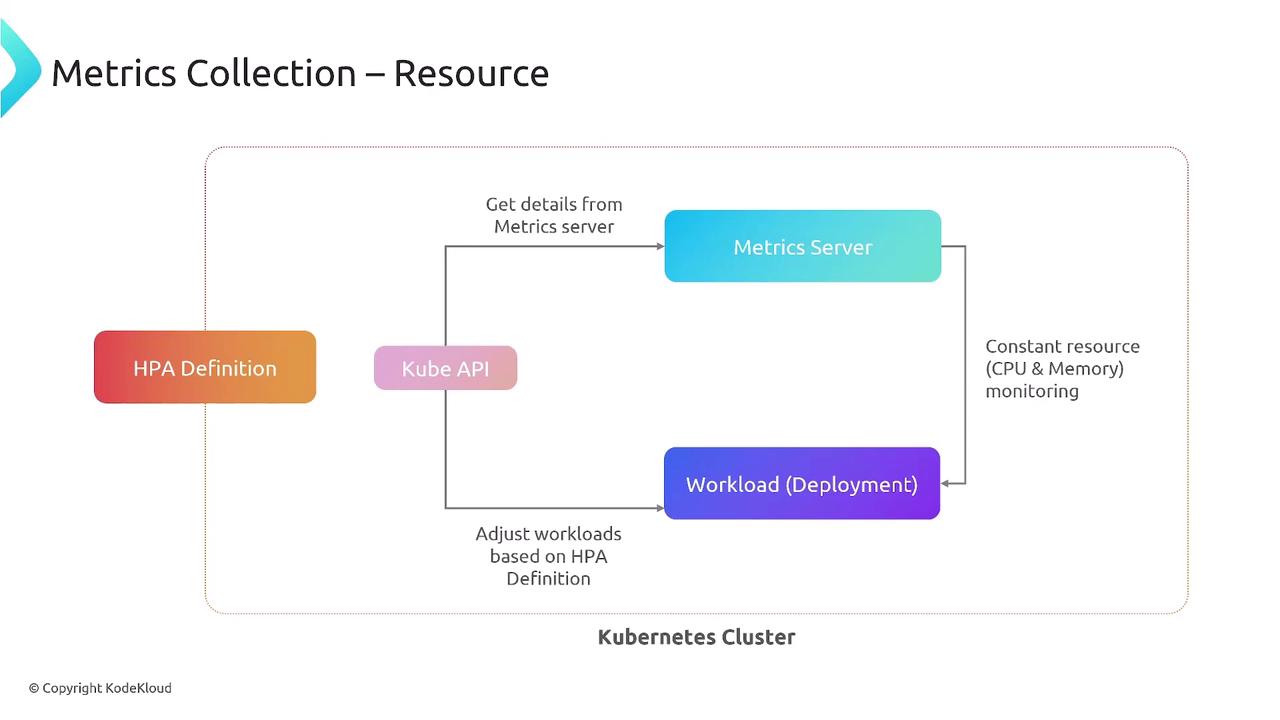

2. Native Resource Metrics Collection

For resource metrics, HPA queries the resource metrics API (metrics.k8s.io), which is usually served by the Kubernetes Metrics Server, to obtain CPU and memory usage.
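Utilization is the ratio of measured usage to requested CPU. A simplified Python model of that calculation, assuming CPU quantities in the forms Metrics Server commonly returns (nanocores such as `350000000n`, millicores such as `250m`, or whole cores); it sums usage and requests across pods, which is how the upstream controller aggregates them, and it omits memory and missing-metric handling. The function names and sample values are hypothetical.

```python
def cpu_to_millicores(quantity: str) -> float:
    """Convert a Kubernetes CPU quantity string to millicores.

    Handles only the common forms: nanocores ("n"), microcores ("u"),
    millicores ("m"), and plain cores such as "0.5" or "2".
    """
    if quantity.endswith("n"):
        return float(quantity[:-1]) / 1_000_000
    if quantity.endswith("u"):
        return float(quantity[:-1]) / 1_000
    if quantity.endswith("m"):
        return float(quantity[:-1])
    return float(quantity) * 1000


def cpu_utilization_percent(pods: list[tuple[str, str]]) -> float:
    """pods: one (usage, request) pair of CPU quantities per pod."""
    total_usage = sum(cpu_to_millicores(usage) for usage, _ in pods)
    total_request = sum(cpu_to_millicores(request) for _, request in pods)
    if total_request == 0:
        raise ValueError("Utilization targets need CPU requests on the pods")
    return 100 * total_usage / total_request


# Hypothetical readings for three pods: 600m used out of 1000m requested.
pods = [("150m", "250m"), ("100m", "250m"), ("350000000n", "500m")]
print(cpu_utilization_percent(pods))  # 60.0
```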

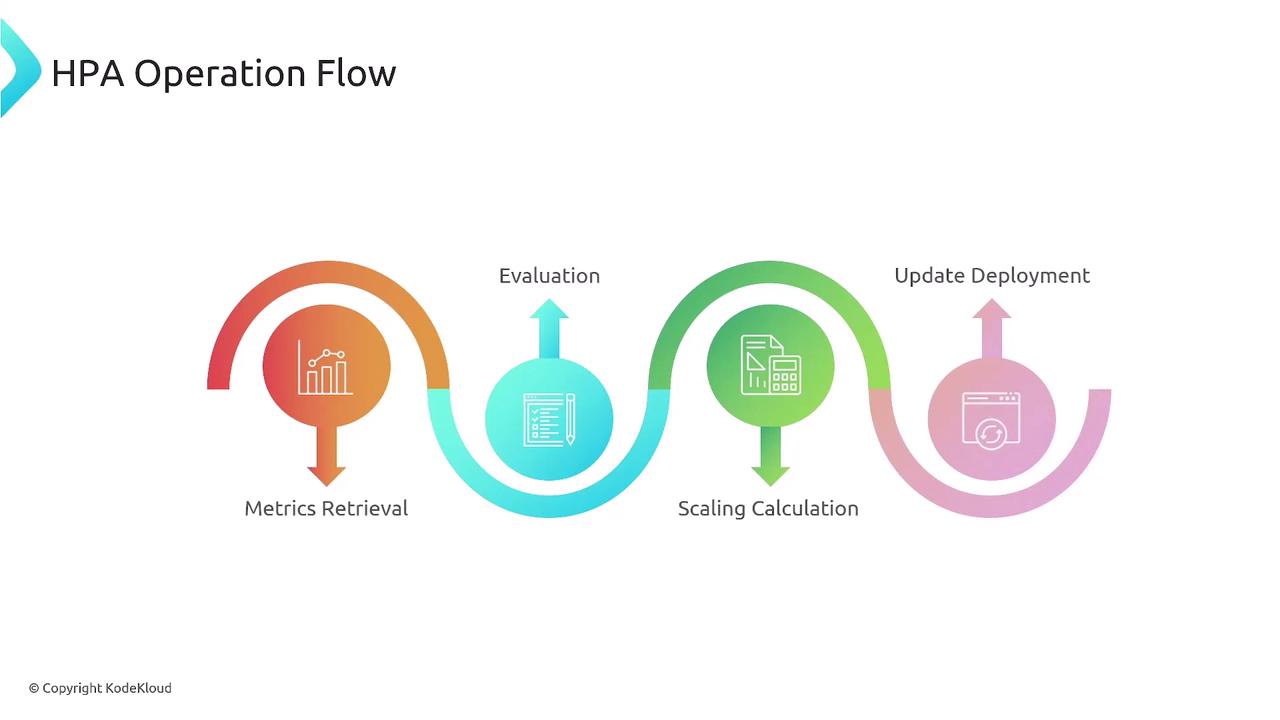

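On each iteration of its control loop (every 15 seconds by default), the HPA controller:

- Fetches current metrics (CPU/memory) from the Metrics Server

- Compares them to the target thresholds

- Computes the desired replica count

- Updates the target workload's replica count through its scale subresource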
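The four steps above can be sketched as a single reconciliation pass. This is a simplified model, assuming one CPU utilization metric and a plain dict standing in for the StatefulSet; `reconcile` and `fetch_utilization` are hypothetical names, and the 0.1 tolerance mirrors the default of kube-controller-manager's `--horizontal-pod-autoscaler-tolerance` flag.

```python
import math

TOLERANCE = 0.1  # default of --horizontal-pod-autoscaler-tolerance


def reconcile(workload, fetch_utilization, target, min_replicas, max_replicas):
    """One simplified HPA pass over a single CPU-utilization metric."""
    # 1. Fetch: read current average utilization (stand-in for Metrics Server).
    current = fetch_utilization(workload["name"])
    # 2. Compare: inside the tolerance band, keep the current replica count.
    ratio = current / target
    if abs(ratio - 1.0) <= TOLERANCE:
        return workload
    # 3. Compute: scale replicas by the ratio, then clamp to min/max.
    desired = math.ceil(workload["replicas"] * ratio)
    desired = max(min_replicas, min(max_replicas, desired))
    # 4. Update: return a modified copy instead of calling the API server.
    return {**workload, "replicas": desired}


sts = {"name": "my-statefulset", "replicas": 4}
print(reconcile(sts, lambda name: 53.0, 50, 2, 10))  # ratio 1.06: unchanged
print(reconcile(sts, lambda name: 90.0, 50, 2, 10))  # 4 * 1.8 = 7.2 -> 8
```

The tolerance check is what stops the controller from resizing the workload on every small fluctuation around the target.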

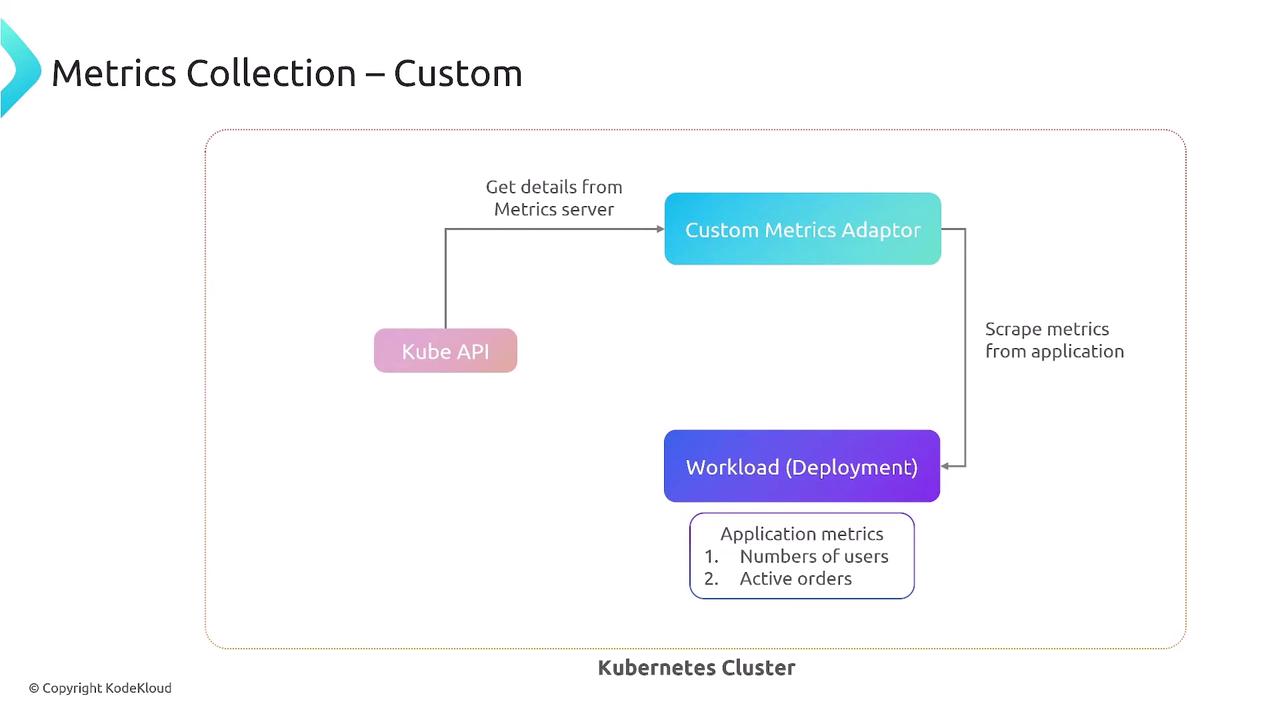

3. Custom Metrics (In-Cluster)

Use custom.metrics.k8s.io and an adapter (e.g., Prometheus Adapter) to scale on application-specific metrics.

Example: scale a Deployment on HTTP requests per second.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Pods
      pods:
        metric:
          name: http_requests_per_second
        target:
          type: AverageValue
          averageValue: "100"
```
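For a `Pods` metric with an `AverageValue` target, the controller averages the metric across the pods and scales by the same ratio as for CPU, so the result works out to roughly the total load divided by the per-pod target. A sketch assuming every pod is ready and reports a value; the per-pod request rates are made up for illustration and `pods_metric_replicas` is a hypothetical helper.

```python
import math


def pods_metric_replicas(per_pod_values: list[float], target_average: float,
                         min_replicas: int, max_replicas: int) -> int:
    """Hypothetical helper for a Pods metric with an AverageValue target."""
    current_average = sum(per_pod_values) / len(per_pod_values)
    # Same ratio rule as resource metrics: replicas * current / target.
    proposal = math.ceil(len(per_pod_values) * current_average / target_average)
    return max(min_replicas, min(max_replicas, proposal))


# Three pods serving 180, 150 and 120 req/s against averageValue "100".
print(pods_metric_replicas([180, 150, 120], 100, 2, 10))  # 450 / 100 -> 5
```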

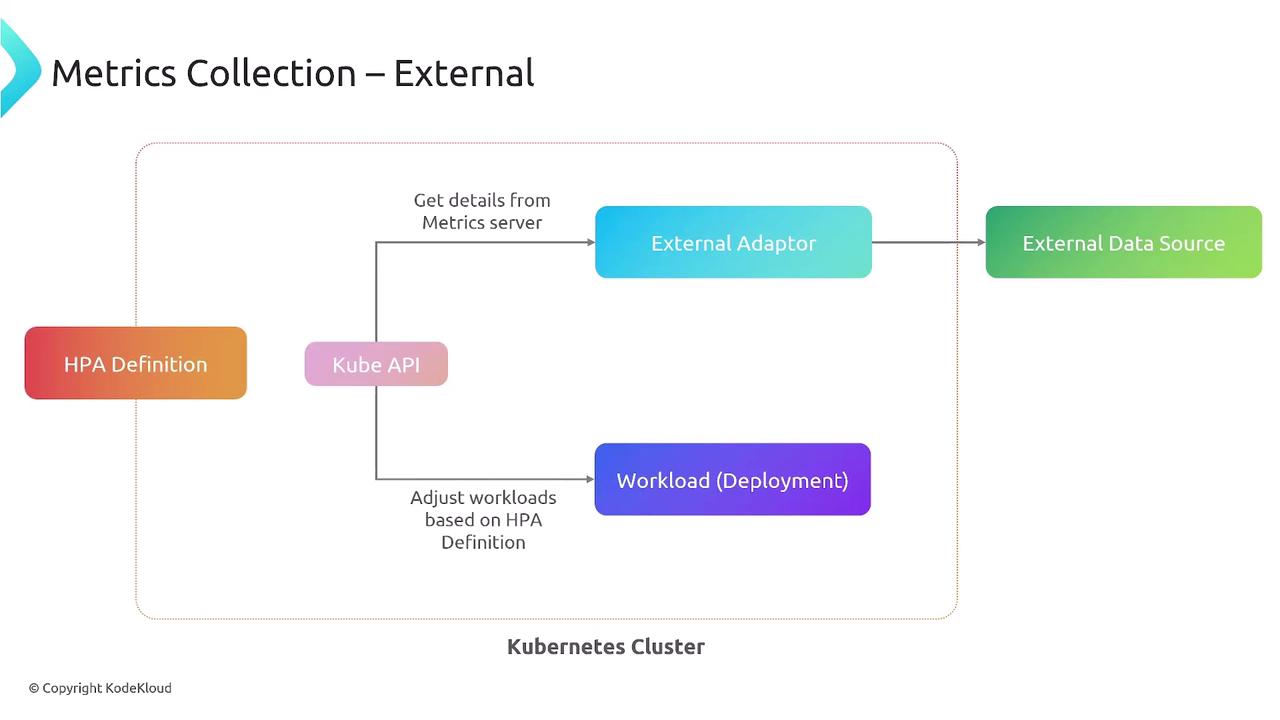

4. External Metrics (Outside Cluster)

Use metrics from systems outside the cluster, such as cloud providers and SaaS tools, via external.metrics.k8s.io and a suitable adapter.

Example using New Relic response time:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: External
      external:
        metric:
          name: newrelic.app.response_time
        target:
          type: Value
          value: "500"
```
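External metrics accept two target types. With `Value`, the controller scales the current replica count by `metric / target`; with `AverageValue`, it divides the cluster-wide metric by the per-pod target. A sketch of both rules, assuming all pods are ready and omitting the minReplicas/maxReplicas clamp and tolerance check; the readings are hypothetical and the function names are illustrative only.

```python
import math


def external_value_replicas(current_replicas: int, metric: float,
                            target_value: float) -> int:
    """type: Value -> scale current replicas by metric / target."""
    return math.ceil(current_replicas * metric / target_value)


def external_average_value_replicas(metric: float,
                                    target_average: float) -> int:
    """type: AverageValue -> divide the metric by the per-pod target."""
    return math.ceil(metric / target_average)


# Hypothetical response time of 750 against value "500" with 4 replicas.
print(external_value_replicas(4, 750, 500))          # 6
# Hypothetical external reading of 1200 with a per-pod averageValue of 100.
print(external_average_value_replicas(1200, 100))    # 12, before clamping
```

In a real HPA the second result would then be capped at `maxReplicas`.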

5. Metrics APIs and Adapters

Kubernetes exposes three metric APIs:

| Metric Type | API Endpoint | Adapter Example |
|---|---|---|
| Resource | metrics.k8s.io | Metrics Server |
| Custom | custom.metrics.k8s.io | Prometheus Adapter |
| External | external.metrics.k8s.io | New Relic Adapter, AWS CloudWatch Adapter |

Note

Adapters translate between Kubernetes and metric providers, enabling HPA to consume non-native metrics.
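Because each adapter registers its API group with the Kubernetes API server, you can check that it is serving data before pointing an HPA at it, for example with `kubectl get --raw <path>`. The helper below only builds those raw paths. It assumes the `v1beta1` version of each API (a version adapters commonly serve) and reuses the metric names from the examples above; whether an adapter accepts a given external metric name depends on its configuration. `metrics_api_path` is a hypothetical name.

```python
API_VERSIONS = {
    "resource": "metrics.k8s.io/v1beta1",
    "custom": "custom.metrics.k8s.io/v1beta1",
    "external": "external.metrics.k8s.io/v1beta1",
}


def metrics_api_path(category: str, namespace: str, metric: str = "") -> str:
    """Build a raw API path for one of the three metrics APIs."""
    base = f"/apis/{API_VERSIONS[category]}/namespaces/{namespace}"
    if category == "resource":
        # PodMetrics (CPU/memory usage) for every pod in the namespace.
        return f"{base}/pods"
    if category == "custom":
        # A per-pod metric for all pods ("*") in the namespace.
        return f"{base}/pods/*/{metric}"
    # External metrics are addressed by name directly under the namespace.
    return f"{base}/{metric}"


for category, metric in [("resource", ""),
                         ("custom", "http_requests_per_second"),
                         ("external", "newrelic.app.response_time")]:
    print(f"kubectl get --raw '{metrics_api_path(category, 'default', metric)}'")
```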

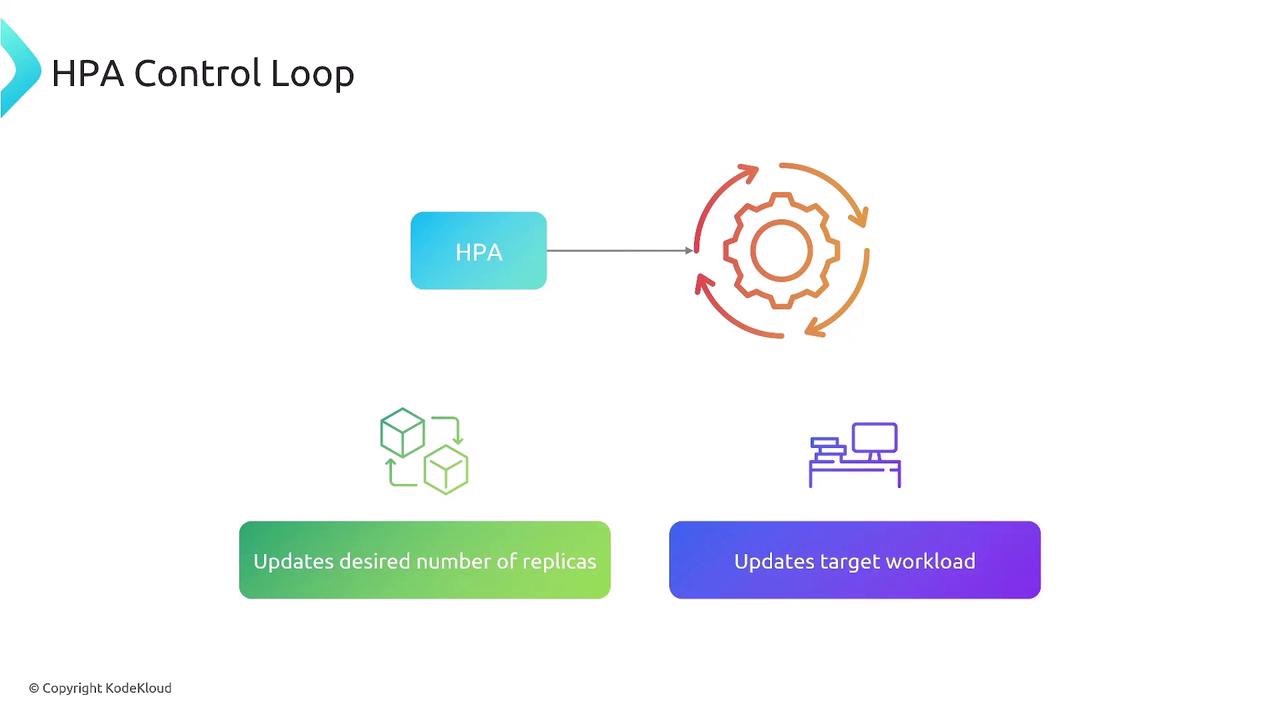

6. HPA Control Loop and Operation Flow

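The HPA controller runs as part of kube-controller-manager and repeats the fetch, compare, compute, and update cycle every sync period, so the replica count keeps tracking demand.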

Key Considerations

- Ensure the Metrics Server is installed for resource metrics.

- Deploy custom/external adapters before referencing their APIs.

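- Configure scale-up/down policies and stabilization windows (under `spec.behavior`) to avoid flapping.

- Tune metric targets to your workload patterns. The control loop interval is a cluster-wide kube-controller-manager setting (`--horizontal-pod-autoscaler-sync-period`), not a per-HPA field.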
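Stabilization windows are set under `spec.behavior.scaleUp` and `spec.behavior.scaleDown` via `stabilizationWindowSeconds`. For scale-down, the controller looks back over the window and acts on the highest recommendation it saw, which suppresses flapping when load dips briefly; the default scale-down window is 300 seconds. The class below is a simplified Python model of that rule, assuming a scale-up window of 0 (the default) and ignoring rate-limiting policies; `ScaleDownStabilizer` is a hypothetical name, not a Kubernetes API.

```python
from collections import deque


class ScaleDownStabilizer:
    """Simplified model of behavior.scaleDown.stabilizationWindowSeconds.

    Scale-ups pass straight through (scale-up window of 0, the default);
    scale-downs never go below the highest recommendation in the window.
    """

    def __init__(self, window_seconds=300.0):
        self.window = window_seconds
        self.history = deque()  # (timestamp_seconds, recommended_replicas)

    def stabilize(self, now, recommendation, current):
        self.history.append((now, recommendation))
        # Forget recommendations that have fallen out of the window.
        while self.history and self.history[0][0] < now - self.window:
            self.history.popleft()
        if recommendation >= current:
            return recommendation
        highest = max(r for _, r in self.history)
        return min(current, highest)


stab = ScaleDownStabilizer(window_seconds=300)
# Hypothetical recommendations at a few timestamps; load dips after t=0.
for t, rec in [(0, 8), (15, 3), (30, 4), (330, 4)]:
    print(t, stab.stabilize(t, rec, current=8))
```

The brief dip at t=15 is ignored, and the workload only drops to 4 replicas at t=330, once every recommendation still inside the 300-second window asks for 4.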

Warning

Incorrect thresholds or missing adapters can lead to no scaling or unexpected behavior. Always test HPA configurations in a staging environment.
