Kubernetes Autoscaling

Course Overview

Welcome to the Kubernetes Autoscaling course! In this lesson, you’ll learn how to automate resource scaling to keep your applications resilient, responsive, and cost-efficient under varying traffic patterns.

Why Autoscaling Matters

When your online store faces a sudden surge, such as a flash sale, manual scaling can’t react fast enough. Autoscaling in Kubernetes works like a smart thermostat for your cluster: it adds capacity when demand spikes and removes it as traffic eases, which helps maintain performance while keeping costs under control.

Kubernetes Autoscaling Architecture

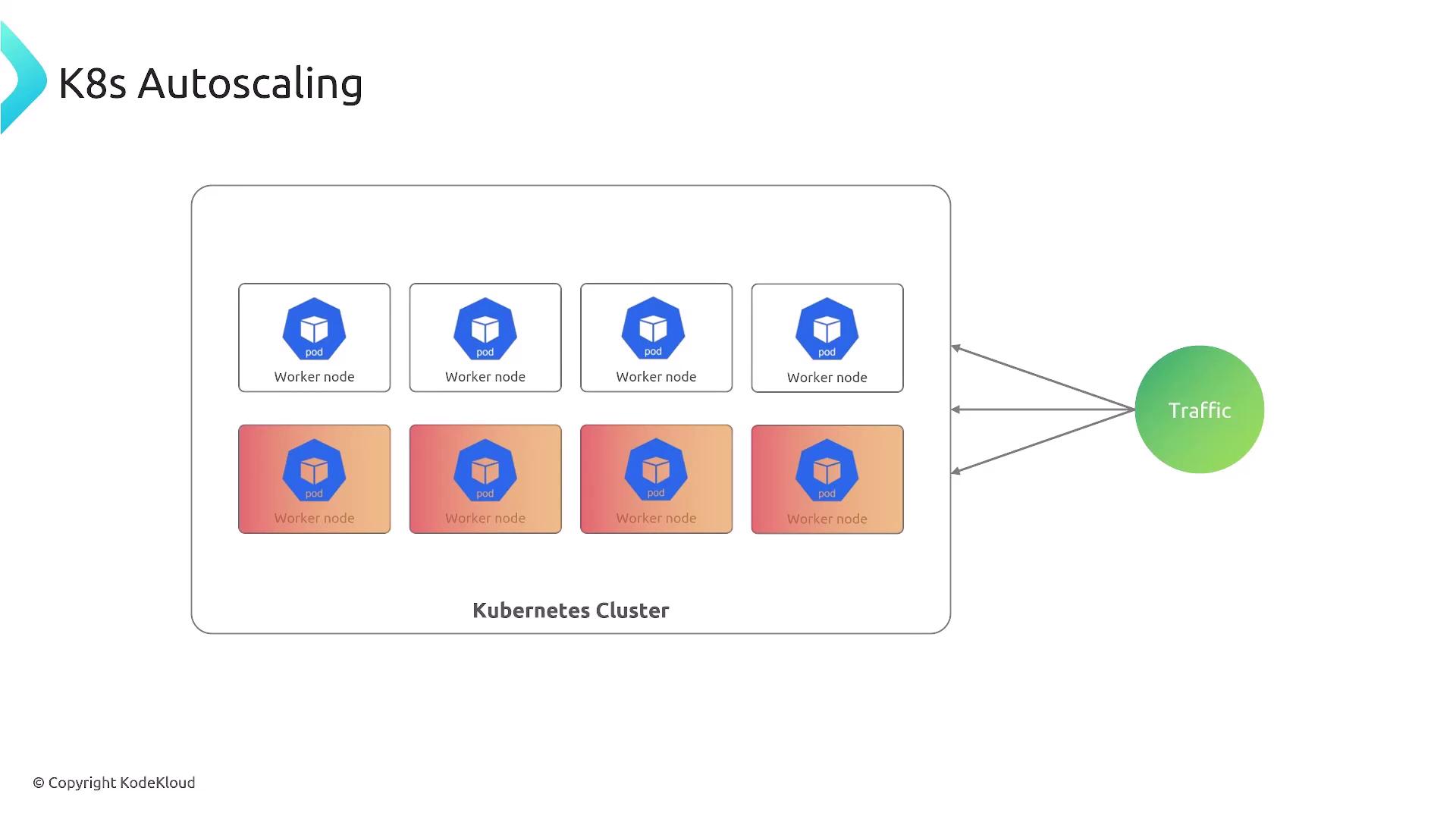

Kubernetes autoscaling relies on close collaboration between:

- Control Plane: Makes scaling decisions based on metrics and cluster state.

- Worker Nodes: Run your application pods and scale out/in as directed.

- Pods: The smallest deployable units, which increase or decrease in count or resource allocation.

When load increases, the control plane adds pod replicas. If the new pods cannot be scheduled on the existing nodes and a node autoscaler is configured, additional nodes are provisioned. If a node fails, its workloads are rescheduled onto healthy nodes, keeping applications available.
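The relationship between pod replicas and node count can be sketched roughly as follows. This is a deliberately simplified illustration that considers only CPU requests; the real scheduler also accounts for memory, system pods, affinity rules, and more. The function name and the example sizes are assumptions chosen for illustration.

```python
import math

def nodes_needed(replicas: int, pod_cpu_millicores: int, node_cpu_millicores: int) -> int:
    """Estimate how many identical nodes are needed to fit `replicas` pods.

    Simplification: only CPU requests are considered, and every node is
    assumed to offer the same allocatable CPU.
    """
    pods_per_node = node_cpu_millicores // pod_cpu_millicores
    if pods_per_node == 0:
        raise ValueError("a single pod does not fit on a node")
    return math.ceil(replicas / pods_per_node)

# Hypothetical sizes: each pod requests 500m CPU, each node offers 2000m.
print(nodes_needed(6, 500, 2000))  # 6 pods at 4 pods per node -> 2 nodes
print(nodes_needed(9, 500, 2000))  # 9 pods at 4 pods per node -> 3 nodes
```

When the replica count grows past what the current nodes can hold, the extra pods stay pending until more nodes are available.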

Requirements

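Make sure you have the Kubernetes Metrics Server installed. It supplies the CPU and memory usage data that resource-based autoscaling depends on. Custom and external metrics are not served by Metrics Server; they require a separate metrics adapter (for example, Prometheus Adapter) or a tool such as KEDA.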

Refer to the Metrics Server setup guide.

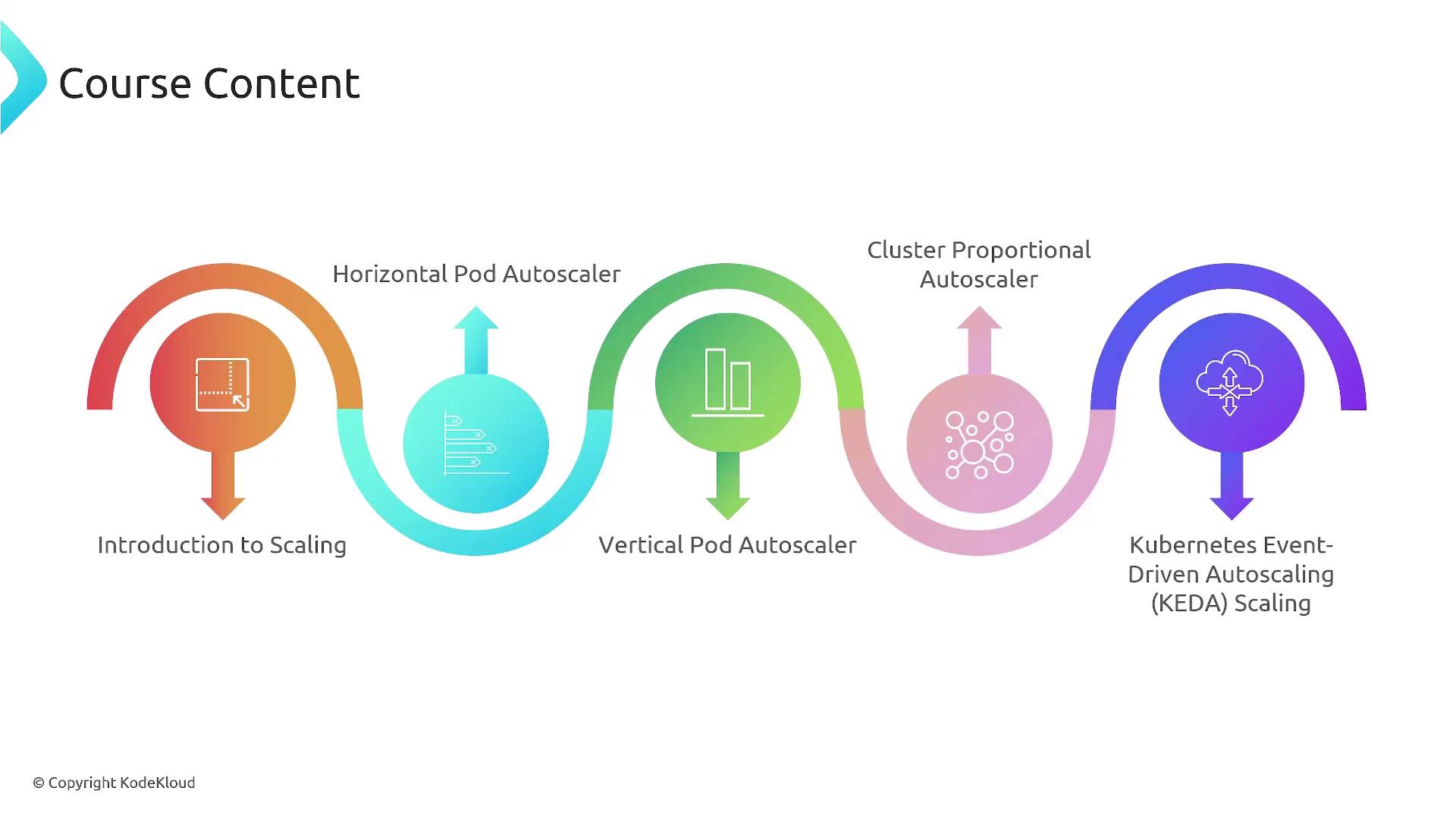

Course Content

Below is an overview of the topics we’ll cover in this lesson:

| Topic | Description |
|---|---|
| Fundamentals of Scaling | Key concepts, use cases, and scaling patterns. |
| Horizontal Pod Autoscaler (HPA) | Automatically adjusts the pod replica count based on CPU, memory, or custom metrics. |
| Vertical Pod Autoscaler (VPA) | Recommends or enforces CPU and memory requests/limits for pods. |
| Cluster Proportional Autoscaler (CPA) | Scales the replica count of a workload in proportion to cluster size (number of nodes or cores). |
| Kubernetes Event-Driven Autoscaling (KEDA) | Scales workloads in response to external event sources (e.g., Kafka, SQS). |
| Cluster Autoscaler | Adjusts node count to fit pod scheduling requirements. |
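To make the HPA row more concrete, here is a minimal sketch of the replica calculation described in the Kubernetes documentation: `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default) of 1.0. The function and parameter names are illustrative, and the real controller also handles missing metrics, pod readiness, and stabilization windows, none of which are modeled here.

```python
import math

def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style replica calculation (illustrative only)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))

# 4 replicas averaging 90% CPU against a 60% target -> scale out to 6.
print(desired_replicas(4, 90, 60))
# 4 replicas averaging 30% CPU against a 60% target -> scale in to 2.
print(desired_replicas(4, 30, 60))
```

The `min_replicas` and `max_replicas` bounds matter in practice: they stop a noisy metric from scaling a workload to zero or far beyond what the cluster can hold.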

Cluster Autoscaler Caution

Scaling nodes can impact cloud costs. Always review your budget and set safe limits when configuring the Cluster Autoscaler.
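One way to pick a safe upper limit is to work backward from a budget. The sketch below is a rough illustration, not a pricing tool: the node price and budget are hypothetical figures, amounts are in cents to keep the arithmetic exact, and 730 hours per month is an approximation.

```python
def max_nodes_within_budget(monthly_budget_cents: int,
                            node_price_cents_per_hour: int,
                            hours_per_month: int = 730) -> int:
    """Largest node count whose worst-case monthly cost fits the budget.

    Assumes every node runs for the whole month at a flat hourly price.
    """
    monthly_cost_per_node = node_price_cents_per_hour * hours_per_month
    return monthly_budget_cents // monthly_cost_per_node

# Hypothetical figures: $1,000 monthly budget, $0.10 per node-hour.
print(max_nodes_within_budget(100_000, 10))  # 13
```

The result is a candidate for the maximum node count you allow the Cluster Autoscaler to reach; leave headroom for storage, networking, and other charges that this estimate ignores.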

Learning Outcomes

By the end of this lesson, you will be able to:

- Explain core autoscaling concepts in Kubernetes.

- Configure and deploy HPA, VPA, CPA, KEDA, and Cluster Autoscaler.

- Implement best practices to ensure reliable, cost-effective scaling.

Let’s dive in and start mastering Kubernetes autoscaling!
