Kubernetes Autoscaling

Vertical Pod Autoscaler VPA

Summary Closure and Limitation of HPA VPA

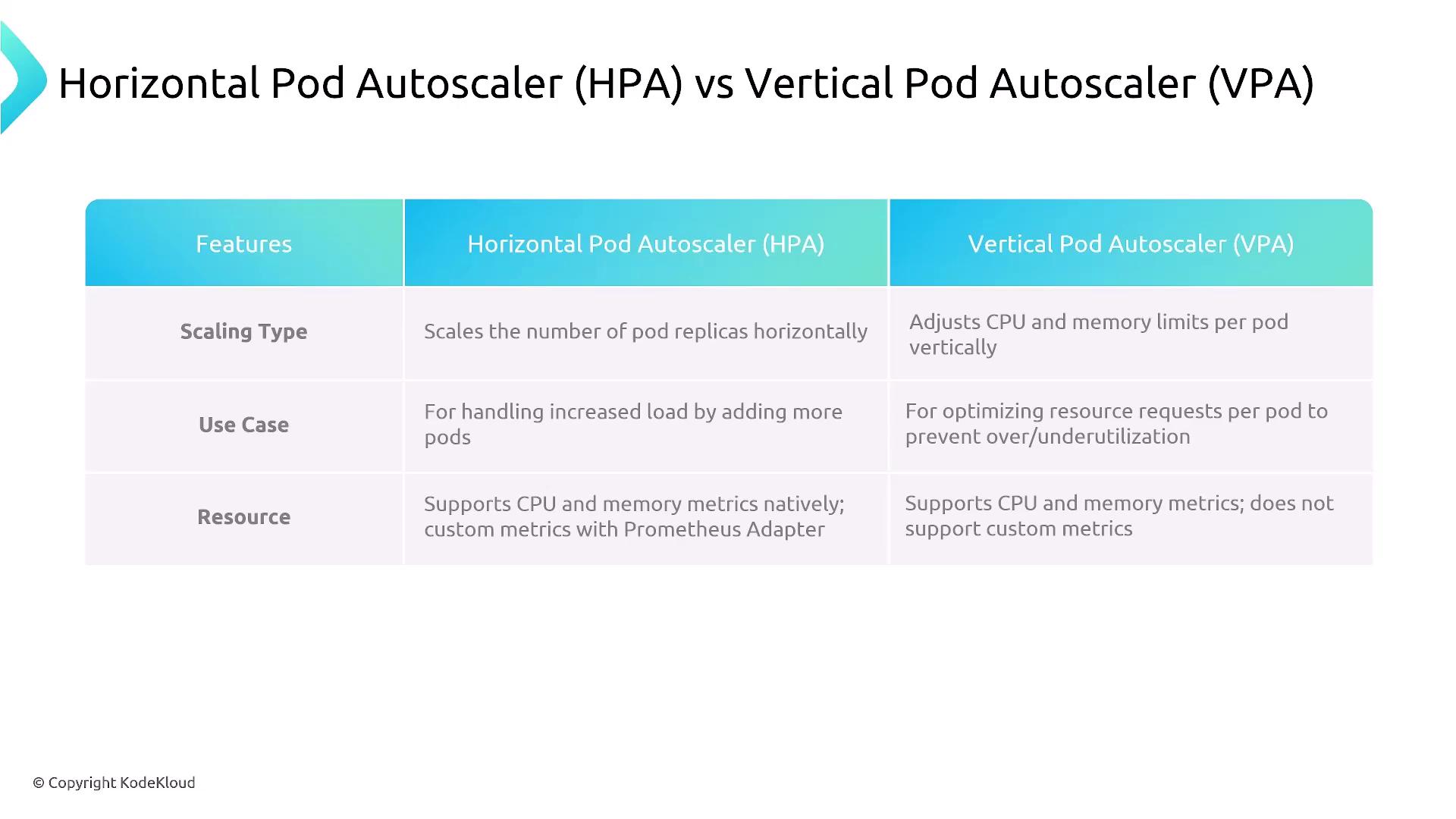

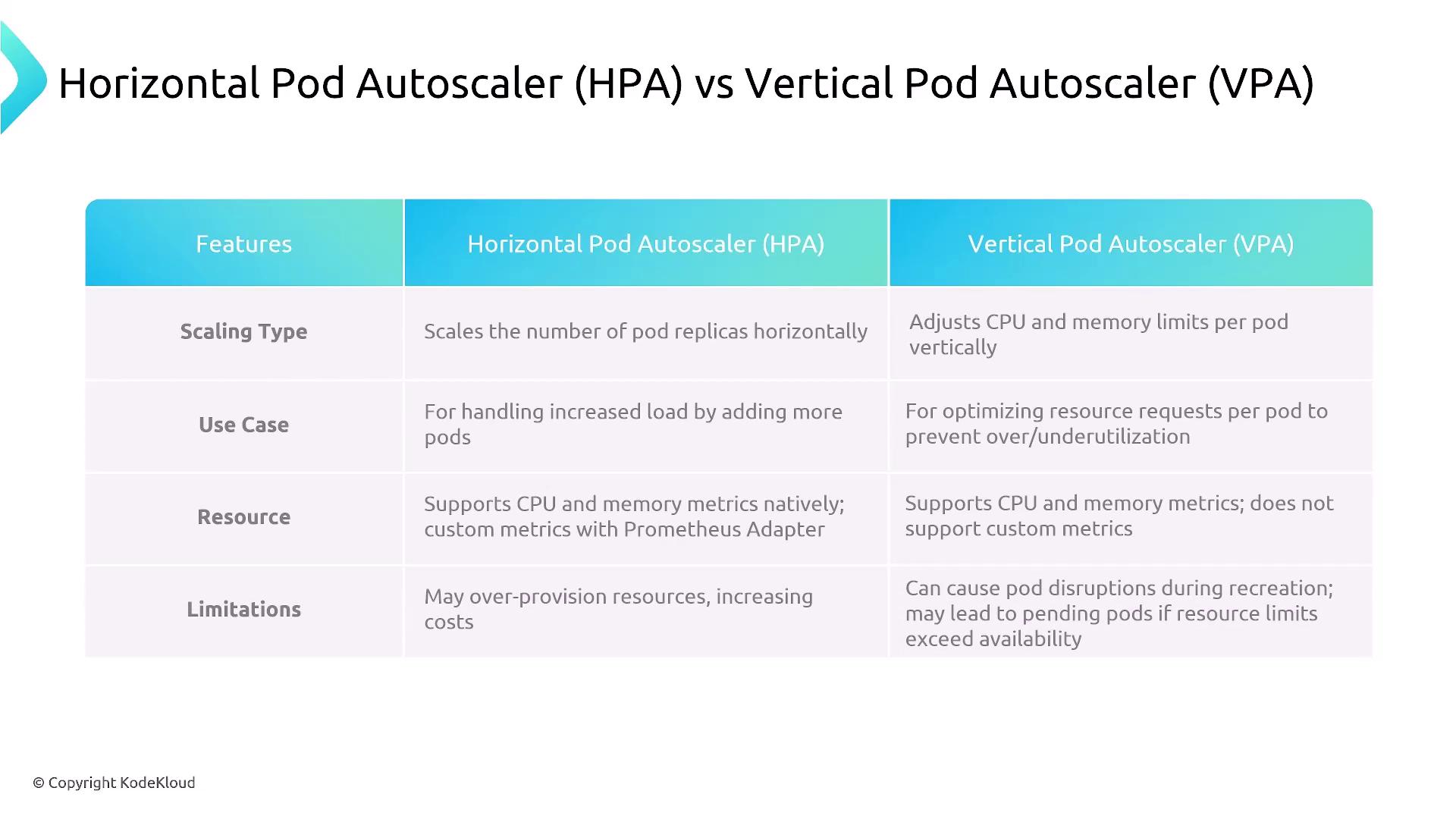

In this guide, we revisit Kubernetes autoscaling, comparing the Horizontal Pod Autoscaler (HPA) and Vertical Pod Autoscaler (VPA). You’ll learn when to use each autoscaler, how to combine them safely, and what limitations to watch for in production.

Key Kubernetes Autoscaling Tools

| Autoscaler | Scaling Type | Metrics | Ideal Use Case | Pod Restarts |

|---|---|---|---|---|

| Horizontal Pod Autoscaler (HPA) | Horizontal | CPU (native), custom (Prometheus adapter) | Stateless apps, varying traffic | No |

| Vertical Pod Autoscaler (VPA) | Vertical | CPU/memory requests | Stateful or resource-sensitive services | Yes (updates resource settings, triggers restarts) |

Core Concepts

- HPA: Automatically adjusts the number of pod replicas based on metrics such as CPU utilization or custom metrics via the Prometheus adapter.

- VPA: Recommends or applies CPU/memory resource adjustments to existing pods, which can trigger restarts when updating requests and limits.

Pairing HPA and VPA

You can safely combine both autoscalers by delegating different metrics:

- Let HPA scale horizontally using custom metrics (e.g., queue length, request latency).

- Let VPA handle resource recommendations for CPU and memory.

Warning

Avoid running HPA and VPA on the same metric (CPU or memory) to prevent conflicting recommendations and pod flapping.

Known Limitations of VPA

Pod Recreation

VPA updates resource requests by restarting pods, which can briefly disrupt service.HPA Conflicts

Using HPA and VPA on identical metrics (CPU/memory) often leads to scaling loops.Admission Controller

If VPA-recommended resources exceed node capacity, the admission plugin may block pod creation.Unmanaged Pods

VPA only adjusts pods owned by controllers (e.g., Deployment, StatefulSet), ignoring standalone pods.

Additional Considerations

OOM Handling

VPA can proactively prevent out-of-memory crashes by increasing memory requests, but it may miss rare edge cases.Large-Cluster Performance

VPA’s behavior in large-scale environments is not fully validated; conduct thorough testing before production rollout.Resource Saturation

Recommendations exceeding available capacity cause pods to stay inPendingstate.Overlapping Recommendations

Multiple VPA resources applied to the same pods can generate scheduling contention.

Note

Integrate the Cluster Autoscaler to dynamically add nodes when pods remain pending.

Conclusion

Select the right autoscaler based on your workload profile:

- Use HPA for horizontally scalable, stateless applications with fluctuating demand.

- Use VPA for fine-tuning resource allocations in stateful or resource-sensitive services.

- Combine HPA and VPA only when each operates on distinct metrics to avoid conflicts.

Autoscaling optimizes application performance and infrastructure costs. Always validate autoscaler behavior and limitations in your environment before going live.

Further Reading

Watch Video

Watch video content