Troubleshooting Internal Networking

When Kubernetes networking breaks, identifying the root cause quickly is crucial. This guide covers the most common troubleshooting areas: CNI issues, network policies, DNS and service discovery, and service-to-endpoint-to-pod connectivity. Follow the structured steps below to restore cluster networking.

Networking in Kubernetes depends on several layers, each with its own troubleshooting focus:

| Scenario | Focus | Key Commands |
|---|---|---|
| CNI | Pod network agents and connectivity | `kubectl get pods -n kube-system`, `cilium status` |
| Network Policies | Ingress/egress filters | `kubectl get networkpolicies`, `ping`, `nc`, `curl` |
| Service Discovery & DNS | CoreDNS health and resolution | `kubectl logs -n kube-system -l k8s-app=kube-dns`, `nslookup`, `dig` |
| Services & Endpoints | Service definitions and backends | `kubectl describe svc`, `kubectl get endpoints` |

1. Troubleshooting CNIs

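Most Container Network Interface (CNI) plugins, such as Cilium, Calico, and Flannel, run their node agents as pods (typically a DaemonSet, often in `kube-system`). Start by validating their status: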

Check CNI pod status

- Run `kubectl get pods -n kube-system` and look for restarts or `CrashLoopBackOff`.
- Inspect events: `kubectl describe pod <cni-pod> -n kube-system`.
- Review logs: `kubectl logs <cni-pod> -n kube-system`.

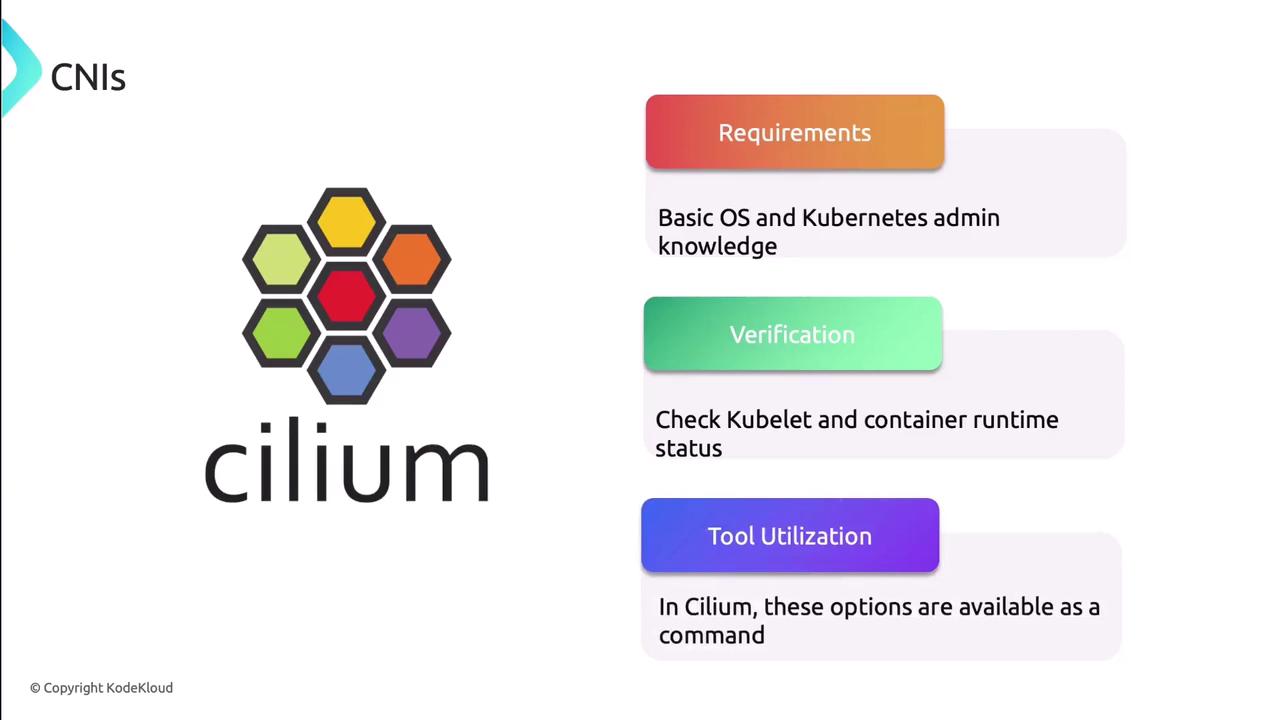
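The pod checks above can be scripted. The following is a minimal Python sketch. It assumes `kubectl` is on your `PATH` and configured for the cluster. `fetch_pods` and `unhealthy_pods` are hypothetical helper names, not part of any library. The sketch reads the JSON that `kubectl get pods -o json` emits and flags pods whose containers have restarted or are waiting in `CrashLoopBackOff`.

```python
import json
import subprocess
from typing import Any


def fetch_pods(namespace: str = "kube-system") -> dict[str, Any]:
    """Hypothetical helper: return `kubectl get pods -n <ns> -o json` as a dict."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


def unhealthy_pods(pod_list: dict[str, Any], max_restarts: int = 0) -> list[str]:
    """Hypothetical helper: names of pods with too many restarts or stuck in CrashLoopBackOff."""
    flagged = []
    for pod in pod_list.get("items", []):
        name = pod["metadata"]["name"]
        # containerStatuses may be absent while a pod is still Pending.
        for status in pod.get("status", {}).get("containerStatuses", []):
            waiting = status.get("state", {}).get("waiting") or {}
            if (
                status.get("restartCount", 0) > max_restarts
                or waiting.get("reason") == "CrashLoopBackOff"
            ):
                flagged.append(name)
                break  # one bad container is enough to flag the pod
    return flagged
```

On a live cluster, `unhealthy_pods(fetch_pods())` returns the kube-system pods worth inspecting with `kubectl describe` and `kubectl logs`.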

Verify node health

- Confirm that `kubelet` and the container runtime (Docker, containerd) are running.
- For Cilium users, `cilium node status` shows kernel modules, BPF maps, and node health.

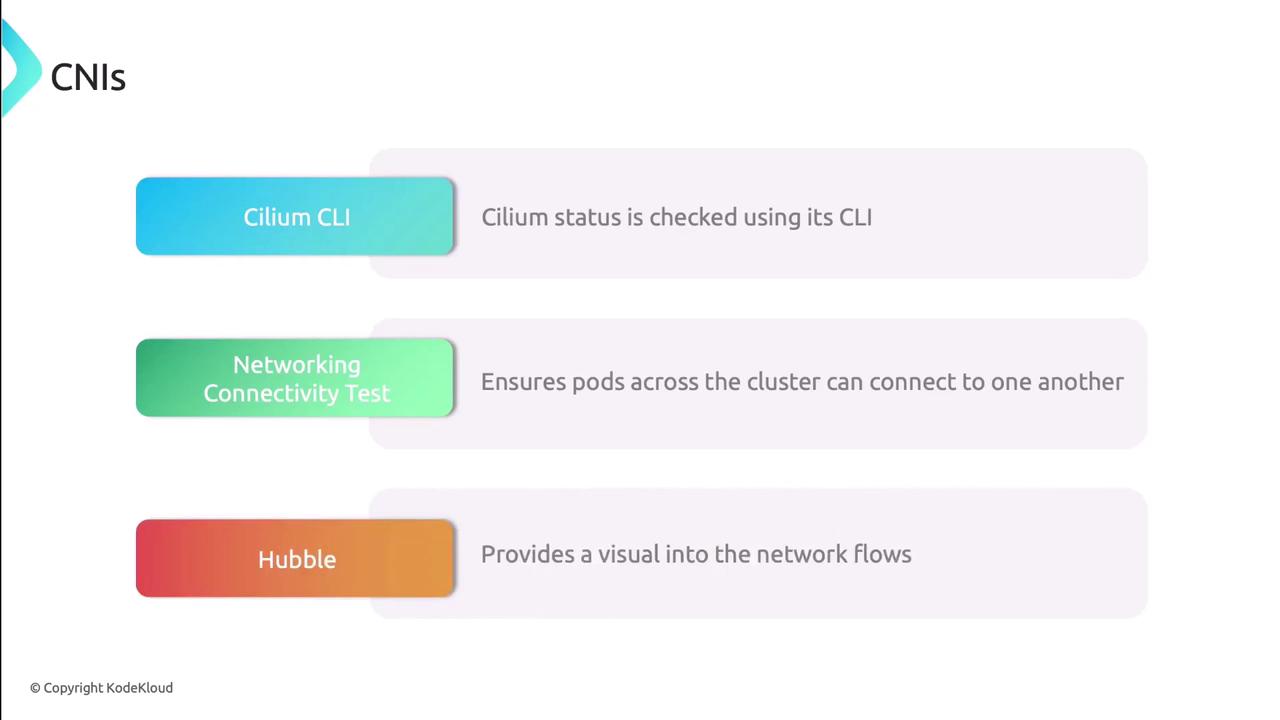
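Kubelet or runtime problems usually show up as a node whose `Ready` condition is not `True`. This minimal sketch assumes you pass it the parsed output of `kubectl get nodes -o json`. `not_ready_nodes` is a hypothetical helper name. The sketch also reports each node's `status.nodeInfo.containerRuntimeVersion`, which shows which runtime the kubelet is using.

```python
from typing import Any


def not_ready_nodes(node_list: dict[str, Any]) -> dict[str, str]:
    """Hypothetical helper: map each node whose Ready condition is not "True" to its runtime version."""
    result = {}
    for node in node_list.get("items", []):
        status = node.get("status", {})
        ready = next(
            (c.get("status") for c in status.get("conditions", []) if c.get("type") == "Ready"),
            "Unknown",  # treat a missing Ready condition as unknown
        )
        if ready != "True":
            runtime = status.get("nodeInfo", {}).get("containerRuntimeVersion", "unknown")
            result[node["metadata"]["name"]] = runtime
    return result
```

A `Ready` status of `Unknown` typically means the node controller has stopped hearing from that node's kubelet, so start there.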

Use CNI-specific tools

Many CNIs include CLIs and connectivity tests:

- Cilium CLI: `cilium status`, `cilium connectivity test`
- Hubble: visualize flows and policy enforcement

Note

Deploy automated connectivity tests to validate pod-to-pod networking before diving deeper.

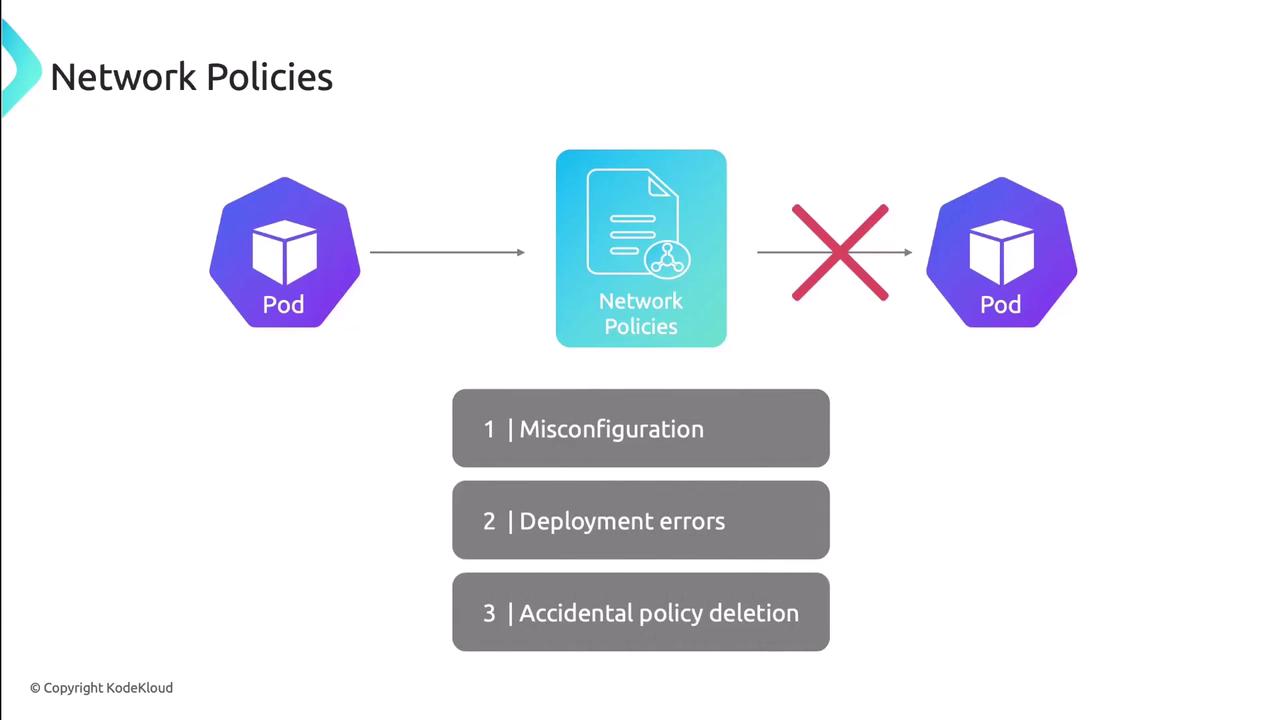

2. Troubleshooting Network Policies

Misconfigured NetworkPolicies, or a missing allow rule alongside a default-deny policy, can silently block traffic:

Locate policies

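Run `kubectl get networkpolicies --all-namespaces`. If no policies exist, pods accept all traffic by default, so NetworkPolicies are not the cause; move on to the other troubleshooting areas. CNI-specific policy resources, such as Cilium's `CiliumNetworkPolicy`, are not included in this list.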

Review selectors and intent

- Ensure `podSelector` and `namespaceSelector` match the intended workloads.
- An overly broad `podSelector` applies the policy to pods you did not intend to restrict. An overly narrow selector in a `from` or `to` rule can leave legitimate peers blocked.
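To check which pods a `podSelector` actually matches, evaluate the selector the way the LabelSelector API defines it. Every `matchLabels` pair must match, and every `matchExpressions` entry (`In`, `NotIn`, `Exists`, `DoesNotExist`) must hold. An empty selector `{}` matches every pod in the namespace. The sketch below is a hypothetical helper that applies these rules to plain label dicts, for example labels taken from `kubectl get pods -o json`. It is not the API server's implementation.

```python
from typing import Any


def selector_matches(selector: dict[str, Any], labels: dict[str, str]) -> bool:
    """Hypothetical helper: evaluate a LabelSelector (matchLabels + matchExpressions) against labels."""
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    for expr in selector.get("matchExpressions", []):
        key, op = expr["key"], expr["operator"]
        values = expr.get("values", [])
        if op == "In":
            ok = key in labels and labels[key] in values
        elif op == "NotIn":
            ok = key not in labels or labels[key] not in values  # absent key satisfies NotIn
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unknown operator: {op}")
        if not ok:
            return False
    return True  # an empty selector {} matches every pod


def selected_pods(selector: dict[str, Any], pods: dict[str, dict[str, str]]) -> list[str]:
    """Return the sorted names of pods (name -> labels) that the selector matches."""
    return sorted(name for name, labels in pods.items() if selector_matches(selector, labels))
```

Run your policy's `podSelector` against the labels of the pods you expect it to cover. The result quickly shows whether the selector is too broad or matches nothing.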

Verify ingress/egress rules

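If a policy declares a type in `policyTypes` but its `ingress` or `egress` list is empty, all traffic of that type to or from the selected pods is denied. Confirm that each rule explicitly allows the necessary ports and protocols.

Warning

A policy with an empty `podSelector` (`{}`) and no rules selects every pod in its namespace and denies all their ingress traffic. It also denies their egress if `Egress` is listed in `policyTypes`. Use this deny-all pattern deliberately, and pair it with explicit allow rules.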

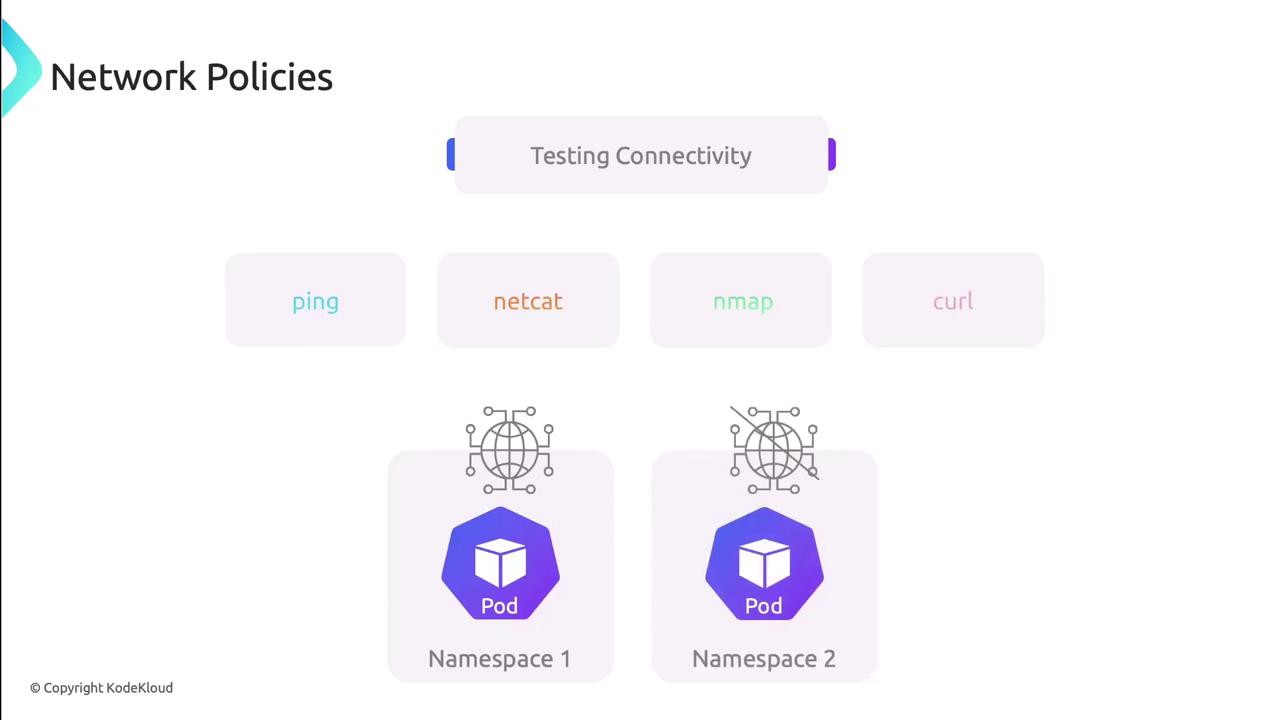
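Whether a policy denies a direction outright depends on which policy types are in effect. Per the NetworkPolicy API, when `policyTypes` is omitted, `Ingress` always applies and `Egress` applies only if the policy has egress rules. The minimal sketch below takes a policy `spec` parsed into a dict and reports which directions that policy denies because the corresponding rule list is empty. `effective_policy_types` and `denied_directions` are hypothetical helper names.

```python
from typing import Any


def effective_policy_types(spec: dict[str, Any]) -> set[str]:
    """Hypothetical helper: policy types a NetworkPolicy spec affects, applying the omitted-field defaults."""
    if "policyTypes" in spec:
        return set(spec["policyTypes"])
    types = {"Ingress"}  # Ingress is always implied
    if spec.get("egress"):  # Egress is implied only when egress rules exist
        types.add("Egress")
    return types


def denied_directions(spec: dict[str, Any]) -> set[str]:
    """Directions in which this policy alone allows no traffic for its selected pods."""
    types = effective_policy_types(spec)
    denied = set()
    for policy_type, rules_key in (("Ingress", "ingress"), ("Egress", "egress")):
        if policy_type in types and not spec.get(rules_key):
            denied.add(policy_type)
    return denied
```

NetworkPolicies are additive. Another policy that selects the same pods can still open a direction that this check reports as denied, so review all policies in the namespace together.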

Test connectivity

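Launch test pods in both allowed and denied namespaces and validate traffic flows:

- `ping <pod-IP>`
- `nc -zv <pod-IP> <port>`
- `curl http://<service>`

NetworkPolicy rules describe TCP, UDP, and SCTP ports, so how `ping` (ICMP) is treated varies by CNI. Prefer `nc` or `curl` to test the specific port a rule should allow.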

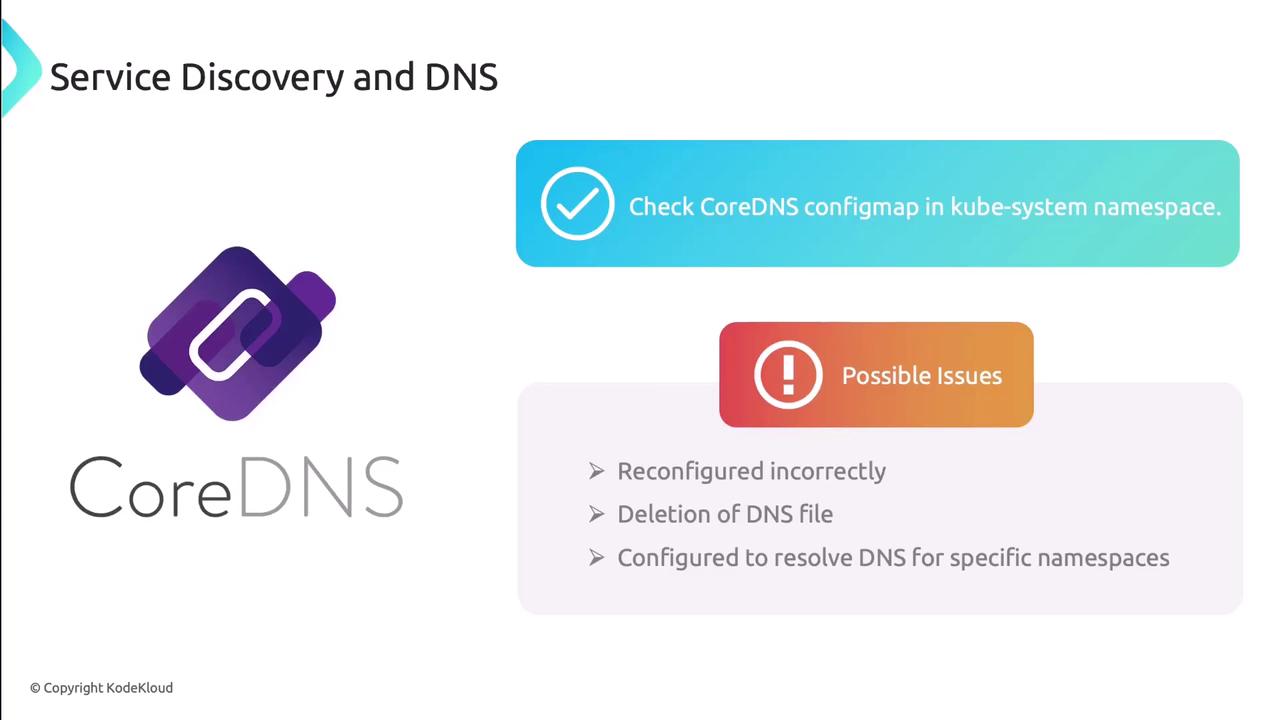
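Instead of running `nc` by hand, you can record what each policy should allow and compare that with what actually happens. This minimal Python sketch assumes it runs inside a test pod, so its traffic is subject to the policies under test. `tcp_open` and `check_expectations` are hypothetical helper names, and the targets you pass in are placeholders for your own pod IPs and ports. Like `nc -zv`, the sketch only tests whether a TCP connection can be opened.

```python
import socket


def tcp_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Hypothetical helper: True if a TCP connection to host:port succeeds, like `nc -zv host port`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or unreachable
        return False


def check_expectations(expected: dict[tuple[str, int], bool], timeout: float = 2.0) -> list[str]:
    """Compare expected reachability with observed results; return human-readable mismatches."""
    mismatches = []
    for (host, port), should_connect in expected.items():
        observed = tcp_open(host, port, timeout)
        if observed != should_connect:
            want = "allowed" if should_connect else "denied"
            got = "connected" if observed else "failed"
            mismatches.append(f"{host}:{port} expected {want}, but connection {got}")
    return mismatches
```

For example, `check_expectations({("10.0.1.5", 8080): True, ("10.0.2.7", 5432): False})` (placeholder IPs) returns an empty list when the policies behave as intended. With many CNIs, a connection blocked by policy times out because the packets are dropped. A quick refusal more often means nothing is listening on the port.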

3. Troubleshooting Service Discovery & DNS

CoreDNS manages internal name resolution. Follow these steps:

Check CoreDNS pods

Run `kubectl get pods -n kube-system -l k8s-app=kube-dns`. Ensure the pods are Running, then check their logs for errors with `kubectl logs -n kube-system -l k8s-app=kube-dns`.

Inspect ConfigMap

Run `kubectl get configmap coredns -n kube-system -o yaml` and look for syntax errors or missing zones.
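A rough sanity check can catch two obvious Corefile problems: unbalanced braces and a `kubernetes` plugin that does not serve the cluster domain. `corefile_problems` is a hypothetical helper and a heuristic, not a real Corefile parser. CoreDNS itself reports parse errors in its logs. The sketch assumes you pass it the text stored under the ConfigMap's `Corefile` data key, and that your cluster domain is `cluster.local`.

```python
def corefile_problems(corefile: str, cluster_domain: str = "cluster.local") -> list[str]:
    """Hypothetical helper: list obvious Corefile problems (rough heuristic, not a parser)."""
    problems = []
    depth = 0
    for lineno, raw in enumerate(corefile.splitlines(), start=1):
        line = raw.split("#", 1)[0]  # ignore comments
        for ch in line:
            if ch == "{":
                depth += 1
            elif ch == "}":
                depth -= 1
                if depth < 0:
                    problems.append(f"line {lineno}: unexpected '}}'")
                    depth = 0
    if depth > 0:
        problems.append(f"{depth} unclosed '{{' block(s)")
    # A kubernetes plugin line lists the zones it serves, e.g.
    # "kubernetes cluster.local in-addr.arpa ip6.arpa {"
    zones = [
        line.split()[1:]
        for line in corefile.splitlines()
        if line.strip().startswith("kubernetes ")
    ]
    if not any(cluster_domain in z for z in zones):
        problems.append(f"no kubernetes plugin serving zone {cluster_domain!r}")
    return problems
```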

Validate pod DNS settings

Inside a test pod, check that the `nameserver` entry in `/etc/resolv.conf` matches your cluster DNS Service IP (shown by `kubectl get svc kube-dns -n kube-system`).

Test DNS resolution

From the test pod, run:

- `nslookup kubernetes.default`
- `dig @<coredns-ip> my-service.my-namespace.svc.cluster.local`
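Both DNS checks can also be run from Python inside a test pod. This minimal sketch uses three hypothetical helpers:

- `parse_resolv_conf` reads the `nameserver` and `search` lines.
- `service_fqdn` builds the `<service>.<namespace>.svc.<domain>` name queried with `dig`.
- `resolve` calls the standard `socket.getaddrinfo`, so it uses the pod's configured resolver rather than a specific CoreDNS IP as `dig @<coredns-ip>` does.

The cluster domain `cluster.local` and the DNS IP `10.96.0.10` in the test data are assumptions.

```python
import socket


def parse_resolv_conf(text: str) -> dict[str, list[str]]:
    """Hypothetical helper: collect nameserver and search entries from /etc/resolv.conf content."""
    result: dict[str, list[str]] = {"nameserver": [], "search": []}
    for line in text.splitlines():
        fields = line.split()
        if not fields or fields[0].startswith(("#", ";")):
            continue  # skip blank lines and comments
        if fields[0] == "nameserver" and len(fields) > 1:
            result["nameserver"].append(fields[1])
        elif fields[0] == "search":
            result["search"] = fields[1:]  # the last search line wins
    return result


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve(name: str) -> list[str]:
    """Resolve a name through the pod's configured resolver; raises socket.gaierror on failure."""
    infos = socket.getaddrinfo(name, None, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})
```

Inside a pod, compare `parse_resolv_conf(open("/etc/resolv.conf").read())["nameserver"]` with your DNS Service IP. Then call `resolve(service_fqdn("my-service", "my-namespace"))`. If this raises `socket.gaierror` while `resolve("kubernetes.default.svc.cluster.local")` succeeds, suspect a wrong service name or namespace rather than a DNS outage.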

4. Troubleshooting Services, Endpoints & Pods

Connectivity issues here often stem from selector or port mismatches:

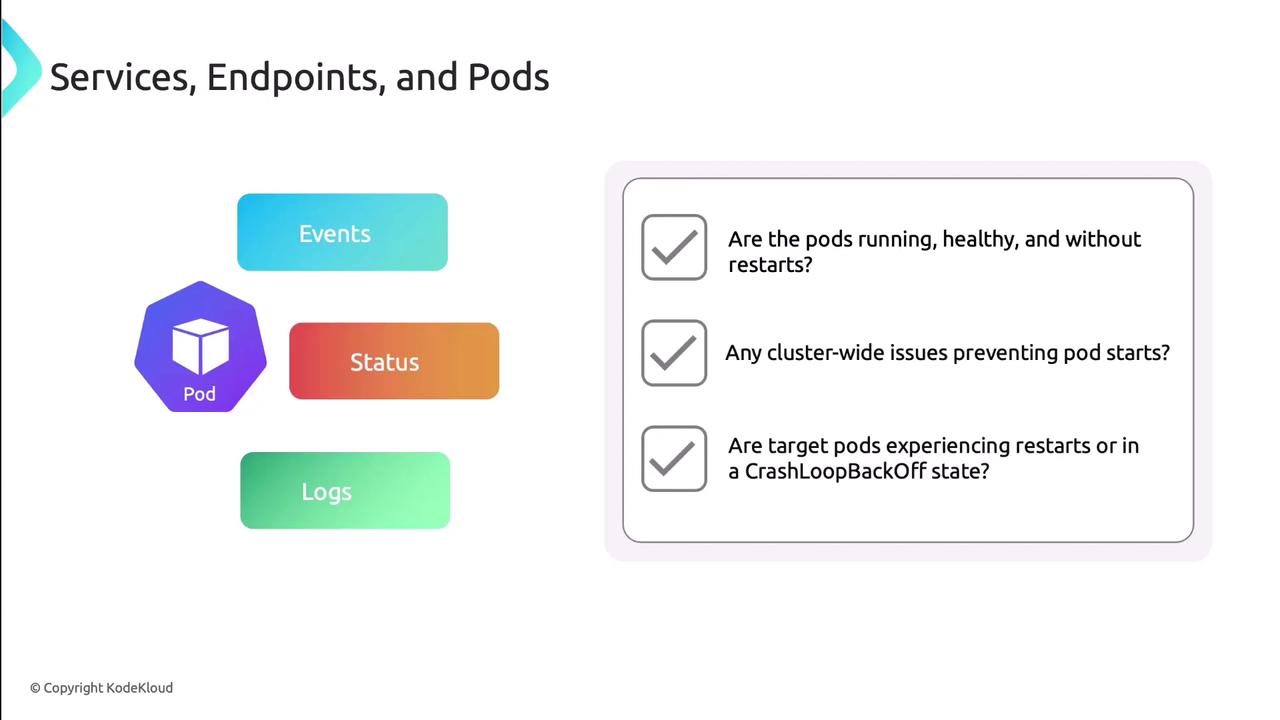

Check pod health

- Pods should be Running without restarts.

- Look for `CrashLoopBackOff` in `kubectl describe pod`.
- Review logs for errors or resource exhaustion.

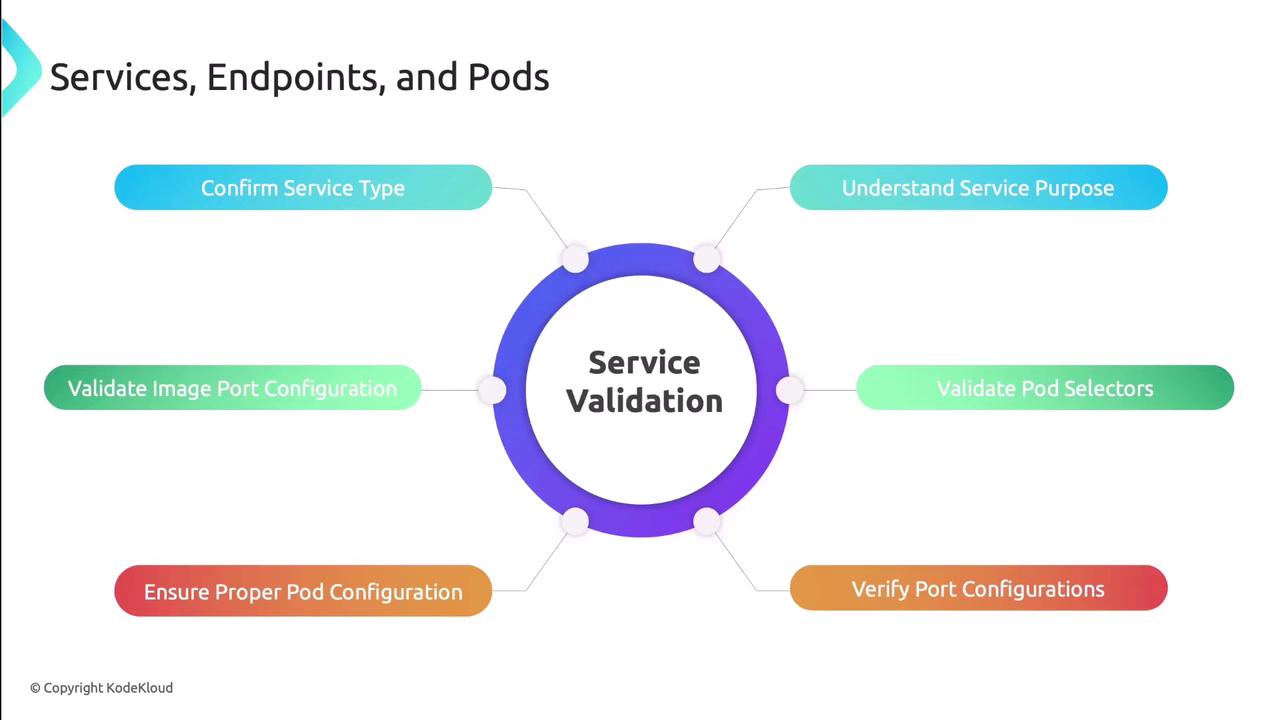

Validate services

- Confirm service type suits your use case (ClusterIP, NodePort, LoadBalancer).

- Check that `spec.selector` labels match the pod labels.
- Verify that each Service port's `targetPort` maps to a port the container exposes.

- Ensure the application listens on the advertised port.

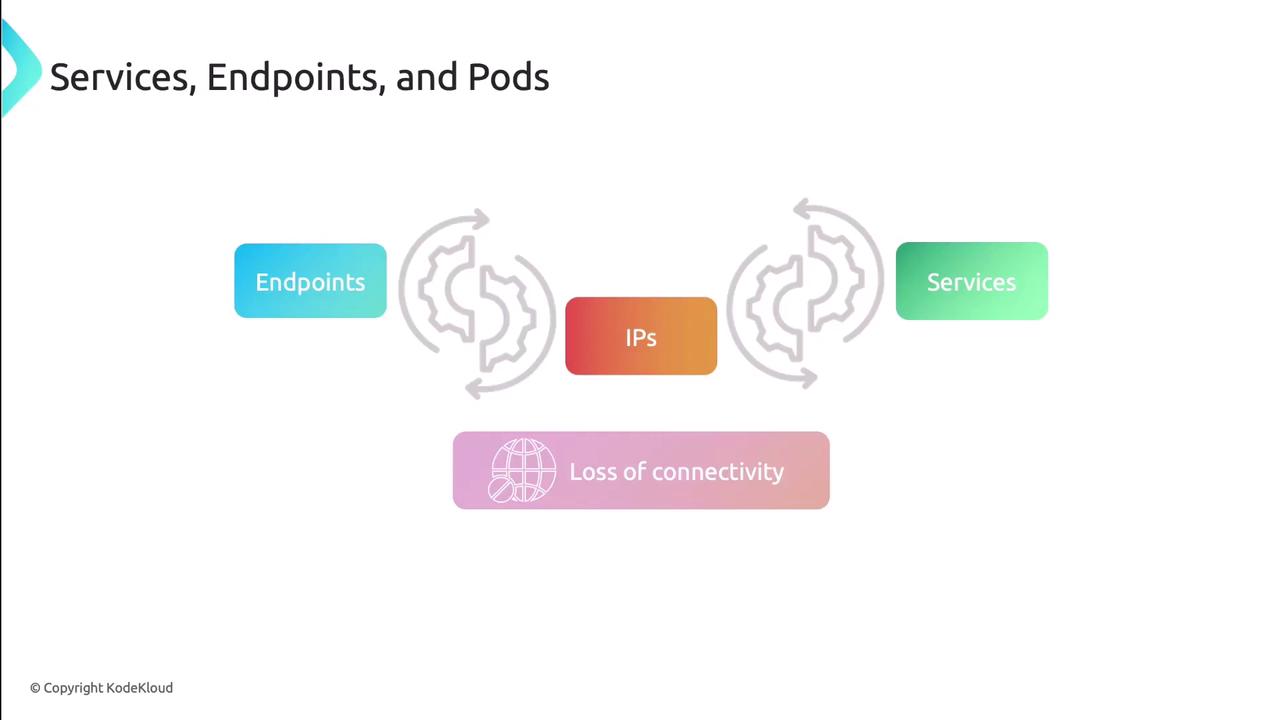
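The selector and port checks can be combined. This minimal sketch assumes you pass in a Service manifest and a pod manifest parsed from `kubectl get svc <name> -o json` and `kubectl get pod <name> -o json`. `service_mismatches` is a hypothetical helper. It reflects two API rules:

- A `targetPort` may be a number or the name of a container port.
- When `targetPort` is omitted, it defaults to the value of `port`.

Declaring `containerPort` is informational, so a numeric mismatch is only a hint to check what the application actually listens on. A named `targetPort` that no container declares, however, cannot be resolved.

```python
from typing import Any


def service_mismatches(service: dict[str, Any], pod: dict[str, Any]) -> list[str]:
    """Hypothetical helper: list likely reasons a Service would not route to this pod."""
    problems = []
    selector = service["spec"].get("selector", {})
    labels = pod["metadata"].get("labels", {})
    missing = {k: v for k, v in selector.items() if labels.get(k) != v}
    if missing:
        problems.append(f"selector labels not on pod: {missing}")

    # Gather declared container ports by number and by name.
    numbers, names = set(), {}
    for container in pod["spec"].get("containers", []):
        for p in container.get("ports", []):
            numbers.add(p["containerPort"])
            if "name" in p:
                names[p["name"]] = p["containerPort"]

    for sp in service["spec"].get("ports", []):
        target = sp.get("targetPort", sp["port"])  # targetPort defaults to port
        if isinstance(target, str):
            if target not in names:
                problems.append(f"targetPort name {target!r} not declared by any container")
        elif target not in numbers:
            problems.append(f"targetPort {target} not declared as a containerPort")
    return problems
```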

Compare Services and Endpoints

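Each Service with a selector should have a corresponding Endpoints object that lists its backend pod IPs. Run `kubectl get endpoints <service-name>` and verify that the IPs match the target pods (compare with `kubectl get pods -o wide`). An empty list usually means the selector matches no ready pods, which fails silently.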
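That comparison can be automated from JSON. This minimal sketch assumes the Endpoints object and the pod list come from `kubectl get endpoints <service-name> -o json` and `kubectl get pods -l <selector> -o json`. `compare_endpoints` is a hypothetical helper name. Ready pod IPs appear under `subsets[].addresses`, while pods failing their readiness checks are listed under `subsets[].notReadyAddresses`.

```python
from typing import Any


def compare_endpoints(endpoints: dict[str, Any], pods: dict[str, Any]) -> dict[str, set[str]]:
    """Hypothetical helper: compare Endpoints IPs with the IPs of the pods expected behind a Service."""
    ready, not_ready = set(), set()
    for subset in endpoints.get("subsets") or []:  # subsets is absent when nothing matches
        ready.update(a["ip"] for a in subset.get("addresses", []))
        not_ready.update(a["ip"] for a in subset.get("notReadyAddresses", []))
    pod_ips = {
        p["status"]["podIP"]
        for p in pods.get("items", [])
        if p.get("status", {}).get("podIP")  # skip pods not yet assigned an IP
    }
    return {
        "missing": pod_ips - ready - not_ready,  # expected pods absent from the Endpoints
        "not_ready": not_ready & pod_ips,  # listed, but failing readiness
        "unexpected": (ready | not_ready) - pod_ips,  # backends you did not expect
    }
```

IPs under `missing` usually indicate a selector mismatch, while IPs under `not_ready` point at failing readiness probes.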

Port-forward as needed

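Run `kubectl port-forward svc/<service> 8080:<port>` to reach the application from your machine without going through external load balancers. Port-forwarding to a Service connects to a single backing pod and bypasses kube-proxy. If it works while in-cluster access fails, focus on the Service, its Endpoints, or kube-proxy.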

Next, apply these techniques on a live cluster to reinforce your troubleshooting skills.
